#!/usr/bin/env python

# coding: utf-8

#

# # Introduction

#

# Welcome to the Neuromatch computational neuroscience course!

#

# ## Orientation Video

# In[ ]:

# @markdown

from ipywidgets import widgets

from IPython.display import YouTubeVideo

from IPython.display import IFrame

from IPython.display import display

class PlayVideo(IFrame):
    """IFrame wrapper for videos hosted on Bilibili or OSF."""

    def __init__(self, id, source, page=1, width=400, height=300, **kwargs):
        self.id = id
        if source == 'Bilibili':
            src = f'https://player.bilibili.com/player.html?bvid={id}&page={page}'
        elif source == 'Osf':
            src = f'https://mfr.ca-1.osf.io/render?url=https://osf.io/download/{id}/?direct%26mode=render'
        else:
            # Without this branch, `src` would be undefined in the call below.
            raise ValueError(f'Unsupported video source: {source!r}')
        super().__init__(src, width, height, **kwargs)


def display_videos(video_ids, W=400, H=300, fs=1):
    """Return one Output widget per (source, id) pair, each showing its video."""
    tab_contents = []
    for source, video_id in video_ids:
        out = widgets.Output()
        with out:
            if source == 'Youtube':
                video = YouTubeVideo(id=video_id, width=W,
                                     height=H, fs=fs, rel=0)
                print(f'Video available at https://youtube.com/watch?v={video.id}')
            else:
                video = PlayVideo(id=video_id, source=source, width=W,
                                  height=H, fs=fs, autoplay=False)
                if source == 'Bilibili':
                    print(f'Video available at https://www.bilibili.com/video/{video.id}')
                elif source == 'Osf':
                    print(f'Video available at https://osf.io/{video.id}')
            display(video)
        tab_contents.append(out)
    return tab_contents


video_ids = [('Youtube', 'FwjyhCLeqx0'), ('Bilibili', 'BV1QE421P7EJ')]
tab_contents = display_videos(video_ids, W=854, H=480)

# Show each video in its own tab, titled by its source.
tabs = widgets.Tab()
tabs.children = tab_contents
for i in range(len(tab_contents)):
    tabs.set_title(i, video_ids[i][0])
display(tabs)

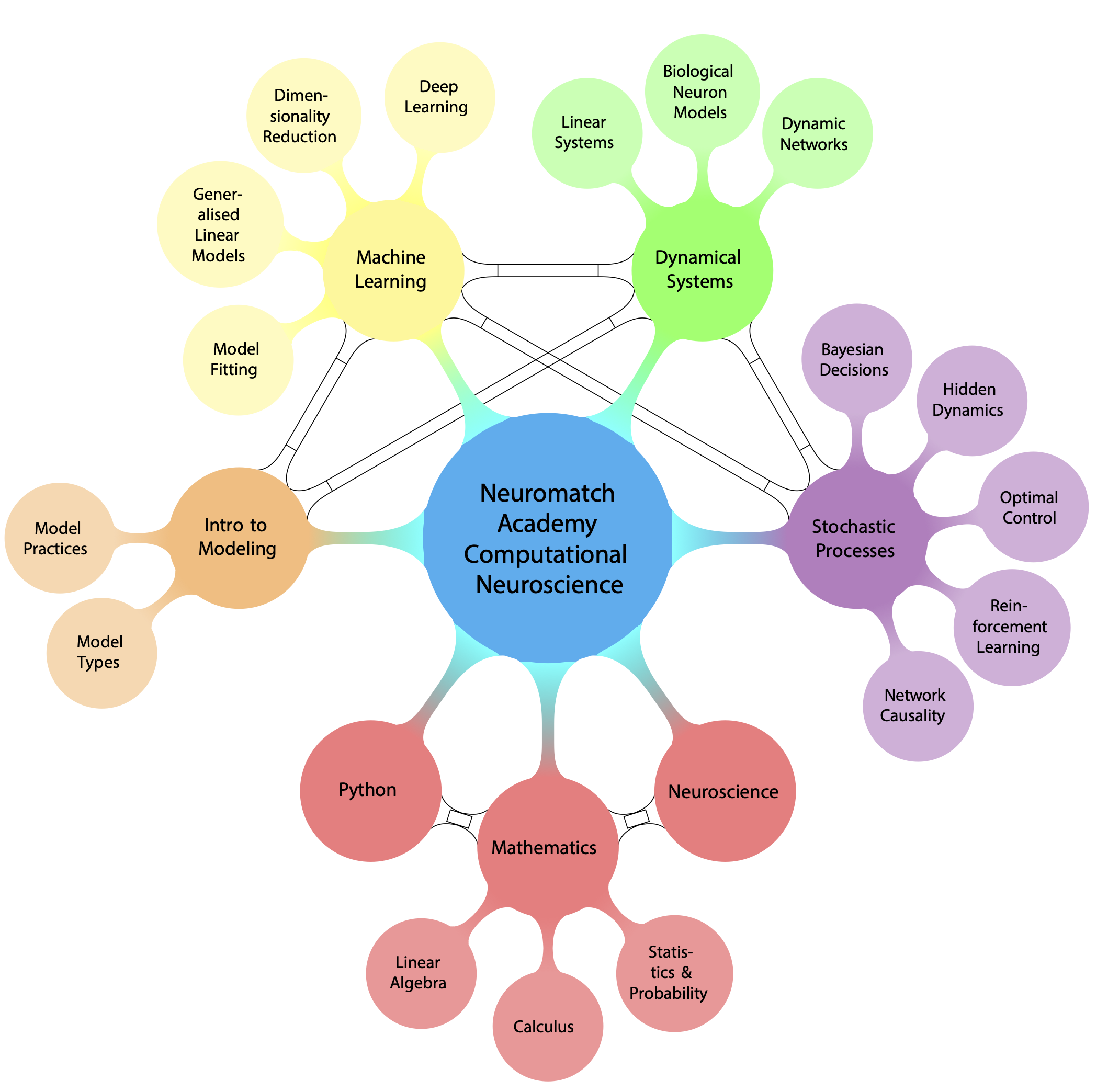

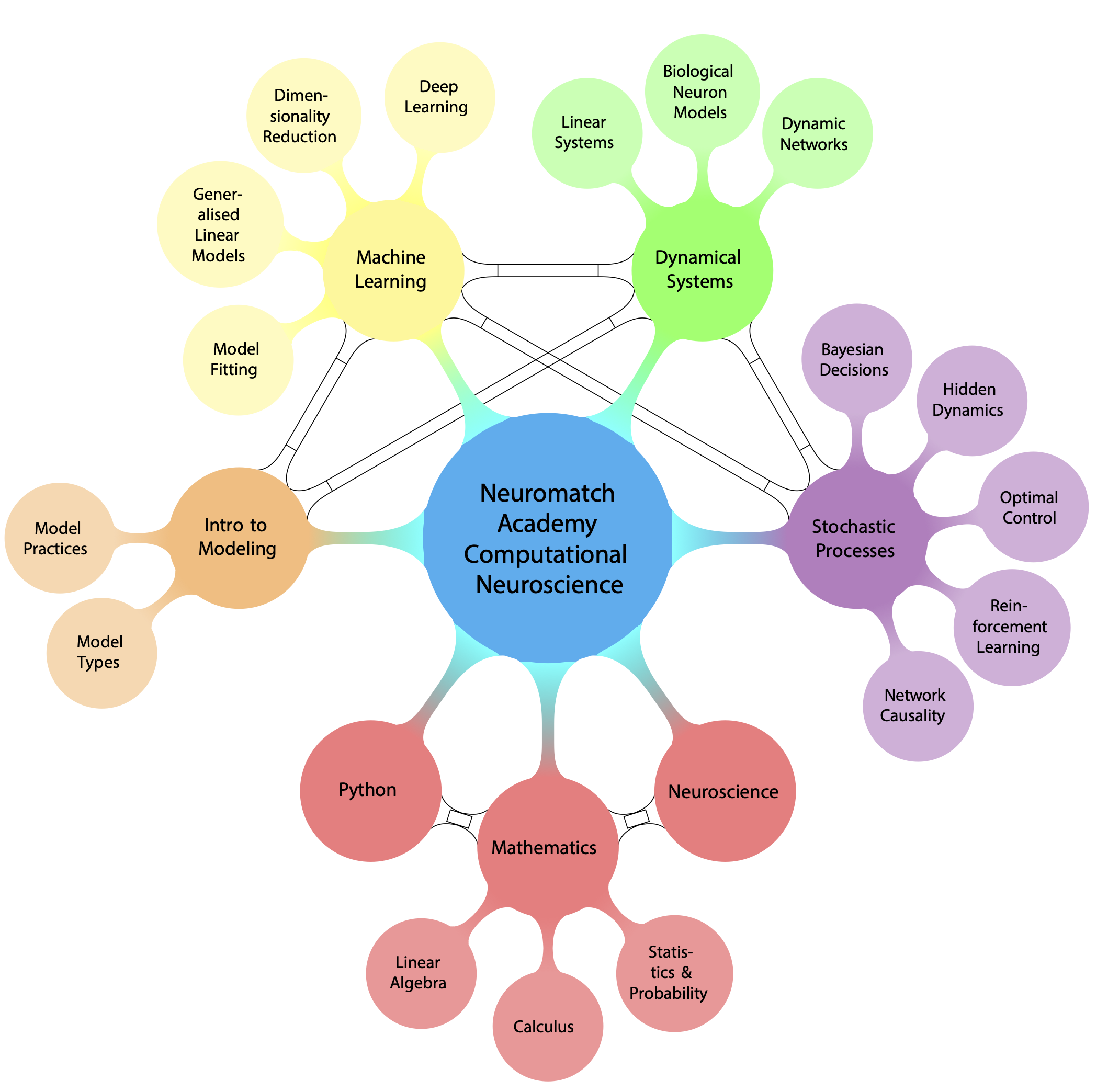

# ## Concepts map

#

# *Image made by John Butler, with expert color advice from Isabelle Butler*

# ## Curriculum overview

#

# Welcome to the comp neuro course!

#

# We have curated a curriculum that spans most areas of computational neuroscience (a hard task in a rapidly growing field!).

# We will expose you to both theoretical modeling and more data-driven analyses. This section gives an overview of the curriculum.

#

# We will start with several optional prerequisite refreshers.

#

# The **Neuro Video Series** is a series of 12 videos covering basic neuroscience concepts and neuroscience methods. These videos

# are completely optional and do not need to be watched in a fixed order, so you can pick and choose the videos that will help you

# brush up on your knowledge.

#

# The prerequisite refresher days are asynchronous, so you can go through the material on your own time.

# You will learn how to code in Python from scratch, using a simple neural model, the leaky integrate-and-fire model, as motivation.

# Then, you will cover linear algebra, calculus, and probability and statistics.

# The topics covered on these days were carefully chosen based on what you need for the comp neuro course.
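#
# As a taste of the leaky integrate-and-fire model mentioned above, here is a minimal sketch.
# It integrates tau * dV/dt = -(V - v_rest) + R * I with the forward Euler method and resets the
# voltage whenever it crosses a threshold. The function name `simulate_lif` and all parameter
# values (mV, ms, megaohm, nA) are illustrative assumptions, not taken from the course materials.

```python
def simulate_lif(current, dt=0.1, t_max=100.0, tau=10.0, v_rest=-65.0,
                 v_reset=-65.0, v_thresh=-50.0, resistance=10.0):
    """Simulate a leaky integrate-and-fire neuron driven by a constant current.

    Returns the membrane voltage at every time step and the list of spike times.
    All default values are hypothetical and chosen only for illustration.
    """
    n_steps = int(round(t_max / dt))
    v = v_rest
    voltages, spike_times = [], []
    for step in range(n_steps):
        # Forward Euler step of tau * dV/dt = -(V - v_rest) + R * I
        dv = (-(v - v_rest) + resistance * current) / tau
        v += dt * dv
        if v >= v_thresh:
            # Threshold crossed: record a spike and reset the voltage
            spike_times.append((step + 1) * dt)
            v = v_reset
        voltages.append(v)
    return voltages, spike_times


voltages, spike_times = simulate_lif(current=2.0)
print(f'{len(spike_times)} spikes in 100 ms')
```

# With these assumed values, a current of 1.0 settles below threshold and never spikes,
# while a current of 2.0 drives the neuron above threshold repeatedly.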

#

# After this, the course proper begins! You will start with the module on **Intro to Modeling**. On the first day,

# you’ll learn all about the broad types of questions we can ask with models in neuroscience (*Model Types*). You will learn

# that we can use models to ask what happens, how it happens, and why it happens in the brain. Importantly, we classify models

# into ‘what’, ‘how’, and ‘why’ models, not based on the toolkit used, but on the questions asked!

#

# After this solid grounding in what questions you can ask with models and the process to start doing so, you will move to the module on **Machine Learning**.

# This module covers fitting models to data and using them to ask and answer questions in neuroscience. We can pose all sorts of questions

# (including what, how, and why questions) using machine learning, but we focus especially on more data-driven analyses, which often

# ask what is happening in the brain. You will learn about key principles behind fitting models to data (*Model Fitting*),

# how to use generalized linear models to fit encoding and decoding models (*Generalized Linear Models*), how to uncover underlying

# lower-dimensional structure in data (*Dimensionality Reduction*), and how to use deep learning to build more complex encoding models,

# including comparing deep networks to the visual system (*Deep Learning*). You’ll then dive into projects and

# learn about the process of modeling using a step-by-step guide to modeling that you will apply to your own projects (*Modeling Practice*).
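#
# To make "fitting a model to data" concrete, here is a minimal sketch of ordinary least squares
# for a straight line. The function name `fit_line` and the example numbers (a made-up stimulus
# intensity and firing rate) are hypothetical and serve only as an illustration.

```python
def fit_line(xs, ys):
    """Return the least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form solution: slope = cov(x, y) / var(x)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: stimulus intensity (x) and firing rate (y)
stimulus = [0.0, 1.0, 2.0, 3.0]
firing_rate = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(stimulus, firing_rate)
print(f'slope={slope:.2f}, intercept={intercept:.2f}')
```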

#

# Next, you’ll move to the module on **Dynamical Systems**. In this module, you will learn all about dynamical systems and

# how to apply them to build more biologically plausible models of neurons and networks of neurons. In *Linear Systems*,

# you will cover much of the foundational knowledge of dynamical systems that you will use throughout the rest of the course,

# including a brief dive into stochastic systems, which underlie the next module. During *Biological Neuron Models*,

# you will start to use this knowledge to build models of individual neurons that are more rooted in biology. In *Dynamic Networks*,

# you will build on the previous day to start building and analyzing networks of neurons. We will often ask ‘how’ questions

# using these models: how are things in the brain happening mechanistically? These models are often not directly fit to data

# (in contrast to the machine learning models), but instead are built based on bottom-up knowledge of the system.

#

# Next, you will move to the module on **Stochastic Processes**. We start with a day learning about Bayesian inference, within the context

# of making decisions (*Bayesian Decisions*). Specifically, you will learn how to estimate a state of the world from measurements.

# On the next day, we extend this to include time: the state of the world is now changing over time (*Hidden Dynamics*). Next, we look

# at how we can take actions to affect the state of the world (*Optimal Control* and *Reinforcement Learning*). Once again, these models

# can be used as ‘what’, ‘how’, or ‘why’ models, but we focus on asking ‘why’ questions (why should the brain compute this?).
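#
# Estimating a state of the world from measurements can be sketched with the simplest Bayesian
# case: a Gaussian prior combined with one noisy Gaussian measurement. The function name
# `gaussian_posterior` and the numbers are hypothetical; this is an illustration under those
# assumptions, not the course's own implementation.

```python
def gaussian_posterior(prior_mean, prior_var, measurement, measurement_var):
    """Combine a Gaussian prior with one Gaussian measurement of the same quantity.

    Precisions (inverse variances) add, and the posterior mean is the
    precision-weighted average of the prior mean and the measurement.
    """
    posterior_var = 1.0 / (1.0 / prior_var + 1.0 / measurement_var)
    posterior_mean = posterior_var * (prior_mean / prior_var
                                      + measurement / measurement_var)
    return posterior_mean, posterior_var


# Hypothetical example: prior belief centered at 0, measurement at 2, equal uncertainty
mean, var = gaussian_posterior(prior_mean=0.0, prior_var=1.0,
                               measurement=2.0, measurement_var=1.0)
print(f'posterior mean={mean}, posterior variance={var}')
```

# When prior and measurement are equally uncertain, the estimate lands halfway between them
# and is more certain than either one alone.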

#

# Finally, we end by learning about causality (*Network Causality*). This covers one of the most important questions in science:

# when can we determine if something is causally related vs. just correlated?
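#
# The gap between correlation and causation can be illustrated with a small simulation.
# In this sketch, a hidden variable z drives both x and y, so x and y are strongly correlated
# even though x never influences y. Assigning x at random (an intervention) removes the
# correlation. The names `pearson` and `simulate_confounding` and all noise levels are
# hypothetical choices made for this illustration.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_confounding(n=5000, seed=0):
    """Return (observed correlation, correlation after randomly assigning x)."""
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]        # hidden common cause
    x = [zi + 0.5 * rng.gauss(0, 1) for zi in z]   # driven by z only
    y = [zi + 0.5 * rng.gauss(0, 1) for zi in z]   # driven by z only, never by x
    x_set = [rng.gauss(0, 1) for _ in range(n)]    # x assigned at random, ignoring z
    return pearson(x, y), pearson(x_set, y)


r_observed, r_intervened = simulate_confounding()
print(f'observed r={r_observed:.2f}, after intervention r={r_intervened:.2f}')
```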

#
