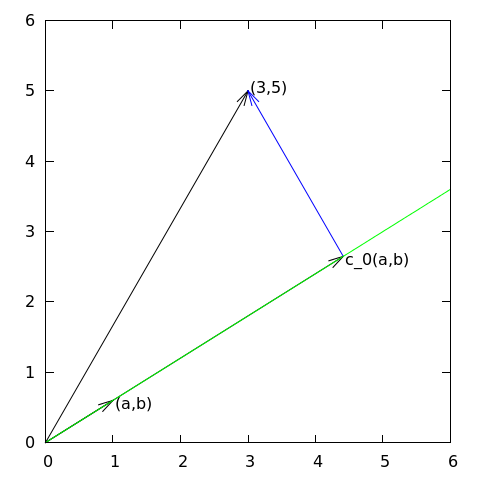

Approximation of a two-dimensional vector in a one-dimensional vector space.

# #

#

#

#

# We introduce the vector space $V$

# spanned by the vector $\boldsymbol{\psi}_0=(a,b)$:

#

#

#

# $$

# \begin{equation}

# V = \mbox{span}\,\{ \boldsymbol{\psi}_0\}{\thinspace .} \label{_auto1} \tag{2}

# \end{equation}

# $$

# We say that $\boldsymbol{\psi}_0$ is a *basis vector* in the space $V$.

# Our aim is to find the vector

#

#

#

# $$

# \begin{equation}

# \label{uc0} \tag{3}

# \boldsymbol{u} = c_0\boldsymbol{\psi}_0\in V

# \end{equation}

# $$

# which best approximates

# the given vector $\boldsymbol{f} = (3,5)$. A reasonable criterion for a best

# approximation could be to minimize the length of the difference between

# the approximate $\boldsymbol{u}$ and the given $\boldsymbol{f}$. The difference, or error

# $\boldsymbol{e} = \boldsymbol{f} -\boldsymbol{u}$, has its length given by the *norm*

# $$

# ||\boldsymbol{e}|| = (\boldsymbol{e},\boldsymbol{e})^{\frac{1}{2}},

# $$

# where $(\boldsymbol{e},\boldsymbol{e})$ is the *inner product* of $\boldsymbol{e}$ and itself. The inner

# product, also called *scalar product* or *dot product*, of two vectors

# $\boldsymbol{u}=(u_0,u_1)$ and $\boldsymbol{v} =(v_0,v_1)$ is defined as

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{u}, \boldsymbol{v}) = u_0v_0 + u_1v_1{\thinspace .} \label{_auto2} \tag{4}

# \end{equation}

# $$

# **Remark.** We should point out that we use the notation

# $(\cdot,\cdot)$ for two different things: $(a,b)$ for scalar

# quantities $a$ and $b$ means the vector starting at the origin and

# ending in the point $(a,b)$, while $(\boldsymbol{u},\boldsymbol{v})$ with vectors $\boldsymbol{u}$ and

# $\boldsymbol{v}$ means the inner product of these vectors. Since vectors are here

# written in boldface font there should be no confusion. We may add

# that the norm associated with this inner product is the usual

# Euclidean length of a vector, i.e.,

# $$

# \|\boldsymbol{u}\| = \sqrt{(\boldsymbol{u},\boldsymbol{u})} = \sqrt{u_0^2 + u_1^2}

# $$

# ### The least squares method

#

# We now want to determine the $\boldsymbol{u}$ that minimizes $||\boldsymbol{e}||$, that is

# we want to compute the optimal $c_0$ in ([3](#uc0)). The algebra

# is simplified if we minimize the square of the norm, $||\boldsymbol{e}||^2 = (\boldsymbol{e}, \boldsymbol{e})$,

# instead of the norm itself.

# Define the function

#

#

#

# $$

# \begin{equation}

# E(c_0) = (\boldsymbol{e},\boldsymbol{e}) = (\boldsymbol{f} - c_0\boldsymbol{\psi}_0, \boldsymbol{f} - c_0\boldsymbol{\psi}_0)

# {\thinspace .}

# \label{_auto3} \tag{5}

# \end{equation}

# $$

# We can rewrite the expressions of the right-hand side in a more

# convenient form for further use:

#

#

#

# $$

# \begin{equation}

# E(c_0) = (\boldsymbol{f},\boldsymbol{f}) - 2c_0(\boldsymbol{f},\boldsymbol{\psi}_0) + c_0^2(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0){\thinspace .}

# \label{fem:vec:E} \tag{6}

# \end{equation}

# $$

# This rewrite results from using the following fundamental rules for inner

# product spaces:

#

#

#

# $$

# \begin{equation}

# (\alpha\boldsymbol{u},\boldsymbol{v})=\alpha(\boldsymbol{u},\boldsymbol{v}),\quad \alpha\in\mathbb{R},

# \label{fem:vec:rule:scalarmult} \tag{7}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{u} +\boldsymbol{v},\boldsymbol{w}) = (\boldsymbol{u},\boldsymbol{w}) + (\boldsymbol{v}, \boldsymbol{w}),

# \label{fem:vec:rule:sum} \tag{8}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# \label{fem:vec:rule:symmetry} \tag{9}

# (\boldsymbol{u}, \boldsymbol{v}) = (\boldsymbol{v}, \boldsymbol{u}){\thinspace .}

# \end{equation}

# $$

# Minimizing $E(c_0)$ implies finding $c_0$ such that

# $$

# \frac{\partial E}{\partial c_0} = 0{\thinspace .}

# $$

# It turns out that $E$ has one unique minimum and no maximum point.

# Now, when differentiating ([6](#fem:vec:E)) with respect to $c_0$, note

# that none of the inner product expressions depend on $c_0$, so we simply get

#

#

#

# $$

# \begin{equation}

# \frac{\partial E}{\partial c_0} = -2(\boldsymbol{f},\boldsymbol{\psi}_0) + 2c_0 (\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)

# {\thinspace .}

# \label{fem:vec:dEdc0:v1} \tag{10}

# \end{equation}

# $$

# Setting the above expression equal to zero and solving for $c_0$ gives

#

#

#

# $$

# \begin{equation}

# c_0 = \frac{(\boldsymbol{f},\boldsymbol{\psi}_0)}{(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)},

# \label{fem:vec:c0} \tag{11}

# \end{equation}

# $$

# which in the present case, with $\boldsymbol{\psi}_0=(a,b)$, results in

#

#

#

# $$

# \begin{equation}

# c_0 = \frac{3a + 5b}{a^2 + b^2}{\thinspace .} \label{_auto4} \tag{12}

# \end{equation}

# $$

# For later, it is worth mentioning that setting

# the key equation ([10](#fem:vec:dEdc0:v1)) to zero and ordering

# the terms lead to

# $$

# (\boldsymbol{f}-c_0\boldsymbol{\psi}_0,\boldsymbol{\psi}_0) = 0,

# $$

# or

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e}, \boldsymbol{\psi}_0) = 0

# {\thinspace .}

# \label{fem:vec:dEdc0:Galerkin} \tag{13}

# \end{equation}

# $$

# This implication of minimizing $E$ is an important result that we shall

# make much use of.

#

#

#

#

# ### The projection method

#

# We shall now show that minimizing $||\boldsymbol{e}||^2$ implies that $\boldsymbol{e}$ is

# orthogonal to *any* vector $\boldsymbol{v}$ in the space $V$. This result is

# visually quite clear from [Figure](#fem:approx:vec:plane:fig) (think of

# other vectors along the line $(a,b)$: all of them will lead to

# a larger distance between the approximation and $\boldsymbol{f}$).

# Then we see mathematically that $\boldsymbol{e}$ is orthogonal to any vector $\boldsymbol{v}$

# in the space $V$ and we may

# express any $\boldsymbol{v}\in V$ as $\boldsymbol{v}=s\boldsymbol{\psi}_0$ for any scalar parameter $s$

# (recall that two vectors are orthogonal when their inner product vanishes).

# Then we calculate the inner product

# $$

# \begin{align*}

# (\boldsymbol{e}, s\boldsymbol{\psi}_0) &= (\boldsymbol{f} - c_0\boldsymbol{\psi}_0, s\boldsymbol{\psi}_0)\\

# &= (\boldsymbol{f},s\boldsymbol{\psi}_0) - (c_0\boldsymbol{\psi}_0, s\boldsymbol{\psi}_0)\\

# &= s(\boldsymbol{f},\boldsymbol{\psi}_0) - sc_0(\boldsymbol{\psi}_0, \boldsymbol{\psi}_0)\\

# &= s(\boldsymbol{f},\boldsymbol{\psi}_0) - s\frac{(\boldsymbol{f},\boldsymbol{\psi}_0)}{(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)}(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)\\

# &= s\left( (\boldsymbol{f},\boldsymbol{\psi}_0) - (\boldsymbol{f},\boldsymbol{\psi}_0)\right)\\

# &=0{\thinspace .}

# \end{align*}

# $$

# Therefore, instead of minimizing the square of the norm, we could

# demand that $\boldsymbol{e}$ is orthogonal to any vector in $V$, which in our present

# simple case amounts to a single vector only.

# This method is known as *projection*.

# (The approach can also be referred to as a Galerkin method as

# explained at the end of the section [Approximation of general vectors](#fem:approx:vec:Np1dim).)

#

# Mathematically, the projection method is stated

# by the equation

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e}, \boldsymbol{v}) = 0,\quad\forall\boldsymbol{v}\in V{\thinspace .}

# \label{fem:vec:Galerkin1} \tag{14}

# \end{equation}

# $$

# An arbitrary $\boldsymbol{v}\in V$ can be expressed as

# $s\boldsymbol{\psi}_0$, $s\in\mathbb{R}$, and therefore

# ([14](#fem:vec:Galerkin1)) implies

# $$

# (\boldsymbol{e},s\boldsymbol{\psi}_0) = s(\boldsymbol{e}, \boldsymbol{\psi}_0) = 0,

# $$

# which means that the error must be orthogonal to the basis vector in

# the space $V$:

# $$

# (\boldsymbol{e}, \boldsymbol{\psi}_0)=0\quad\hbox{or}\quad

# (\boldsymbol{f} - c_0\boldsymbol{\psi}_0, \boldsymbol{\psi}_0)=0,

# $$

# which is what we found in ([13](#fem:vec:dEdc0:Galerkin)) from

# the least squares computations.

#

#

#

#

# ## Approximation of general vectors

#

#

#

# Let us generalize the vector approximation from the previous section

# to vectors in spaces with arbitrary dimension. Given some vector $\boldsymbol{f}$,

# we want to find the best approximation to this vector in

# the space

# $$

# V = \hbox{span}\,\{\boldsymbol{\psi}_0,\ldots,\boldsymbol{\psi}_N\}

# {\thinspace .}

# $$

# We assume that the space has dimension $N+1$ and

# that *basis vectors* $\boldsymbol{\psi}_0,\ldots,\boldsymbol{\psi}_N$ are

# linearly independent so that none of them are redundant.

# Any vector $\boldsymbol{u}\in V$ can then be written as a linear combination

# of the basis vectors, i.e.,

# $$

# \boldsymbol{u} = \sum_{j=0}^N c_j\boldsymbol{\psi}_j,

# $$

# where $c_j\in\mathbb{R}$ are scalar coefficients to be determined.

#

# ### The least squares method

#

# Now we want to find $c_0,\ldots,c_N$, such that $\boldsymbol{u}$ is the best

# approximation to $\boldsymbol{f}$ in the sense that the distance (error)

# $\boldsymbol{e} = \boldsymbol{f} - \boldsymbol{u}$ is minimized. Again, we define

# the squared distance as a function of the free parameters

# $c_0,\ldots,c_N$,

# $$

# E(c_0,\ldots,c_N) = (\boldsymbol{e},\boldsymbol{e}) = (\boldsymbol{f} -\sum_jc_j\boldsymbol{\psi}_j,\boldsymbol{f} -\sum_jc_j\boldsymbol{\psi}_j)

# \nonumber

# $$

#

#

#

# $$

# \begin{equation}

# = (\boldsymbol{f},\boldsymbol{f}) - 2\sum_{j=0}^N c_j(\boldsymbol{f},\boldsymbol{\psi}_j) +

# \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\boldsymbol{\psi}_p,\boldsymbol{\psi}_q){\thinspace .}

# \label{fem:vec:genE} \tag{15}

# \end{equation}

# $$

# Minimizing this $E$ with respect to the independent variables

# $c_0,\ldots,c_N$ is obtained by requiring

# $$

# \frac{\partial E}{\partial c_i} = 0,\quad i=0,\ldots,N

# {\thinspace .}

# $$

# The first term in ([15](#fem:vec:genE)) is independent of $c_i$, so its

# derivative vanishes.

# The second term in ([15](#fem:vec:genE)) is differentiated as follows:

#

#

#

# $$

# \begin{equation}

# \frac{\partial}{\partial c_i}

# 2\sum_{j=0}^N c_j(\boldsymbol{f},\boldsymbol{\psi}_j) = 2(\boldsymbol{f},\boldsymbol{\psi}_i),

# \label{_auto5} \tag{16}

# \end{equation}

# $$

# since the expression to be differentiated is a sum and only one term,

# $c_i(\boldsymbol{f},\boldsymbol{\psi}_i)$,

# contains $c_i$ (this term is linear in $c_i$).

# To understand this differentiation in detail,

# write out the sum specifically for,

# e.g, $N=3$ and $i=1$.

#

# The last term in ([15](#fem:vec:genE))

# is more tedious to differentiate. It can be wise to write out the

# double sum for $N=1$ and perform differentiation with respect to

# $c_0$ and $c_1$ to see the structure of the expression. Thereafter,

# one can generalize to an arbitrary $N$ and observe that

#

#

#

# $$

# \begin{equation}

# \frac{\partial}{\partial c_i}

# c_pc_q =

# \left\lbrace\begin{array}{ll}

# 0, & \hbox{ if } p\neq i\hbox{ and } q\neq i,\\

# c_q, & \hbox{ if } p=i\hbox{ and } q\neq i,\\

# c_p, & \hbox{ if } p\neq i\hbox{ and } q=i,\\

# 2c_i, & \hbox{ if } p=q= i{\thinspace .}\\

# \end{array}\right.

# \label{_auto6} \tag{17}

# \end{equation}

# $$

# Then

# $$

# \frac{\partial}{\partial c_i}

# \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\boldsymbol{\psi}_p,\boldsymbol{\psi}_q)

# = \sum_{p=0, p\neq i}^N c_p(\boldsymbol{\psi}_p,\boldsymbol{\psi}_i)

# + \sum_{q=0, q\neq i}^N c_q(\boldsymbol{\psi}_i,\boldsymbol{\psi}_q)

# +2c_i(\boldsymbol{\psi}_i,\boldsymbol{\psi}_i){\thinspace .}

# $$

# Since each of the two sums is missing the term $c_i(\boldsymbol{\psi}_i,\boldsymbol{\psi}_i)$,

# we may split the very last term in two, to get exactly that "missing"

# term for each sum. This idea allows us to write

#

#

#

# $$

# \begin{equation}

# \frac{\partial}{\partial c_i}

# \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\boldsymbol{\psi}_p,\boldsymbol{\psi}_q)

# = 2\sum_{j=0}^N c_i(\boldsymbol{\psi}_j,\boldsymbol{\psi}_i){\thinspace .}

# \label{_auto7} \tag{18}

# \end{equation}

# $$

# It then follows that setting

# $$

# \frac{\partial E}{\partial c_i} = 0,\quad i=0,\ldots,N,

# $$

# implies

# $$

# - 2(\boldsymbol{f},\boldsymbol{\psi}_i) + 2\sum_{j=0}^N c_i(\boldsymbol{\psi}_j,\boldsymbol{\psi}_i) = 0,\quad i=0,\ldots,N{\thinspace .}

# $$

# Moving the first term to the right-hand side shows that the equation is

# actually a *linear system* for the unknown parameters $c_0,\ldots,c_N$:

#

#

#

# $$

# \begin{equation}

# \sum_{j=0}^N A_{i,j} c_j = b_i, \quad i=0,\ldots,N,

# \label{fem:approx:vec:Np1dim:eqsys} \tag{19}

# \end{equation}

# $$

# where

#

#

#

# $$

# \begin{equation}

# A_{i,j} = (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j),

# \label{_auto8} \tag{20}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# b_i = (\boldsymbol{\psi}_i, \boldsymbol{f}){\thinspace .} \label{_auto9} \tag{21}

# \end{equation}

# $$

# We have changed the order of the two vectors in the inner

# product according to ([9](#fem:vec:rule:symmetry)):

# $$

# A_{i,j} = (\boldsymbol{\psi}_j,\boldsymbol{\psi}_i) = (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j),

# $$

# simply because the sequence $i$-$j$ looks more aesthetic.

#

# ### The Galerkin or projection method

#

# In analogy with the "one-dimensional" example in

# the section [Approximation of planar vectors](#fem:approx:vec:plane), it holds also here in the general

# case that minimizing the distance

# (error) $\boldsymbol{e}$ is equivalent to demanding that $\boldsymbol{e}$ is orthogonal to

# all $\boldsymbol{v}\in V$:

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e},\boldsymbol{v})=0,\quad \forall\boldsymbol{v}\in V{\thinspace .}

# \label{fem:approx:vec:Np1dim:Galerkin} \tag{22}

# \end{equation}

# $$

# Since any $\boldsymbol{v}\in V$ can be written as $\boldsymbol{v} =\sum_{i=0}^N c_i\boldsymbol{\psi}_i$,

# the statement ([22](#fem:approx:vec:Np1dim:Galerkin)) is equivalent to

# saying that

# $$

# (\boldsymbol{e}, \sum_{i=0}^N c_i\boldsymbol{\psi}_i) = 0,

# $$

# for any choice of coefficients $c_0,\ldots,c_N$.

# The latter equation can be rewritten as

# $$

# \sum_{i=0}^N c_i (\boldsymbol{e},\boldsymbol{\psi}_i) =0{\thinspace .}

# $$

# If this is to hold for arbitrary values of $c_0,\ldots,c_N$,

# we must require that each term in the sum vanishes, which means that

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e},\boldsymbol{\psi}_i)=0,\quad i=0,\ldots,N{\thinspace .}

# \label{fem:approx:vec:Np1dim:Galerkin0} \tag{23}

# \end{equation}

# $$

# These $N+1$ equations result in the same linear system as

# ([19](#fem:approx:vec:Np1dim:eqsys)):

# $$

# (\boldsymbol{f} - \sum_{j=0}^N c_j\boldsymbol{\psi}_j, \boldsymbol{\psi}_i) = (\boldsymbol{f}, \boldsymbol{\psi}_i) - \sum_{j=0}^N

# (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j)c_j = 0,

# $$

# and hence

# $$

# \sum_{j=0}^N (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j)c_j = (\boldsymbol{f}, \boldsymbol{\psi}_i),\quad i=0,\ldots, N

# {\thinspace .}

# $$

# So, instead of differentiating the

# $E(c_0,\ldots,c_N)$ function, we could simply use

# ([22](#fem:approx:vec:Np1dim:Galerkin)) as the principle for

# determining $c_0,\ldots,c_N$, resulting in the $N+1$

# equations ([23](#fem:approx:vec:Np1dim:Galerkin0)).

#

# The names *least squares method* or *least squares approximation*

# are natural since the calculations consists of

# minimizing $||\boldsymbol{e}||^2$, and $||\boldsymbol{e}||^2$ is a sum of squares

# of differences between the components in $\boldsymbol{f}$ and $\boldsymbol{u}$.

# We find $\boldsymbol{u}$ such that this sum of squares is minimized.

#

# The principle ([22](#fem:approx:vec:Np1dim:Galerkin)),

# or the equivalent form ([23](#fem:approx:vec:Np1dim:Galerkin0)),

# is known as *projection*. Almost the same mathematical idea

# was used by the Russian mathematician [Boris Galerkin](http://en.wikipedia.org/wiki/Boris_Galerkin) to solve

# differential equations, resulting in what is widely known as

# *Galerkin's method*.

#

# # Approximation principles

#

#

# Let $V$ be a function space spanned by a set of *basis functions*

# ${\psi}_0,\ldots,{\psi}_N$,

# $$

# V = \hbox{span}\,\{{\psi}_0,\ldots,{\psi}_N\},

# $$

# such that any function $u\in V$ can be written as a linear

# combination of the basis functions:

#

#

#

# $$

# \begin{equation}

# \label{fem:approx:ufem} \tag{24}

# u = \sum_{j\in{\mathcal{I}_s}} c_j{\psi}_j{\thinspace .}

# \end{equation}

# $$

# That is, we consider functions as vectors in a vector space - a

# so-called function space - and we have a finite set of basis

# functions that span the space just as basis vectors or unit vectors

# span a vector space.

#

# The index set ${\mathcal{I}_s}$ is defined as ${\mathcal{I}_s} =\{0,\ldots,N\}$ and is

# from now on used

# both for compact notation and for flexibility in the numbering of

# elements in sequences.

#

# For now, in this introduction, we shall look at functions of a

# single variable $x$:

# $u=u(x)$, ${\psi}_j={\psi}_j(x)$, $j\in{\mathcal{I}_s}$. Later, we will almost

# trivially extend the mathematical details

# to functions of two- or three-dimensional physical spaces.

# The approximation ([24](#fem:approx:ufem)) is typically used

# to discretize a problem in space. Other methods, most notably

# finite differences, are common for time discretization, although the

# form ([24](#fem:approx:ufem)) can be used in time as well.

#

# ## The least squares method

#

#

# Given a function $f(x)$, how can we determine its best approximation

# $u(x)\in V$? A natural starting point is to apply the same reasoning

# as we did for vectors in the section [Approximation of general vectors](#fem:approx:vec:Np1dim). That is,

# we minimize the distance between $u$ and $f$. However, this requires

# a norm for measuring distances, and a norm is most conveniently

# defined through an

# inner product. Viewing a function as a vector of infinitely

# many point values, one for each value of $x$, the inner product of

# two arbitrary functions $f(x)$ and $g(x)$ could

# intuitively be defined as the usual summation of

# pairwise "components" (values), with summation replaced by integration:

# $$

# (f,g) = \int f(x)g(x)\, {\, \mathrm{d}x}

# {\thinspace .}

# $$

# To fix the integration domain, we let $f(x)$ and ${\psi}_i(x)$

# be defined for a domain $\Omega\subset\mathbb{R}$.

# The inner product of two functions $f(x)$ and $g(x)$ is then

#

#

#

# $$

# \begin{equation}

# (f,g) = \int_\Omega f(x)g(x)\, {\, \mathrm{d}x}

# \label{fem:approx:LS:innerprod} \tag{25}

# {\thinspace .}

# \end{equation}

# $$

# The distance between $f$ and any function $u\in V$ is simply

# $f-u$, and the squared norm of this distance is

#

#

#

# $$

# \begin{equation}

# E = (f(x)-\sum_{j\in{\mathcal{I}_s}} c_j{\psi}_j(x), f(x)-\sum_{j\in{\mathcal{I}_s}} c_j{\psi}_j(x)){\thinspace .}

# \label{fem:approx:LS:E} \tag{26}

# \end{equation}

# $$

# Note the analogy with ([15](#fem:vec:genE)): the given function

# $f$ plays the role of the given vector $\boldsymbol{f}$, and the basis function

# ${\psi}_i$ plays the role of the basis vector $\boldsymbol{\psi}_i$.

# We can rewrite ([26](#fem:approx:LS:E)),

# through similar steps as used for the result

# ([15](#fem:vec:genE)), leading to

#

#

#

# $$

# \begin{equation}

# E(c_i, \ldots, c_N) = (f,f) -2\sum_{j\in{\mathcal{I}_s}} c_j(f,{\psi}_i)

# + \sum_{p\in{\mathcal{I}_s}}\sum_{q\in{\mathcal{I}_s}} c_pc_q({\psi}_p,{\psi}_q){\thinspace .} \label{_auto10} \tag{27}

# \end{equation}

# $$

# Minimizing this function of $N+1$ scalar variables

# $\left\{ {c}_i \right\}_{i\in{\mathcal{I}_s}}$, requires differentiation

# with respect to $c_i$, for all $i\in{\mathcal{I}_s}$. The resulting

# equations are very similar to those we had in the vector case,

# and we hence end up with a

# linear system of the form ([19](#fem:approx:vec:Np1dim:eqsys)), with

# basically the same expressions:

#

#

#

# $$

# \begin{equation}

# A_{i,j} = ({\psi}_i,{\psi}_j),

# \label{fem:approx:Aij} \tag{28}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# b_i = (f,{\psi}_i){\thinspace .}

# \label{fem:approx:bi} \tag{29}

# \end{equation}

# $$

# The only difference from

# ([19](#fem:approx:vec:Np1dim:eqsys))

# is that the inner product is defined in terms

# of integration rather than summation.

#

# ## The projection (or Galerkin) method

#

#

# As in the section [Approximation of general vectors](#fem:approx:vec:Np1dim), the minimization of $(e,e)$

# is equivalent to

#

#

#

# $$

# \begin{equation}

# (e,v)=0,\quad\forall v\in V{\thinspace .}

# \label{fem:approx:Galerkin} \tag{30}

# \end{equation}

# $$

# This is known as a projection of a function $f$ onto the subspace $V$.

# We may also call it a Galerkin method for approximating functions.

# Using the same reasoning as

# in

# ([22](#fem:approx:vec:Np1dim:Galerkin))-([23](#fem:approx:vec:Np1dim:Galerkin0)),

# it follows that ([30](#fem:approx:Galerkin)) is equivalent to

#

#

#

# $$

# \begin{equation}

# (e,{\psi}_i)=0,\quad i\in{\mathcal{I}_s}{\thinspace .}

# \label{fem:approx:Galerkin0} \tag{31}

# \end{equation}

# $$

# Inserting $e=f-u$ in this equation and ordering terms, as in the

# multi-dimensional vector case, we end up with a linear

# system with a coefficient matrix ([28](#fem:approx:Aij)) and

# right-hand side vector ([29](#fem:approx:bi)).

#

# Whether we work with vectors in the plane, general vectors, or

# functions in function spaces, the least squares principle and

# the projection or Galerkin method are equivalent.

#

# ## Example of linear approximation

#

#

# Let us apply the theory in the previous section to a simple problem:

# given a parabola $f(x)=10(x-1)^2-1$ for $x\in\Omega=[1,2]$, find

# the best approximation $u(x)$ in the space of all linear functions:

# $$

# V = \hbox{span}\,\{1, x\}{\thinspace .}

# $$

# With our notation, ${\psi}_0(x)=1$, ${\psi}_1(x)=x$, and $N=1$.

# We seek

# $$

# u=c_0{\psi}_0(x) + c_1{\psi}_1(x) = c_0 + c_1x,

# $$

# where

# $c_0$ and $c_1$ are found by solving a $2\times 2$ linear system.

# The coefficient matrix has elements

#

#

#

# $$

# \begin{equation}

# A_{0,0} = ({\psi}_0,{\psi}_0) = \int_1^21\cdot 1\, {\, \mathrm{d}x} = 1,

# \label{_auto11} \tag{32}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# A_{0,1} = ({\psi}_0,{\psi}_1) = \int_1^2 1\cdot x\, {\, \mathrm{d}x} = 3/2,

# \label{_auto12} \tag{33}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# A_{1,0} = A_{0,1} = 3/2,

# \label{_auto13} \tag{34}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# A_{1,1} = ({\psi}_1,{\psi}_1) = \int_1^2 x\cdot x\,{\, \mathrm{d}x} = 7/3{\thinspace .} \label{_auto14} \tag{35}

# \end{equation}

# $$

# The corresponding right-hand side is

#

#

#

# $$

# \begin{equation}

# b_1 = (f,{\psi}_0) = \int_1^2 (10(x-1)^2 - 1)\cdot 1 \, {\, \mathrm{d}x} = 7/3,

# \label{_auto15} \tag{36}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# b_2 = (f,{\psi}_1) = \int_1^2 (10(x-1)^2 - 1)\cdot x\, {\, \mathrm{d}x} = 13/3{\thinspace .} \label{_auto16} \tag{37}

# \end{equation}

# $$

# Solving the linear system results in

#

#

#

# $$

# \begin{equation}

# c_0 = -38/3,\quad c_1 = 10,

# \label{_auto17} \tag{38}

# \end{equation}

# $$

# and consequently

#

#

#

# $$

# \begin{equation}

# u(x) = 10x - \frac{38}{3}{\thinspace .} \label{_auto18} \tag{39}

# \end{equation}

# $$

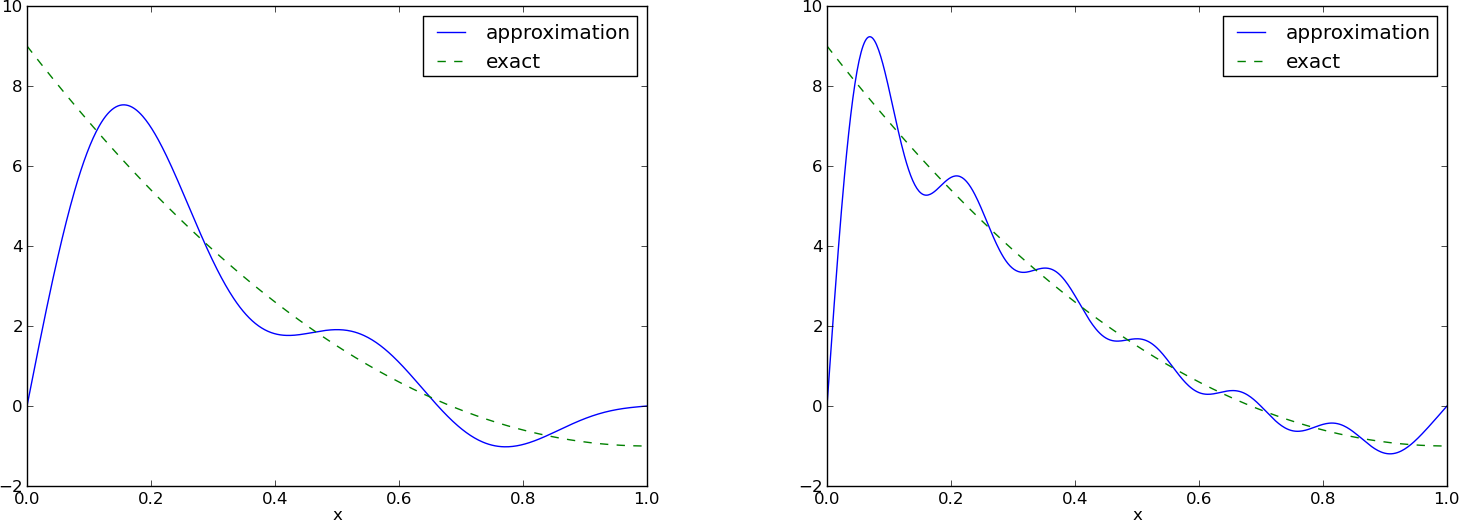

# [Figure](#fem:approx:global:fig:parabola:linear) displays the

# parabola and its best approximation in the space of all linear functions.

#

#

#

#

#

#

#

#

#

#

# We introduce the vector space $V$

# spanned by the vector $\boldsymbol{\psi}_0=(a,b)$:

#

#

#

# $$

# \begin{equation}

# V = \mbox{span}\,\{ \boldsymbol{\psi}_0\}{\thinspace .} \label{_auto1} \tag{2}

# \end{equation}

# $$

# We say that $\boldsymbol{\psi}_0$ is a *basis vector* in the space $V$.

# Our aim is to find the vector

#

#

#

# $$

# \begin{equation}

# \label{uc0} \tag{3}

# \boldsymbol{u} = c_0\boldsymbol{\psi}_0\in V

# \end{equation}

# $$

# which best approximates

# the given vector $\boldsymbol{f} = (3,5)$. A reasonable criterion for a best

# approximation could be to minimize the length of the difference between

# the approximate $\boldsymbol{u}$ and the given $\boldsymbol{f}$. The difference, or error

# $\boldsymbol{e} = \boldsymbol{f} -\boldsymbol{u}$, has its length given by the *norm*

# $$

# ||\boldsymbol{e}|| = (\boldsymbol{e},\boldsymbol{e})^{\frac{1}{2}},

# $$

# where $(\boldsymbol{e},\boldsymbol{e})$ is the *inner product* of $\boldsymbol{e}$ and itself. The inner

# product, also called *scalar product* or *dot product*, of two vectors

# $\boldsymbol{u}=(u_0,u_1)$ and $\boldsymbol{v} =(v_0,v_1)$ is defined as

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{u}, \boldsymbol{v}) = u_0v_0 + u_1v_1{\thinspace .} \label{_auto2} \tag{4}

# \end{equation}

# $$

# **Remark.** We should point out that we use the notation

# $(\cdot,\cdot)$ for two different things: $(a,b)$ for scalar

# quantities $a$ and $b$ means the vector starting at the origin and

# ending in the point $(a,b)$, while $(\boldsymbol{u},\boldsymbol{v})$ with vectors $\boldsymbol{u}$ and

# $\boldsymbol{v}$ means the inner product of these vectors. Since vectors are here

# written in boldface font there should be no confusion. We may add

# that the norm associated with this inner product is the usual

# Euclidean length of a vector, i.e.,

# $$

# \|\boldsymbol{u}\| = \sqrt{(\boldsymbol{u},\boldsymbol{u})} = \sqrt{u_0^2 + u_1^2}

# $$

# ### The least squares method

#

# We now want to determine the $\boldsymbol{u}$ that minimizes $||\boldsymbol{e}||$, that is

# we want to compute the optimal $c_0$ in ([3](#uc0)). The algebra

# is simplified if we minimize the square of the norm, $||\boldsymbol{e}||^2 = (\boldsymbol{e}, \boldsymbol{e})$,

# instead of the norm itself.

# Define the function

#

#

#

# $$

# \begin{equation}

# E(c_0) = (\boldsymbol{e},\boldsymbol{e}) = (\boldsymbol{f} - c_0\boldsymbol{\psi}_0, \boldsymbol{f} - c_0\boldsymbol{\psi}_0)

# {\thinspace .}

# \label{_auto3} \tag{5}

# \end{equation}

# $$

# We can rewrite the expressions of the right-hand side in a more

# convenient form for further use:

#

#

#

# $$

# \begin{equation}

# E(c_0) = (\boldsymbol{f},\boldsymbol{f}) - 2c_0(\boldsymbol{f},\boldsymbol{\psi}_0) + c_0^2(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0){\thinspace .}

# \label{fem:vec:E} \tag{6}

# \end{equation}

# $$

# This rewrite results from using the following fundamental rules for inner

# product spaces:

#

#

#

# $$

# \begin{equation}

# (\alpha\boldsymbol{u},\boldsymbol{v})=\alpha(\boldsymbol{u},\boldsymbol{v}),\quad \alpha\in\mathbb{R},

# \label{fem:vec:rule:scalarmult} \tag{7}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{u} +\boldsymbol{v},\boldsymbol{w}) = (\boldsymbol{u},\boldsymbol{w}) + (\boldsymbol{v}, \boldsymbol{w}),

# \label{fem:vec:rule:sum} \tag{8}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# \label{fem:vec:rule:symmetry} \tag{9}

# (\boldsymbol{u}, \boldsymbol{v}) = (\boldsymbol{v}, \boldsymbol{u}){\thinspace .}

# \end{equation}

# $$

# Minimizing $E(c_0)$ implies finding $c_0$ such that

# $$

# \frac{\partial E}{\partial c_0} = 0{\thinspace .}

# $$

# It turns out that $E$ has one unique minimum and no maximum point.

# Now, when differentiating ([6](#fem:vec:E)) with respect to $c_0$, note

# that none of the inner product expressions depend on $c_0$, so we simply get

#

#

#

# $$

# \begin{equation}

# \frac{\partial E}{\partial c_0} = -2(\boldsymbol{f},\boldsymbol{\psi}_0) + 2c_0 (\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)

# {\thinspace .}

# \label{fem:vec:dEdc0:v1} \tag{10}

# \end{equation}

# $$

# Setting the above expression equal to zero and solving for $c_0$ gives

#

#

#

# $$

# \begin{equation}

# c_0 = \frac{(\boldsymbol{f},\boldsymbol{\psi}_0)}{(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)},

# \label{fem:vec:c0} \tag{11}

# \end{equation}

# $$

# which in the present case, with $\boldsymbol{\psi}_0=(a,b)$, results in

#

#

#

# $$

# \begin{equation}

# c_0 = \frac{3a + 5b}{a^2 + b^2}{\thinspace .} \label{_auto4} \tag{12}

# \end{equation}

# $$

# For later, it is worth mentioning that setting

# the key equation ([10](#fem:vec:dEdc0:v1)) to zero and ordering

# the terms lead to

# $$

# (\boldsymbol{f}-c_0\boldsymbol{\psi}_0,\boldsymbol{\psi}_0) = 0,

# $$

# or

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e}, \boldsymbol{\psi}_0) = 0

# {\thinspace .}

# \label{fem:vec:dEdc0:Galerkin} \tag{13}

# \end{equation}

# $$

# This implication of minimizing $E$ is an important result that we shall

# make much use of.

#

#

#

#

# ### The projection method

#

# We shall now show that minimizing $||\boldsymbol{e}||^2$ implies that $\boldsymbol{e}$ is

# orthogonal to *any* vector $\boldsymbol{v}$ in the space $V$. This result is

# visually quite clear from [Figure](#fem:approx:vec:plane:fig) (think of

# other vectors along the line $(a,b)$: all of them will lead to

# a larger distance between the approximation and $\boldsymbol{f}$).

# Then we see mathematically that $\boldsymbol{e}$ is orthogonal to any vector $\boldsymbol{v}$

# in the space $V$ and we may

# express any $\boldsymbol{v}\in V$ as $\boldsymbol{v}=s\boldsymbol{\psi}_0$ for any scalar parameter $s$

# (recall that two vectors are orthogonal when their inner product vanishes).

# Then we calculate the inner product

# $$

# \begin{align*}

# (\boldsymbol{e}, s\boldsymbol{\psi}_0) &= (\boldsymbol{f} - c_0\boldsymbol{\psi}_0, s\boldsymbol{\psi}_0)\\

# &= (\boldsymbol{f},s\boldsymbol{\psi}_0) - (c_0\boldsymbol{\psi}_0, s\boldsymbol{\psi}_0)\\

# &= s(\boldsymbol{f},\boldsymbol{\psi}_0) - sc_0(\boldsymbol{\psi}_0, \boldsymbol{\psi}_0)\\

# &= s(\boldsymbol{f},\boldsymbol{\psi}_0) - s\frac{(\boldsymbol{f},\boldsymbol{\psi}_0)}{(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)}(\boldsymbol{\psi}_0,\boldsymbol{\psi}_0)\\

# &= s\left( (\boldsymbol{f},\boldsymbol{\psi}_0) - (\boldsymbol{f},\boldsymbol{\psi}_0)\right)\\

# &=0{\thinspace .}

# \end{align*}

# $$

# Therefore, instead of minimizing the square of the norm, we could

# demand that $\boldsymbol{e}$ is orthogonal to any vector in $V$, which in our present

# simple case amounts to a single vector only.

# This method is known as *projection*.

# (The approach can also be referred to as a Galerkin method as

# explained at the end of the section [Approximation of general vectors](#fem:approx:vec:Np1dim).)

#

# Mathematically, the projection method is stated

# by the equation

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e}, \boldsymbol{v}) = 0,\quad\forall\boldsymbol{v}\in V{\thinspace .}

# \label{fem:vec:Galerkin1} \tag{14}

# \end{equation}

# $$

# An arbitrary $\boldsymbol{v}\in V$ can be expressed as

# $s\boldsymbol{\psi}_0$, $s\in\mathbb{R}$, and therefore

# ([14](#fem:vec:Galerkin1)) implies

# $$

# (\boldsymbol{e},s\boldsymbol{\psi}_0) = s(\boldsymbol{e}, \boldsymbol{\psi}_0) = 0,

# $$

# which means that the error must be orthogonal to the basis vector in

# the space $V$:

# $$

# (\boldsymbol{e}, \boldsymbol{\psi}_0)=0\quad\hbox{or}\quad

# (\boldsymbol{f} - c_0\boldsymbol{\psi}_0, \boldsymbol{\psi}_0)=0,

# $$

# which is what we found in ([13](#fem:vec:dEdc0:Galerkin)) from

# the least squares computations.

#

#

#

#

# ## Approximation of general vectors

#

#

#

# Let us generalize the vector approximation from the previous section

# to vectors in spaces with arbitrary dimension. Given some vector $\boldsymbol{f}$,

# we want to find the best approximation to this vector in

# the space

# $$

# V = \hbox{span}\,\{\boldsymbol{\psi}_0,\ldots,\boldsymbol{\psi}_N\}

# {\thinspace .}

# $$

# We assume that the space has dimension $N+1$ and

# that *basis vectors* $\boldsymbol{\psi}_0,\ldots,\boldsymbol{\psi}_N$ are

# linearly independent so that none of them are redundant.

# Any vector $\boldsymbol{u}\in V$ can then be written as a linear combination

# of the basis vectors, i.e.,

# $$

# \boldsymbol{u} = \sum_{j=0}^N c_j\boldsymbol{\psi}_j,

# $$

# where $c_j\in\mathbb{R}$ are scalar coefficients to be determined.

#

# ### The least squares method

#

# Now we want to find $c_0,\ldots,c_N$, such that $\boldsymbol{u}$ is the best

# approximation to $\boldsymbol{f}$ in the sense that the distance (error)

# $\boldsymbol{e} = \boldsymbol{f} - \boldsymbol{u}$ is minimized. Again, we define

# the squared distance as a function of the free parameters

# $c_0,\ldots,c_N$,

# $$

# E(c_0,\ldots,c_N) = (\boldsymbol{e},\boldsymbol{e}) = (\boldsymbol{f} -\sum_jc_j\boldsymbol{\psi}_j,\boldsymbol{f} -\sum_jc_j\boldsymbol{\psi}_j)

# \nonumber

# $$

#

#

#

# $$

# \begin{equation}

# = (\boldsymbol{f},\boldsymbol{f}) - 2\sum_{j=0}^N c_j(\boldsymbol{f},\boldsymbol{\psi}_j) +

# \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\boldsymbol{\psi}_p,\boldsymbol{\psi}_q){\thinspace .}

# \label{fem:vec:genE} \tag{15}

# \end{equation}

# $$

# Minimizing this $E$ with respect to the independent variables

# $c_0,\ldots,c_N$ is obtained by requiring

# $$

# \frac{\partial E}{\partial c_i} = 0,\quad i=0,\ldots,N

# {\thinspace .}

# $$

# The first term in ([15](#fem:vec:genE)) is independent of $c_i$, so its

# derivative vanishes.

# The second term in ([15](#fem:vec:genE)) is differentiated as follows:

#

#

#

# $$

# \begin{equation}

# \frac{\partial}{\partial c_i}

# 2\sum_{j=0}^N c_j(\boldsymbol{f},\boldsymbol{\psi}_j) = 2(\boldsymbol{f},\boldsymbol{\psi}_i),

# \label{_auto5} \tag{16}

# \end{equation}

# $$

# since the expression to be differentiated is a sum and only one term,

# $c_i(\boldsymbol{f},\boldsymbol{\psi}_i)$,

# contains $c_i$ (this term is linear in $c_i$).

# To understand this differentiation in detail,

# write out the sum specifically for,

# e.g, $N=3$ and $i=1$.

#

# The last term in ([15](#fem:vec:genE))

# is more tedious to differentiate. It can be wise to write out the

# double sum for $N=1$ and perform differentiation with respect to

# $c_0$ and $c_1$ to see the structure of the expression. Thereafter,

# one can generalize to an arbitrary $N$ and observe that

#

#

#

# $$

# \begin{equation}

# \frac{\partial}{\partial c_i}

# c_pc_q =

# \left\lbrace\begin{array}{ll}

# 0, & \hbox{ if } p\neq i\hbox{ and } q\neq i,\\

# c_q, & \hbox{ if } p=i\hbox{ and } q\neq i,\\

# c_p, & \hbox{ if } p\neq i\hbox{ and } q=i,\\

# 2c_i, & \hbox{ if } p=q= i{\thinspace .}\\

# \end{array}\right.

# \label{_auto6} \tag{17}

# \end{equation}

# $$

# Then

# $$

# \frac{\partial}{\partial c_i}

# \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\boldsymbol{\psi}_p,\boldsymbol{\psi}_q)

# = \sum_{p=0, p\neq i}^N c_p(\boldsymbol{\psi}_p,\boldsymbol{\psi}_i)

# + \sum_{q=0, q\neq i}^N c_q(\boldsymbol{\psi}_i,\boldsymbol{\psi}_q)

# +2c_i(\boldsymbol{\psi}_i,\boldsymbol{\psi}_i){\thinspace .}

# $$

# Since each of the two sums is missing the term $c_i(\boldsymbol{\psi}_i,\boldsymbol{\psi}_i)$,

# we may split the very last term in two, to get exactly that "missing"

# term for each sum. This idea allows us to write

#

#

#

# $$

# \begin{equation}

# \frac{\partial}{\partial c_i}

# \sum_{p=0}^N\sum_{q=0}^N c_pc_q(\boldsymbol{\psi}_p,\boldsymbol{\psi}_q)

# = 2\sum_{j=0}^N c_i(\boldsymbol{\psi}_j,\boldsymbol{\psi}_i){\thinspace .}

# \label{_auto7} \tag{18}

# \end{equation}

# $$

# It then follows that setting

# $$

# \frac{\partial E}{\partial c_i} = 0,\quad i=0,\ldots,N,

# $$

# implies

# $$

# - 2(\boldsymbol{f},\boldsymbol{\psi}_i) + 2\sum_{j=0}^N c_i(\boldsymbol{\psi}_j,\boldsymbol{\psi}_i) = 0,\quad i=0,\ldots,N{\thinspace .}

# $$

# Moving the first term to the right-hand side shows that the equation is

# actually a *linear system* for the unknown parameters $c_0,\ldots,c_N$:

#

#

#

# $$

# \begin{equation}

# \sum_{j=0}^N A_{i,j} c_j = b_i, \quad i=0,\ldots,N,

# \label{fem:approx:vec:Np1dim:eqsys} \tag{19}

# \end{equation}

# $$

# where

#

#

#

# $$

# \begin{equation}

# A_{i,j} = (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j),

# \label{_auto8} \tag{20}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# b_i = (\boldsymbol{\psi}_i, \boldsymbol{f}){\thinspace .} \label{_auto9} \tag{21}

# \end{equation}

# $$

# We have changed the order of the two vectors in the inner

# product according to ([9](#fem:vec:rule:symmetry)):

# $$

# A_{i,j} = (\boldsymbol{\psi}_j,\boldsymbol{\psi}_i) = (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j),

# $$

# simply because the sequence $i$-$j$ looks more aesthetic.

#

# ### The Galerkin or projection method

#

# In analogy with the "one-dimensional" example in

# the section [Approximation of planar vectors](#fem:approx:vec:plane), it holds also here in the general

# case that minimizing the distance

# (error) $\boldsymbol{e}$ is equivalent to demanding that $\boldsymbol{e}$ is orthogonal to

# all $\boldsymbol{v}\in V$:

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e},\boldsymbol{v})=0,\quad \forall\boldsymbol{v}\in V{\thinspace .}

# \label{fem:approx:vec:Np1dim:Galerkin} \tag{22}

# \end{equation}

# $$

# Since any $\boldsymbol{v}\in V$ can be written as $\boldsymbol{v} =\sum_{i=0}^N c_i\boldsymbol{\psi}_i$,

# the statement ([22](#fem:approx:vec:Np1dim:Galerkin)) is equivalent to

# saying that

# $$

# (\boldsymbol{e}, \sum_{i=0}^N c_i\boldsymbol{\psi}_i) = 0,

# $$

# for any choice of coefficients $c_0,\ldots,c_N$.

# The latter equation can be rewritten as

# $$

# \sum_{i=0}^N c_i (\boldsymbol{e},\boldsymbol{\psi}_i) =0{\thinspace .}

# $$

# If this is to hold for arbitrary values of $c_0,\ldots,c_N$,

# we must require that each term in the sum vanishes, which means that

#

#

#

# $$

# \begin{equation}

# (\boldsymbol{e},\boldsymbol{\psi}_i)=0,\quad i=0,\ldots,N{\thinspace .}

# \label{fem:approx:vec:Np1dim:Galerkin0} \tag{23}

# \end{equation}

# $$

# These $N+1$ equations result in the same linear system as

# ([19](#fem:approx:vec:Np1dim:eqsys)):

# $$

# (\boldsymbol{f} - \sum_{j=0}^N c_j\boldsymbol{\psi}_j, \boldsymbol{\psi}_i) = (\boldsymbol{f}, \boldsymbol{\psi}_i) - \sum_{j=0}^N

# (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j)c_j = 0,

# $$

# and hence

# $$

# \sum_{j=0}^N (\boldsymbol{\psi}_i,\boldsymbol{\psi}_j)c_j = (\boldsymbol{f}, \boldsymbol{\psi}_i),\quad i=0,\ldots, N

# {\thinspace .}

# $$

# So, instead of differentiating the

# $E(c_0,\ldots,c_N)$ function, we could simply use

# ([22](#fem:approx:vec:Np1dim:Galerkin)) as the principle for

# determining $c_0,\ldots,c_N$, resulting in the $N+1$

# equations ([23](#fem:approx:vec:Np1dim:Galerkin0)).

#

# The names *least squares method* or *least squares approximation*

# are natural since the calculations consists of

# minimizing $||\boldsymbol{e}||^2$, and $||\boldsymbol{e}||^2$ is a sum of squares

# of differences between the components in $\boldsymbol{f}$ and $\boldsymbol{u}$.

# We find $\boldsymbol{u}$ such that this sum of squares is minimized.

#

# The principle ([22](#fem:approx:vec:Np1dim:Galerkin)),

# or the equivalent form ([23](#fem:approx:vec:Np1dim:Galerkin0)),

# is known as *projection*. Almost the same mathematical idea

# was used by the Russian mathematician [Boris Galerkin](http://en.wikipedia.org/wiki/Boris_Galerkin) to solve

# differential equations, resulting in what is widely known as

# *Galerkin's method*.

#

# # Approximation principles

#

#

# Let $V$ be a function space spanned by a set of *basis functions*

# ${\psi}_0,\ldots,{\psi}_N$,

# $$

# V = \hbox{span}\,\{{\psi}_0,\ldots,{\psi}_N\},

# $$

# such that any function $u\in V$ can be written as a linear

# combination of the basis functions:

#

#

#

# $$

# \begin{equation}

# \label{fem:approx:ufem} \tag{24}

# u = \sum_{j\in{\mathcal{I}_s}} c_j{\psi}_j{\thinspace .}

# \end{equation}

# $$

# That is, we consider functions as vectors in a vector space - a

# so-called function space - and we have a finite set of basis

# functions that span the space just as basis vectors or unit vectors

# span a vector space.

#

# The index set ${\mathcal{I}_s}$ is defined as ${\mathcal{I}_s} =\{0,\ldots,N\}$ and is

# from now on used

# both for compact notation and for flexibility in the numbering of

# elements in sequences.

#

# For now, in this introduction, we shall look at functions of a

# single variable $x$:

# $u=u(x)$, ${\psi}_j={\psi}_j(x)$, $j\in{\mathcal{I}_s}$. Later, we will almost

# trivially extend the mathematical details

# to functions of two- or three-dimensional physical spaces.

# The approximation ([24](#fem:approx:ufem)) is typically used

# to discretize a problem in space. Other methods, most notably

# finite differences, are common for time discretization, although the

# form ([24](#fem:approx:ufem)) can be used in time as well.

#

# ## The least squares method

#

#

# Given a function $f(x)$, how can we determine its best approximation

# $u(x)\in V$? A natural starting point is to apply the same reasoning

# as we did for vectors in the section [Approximation of general vectors](#fem:approx:vec:Np1dim). That is,

# we minimize the distance between $u$ and $f$. However, this requires

# a norm for measuring distances, and a norm is most conveniently

# defined through an

# inner product. Viewing a function as a vector of infinitely

# many point values, one for each value of $x$, the inner product of

# two arbitrary functions $f(x)$ and $g(x)$ could

# intuitively be defined as the usual summation of

# pairwise "components" (values), with summation replaced by integration:

# $$

# (f,g) = \int f(x)g(x)\, {\, \mathrm{d}x}

# {\thinspace .}

# $$

# To fix the integration domain, we let $f(x)$ and ${\psi}_i(x)$

# be defined for a domain $\Omega\subset\mathbb{R}$.

# The inner product of two functions $f(x)$ and $g(x)$ is then

#

#

#

# $$

# \begin{equation}

# (f,g) = \int_\Omega f(x)g(x)\, {\, \mathrm{d}x}

# \label{fem:approx:LS:innerprod} \tag{25}

# {\thinspace .}

# \end{equation}

# $$

# The distance between $f$ and any function $u\in V$ is simply

# $f-u$, and the squared norm of this distance is

#

#

#

# $$

# \begin{equation}

# E = (f(x)-\sum_{j\in{\mathcal{I}_s}} c_j{\psi}_j(x), f(x)-\sum_{j\in{\mathcal{I}_s}} c_j{\psi}_j(x)){\thinspace .}

# \label{fem:approx:LS:E} \tag{26}

# \end{equation}

# $$

# Note the analogy with ([15](#fem:vec:genE)): the given function

# $f$ plays the role of the given vector $\boldsymbol{f}$, and the basis function

# ${\psi}_i$ plays the role of the basis vector $\boldsymbol{\psi}_i$.

# We can rewrite ([26](#fem:approx:LS:E)),

# through similar steps as used for the result

# ([15](#fem:vec:genE)), leading to

#

#

#

# $$

# \begin{equation}

# E(c_i, \ldots, c_N) = (f,f) -2\sum_{j\in{\mathcal{I}_s}} c_j(f,{\psi}_i)

# + \sum_{p\in{\mathcal{I}_s}}\sum_{q\in{\mathcal{I}_s}} c_pc_q({\psi}_p,{\psi}_q){\thinspace .} \label{_auto10} \tag{27}

# \end{equation}

# $$

# Minimizing this function of $N+1$ scalar variables

# $\left\{ {c}_i \right\}_{i\in{\mathcal{I}_s}}$, requires differentiation

# with respect to $c_i$, for all $i\in{\mathcal{I}_s}$. The resulting

# equations are very similar to those we had in the vector case,

# and we hence end up with a

# linear system of the form ([19](#fem:approx:vec:Np1dim:eqsys)), with

# basically the same expressions:

#

#

#

# $$

# \begin{equation}

# A_{i,j} = ({\psi}_i,{\psi}_j),

# \label{fem:approx:Aij} \tag{28}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# b_i = (f,{\psi}_i){\thinspace .}

# \label{fem:approx:bi} \tag{29}

# \end{equation}

# $$

# The only difference from

# ([19](#fem:approx:vec:Np1dim:eqsys))

# is that the inner product is defined in terms

# of integration rather than summation.

#

# ## The projection (or Galerkin) method

#

#

# As in the section [Approximation of general vectors](#fem:approx:vec:Np1dim), the minimization of $(e,e)$

# is equivalent to

#

#

#

# $$

# \begin{equation}

# (e,v)=0,\quad\forall v\in V{\thinspace .}

# \label{fem:approx:Galerkin} \tag{30}

# \end{equation}

# $$

# This is known as a projection of a function $f$ onto the subspace $V$.

# We may also call it a Galerkin method for approximating functions.

# Using the same reasoning as

# in

# ([22](#fem:approx:vec:Np1dim:Galerkin))-([23](#fem:approx:vec:Np1dim:Galerkin0)),

# it follows that ([30](#fem:approx:Galerkin)) is equivalent to

#

#

#

# $$

# \begin{equation}

# (e,{\psi}_i)=0,\quad i\in{\mathcal{I}_s}{\thinspace .}

# \label{fem:approx:Galerkin0} \tag{31}

# \end{equation}

# $$

# Inserting $e=f-u$ in this equation and ordering terms, as in the

# multi-dimensional vector case, we end up with a linear

# system with a coefficient matrix ([28](#fem:approx:Aij)) and

# right-hand side vector ([29](#fem:approx:bi)).

#

# Whether we work with vectors in the plane, general vectors, or

# functions in function spaces, the least squares principle and

# the projection or Galerkin method are equivalent.

#

# ## Example of linear approximation

#

#

# Let us apply the theory in the previous section to a simple problem:

# given a parabola $f(x)=10(x-1)^2-1$ for $x\in\Omega=[1,2]$, find

# the best approximation $u(x)$ in the space of all linear functions:

# $$

# V = \hbox{span}\,\{1, x\}{\thinspace .}

# $$

# With our notation, ${\psi}_0(x)=1$, ${\psi}_1(x)=x$, and $N=1$.

# We seek

# $$

# u=c_0{\psi}_0(x) + c_1{\psi}_1(x) = c_0 + c_1x,

# $$

# where

# $c_0$ and $c_1$ are found by solving a $2\times 2$ linear system.

# The coefficient matrix has elements

#

#

#

# $$

# \begin{equation}

# A_{0,0} = ({\psi}_0,{\psi}_0) = \int_1^21\cdot 1\, {\, \mathrm{d}x} = 1,

# \label{_auto11} \tag{32}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# A_{0,1} = ({\psi}_0,{\psi}_1) = \int_1^2 1\cdot x\, {\, \mathrm{d}x} = 3/2,

# \label{_auto12} \tag{33}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# A_{1,0} = A_{0,1} = 3/2,

# \label{_auto13} \tag{34}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# A_{1,1} = ({\psi}_1,{\psi}_1) = \int_1^2 x\cdot x\,{\, \mathrm{d}x} = 7/3{\thinspace .} \label{_auto14} \tag{35}

# \end{equation}

# $$

# The corresponding right-hand side is

#

#

#

# $$

# \begin{equation}

# b_1 = (f,{\psi}_0) = \int_1^2 (10(x-1)^2 - 1)\cdot 1 \, {\, \mathrm{d}x} = 7/3,

# \label{_auto15} \tag{36}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# b_2 = (f,{\psi}_1) = \int_1^2 (10(x-1)^2 - 1)\cdot x\, {\, \mathrm{d}x} = 13/3{\thinspace .} \label{_auto16} \tag{37}

# \end{equation}

# $$

# Solving the linear system results in

#

#

#

# $$

# \begin{equation}

# c_0 = -38/3,\quad c_1 = 10,

# \label{_auto17} \tag{38}

# \end{equation}

# $$

# and consequently

#

#

#

# $$

# \begin{equation}

# u(x) = 10x - \frac{38}{3}{\thinspace .} \label{_auto18} \tag{39}

# \end{equation}

# $$

# [Figure](#fem:approx:global:fig:parabola:linear) displays the

# parabola and its best approximation in the space of all linear functions.

#

#

#

#

#

# Best approximation of a parabola by a straight line.

# #

#

#

#

# ## Implementation of the least squares method

#

#

# ### Symbolic integration

#

# The linear system can be computed either symbolically or

# numerically (a numerical integration rule is needed in the latter case).

# Let us first compute the system and its solution symbolically, i.e.,

# using classical "pen and paper" mathematics with symbols.

# The Python package `sympy` can greatly help with this type of

# mathematics, and will therefore be frequently used in this text.

# Some basic familiarity with `sympy` is assumed, typically

# `symbols`, `integrate`, `diff`, `expand`, and `simplify`. Much can be learned

# by studying the many applications of `sympy` that will be presented.

#

# Below is a function for symbolic computation of the linear system,

# where $f(x)$ is given as a `sympy` expression `f` involving

# the symbol `x`, `psi` is a list of expressions for $\left\{ {{\psi}}_i \right\}_{i\in{\mathcal{I}_s}}$,

# and `Omega` is a 2-tuple/list holding the limits of the domain $\Omega$:

# In[1]:

import sympy as sym

def least_squares(f, psi, Omega):

N = len(psi) - 1

A = sym.zeros(N+1, N+1)

b = sym.zeros(N+1, 1)

x = sym.Symbol('x')

for i in range(N+1):

for j in range(i, N+1):

A[i,j] = sym.integrate(psi[i]*psi[j],

(x, Omega[0], Omega[1]))

A[j,i] = A[i,j]

b[i,0] = sym.integrate(psi[i]*f, (x, Omega[0], Omega[1]))

c = A.LUsolve(b)

# Note: c is a sympy Matrix object, solution is in c[:,0]

u = 0

for i in range(len(psi)):

u += c[i,0]*psi[i]

return u, c

# Observe that we exploit the symmetry of the coefficient matrix:

# only the upper triangular part is computed. Symbolic integration, also in

# `sympy`, is often time consuming, and (roughly) halving the

# work has noticeable effect on the waiting time for the computations to

# finish.

#

# **Notice.**

#

# We remark that the symbols in `sympy` are created and stored in

# a symbol factory that is indexed by the expression used in the construction

# and that repeated constructions from the same expression will not create

# new objects. The following code illustrates the behavior of the

# symbol factory:

# In[2]:

from sympy import *

x0 = Symbol("x")

x1 = Symbol("x")

id(x0) ==id(x1)

# In[3]:

a0 = 3.0

a1 = 3.0

id(a0) ==id(a1)

# ### Fall back on numerical integration

#

# Obviously, `sympy` may fail to successfully integrate

# $\int_\Omega{\psi}_i{\psi}_j{\, \mathrm{d}x}$, and

# especially $\int_\Omega f{\psi}_i{\, \mathrm{d}x}$, symbolically.

# Therefore, we should extend

# the `least_squares` function such that it falls back on

# numerical integration if the symbolic integration is unsuccessful.

# In the latter case, the returned value from `sympy`'s

# `integrate` function is an object of type `Integral`.

# We can test on this type and utilize the `mpmath` module

# to perform numerical integration of high precision.

# Even when `sympy` manages to integrate symbolically, it can

# take an undesirably long time. We therefore include an

# argument `symbolic` that governs whether or not to try

# symbolic integration. Here is a complete and

# improved version of the previous function `least_squares`:

# In[11]:

def least_squares(f, psi, Omega, symbolic=True):

N = len(psi) - 1

A = sym.zeros(N+1, N+1)

b = sym.zeros(N+1, 1)

x = sym.Symbol('x')

for i in range(N+1):

for j in range(i, N+1):

integrand = psi[i]*psi[j]

if symbolic:

I = sym.integrate(integrand, (x, Omega[0], Omega[1]))

if not symbolic or isinstance(I, sym.Integral):

# Could not integrate symbolically, use numerical int.

integrand = sym.lambdify([x], integrand, 'mpmath')

I = mpmath.quad(integrand, [Omega[0], Omega[1]])

A[i,j] = A[j,i] = I

integrand = psi[i]*f

if symbolic:

I = sym.integrate(integrand, (x, Omega[0], Omega[1]))

if not symbolic or isinstance(I, sym.Integral):

# Could not integrate symbolically, use numerical int.

integrand = sym.lambdify([x], integrand, 'mpmath')

I = mpmath.quad(integrand, [Omega[0], Omega[1]])

b[i,0] = I

if symbolic:

c = A.LUsolve(b) # symbolic solve

# c is a sympy Matrix object, numbers are in c[i,0]

c = [sym.simplify(c[i,0]) for i in range(c.shape[0])]

else:

c = mpmath.lu_solve(A, b) # numerical solve

c = [c[i,0] for i in range(c.rows)]

u = sum(c[i]*psi[i] for i in range(len(psi)))

return u, c

# The function is found in the file `approx1D.py`.

#

# ### Plotting the approximation

#

# Comparing the given $f(x)$ and the approximate $u(x)$ visually is done

# with the following function, which utilizes `sympy`'s `lambdify` tool to

# convert a `sympy` expression to a Python function for numerical

# computations:

# In[13]:

import numpy as np

import matplotlib.pyplot as plt

def comparison_plot(f, u, Omega, filename='tmp.pdf'):

x = sym.Symbol('x')

f = sym.lambdify([x], f, modules="numpy")

u = sym.lambdify([x], u, modules="numpy")

resolution = 401 # no of points in plot

xcoor = np.linspace(Omega[0], Omega[1], resolution)

exact = f(xcoor)

approx = u(xcoor)

plt.plot(xcoor, approx)

plt.plot(xcoor, exact)

plt.legend(['approximation', 'exact'])

# plt.savefig(filename)

# The `modules='numpy'` argument to `lambdify` is important

# if there are mathematical functions, such as `sin` or `exp`

# in the symbolic expressions in `f` or `u`, and these

# mathematical functions are to be used with vector arguments, like

# `xcoor` above.

#

# Both the `least_squares` and `comparison_plot` functions are found in

# the file [`approx1D.py`](src/approx1D.py). The

# `comparison_plot` function in this file is more advanced and flexible

# than the simplistic version shown above. The file [`ex_approx1D.py`](src/ex_approx1D.py)

# applies the `approx1D` module to accomplish the forthcoming examples.

# ## Perfect approximation

#

#

# Let us use the code above to recompute the problem from

# the section [Example of linear approximation](#fem:approx:global:linear) where we want to approximate

# a parabola. What happens if we add an element $x^2$ to the set of basis functions and test what

# the best approximation is if $V$ is the space of all parabolic functions?

# The answer is quickly found by running

# In[6]:

x = sym.Symbol('x')

f = 10*(x-1)**2-1

u, c = least_squares(f=f, psi=[1, x, x**2], Omega=[1, 2])

print(u)

print(sym.expand(f))

# Now, what if we use ${\psi}_i(x)=x^i$ for $i=0,1,\ldots,N=40$?

# The output from `least_squares` gives $c_i=0$ for $i>2$, which

# means that the method finds the perfect approximation.

# ### The residual: an indirect but computationally cheap measure of the error.

#

# When attempting to solve

# a system $A c = b$, we may question how far off a start vector or a current approximation $c_0$ is.

# The error is clearly the difference between $c$ and $c_0$, $e=c-c_0$, but since we do

# not know the true solution $c$ we are unable to assess the error.

# However, the vector $c_0$ is the solution of the an alternative problem $A c_0 = b_0$.

# If the input, i.e., the right-hand sides $b_0$ and $b$ are close to each other then

# we expect the output of a solution process $c$ and $c_0$ to be close to each other.

# Furthermore, while $b_0$ in principle is unknown, it is easily computable as $b_0 = A c_0$

# and does not require inversion of $A$.

# The vector $b - b_0$ is the so-called residual $r$ defined by

# $$

# r = b - b_0 = b - A c_0 = A c - A c_0 .

# $$

# Clearly, the error and the residual are related by

# $$

# A e = r .

# $$

# While the computation of the error requires inversion of $A$,

# which may be computationally expensive, the residual

# is easily computable and do only require a matrix-vector product and vector additions.

#

#

#

#

#

# # Orthogonal basis functions

#

# Approximation of a function via orthogonal functions, especially sinusoidal

# functions or orthogonal polynomials, is a very popular and successful approach.

# The finite element method does not make use of orthogonal functions, but

# functions that are "almost orthogonal".

#

#

# ## Ill-conditioning

#

#

# For basis functions that are not orthogonal the condition number

# of the matrix may create problems during the solution process

# due to, for example, round-off errors as will be illustrated in the

# following. The computational example in the section [Perfect approximation](#fem:approx:global:exact1)

# applies the `least_squares` function which invokes symbolic

# methods to calculate and solve the linear system. The correct

# solution $c_0=9, c_1=-20, c_2=10, c_i=0$ for $i\geq 3$ is perfectly

# recovered.

#

# Suppose we

# convert the matrix and right-hand side to floating-point arrays

# and then solve the system using finite-precision arithmetics, which

# is what one will (almost) always do in real life. This time we

# get astonishing results! Up to about $N=7$ we get a solution that

# is reasonably close to the exact one. Increasing $N$ shows that

# seriously wrong coefficients are computed.

# Below is a table showing the solution of the linear system arising from

# approximating a parabola

# by functions of the form $u(x)=c_0 + c_1x + c_2x^2 + \cdots + c_{10}x^{10}$.

# Analytically, we know that $c_j=0$ for $j>2$, but numerically we may get

# $c_j\neq 0$ for $j>2$.

#

#

#

#

#

#

# ## Implementation of the least squares method

#

#

# ### Symbolic integration

#

# The linear system can be computed either symbolically or

# numerically (a numerical integration rule is needed in the latter case).

# Let us first compute the system and its solution symbolically, i.e.,

# using classical "pen and paper" mathematics with symbols.

# The Python package `sympy` can greatly help with this type of

# mathematics, and will therefore be frequently used in this text.

# Some basic familiarity with `sympy` is assumed, typically

# `symbols`, `integrate`, `diff`, `expand`, and `simplify`. Much can be learned

# by studying the many applications of `sympy` that will be presented.

#

# Below is a function for symbolic computation of the linear system,

# where $f(x)$ is given as a `sympy` expression `f` involving

# the symbol `x`, `psi` is a list of expressions for $\left\{ {{\psi}}_i \right\}_{i\in{\mathcal{I}_s}}$,

# and `Omega` is a 2-tuple/list holding the limits of the domain $\Omega$:

# In[1]:

import sympy as sym

def least_squares(f, psi, Omega):

N = len(psi) - 1

A = sym.zeros(N+1, N+1)

b = sym.zeros(N+1, 1)

x = sym.Symbol('x')

for i in range(N+1):

for j in range(i, N+1):

A[i,j] = sym.integrate(psi[i]*psi[j],

(x, Omega[0], Omega[1]))

A[j,i] = A[i,j]

b[i,0] = sym.integrate(psi[i]*f, (x, Omega[0], Omega[1]))

c = A.LUsolve(b)

# Note: c is a sympy Matrix object, solution is in c[:,0]

u = 0

for i in range(len(psi)):

u += c[i,0]*psi[i]

return u, c

# Observe that we exploit the symmetry of the coefficient matrix:

# only the upper triangular part is computed. Symbolic integration, also in

# `sympy`, is often time consuming, and (roughly) halving the

# work has noticeable effect on the waiting time for the computations to

# finish.

#

# **Notice.**

#

# We remark that the symbols in `sympy` are created and stored in

# a symbol factory that is indexed by the expression used in the construction

# and that repeated constructions from the same expression will not create

# new objects. The following code illustrates the behavior of the

# symbol factory:

# In[2]:

from sympy import *

x0 = Symbol("x")

x1 = Symbol("x")

id(x0) ==id(x1)

# In[3]:

a0 = 3.0

a1 = 3.0

id(a0) ==id(a1)

# ### Fall back on numerical integration

#

# Obviously, `sympy` may fail to successfully integrate

# $\int_\Omega{\psi}_i{\psi}_j{\, \mathrm{d}x}$, and

# especially $\int_\Omega f{\psi}_i{\, \mathrm{d}x}$, symbolically.

# Therefore, we should extend

# the `least_squares` function such that it falls back on

# numerical integration if the symbolic integration is unsuccessful.

# In the latter case, the returned value from `sympy`'s

# `integrate` function is an object of type `Integral`.

# We can test on this type and utilize the `mpmath` module

# to perform numerical integration of high precision.

# Even when `sympy` manages to integrate symbolically, it can

# take an undesirably long time. We therefore include an

# argument `symbolic` that governs whether or not to try

# symbolic integration. Here is a complete and

# improved version of the previous function `least_squares`:

# In[11]:

def least_squares(f, psi, Omega, symbolic=True):

N = len(psi) - 1

A = sym.zeros(N+1, N+1)

b = sym.zeros(N+1, 1)

x = sym.Symbol('x')

for i in range(N+1):

for j in range(i, N+1):

integrand = psi[i]*psi[j]

if symbolic:

I = sym.integrate(integrand, (x, Omega[0], Omega[1]))

if not symbolic or isinstance(I, sym.Integral):

# Could not integrate symbolically, use numerical int.

integrand = sym.lambdify([x], integrand, 'mpmath')

I = mpmath.quad(integrand, [Omega[0], Omega[1]])

A[i,j] = A[j,i] = I

integrand = psi[i]*f

if symbolic:

I = sym.integrate(integrand, (x, Omega[0], Omega[1]))

if not symbolic or isinstance(I, sym.Integral):

# Could not integrate symbolically, use numerical int.

integrand = sym.lambdify([x], integrand, 'mpmath')

I = mpmath.quad(integrand, [Omega[0], Omega[1]])

b[i,0] = I

if symbolic:

c = A.LUsolve(b) # symbolic solve

# c is a sympy Matrix object, numbers are in c[i,0]

c = [sym.simplify(c[i,0]) for i in range(c.shape[0])]

else:

c = mpmath.lu_solve(A, b) # numerical solve

c = [c[i,0] for i in range(c.rows)]

u = sum(c[i]*psi[i] for i in range(len(psi)))

return u, c

# The function is found in the file `approx1D.py`.

#

# ### Plotting the approximation

#

# Comparing the given $f(x)$ and the approximate $u(x)$ visually is done

# with the following function, which utilizes `sympy`'s `lambdify` tool to

# convert a `sympy` expression to a Python function for numerical

# computations:

# In[13]:

import numpy as np

import matplotlib.pyplot as plt

def comparison_plot(f, u, Omega, filename='tmp.pdf'):

x = sym.Symbol('x')

f = sym.lambdify([x], f, modules="numpy")

u = sym.lambdify([x], u, modules="numpy")

resolution = 401 # no of points in plot

xcoor = np.linspace(Omega[0], Omega[1], resolution)

exact = f(xcoor)

approx = u(xcoor)

plt.plot(xcoor, approx)

plt.plot(xcoor, exact)

plt.legend(['approximation', 'exact'])

# plt.savefig(filename)

# The `modules='numpy'` argument to `lambdify` is important

# if there are mathematical functions, such as `sin` or `exp`

# in the symbolic expressions in `f` or `u`, and these

# mathematical functions are to be used with vector arguments, like

# `xcoor` above.

#

# Both the `least_squares` and `comparison_plot` functions are found in

# the file [`approx1D.py`](src/approx1D.py). The

# `comparison_plot` function in this file is more advanced and flexible

# than the simplistic version shown above. The file [`ex_approx1D.py`](src/ex_approx1D.py)

# applies the `approx1D` module to accomplish the forthcoming examples.

# ## Perfect approximation

#

#

# Let us use the code above to recompute the problem from

# the section [Example of linear approximation](#fem:approx:global:linear) where we want to approximate

# a parabola. What happens if we add an element $x^2$ to the set of basis functions and test what

# the best approximation is if $V$ is the space of all parabolic functions?

# The answer is quickly found by running

# In[6]:

x = sym.Symbol('x')

f = 10*(x-1)**2-1

u, c = least_squares(f=f, psi=[1, x, x**2], Omega=[1, 2])

print(u)

print(sym.expand(f))

# Now, what if we use ${\psi}_i(x)=x^i$ for $i=0,1,\ldots,N=40$?

# The output from `least_squares` gives $c_i=0$ for $i>2$, which

# means that the method finds the perfect approximation.

# ### The residual: an indirect but computationally cheap measure of the error.

#

# When attempting to solve

# a system $A c = b$, we may question how far off a start vector or a current approximation $c_0$ is.

# The error is clearly the difference between $c$ and $c_0$, $e=c-c_0$, but since we do

# not know the true solution $c$ we are unable to assess the error.

# However, the vector $c_0$ is the solution of the an alternative problem $A c_0 = b_0$.

# If the input, i.e., the right-hand sides $b_0$ and $b$ are close to each other then

# we expect the output of a solution process $c$ and $c_0$ to be close to each other.

# Furthermore, while $b_0$ in principle is unknown, it is easily computable as $b_0 = A c_0$

# and does not require inversion of $A$.

# The vector $b - b_0$ is the so-called residual $r$ defined by

# $$

# r = b - b_0 = b - A c_0 = A c - A c_0 .

# $$

# Clearly, the error and the residual are related by

# $$

# A e = r .

# $$

# While the computation of the error requires inversion of $A$,

# which may be computationally expensive, the residual

# is easily computable and do only require a matrix-vector product and vector additions.

#

#

#

#

#

# # Orthogonal basis functions

#

# Approximation of a function via orthogonal functions, especially sinusoidal

# functions or orthogonal polynomials, is a very popular and successful approach.

# The finite element method does not make use of orthogonal functions, but

# functions that are "almost orthogonal".

#

#

# ## Ill-conditioning

#

#

# For basis functions that are not orthogonal the condition number

# of the matrix may create problems during the solution process

# due to, for example, round-off errors as will be illustrated in the

# following. The computational example in the section [Perfect approximation](#fem:approx:global:exact1)

# applies the `least_squares` function which invokes symbolic

# methods to calculate and solve the linear system. The correct

# solution $c_0=9, c_1=-20, c_2=10, c_i=0$ for $i\geq 3$ is perfectly

# recovered.

#

# Suppose we

# convert the matrix and right-hand side to floating-point arrays

# and then solve the system using finite-precision arithmetics, which

# is what one will (almost) always do in real life. This time we

# get astonishing results! Up to about $N=7$ we get a solution that

# is reasonably close to the exact one. Increasing $N$ shows that

# seriously wrong coefficients are computed.

# Below is a table showing the solution of the linear system arising from

# approximating a parabola

# by functions of the form $u(x)=c_0 + c_1x + c_2x^2 + \cdots + c_{10}x^{10}$.

# Analytically, we know that $c_j=0$ for $j>2$, but numerically we may get

# $c_j\neq 0$ for $j>2$.

#

# | exact | sympy | numpy32 | numpy64 |

|---|---|---|---|

| 9 | 9.62 | 5.57 | 8.98 |

| -20 | -23.39 | -7.65 | -19.93 |

| 10 | 17.74 | -4.50 | 9.96 |

| 0 | -9.19 | 4.13 | -0.26 |

| 0 | 5.25 | 2.99 | 0.72 |

| 0 | 0.18 | -1.21 | -0.93 |

| 0 | -2.48 | -0.41 | 0.73 |

| 0 | 1.81 | -0.013 | -0.36 |

| 0 | -0.66 | 0.08 | 0.11 |

| 0 | 0.12 | 0.04 | -0.02 |

| 0 | -0.001 | -0.02 | 0.002 |

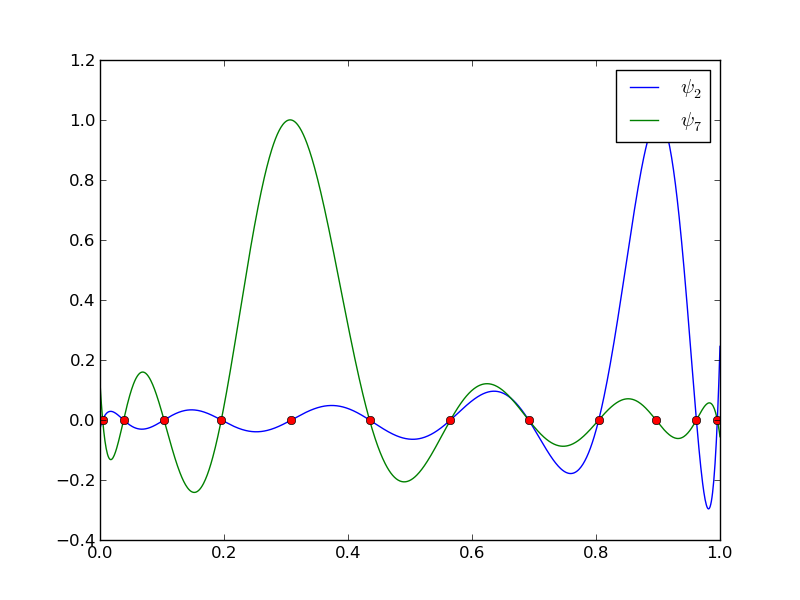

The 15 first basis functions $x^i$, $i=0,\ldots,14$.

# #

#

#

#

# On the other hand, the double precision `numpy` solver does run for

# $N=100$, resulting in answers that are not significantly worse than

# those in the table above, and large powers are

# associated with small coefficients (e.g., $c_j < 10^{-2}$ for $10\leq

# j\leq 20$ and $c_j<10^{-5}$ for $j > 20$). Even for $N=100$ the

# approximation still lies on top of the exact curve in a plot (!).

#

# The conclusion is that visual inspection of the quality of the approximation

# may not uncover fundamental numerical problems with the computations.

# However, numerical analysts have studied approximations and ill-conditioning

# for decades, and it is well known that the basis $\{1,x,x^2,x^3,\ldots,\}$

# is a bad basis. The best basis from a matrix conditioning point of view

# is to have orthogonal functions such that $(\psi_i,\psi_j)=0$ for

# $i\neq j$. There are many known sets of orthogonal polynomials and

# other functions.

# The functions used in the finite element method are almost orthogonal,

# and this property helps to avoid problems when solving matrix systems.

# Almost orthogonal is helpful, but not enough when it comes to

# partial differential equations, and ill-conditioning

# of the coefficient matrix is a theme when solving large-scale matrix

# systems arising from finite element discretizations.

#

#

# ## Fourier series

#

#

# A set of sine functions is widely used for approximating functions

# (note that the sines are orthogonal with respect to the $L_2$ inner product as can be easily

# verified using `sympy`). Let us take

# $$

# V = \hbox{span}\,\{ \sin \pi x, \sin 2\pi x,\ldots,\sin (N+1)\pi x\}

# {\thinspace .}

# $$

# That is,

# $$

# {\psi}_i(x) = \sin ((i+1)\pi x),\quad i\in{\mathcal{I}_s}{\thinspace .}

# $$

# An approximation to the parabola $f(x)=10(x-1)^2-1$ for $x\in\Omega=[1,2]$ from

# the section [Example of linear approximation](#fem:approx:global:linear) can then be computed by the

# `least_squares` function from the section [Implementation of the least squares method](#fem:approx:global:LS:code):

# In[14]:

N = 3

import sympy as sym

x = sym.Symbol('x')

psi = [sym.sin(sym.pi*(i+1)*x) for i in range(N+1)]

f = 10*(x-1)**2 - 1

Omega = [0, 1]

u, c = least_squares(f, psi, Omega)

comparison_plot(f, u, Omega)

# [Figure](#fem:approx:global:fig:parabola:sine1) (left) shows the oscillatory approximation

# of $\sum_{j=0}^Nc_j\sin ((j+1)\pi x)$ when $N=3$.

# Changing $N$ to 11 improves the approximation considerably, see

# [Figure](#fem:approx:global:fig:parabola:sine1) (right).

#

#

#

#

#

#

#

#

#

#

# On the other hand, the double precision `numpy` solver does run for

# $N=100$, resulting in answers that are not significantly worse than

# those in the table above, and large powers are

# associated with small coefficients (e.g., $c_j < 10^{-2}$ for $10\leq

# j\leq 20$ and $c_j<10^{-5}$ for $j > 20$). Even for $N=100$ the

# approximation still lies on top of the exact curve in a plot (!).

#

# The conclusion is that visual inspection of the quality of the approximation