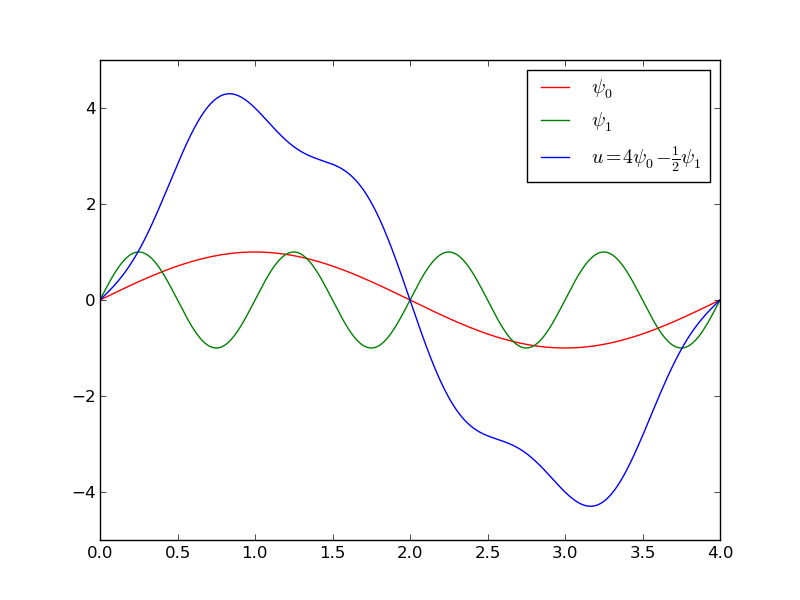

A function resulting from a weighted sum of two sine basis functions.

# #

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

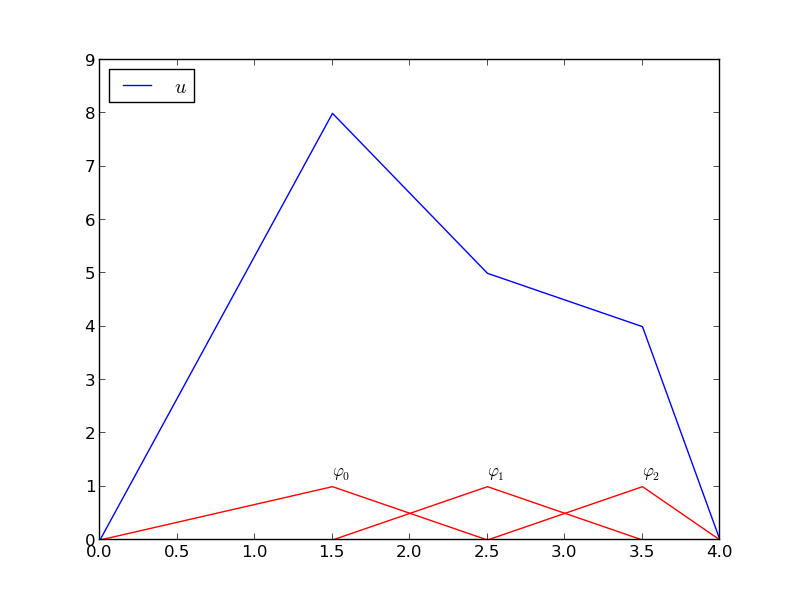

# A function resulting from a weighted sum of three local piecewise linear (hat) functions.

# #

#

#

#

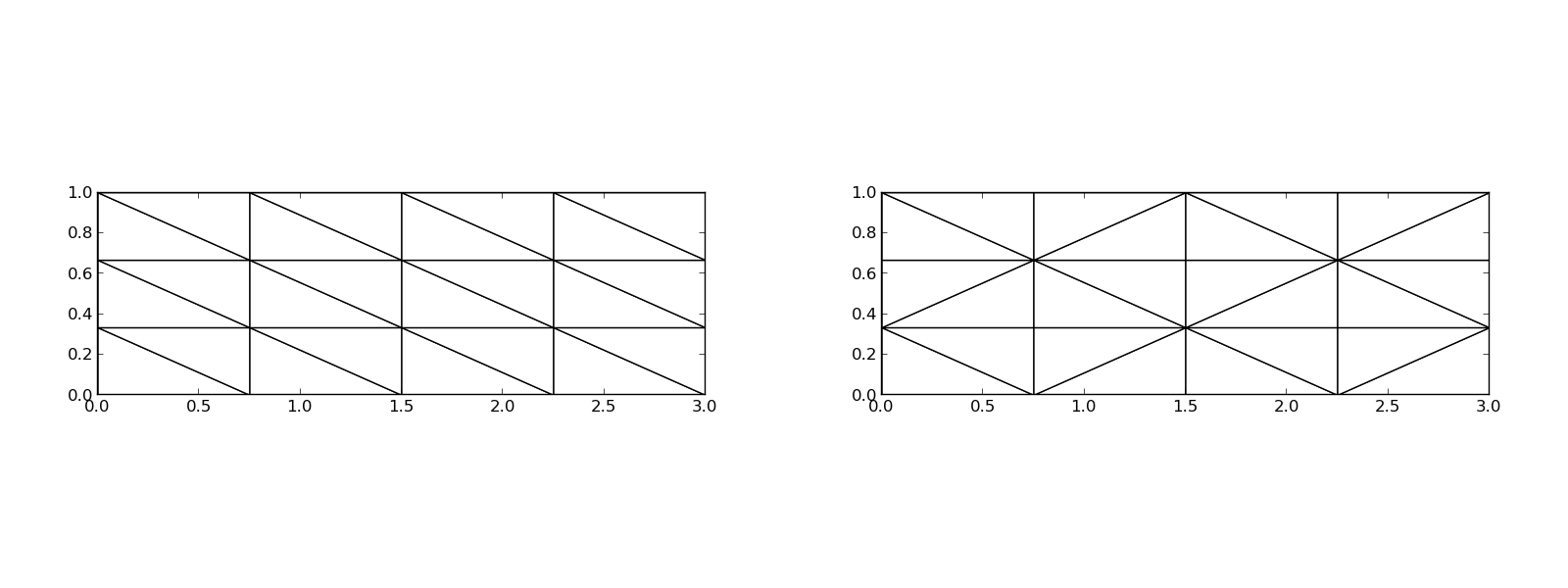

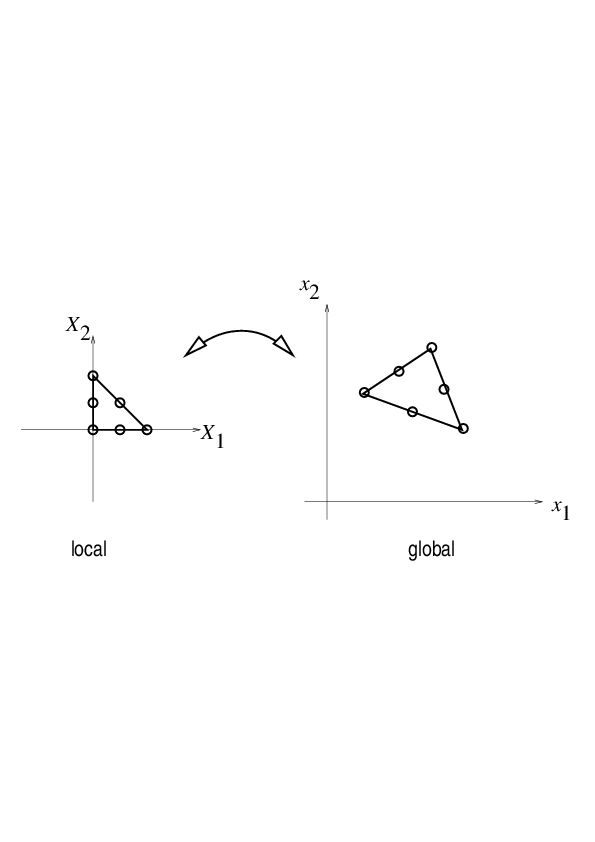

# We first introduce the concepts of elements and nodes in a simplistic fashion.

# Later, we shall generalize the concept

# of an element, which is a necessary step before treating a wider class of

# approximations within the family of finite element methods.

# The generalization is also compatible with

# the concepts used in the [FEniCS](http://fenicsproject.org) finite

# element software.

#

# ## Elements and nodes

#

#

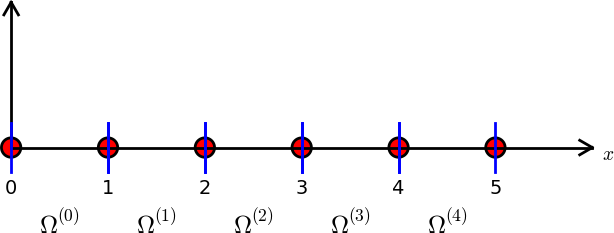

# Let $u$ and $f$ be defined on an interval $\Omega$. We divide $\Omega$

# into $N_e$ non-overlapping subintervals $\Omega^{(e)}$,

# $e=0,\ldots,N_e-1$:

#

#

#

# $$

# \begin{equation}

# \Omega = \Omega^{(0)}\cup \cdots \cup \Omega^{(N_e)}{\thinspace .} \label{_auto32} \tag{68}

# \end{equation}

# $$

# We shall for now refer to $\Omega^{(e)}$ as an *element*, identified

# by the unique number $e$. On each element we introduce a set of

# points called *nodes*. For now we assume that the nodes are uniformly

# spaced throughout the element and that the boundary points of the

# elements are also nodes. The nodes are given numbers both within an

# element and in the global domain. These are referred to as *local* and

# *global* node numbers, respectively. Local nodes are numbered with an

# index $r=0,\ldots,d$, while the $N_n$ global nodes are numbered as

# $i=0,\ldots,N_n-1$. [Figure](#fem:approx:fe:def:elements:nodes:fig:P1) shows nodes as small

# circular disks and element boundaries as small vertical lines. Global

# node numbers appear under the nodes, but local node numbers are not

# shown. Since there are two nodes in each element, the local nodes are

# numbered 0 (left) and 1 (right) in each element.

#

#

#

#

#

#

#

#

#

#

# We first introduce the concepts of elements and nodes in a simplistic fashion.

# Later, we shall generalize the concept

# of an element, which is a necessary step before treating a wider class of

# approximations within the family of finite element methods.

# The generalization is also compatible with

# the concepts used in the [FEniCS](http://fenicsproject.org) finite

# element software.

#

# ## Elements and nodes

#

#

# Let $u$ and $f$ be defined on an interval $\Omega$. We divide $\Omega$

# into $N_e$ non-overlapping subintervals $\Omega^{(e)}$,

# $e=0,\ldots,N_e-1$:

#

#

#

# $$

# \begin{equation}

# \Omega = \Omega^{(0)}\cup \cdots \cup \Omega^{(N_e)}{\thinspace .} \label{_auto32} \tag{68}

# \end{equation}

# $$

# We shall for now refer to $\Omega^{(e)}$ as an *element*, identified

# by the unique number $e$. On each element we introduce a set of

# points called *nodes*. For now we assume that the nodes are uniformly

# spaced throughout the element and that the boundary points of the

# elements are also nodes. The nodes are given numbers both within an

# element and in the global domain. These are referred to as *local* and

# *global* node numbers, respectively. Local nodes are numbered with an

# index $r=0,\ldots,d$, while the $N_n$ global nodes are numbered as

# $i=0,\ldots,N_n-1$. [Figure](#fem:approx:fe:def:elements:nodes:fig:P1) shows nodes as small

# circular disks and element boundaries as small vertical lines. Global

# node numbers appear under the nodes, but local node numbers are not

# shown. Since there are two nodes in each element, the local nodes are

# numbered 0 (left) and 1 (right) in each element.

#

#

#

#

#

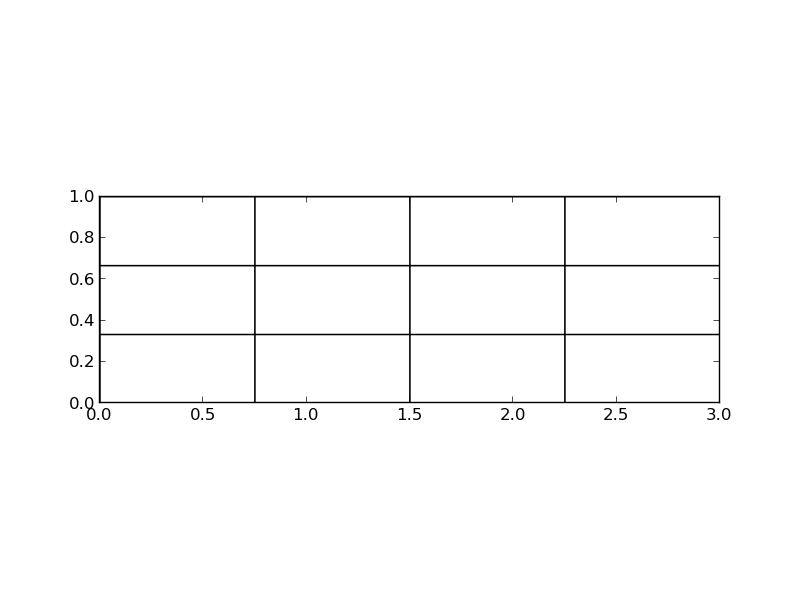

# Finite element mesh with 5 elements and 6 nodes.

# #

#

#

#

#

# Nodes and elements uniquely define a *finite element mesh*, which is

# our discrete representation of the domain in the computations. A

# common special case is that of a *uniformly partitioned mesh* where

# each element has the same length and the distance between nodes is

# constant. [Figure](#fem:approx:fe:def:elements:nodes:fig:P1) shows

# an example on a uniformly partitioned mesh. The strength of the finite

# element method (in contrast to the finite difference method) is that

# it is just as easy to work with a non-uniformly partitioned mesh in 3D as a

# uniformly partitioned mesh in 1D.

#

# ### Example

#

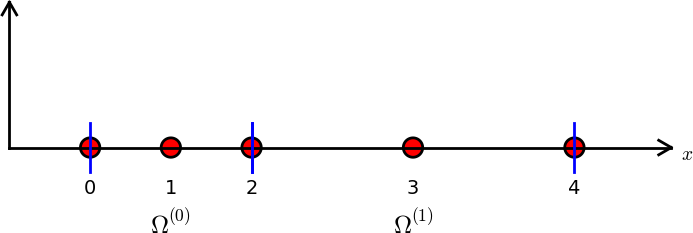

# On $\Omega =[0,1]$ we may introduce two elements,

# $\Omega^{(0)}=[0,0.4]$ and $\Omega^{(1)}=[0.4,1]$. Furthermore, let us

# introduce three nodes per element, equally spaced within each element.

# [Figure](#fem:approx:fe:def:elements:nodes:fig:P2) shows the mesh

# with $N_e=2$ elements and $N_n=2N_e+1=5$ nodes. A node's coordinate

# is denoted by $x_i$, where $i$ is either a global node number or a

# local one. In the latter case we also need to know the element number

# to uniquely define the node.

#

# The three nodes in element number 0 are $x_0=0$, $x_1=0.2$, and

# $x_2=0.4$. The local and global node numbers are here equal. In

# element number 1, we have the local nodes $x_0=0.4$, $x_1=0.7$, and

# $x_2=1$ and the corresponding global nodes $x_2=0.4$, $x_3=0.7$, and

# $x_4=1$. Note that the global node $x_2=0.4$ is shared by the two

# elements.

#

#

#

#

#

#

#

#

#

#

#

# Nodes and elements uniquely define a *finite element mesh*, which is

# our discrete representation of the domain in the computations. A

# common special case is that of a *uniformly partitioned mesh* where

# each element has the same length and the distance between nodes is

# constant. [Figure](#fem:approx:fe:def:elements:nodes:fig:P1) shows

# an example on a uniformly partitioned mesh. The strength of the finite

# element method (in contrast to the finite difference method) is that

# it is just as easy to work with a non-uniformly partitioned mesh in 3D as a

# uniformly partitioned mesh in 1D.

#

# ### Example

#

# On $\Omega =[0,1]$ we may introduce two elements,

# $\Omega^{(0)}=[0,0.4]$ and $\Omega^{(1)}=[0.4,1]$. Furthermore, let us

# introduce three nodes per element, equally spaced within each element.

# [Figure](#fem:approx:fe:def:elements:nodes:fig:P2) shows the mesh

# with $N_e=2$ elements and $N_n=2N_e+1=5$ nodes. A node's coordinate

# is denoted by $x_i$, where $i$ is either a global node number or a

# local one. In the latter case we also need to know the element number

# to uniquely define the node.

#

# The three nodes in element number 0 are $x_0=0$, $x_1=0.2$, and

# $x_2=0.4$. The local and global node numbers are here equal. In

# element number 1, we have the local nodes $x_0=0.4$, $x_1=0.7$, and

# $x_2=1$ and the corresponding global nodes $x_2=0.4$, $x_3=0.7$, and

# $x_4=1$. Note that the global node $x_2=0.4$ is shared by the two

# elements.

#

#

#

#

#

# Finite element mesh with 2 elements and 5 nodes.

# #

#

#

#

# For the purpose of implementation, we introduce two lists or arrays:

# `nodes` for storing the coordinates of the nodes, with the global node

# numbers as indices, and `elements` for holding the global node numbers

# in each element. By defining `elements` as a list of lists, where each

# sublist contains the global node numbers of one particular element,

# the indices of each sublist will correspond to local node numbers for

# that element. The `nodes` and `elements` lists for the sample mesh

# above take the form

# In[1]:

nodes = [0, 0.2, 0.4, 0.7, 1]

elements = [[0, 1, 2], [2, 3, 4]]

# Looking up the coordinate of, e.g., local node number 2 in element 1,

# is done by `nodes[elements[1][2]]` (recall that nodes and

# elements start their numbering at 0). The corresponding global node number

# is 4, so we could alternatively look up the coordinate as `nodes[4]`.

#

# The numbering of elements and nodes does not need to be regular.

# [Figure](#fem:approx:fe:def:elements:nodes:fig:P1:irregular) shows

# an example corresponding to

# In[2]:

nodes = [1.5, 5.5, 4.2, 0.3, 2.2, 3.1]

elements = [[2, 1], [4, 5], [0, 4], [3, 0], [5, 2]]

#

#

#

#

#

#

#

#

#

# For the purpose of implementation, we introduce two lists or arrays:

# `nodes` for storing the coordinates of the nodes, with the global node

# numbers as indices, and `elements` for holding the global node numbers

# in each element. By defining `elements` as a list of lists, where each

# sublist contains the global node numbers of one particular element,

# the indices of each sublist will correspond to local node numbers for

# that element. The `nodes` and `elements` lists for the sample mesh

# above take the form

# In[1]:

nodes = [0, 0.2, 0.4, 0.7, 1]

elements = [[0, 1, 2], [2, 3, 4]]

# Looking up the coordinate of, e.g., local node number 2 in element 1,

# is done by `nodes[elements[1][2]]` (recall that nodes and

# elements start their numbering at 0). The corresponding global node number

# is 4, so we could alternatively look up the coordinate as `nodes[4]`.

#

# The numbering of elements and nodes does not need to be regular.

# [Figure](#fem:approx:fe:def:elements:nodes:fig:P1:irregular) shows

# an example corresponding to

# In[2]:

nodes = [1.5, 5.5, 4.2, 0.3, 2.2, 3.1]

elements = [[2, 1], [4, 5], [0, 4], [3, 0], [5, 2]]

#

#

#

#

# Example on irregular numbering of elements and nodes.

# #

#

#

#

# ## The basis functions

#

# ### Construction principles

#

# Finite element basis functions are in this text recognized by

# the notation ${\varphi}_i(x)$, where the index (now in the beginning)

# corresponds to

# a global node number. Since ${\psi}_i$ is the symbol for basis

# functions in general in this text, the particular choice of

# finite element basis functions means that we take

# ${\psi}_i = {\varphi}_i$.

#

#

#

# Let $i$ be the global node number corresponding to local node $r$ in

# element number $e$ with $d+1$ local nodes. We distinguish between

# *internal* nodes in an element and *shared* nodes. The latter are

# nodes that are shared with the neighboring elements.

# The finite element basis functions ${\varphi}_i$

# are now defined as follows.

#

# * For an internal node, with global number $i$ and local number $r$,

# take ${\varphi}_i(x)$ to be the Lagrange

# polynomial that is 1 at the local node $r$ and zero

# at all other nodes in the element.

# The degree of the polynomial is $d$.

# On all other elements, ${\varphi}_i=0$.

#

# * For a shared node,

# let ${\varphi}_i$ be made up of the Lagrange polynomial on this element

# that is 1 at node $i$, combined with the Lagrange polynomial over

# the neighboring element that is also 1 at node $i$.

# On all other elements, ${\varphi}_i=0$.

#

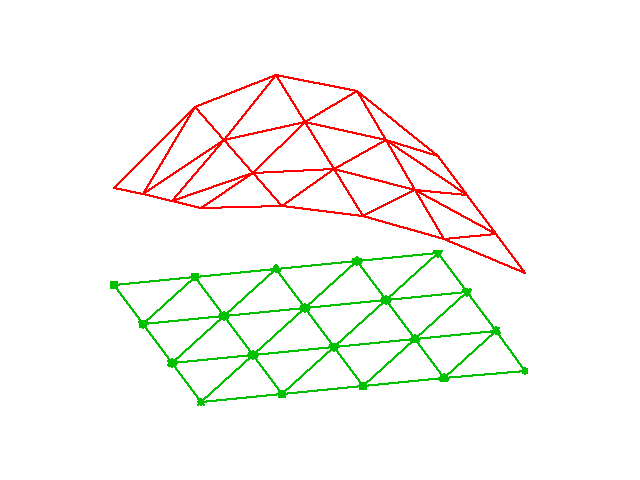

# A visual impression of three such basis functions is given in

# [Figure](#fem:approx:fe:fig:P2). When solving differential equations,

# we need the derivatives of these basis functions as well, and the

# corresponding derivatives are shown in [Figure](#fem:approx:fe:fig:dP2).

# Note that the derivatives are highly discontinuous!

# In these figures,

# the domain $\Omega = [0,1]$ is divided into four equal-sized elements,

# each having three local nodes.

# The element boundaries are

# marked by vertical dashed lines and the nodes by small circles.

# The function ${\varphi}_2(x)$

# is composed of a quadratic Lagrange polynomial over element 0 and 1,

# ${\varphi}_3(x)$ corresponds to an internal node in element 1 and

# is therefore nonzero on this element only, while ${\varphi}_4(x)$

# is like ${\varphi}_2(x)$ composed to two Lagrange polynomials over two

# elements. Also observe that the basis function ${\varphi}_i$ is zero at

# all nodes, except at global node number $i$.

# We also remark that

# the shape of a basis function over an element is completely determined

# by the coordinates of the local nodes in the element.

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

# ## The basis functions

#

# ### Construction principles

#

# Finite element basis functions are in this text recognized by

# the notation ${\varphi}_i(x)$, where the index (now in the beginning)

# corresponds to

# a global node number. Since ${\psi}_i$ is the symbol for basis

# functions in general in this text, the particular choice of

# finite element basis functions means that we take

# ${\psi}_i = {\varphi}_i$.

#

#

#

# Let $i$ be the global node number corresponding to local node $r$ in

# element number $e$ with $d+1$ local nodes. We distinguish between

# *internal* nodes in an element and *shared* nodes. The latter are

# nodes that are shared with the neighboring elements.

# The finite element basis functions ${\varphi}_i$

# are now defined as follows.

#

# * For an internal node, with global number $i$ and local number $r$,

# take ${\varphi}_i(x)$ to be the Lagrange

# polynomial that is 1 at the local node $r$ and zero

# at all other nodes in the element.

# The degree of the polynomial is $d$.

# On all other elements, ${\varphi}_i=0$.

#

# * For a shared node,

# let ${\varphi}_i$ be made up of the Lagrange polynomial on this element

# that is 1 at node $i$, combined with the Lagrange polynomial over

# the neighboring element that is also 1 at node $i$.

# On all other elements, ${\varphi}_i=0$.

#

# A visual impression of three such basis functions is given in

# [Figure](#fem:approx:fe:fig:P2). When solving differential equations,

# we need the derivatives of these basis functions as well, and the

# corresponding derivatives are shown in [Figure](#fem:approx:fe:fig:dP2).

# Note that the derivatives are highly discontinuous!

# In these figures,

# the domain $\Omega = [0,1]$ is divided into four equal-sized elements,

# each having three local nodes.

# The element boundaries are

# marked by vertical dashed lines and the nodes by small circles.

# The function ${\varphi}_2(x)$

# is composed of a quadratic Lagrange polynomial over element 0 and 1,

# ${\varphi}_3(x)$ corresponds to an internal node in element 1 and

# is therefore nonzero on this element only, while ${\varphi}_4(x)$

# is like ${\varphi}_2(x)$ composed to two Lagrange polynomials over two

# elements. Also observe that the basis function ${\varphi}_i$ is zero at

# all nodes, except at global node number $i$.

# We also remark that

# the shape of a basis function over an element is completely determined

# by the coordinates of the local nodes in the element.

#

#

#

#

#

#

#

#

#

#

# Illustration of the piecewise quadratic basis functions associated with nodes in an element.

# #

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

# Illustration of the derivatives of the piecewise quadratic basis functions associated with nodes in an element.

# #

#

#

#

# ### Properties of ${\varphi}_i$

#

# The construction of basis functions according to the principles above

# lead to two important properties of ${\varphi}_i(x)$. First,

#

#

#

# $$

# \begin{equation}

# {\varphi}_i(x_{j}) =\delta_{ij},\quad \delta_{ij} =

# \left\lbrace\begin{array}{ll}

# 1, & i=j,\\

# 0, & i\neq j,

# \end{array}\right.

# \label{fem:approx:fe:phi:prop1} \tag{69}

# \end{equation}

# $$

# when $x_{j}$ is a node in the mesh with global node number $j$.

# The

# result ${\varphi}_i(x_{j}) =\delta_{ij}$ is obtained as

# the Lagrange polynomials are constructed to have

# exactly this property.

# The property also implies a convenient interpretation of $c_i$

# as the value of $u$ at node $i$. To show this, we expand $u$

# in the usual way as $\sum_jc_j{\psi}_j$ and choose ${\psi}_i = {\varphi}_i$:

# $$

# u(x_{i}) = \sum_{j\in{\mathcal{I}_s}} c_j {\psi}_j (x_{i}) =

# \sum_{j\in{\mathcal{I}_s}} c_j {\varphi}_j (x_{i}) = c_i {\varphi}_i (x_{i}) = c_i

# {\thinspace .}

# $$

# Because of this interpretation,

# the coefficient $c_i$ is by many named $u_i$ or $U_i$.

#

#

#

# Second,

# ${\varphi}_i(x)$ is mostly zero throughout the domain:

#

# * ${\varphi}_i(x) \neq 0$ only on those elements that contain global node $i$,

#

# * ${\varphi}_i(x){\varphi}_j(x) \neq 0$ if and only if $i$ and $j$ are global node

# numbers in the same element.

#

# Since $A_{i,j}$ is the integral of

# ${\varphi}_i{\varphi}_j$ it means that

# *most of the elements in the coefficient matrix will be zero*.

# We will come back to these properties and use

# them actively in computations to save memory and CPU time.

#

# In our example so far, each element has $d+1$ nodes, resulting in

# local Lagrange polynomials of degree $d$, but it is not a requirement to have

# the same $d$ value in each element.

#

# ## Example on quadratic finite element functions

#

# Let us set up the `nodes` and `elements` lists corresponding to the

# mesh implied by [Figure](#fem:approx:fe:fig:P2).

# [Figure](#fem:approx:fe:fig:P2:mesh) sketches the mesh and the

# numbering. We have

# In[3]:

nodes = [0, 0.125, 0.25, 0.375, 0.5, 0.625, 0.75, 0.875, 1.0]

elements = [[0, 1, 2], [2, 3, 4], [4, 5, 6], [6, 7, 8]]

#

#

#

#

#

#

#

#

#

# ### Properties of ${\varphi}_i$

#

# The construction of basis functions according to the principles above

# lead to two important properties of ${\varphi}_i(x)$. First,

#

#

#

# $$

# \begin{equation}

# {\varphi}_i(x_{j}) =\delta_{ij},\quad \delta_{ij} =

# \left\lbrace\begin{array}{ll}

# 1, & i=j,\\

# 0, & i\neq j,

# \end{array}\right.

# \label{fem:approx:fe:phi:prop1} \tag{69}

# \end{equation}

# $$

# when $x_{j}$ is a node in the mesh with global node number $j$.

# The

# result ${\varphi}_i(x_{j}) =\delta_{ij}$ is obtained as

# the Lagrange polynomials are constructed to have

# exactly this property.

# The property also implies a convenient interpretation of $c_i$

# as the value of $u$ at node $i$. To show this, we expand $u$

# in the usual way as $\sum_jc_j{\psi}_j$ and choose ${\psi}_i = {\varphi}_i$:

# $$

# u(x_{i}) = \sum_{j\in{\mathcal{I}_s}} c_j {\psi}_j (x_{i}) =

# \sum_{j\in{\mathcal{I}_s}} c_j {\varphi}_j (x_{i}) = c_i {\varphi}_i (x_{i}) = c_i

# {\thinspace .}

# $$

# Because of this interpretation,

# the coefficient $c_i$ is by many named $u_i$ or $U_i$.

#

#

#

# Second,

# ${\varphi}_i(x)$ is mostly zero throughout the domain:

#

# * ${\varphi}_i(x) \neq 0$ only on those elements that contain global node $i$,

#

# * ${\varphi}_i(x){\varphi}_j(x) \neq 0$ if and only if $i$ and $j$ are global node

# numbers in the same element.

#

# Since $A_{i,j}$ is the integral of

# ${\varphi}_i{\varphi}_j$ it means that

# *most of the elements in the coefficient matrix will be zero*.

# We will come back to these properties and use

# them actively in computations to save memory and CPU time.

#

# In our example so far, each element has $d+1$ nodes, resulting in

# local Lagrange polynomials of degree $d$, but it is not a requirement to have

# the same $d$ value in each element.

#

# ## Example on quadratic finite element functions

#

# Let us set up the `nodes` and `elements` lists corresponding to the

# mesh implied by [Figure](#fem:approx:fe:fig:P2).

# [Figure](#fem:approx:fe:fig:P2:mesh) sketches the mesh and the

# numbering. We have

# In[3]:

nodes = [0, 0.125, 0.25, 0.375, 0.5, 0.625, 0.75, 0.875, 1.0]

elements = [[0, 1, 2], [2, 3, 4], [4, 5, 6], [6, 7, 8]]

#

#

#

#

# Sketch of mesh with 4 elements and 3 nodes per element.

# #

#

#

#

#

# Let us explain mathematically how the basis functions are constructed

# according to the principles.

# Consider element number 1 in [Figure](#fem:approx:fe:fig:P2:mesh),

# $\Omega^{(1)}=[0.25, 0.5]$, with local nodes

# 0, 1, and 2 corresponding to global nodes 2, 3, and 4.

# The coordinates of these nodes are

# $0.25$, $0.375$, and $0.5$, respectively.

# We define three Lagrange

# polynomials on this element:

#

# 1. The polynomial that is 1 at local node 1

# (global node 3) makes up the basis function

# ${\varphi}_3(x)$ over this element,

# with ${\varphi}_3(x)=0$ outside the element.

#

# 2. The polynomial that is 1 at local node 0 (global node 2) is the "right

# part" of the global basis function

# ${\varphi}_2(x)$. The "left part" of ${\varphi}_2(x)$ consists of

# a Lagrange polynomial associated with local node 2 in

# the neighboring element $\Omega^{(0)}=[0, 0.25]$.

#

# 3. Finally, the polynomial that is 1 at local node 2 (global node 4)

# is the "left part" of the global basis function ${\varphi}_4(x)$.

# The "right part" comes from the Lagrange polynomial that is 1 at

# local node 0 in the neighboring element $\Omega^{(2)}=[0.5, 0.75]$.

#

# The specific mathematical form of the polynomials *over element* 1 is

# given by the formula ([56](#fem:approx:global:Lagrange:poly)):

# $$

# \begin{align*}

# {\varphi}_3(x) &= \frac{(x-0.25)(x-0.5)}{(0.375-0.25)(0.375-0.5)},\quad x\in\Omega^{(1)}\\

# {\varphi}_2(x) &= \frac{(x-0.375)(x-0.5)}{(0.25-0.375)(0.25-0.5)},\quad x\in\Omega^{(1)}\\

# {\varphi}_4(x) &= \frac{(x-0.25)(x-0.375)}{(0.5-0.25)(0.5-0.25)},\quad x\in\Omega^{(1)} .

# \end{align*}

# $$

# As mentioned earlier, any global basis function ${\varphi}_i(x)$ is zero

# on elements that do not contain the node with global node number $i$.

# Clearly, the property ([69](#fem:approx:fe:phi:prop1)) is easily

# verified, see for instance that ${\varphi}_3(0.375) = 1$ while

# ${\varphi}_3(0.25) = 0$ and ${\varphi}_3(0.5) = 0$.

#

# The other global functions associated with internal nodes,

# ${\varphi}_1$, ${\varphi}_5$, and ${\varphi}_7$, are all of the same shape

# as the drawn ${\varphi}_3$ in [Figure](#fem:approx:fe:fig:P2), while

# the global basis functions associated with shared nodes have the same

# shape as shown ${\varphi}_2$ and ${\varphi}_4$. If the elements were of

# different length, the basis functions would be stretched according to

# the element size and hence be different.

#

#

#

#

#

#

#

#

#

#

#

#

#

# ## Example on linear finite element functions

#

#

# [Figure](#fem:approx:fe:fig:P1) shows

# piecewise linear basis functions ($d=1$) (with derivatives in

# [Figure](#fem:approx:fe:fig:dP1)). These are mathematically

# simpler than the quadratic functions in the previous section, and one would

# therefore think that it is easier to understand the linear functions

# first. However, linear basis functions do not involve internal nodes

# and are therefore a special case of the general situation. That is why

# we think it is better to understand the construction of quadratic functions

# first, which easily generalize to any $d > 2$, and then look at the

# special case $d=1$.

#

#

#

#

#

#

#

#

#

#

#

# Let us explain mathematically how the basis functions are constructed

# according to the principles.

# Consider element number 1 in [Figure](#fem:approx:fe:fig:P2:mesh),

# $\Omega^{(1)}=[0.25, 0.5]$, with local nodes

# 0, 1, and 2 corresponding to global nodes 2, 3, and 4.

# The coordinates of these nodes are

# $0.25$, $0.375$, and $0.5$, respectively.

# We define three Lagrange

# polynomials on this element:

#

# 1. The polynomial that is 1 at local node 1

# (global node 3) makes up the basis function

# ${\varphi}_3(x)$ over this element,

# with ${\varphi}_3(x)=0$ outside the element.

#

# 2. The polynomial that is 1 at local node 0 (global node 2) is the "right

# part" of the global basis function

# ${\varphi}_2(x)$. The "left part" of ${\varphi}_2(x)$ consists of

# a Lagrange polynomial associated with local node 2 in

# the neighboring element $\Omega^{(0)}=[0, 0.25]$.

#

# 3. Finally, the polynomial that is 1 at local node 2 (global node 4)

# is the "left part" of the global basis function ${\varphi}_4(x)$.

# The "right part" comes from the Lagrange polynomial that is 1 at

# local node 0 in the neighboring element $\Omega^{(2)}=[0.5, 0.75]$.

#

# The specific mathematical form of the polynomials *over element* 1 is

# given by the formula ([56](#fem:approx:global:Lagrange:poly)):

# $$

# \begin{align*}

# {\varphi}_3(x) &= \frac{(x-0.25)(x-0.5)}{(0.375-0.25)(0.375-0.5)},\quad x\in\Omega^{(1)}\\

# {\varphi}_2(x) &= \frac{(x-0.375)(x-0.5)}{(0.25-0.375)(0.25-0.5)},\quad x\in\Omega^{(1)}\\

# {\varphi}_4(x) &= \frac{(x-0.25)(x-0.375)}{(0.5-0.25)(0.5-0.25)},\quad x\in\Omega^{(1)} .

# \end{align*}

# $$

# As mentioned earlier, any global basis function ${\varphi}_i(x)$ is zero

# on elements that do not contain the node with global node number $i$.

# Clearly, the property ([69](#fem:approx:fe:phi:prop1)) is easily

# verified, see for instance that ${\varphi}_3(0.375) = 1$ while

# ${\varphi}_3(0.25) = 0$ and ${\varphi}_3(0.5) = 0$.

#

# The other global functions associated with internal nodes,

# ${\varphi}_1$, ${\varphi}_5$, and ${\varphi}_7$, are all of the same shape

# as the drawn ${\varphi}_3$ in [Figure](#fem:approx:fe:fig:P2), while

# the global basis functions associated with shared nodes have the same

# shape as shown ${\varphi}_2$ and ${\varphi}_4$. If the elements were of

# different length, the basis functions would be stretched according to

# the element size and hence be different.

#

#

#

#

#

#

#

#

#

#

#

#

#

# ## Example on linear finite element functions

#

#

# [Figure](#fem:approx:fe:fig:P1) shows

# piecewise linear basis functions ($d=1$) (with derivatives in

# [Figure](#fem:approx:fe:fig:dP1)). These are mathematically

# simpler than the quadratic functions in the previous section, and one would

# therefore think that it is easier to understand the linear functions

# first. However, linear basis functions do not involve internal nodes

# and are therefore a special case of the general situation. That is why

# we think it is better to understand the construction of quadratic functions

# first, which easily generalize to any $d > 2$, and then look at the

# special case $d=1$.

#

#

#

#

#

# Illustration of the piecewise linear basis functions associated with nodes in an element.

# #

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

# Illustration of the derivatives of piecewise linear basis functions associated with nodes in an element.

# #

#

#

#

#

# We have the same four elements on $\Omega = [0,1]$. Now there are no

# internal nodes in the elements so that all basis functions are

# associated with shared nodes and hence made up of two Lagrange

# polynomials, one from each of the two neighboring elements. For

# example, ${\varphi}_1(x)$ results from the Lagrange polynomial in

# element 0 that is 1 at local node 1 and 0 at local node 0, combined

# with the Lagrange polynomial in element 1 that is 1 at local node 0

# and 0 at local node 1. The other basis functions are constructed

# similarly.

#

# Explicit mathematical formulas are needed for ${\varphi}_i(x)$ in

# computations. In the piecewise linear case, the formula

# ([56](#fem:approx:global:Lagrange:poly)) leads to

#

#

#

# $$

# \begin{equation}

# {\varphi}_i(x) = \left\lbrace\begin{array}{ll}

# 0, & x < x_{i-1},\\

# (x - x_{i-1})/(x_{i} - x_{i-1}),

# & x_{i-1} \leq x < x_{i},\\

# 1 -

# (x - x_{i})/(x_{i+1} - x_{i}),

# & x_{i} \leq x < x_{i+1},\\

# 0, & x\geq x_{i+1}{\thinspace .} \end{array}

# \right.

# \label{fem:approx:fe:phi:1:formula1} \tag{70}

# \end{equation}

# $$

# Here, $x_{j}$, $j=i-1,i,i+1$, denotes the coordinate of node $j$.

# For elements of equal length $h$ the formulas can be simplified to

#

#

#

# $$

# \begin{equation}

# {\varphi}_i(x) = \left\lbrace\begin{array}{ll}

# 0, & x < x_{i-1},\\

# (x - x_{i-1})/h,

# & x_{i-1} \leq x < x_{i},\\

# 1 -

# (x - x_{i})/h,

# & x_{i} \leq x < x_{i+1},\\

# 0, & x\geq x_{i+1} .

# \end{array}

# \right.

# \label{fem:approx:fe:phi:1:formula2} \tag{71}

# \end{equation}

# $$

# ## Example on cubic finite element functions

#

# Piecewise cubic basis functions can be defined by introducing four

# nodes per element. [Figure](#fem:approx:fe:fig:P3) shows

# examples on ${\varphi}_i(x)$, $i=3,4,5,6$, associated with element number 1.

# Note that ${\varphi}_4$ and ${\varphi}_5$ are nonzero only on element number 1,

# while

# ${\varphi}_3$ and ${\varphi}_6$ are made up of Lagrange polynomials on two

# neighboring elements.

#

#

#

#

#

#

#

#

#

#

#

# We have the same four elements on $\Omega = [0,1]$. Now there are no

# internal nodes in the elements so that all basis functions are

# associated with shared nodes and hence made up of two Lagrange

# polynomials, one from each of the two neighboring elements. For

# example, ${\varphi}_1(x)$ results from the Lagrange polynomial in

# element 0 that is 1 at local node 1 and 0 at local node 0, combined

# with the Lagrange polynomial in element 1 that is 1 at local node 0

# and 0 at local node 1. The other basis functions are constructed

# similarly.

#

# Explicit mathematical formulas are needed for ${\varphi}_i(x)$ in

# computations. In the piecewise linear case, the formula

# ([56](#fem:approx:global:Lagrange:poly)) leads to

#

#

#

# $$

# \begin{equation}

# {\varphi}_i(x) = \left\lbrace\begin{array}{ll}

# 0, & x < x_{i-1},\\

# (x - x_{i-1})/(x_{i} - x_{i-1}),

# & x_{i-1} \leq x < x_{i},\\

# 1 -

# (x - x_{i})/(x_{i+1} - x_{i}),

# & x_{i} \leq x < x_{i+1},\\

# 0, & x\geq x_{i+1}{\thinspace .} \end{array}

# \right.

# \label{fem:approx:fe:phi:1:formula1} \tag{70}

# \end{equation}

# $$

# Here, $x_{j}$, $j=i-1,i,i+1$, denotes the coordinate of node $j$.

# For elements of equal length $h$ the formulas can be simplified to

#

#

#

# $$

# \begin{equation}

# {\varphi}_i(x) = \left\lbrace\begin{array}{ll}

# 0, & x < x_{i-1},\\

# (x - x_{i-1})/h,

# & x_{i-1} \leq x < x_{i},\\

# 1 -

# (x - x_{i})/h,

# & x_{i} \leq x < x_{i+1},\\

# 0, & x\geq x_{i+1} .

# \end{array}

# \right.

# \label{fem:approx:fe:phi:1:formula2} \tag{71}

# \end{equation}

# $$

# ## Example on cubic finite element functions

#

# Piecewise cubic basis functions can be defined by introducing four

# nodes per element. [Figure](#fem:approx:fe:fig:P3) shows

# examples on ${\varphi}_i(x)$, $i=3,4,5,6$, associated with element number 1.

# Note that ${\varphi}_4$ and ${\varphi}_5$ are nonzero only on element number 1,

# while

# ${\varphi}_3$ and ${\varphi}_6$ are made up of Lagrange polynomials on two

# neighboring elements.

#

#

#

#

#

# Illustration of the piecewise cubic basis functions associated with nodes in an element.

# #

#

#

#

#

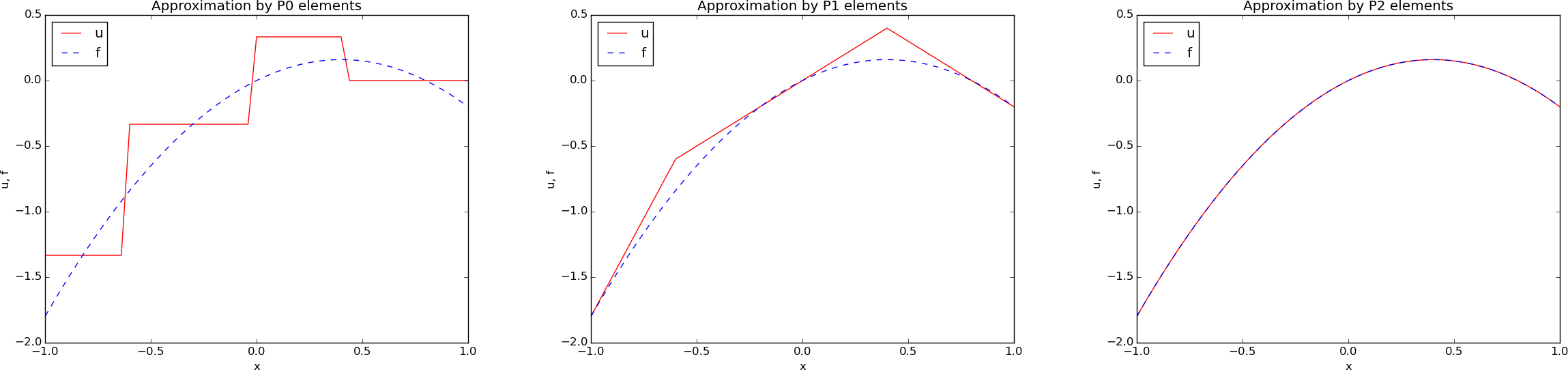

# We see that all the piecewise linear basis functions have the same

# "hat" shape. They are naturally referred to as *hat functions*,

# also called *chapeau functions*.

# The piecewise quadratic functions in [Figure](#fem:approx:fe:fig:P2)

# are seen to be of two types. "Rounded hats" associated with internal

# nodes in the elements and some more "sombrero" shaped hats associated

# with element boundary nodes. Higher-order basis functions also have

# hat-like shapes, but the functions have pronounced oscillations in addition,

# as illustrated in [Figure](#fem:approx:fe:fig:P3).

#

#

# A common terminology is to speak about *linear elements* as

# elements with two local nodes associated with

# piecewise linear basis functions. Similarly, *quadratic elements* and

# *cubic elements* refer to piecewise quadratic or cubic functions

# over elements with three or four local nodes, respectively.

# Alternative names, frequently used in the following, are **P1** for linear

# elements, **P2** for quadratic elements, and so forth: P$d$ signifies

# degree $d$ of the polynomial basis functions.

#

#

# ## Calculating the linear system

#

#

# The elements in the coefficient matrix and right-hand side are given

# by the formulas ([28](#fem:approx:Aij)) and ([29](#fem:approx:bi)), but

# now the choice of ${\psi}_i$ is ${\varphi}_i$. Consider P1 elements

# where ${\varphi}_i(x)$ is piecewise linear. Nodes and elements numbered

# consecutively from left to right in a uniformly partitioned mesh imply

# the nodes

# $$

# x_i=i h,\quad i=0,\ldots,N_n-1,

# $$

# and the elements

#

#

#

# $$

# \begin{equation}

# \Omega^{(i)} = [x_{i},x_{i+1}] = [i h, (i+1)h],\quad

# i=0,\ldots,N_e-1

# {\thinspace .}

# \label{_auto33} \tag{72}

# \end{equation}

# $$

# We have in this case $N_e$ elements and $N_n=N_e+1$ nodes.

# The parameter $N$ denotes the number of unknowns in the expansion

# for $u$, and with the P1 elements, $N=N_n$.

# The domain is $\Omega=[x_{0},x_{N}]$.

# The formula for ${\varphi}_i(x)$ is given by

# ([71](#fem:approx:fe:phi:1:formula2)) and a graphical illustration is

# provided in Figures [fem:approx:fe:fig:P1](#fem:approx:fe:fig:P1) and

# [fem:approx:fe:fig:phi:i:im1](#fem:approx:fe:fig:phi:i:im1).

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

# We see that all the piecewise linear basis functions have the same

# "hat" shape. They are naturally referred to as *hat functions*,

# also called *chapeau functions*.

# The piecewise quadratic functions in [Figure](#fem:approx:fe:fig:P2)

# are seen to be of two types. "Rounded hats" associated with internal

# nodes in the elements and some more "sombrero" shaped hats associated

# with element boundary nodes. Higher-order basis functions also have

# hat-like shapes, but the functions have pronounced oscillations in addition,

# as illustrated in [Figure](#fem:approx:fe:fig:P3).

#

#

# A common terminology is to speak about *linear elements* as

# elements with two local nodes associated with

# piecewise linear basis functions. Similarly, *quadratic elements* and

# *cubic elements* refer to piecewise quadratic or cubic functions

# over elements with three or four local nodes, respectively.

# Alternative names, frequently used in the following, are **P1** for linear

# elements, **P2** for quadratic elements, and so forth: P$d$ signifies

# degree $d$ of the polynomial basis functions.

#

#

# ## Calculating the linear system

#

#

# The elements in the coefficient matrix and right-hand side are given

# by the formulas ([28](#fem:approx:Aij)) and ([29](#fem:approx:bi)), but

# now the choice of ${\psi}_i$ is ${\varphi}_i$. Consider P1 elements

# where ${\varphi}_i(x)$ is piecewise linear. Nodes and elements numbered

# consecutively from left to right in a uniformly partitioned mesh imply

# the nodes

# $$

# x_i=i h,\quad i=0,\ldots,N_n-1,

# $$

# and the elements

#

#

#

# $$

# \begin{equation}

# \Omega^{(i)} = [x_{i},x_{i+1}] = [i h, (i+1)h],\quad

# i=0,\ldots,N_e-1

# {\thinspace .}

# \label{_auto33} \tag{72}

# \end{equation}

# $$

# We have in this case $N_e$ elements and $N_n=N_e+1$ nodes.

# The parameter $N$ denotes the number of unknowns in the expansion

# for $u$, and with the P1 elements, $N=N_n$.

# The domain is $\Omega=[x_{0},x_{N}]$.

# The formula for ${\varphi}_i(x)$ is given by

# ([71](#fem:approx:fe:phi:1:formula2)) and a graphical illustration is

# provided in Figures [fem:approx:fe:fig:P1](#fem:approx:fe:fig:P1) and

# [fem:approx:fe:fig:phi:i:im1](#fem:approx:fe:fig:phi:i:im1).

#

#

#

#

#

#

#

#

#

#

#

#

#

# Illustration of the piecewise linear basis functions corresponding to global node 2 and 3.

# #

#

#

#

# ### Calculating specific matrix entries

#

# Let us calculate the specific matrix entry $A_{2,3} = \int_\Omega

# {\varphi}_2{\varphi}_3{\, \mathrm{d}x}$. [Figure](#fem:approx:fe:fig:phi:2:3)

# shows what ${\varphi}_2$ and ${\varphi}_3$ look like. We realize

# from this figure that the product ${\varphi}_2{\varphi}_3\neq 0$

# only over element 2, which contains node 2 and 3.

# The particular formulas for ${\varphi}_{2}(x)$ and ${\varphi}_3(x)$ on

# $[x_{2},x_{3}]$ are found from ([71](#fem:approx:fe:phi:1:formula2)).

# The function

# ${\varphi}_3$ has positive slope over $[x_{2},x_{3}]$ and corresponds

# to the interval $[x_{i-1},x_{i}]$ in

# ([71](#fem:approx:fe:phi:1:formula2)). With $i=3$ we get

# $$

# {\varphi}_3(x) = (x-x_2)/h,

# $$

# while ${\varphi}_2(x)$ has negative slope over $[x_{2},x_{3}]$

# and corresponds to setting $i=2$ in ([71](#fem:approx:fe:phi:1:formula2)),

# $$

# {\varphi}_2(x) = 1- (x-x_2)/h{\thinspace .}

# $$

# We can now easily integrate,

# $$

# A_{2,3} = \int_\Omega {\varphi}_2{\varphi}_{3}{\, \mathrm{d}x} =

# \int_{x_{2}}^{x_{3}}

# \left(1 - \frac{x - x_{2}}{h}\right) \frac{x - x_{2}}{h}

# {\, \mathrm{d}x} = \frac{h}{6}{\thinspace .}

# $$

# The diagonal entry in the coefficient matrix becomes

# $$

# A_{2,2} =

# \int_{x_{1}}^{x_{2}}

# \left(\frac{x - x_{1}}{h}\right)^2{\, \mathrm{d}x} +

# \int_{x_{2}}^{x_{3}}

# \left(1 - \frac{x - x_{2}}{h}\right)^2{\, \mathrm{d}x}

# = \frac{2h}{3}{\thinspace .}

# $$

# The entry $A_{2,1}$ has an

# integral that is geometrically similar to the situation in

# [Figure](#fem:approx:fe:fig:phi:2:3), so we get

# $A_{2,1}=h/6$.

#

#

# ### Calculating a general row in the matrix

#

# We can now generalize the calculation of matrix entries to

# a general row number $i$. The entry

# $A_{i,i-1}=\int_\Omega{\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}$ involves

# hat functions as depicted in

# [Figure](#fem:approx:fe:fig:phi:i:im1). Since the integral

# is geometrically identical to the situation with specific nodes

# 2 and 3, we realize that $A_{i,i-1}=A_{i,i+1}=h/6$ and

# $A_{i,i}=2h/3$. However, we can compute the integral directly

# too:

# $$

# \begin{align*}

# A_{i,i-1} &= \int_\Omega {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}\\

# &=

# \underbrace{\int_{x_{i-2}}^{x_{i-1}} {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}}_{{\varphi}_i=0} +

# \int_{x_{i-1}}^{x_{i}} {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x} +

# \underbrace{\int_{x_{i}}^{x_{i+1}} {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}}_{{\varphi}_{i-1}=0}\\

# &= \int_{x_{i-1}}^{x_{i}}

# \underbrace{\left(\frac{x - x_{i}}{h}\right)}_{{\varphi}_i(x)}

# \underbrace{\left(1 - \frac{x - x_{i-1}}{h}\right)}_{{\varphi}_{i-1}(x)} {\, \mathrm{d}x} =

# \frac{h}{6}

# {\thinspace .}

# \end{align*}

# $$

# The particular formulas for ${\varphi}_{i-1}(x)$ and ${\varphi}_i(x)$ on

# $[x_{i-1},x_{i}]$ are found from ([71](#fem:approx:fe:phi:1:formula2)):

# ${\varphi}_i$ is the linear function with positive slope, corresponding

# to the interval $[x_{i-1},x_{i}]$ in

# ([71](#fem:approx:fe:phi:1:formula2)), while $\phi_{i-1}$ has a

# negative slope so the definition in interval

# $[x_{i},x_{i+1}]$ in ([71](#fem:approx:fe:phi:1:formula2)) must be

# used.

#

#

#

#

#

#

#

#

#

#

# ### Calculating specific matrix entries

#

# Let us calculate the specific matrix entry $A_{2,3} = \int_\Omega

# {\varphi}_2{\varphi}_3{\, \mathrm{d}x}$. [Figure](#fem:approx:fe:fig:phi:2:3)

# shows what ${\varphi}_2$ and ${\varphi}_3$ look like. We realize

# from this figure that the product ${\varphi}_2{\varphi}_3\neq 0$

# only over element 2, which contains node 2 and 3.

# The particular formulas for ${\varphi}_{2}(x)$ and ${\varphi}_3(x)$ on

# $[x_{2},x_{3}]$ are found from ([71](#fem:approx:fe:phi:1:formula2)).

# The function

# ${\varphi}_3$ has positive slope over $[x_{2},x_{3}]$ and corresponds

# to the interval $[x_{i-1},x_{i}]$ in

# ([71](#fem:approx:fe:phi:1:formula2)). With $i=3$ we get

# $$

# {\varphi}_3(x) = (x-x_2)/h,

# $$

# while ${\varphi}_2(x)$ has negative slope over $[x_{2},x_{3}]$

# and corresponds to setting $i=2$ in ([71](#fem:approx:fe:phi:1:formula2)),

# $$

# {\varphi}_2(x) = 1- (x-x_2)/h{\thinspace .}

# $$

# We can now easily integrate,

# $$

# A_{2,3} = \int_\Omega {\varphi}_2{\varphi}_{3}{\, \mathrm{d}x} =

# \int_{x_{2}}^{x_{3}}

# \left(1 - \frac{x - x_{2}}{h}\right) \frac{x - x_{2}}{h}

# {\, \mathrm{d}x} = \frac{h}{6}{\thinspace .}

# $$

# The diagonal entry in the coefficient matrix becomes

# $$

# A_{2,2} =

# \int_{x_{1}}^{x_{2}}

# \left(\frac{x - x_{1}}{h}\right)^2{\, \mathrm{d}x} +

# \int_{x_{2}}^{x_{3}}

# \left(1 - \frac{x - x_{2}}{h}\right)^2{\, \mathrm{d}x}

# = \frac{2h}{3}{\thinspace .}

# $$

# The entry $A_{2,1}$ has an

# integral that is geometrically similar to the situation in

# [Figure](#fem:approx:fe:fig:phi:2:3), so we get

# $A_{2,1}=h/6$.

#

#

# ### Calculating a general row in the matrix

#

# We can now generalize the calculation of matrix entries to

# a general row number $i$. The entry

# $A_{i,i-1}=\int_\Omega{\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}$ involves

# hat functions as depicted in

# [Figure](#fem:approx:fe:fig:phi:i:im1). Since the integral

# is geometrically identical to the situation with specific nodes

# 2 and 3, we realize that $A_{i,i-1}=A_{i,i+1}=h/6$ and

# $A_{i,i}=2h/3$. However, we can compute the integral directly

# too:

# $$

# \begin{align*}

# A_{i,i-1} &= \int_\Omega {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}\\

# &=

# \underbrace{\int_{x_{i-2}}^{x_{i-1}} {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}}_{{\varphi}_i=0} +

# \int_{x_{i-1}}^{x_{i}} {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x} +

# \underbrace{\int_{x_{i}}^{x_{i+1}} {\varphi}_i{\varphi}_{i-1}{\, \mathrm{d}x}}_{{\varphi}_{i-1}=0}\\

# &= \int_{x_{i-1}}^{x_{i}}

# \underbrace{\left(\frac{x - x_{i}}{h}\right)}_{{\varphi}_i(x)}

# \underbrace{\left(1 - \frac{x - x_{i-1}}{h}\right)}_{{\varphi}_{i-1}(x)} {\, \mathrm{d}x} =

# \frac{h}{6}

# {\thinspace .}

# \end{align*}

# $$

# The particular formulas for ${\varphi}_{i-1}(x)$ and ${\varphi}_i(x)$ on

# $[x_{i-1},x_{i}]$ are found from ([71](#fem:approx:fe:phi:1:formula2)):

# ${\varphi}_i$ is the linear function with positive slope, corresponding

# to the interval $[x_{i-1},x_{i}]$ in

# ([71](#fem:approx:fe:phi:1:formula2)), while $\phi_{i-1}$ has a

# negative slope so the definition in interval

# $[x_{i},x_{i+1}]$ in ([71](#fem:approx:fe:phi:1:formula2)) must be

# used.

#

#

#

#

#

# Illustration of two neighboring linear (hat) functions with general node numbers.

# #

#

#

#

#

# The first and last row of the coefficient matrix lead to slightly

# different integrals:

# $$

# A_{0,0} = \int_\Omega {\varphi}_0^2{\, \mathrm{d}x} = \int_{x_{0}}^{x_{1}}

# \left(1 - \frac{x-x_0}{h}\right)^2{\, \mathrm{d}x} = \frac{h}{3}{\thinspace .}

# $$

# Similarly, $A_{N,N}$ involves an integral over only one element

# and hence equals $h/3$.

#

#

#

#

#

#

#

#

#

#

#

# The first and last row of the coefficient matrix lead to slightly

# different integrals:

# $$

# A_{0,0} = \int_\Omega {\varphi}_0^2{\, \mathrm{d}x} = \int_{x_{0}}^{x_{1}}

# \left(1 - \frac{x-x_0}{h}\right)^2{\, \mathrm{d}x} = \frac{h}{3}{\thinspace .}

# $$

# Similarly, $A_{N,N}$ involves an integral over only one element

# and hence equals $h/3$.

#

#

#

#

#

# Right-hand side integral with the product of a basis function and the given function to approximate.

# #

#

#

#

#

# The general formula for $b_i$,

# see [Figure](#fem:approx:fe:fig:phi:i:f), is now easy to set up

#

#

#

# $$

# \begin{equation}

# b_i = \int_\Omega{\varphi}_i(x)f(x){\, \mathrm{d}x}

# = \int_{x_{i-1}}^{x_{i}} \frac{x - x_{i-1}}{h} f(x){\, \mathrm{d}x}

# + \int_{x_{i}}^{x_{i+1}} \left(1 - \frac{x - x_{i}}{h}\right) f(x)

# {\, \mathrm{d}x}{\thinspace .}

# \label{fem:approx:fe:bi:formula1} \tag{73}

# \end{equation}

# $$

# We remark that the above formula applies to internal nodes (living at the interface between two elements)

# and that for the nodes on the boundaries only one integral needs to be computed.

#

# We need a specific $f(x)$ function to compute these integrals.

# With $f(x)=x(1-x)$ and

# two equal-sized elements in $\Omega=[0,1]$, one gets

# $$

# A = \frac{h}{6}\left(\begin{array}{ccc}

# 2 & 1 & 0\\

# 1 & 4 & 1\\

# 0 & 1 & 2

# \end{array}\right),\quad

# b = \frac{h^2}{12}\left(\begin{array}{c}

# 2 - h\\

# 12 - 14h\\

# 10 -17h

# \end{array}\right){\thinspace .}

# $$

# The solution becomes

# $$

# c_0 = \frac{h^2}{6},\quad c_1 = h - \frac{5}{6}h^2,\quad

# c_2 = 2h - \frac{23}{6}h^2{\thinspace .}

# $$

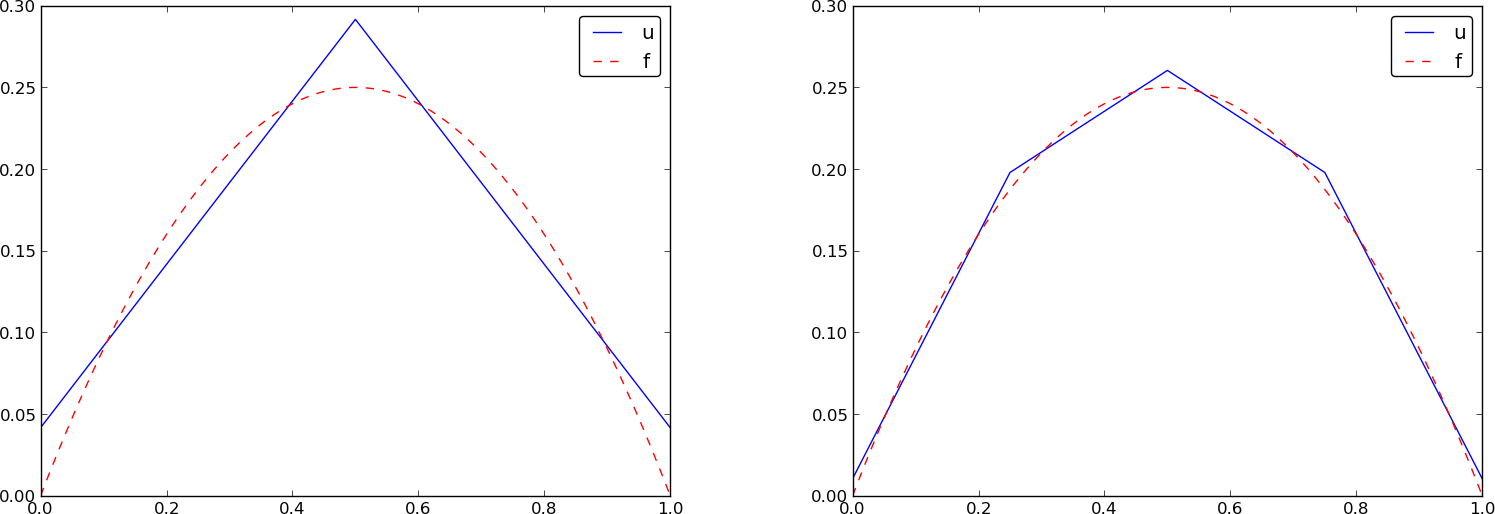

# The resulting function

# $$

# u(x)=c_0{\varphi}_0(x) + c_1{\varphi}_1(x) + c_2{\varphi}_2(x)

# $$

# is displayed in [Figure](#fem:approx:fe:fig:ls:P1:2:4) (left).

# Doubling the number of elements to four leads to the improved

# approximation in the right part of [Figure](#fem:approx:fe:fig:ls:P1:2:4).

#

#

#

#

#

#

#

#

#

#

#

# The general formula for $b_i$,

# see [Figure](#fem:approx:fe:fig:phi:i:f), is now easy to set up

#

#

#

# $$

# \begin{equation}

# b_i = \int_\Omega{\varphi}_i(x)f(x){\, \mathrm{d}x}

# = \int_{x_{i-1}}^{x_{i}} \frac{x - x_{i-1}}{h} f(x){\, \mathrm{d}x}

# + \int_{x_{i}}^{x_{i+1}} \left(1 - \frac{x - x_{i}}{h}\right) f(x)

# {\, \mathrm{d}x}{\thinspace .}

# \label{fem:approx:fe:bi:formula1} \tag{73}

# \end{equation}

# $$

# We remark that the above formula applies to internal nodes (living at the interface between two elements)

# and that for the nodes on the boundaries only one integral needs to be computed.

#

# We need a specific $f(x)$ function to compute these integrals.

# With $f(x)=x(1-x)$ and

# two equal-sized elements in $\Omega=[0,1]$, one gets

# $$

# A = \frac{h}{6}\left(\begin{array}{ccc}

# 2 & 1 & 0\\

# 1 & 4 & 1\\

# 0 & 1 & 2

# \end{array}\right),\quad

# b = \frac{h^2}{12}\left(\begin{array}{c}

# 2 - h\\

# 12 - 14h\\

# 10 -17h

# \end{array}\right){\thinspace .}

# $$

# The solution becomes

# $$

# c_0 = \frac{h^2}{6},\quad c_1 = h - \frac{5}{6}h^2,\quad

# c_2 = 2h - \frac{23}{6}h^2{\thinspace .}

# $$

# The resulting function

# $$

# u(x)=c_0{\varphi}_0(x) + c_1{\varphi}_1(x) + c_2{\varphi}_2(x)

# $$

# is displayed in [Figure](#fem:approx:fe:fig:ls:P1:2:4) (left).

# Doubling the number of elements to four leads to the improved

# approximation in the right part of [Figure](#fem:approx:fe:fig:ls:P1:2:4).

#

#

#

#

#

# Least squares approximation of a parabola using 2 (left) and 4 (right) P1 elements.

# #

#

#

#

#

#

# ## Assembly of elementwise computations

#

#

# Our integral computations so far have been straightforward. However,

# with higher-degree polynomials and in higher dimensions (2D and 3D),

# integrating in the physical domain gets increasingly complicated. Instead,

# integrating over one element at a time, and transforming each element

# to a common standardized geometry in a new reference coordinate system,

# is technically easier. Almost all computer codes employ a finite element

# algorithm that calculates the linear system by integrating over one

# element at a time. We shall therefore explain this algorithm next.

# The amount of details might be overwhelming during a first reading, but

# once all those details are done right, one has a general

# finite element algorithm that can be applied to all sorts of elements,

# in any space dimension, no matter how geometrically complicated the domain

# is.

#

#

# ### The element matrix

#

# We start by splitting

# the integral over $\Omega$ into a sum of contributions from

# each element:

#

#

#

# $$

# \begin{equation}

# A_{i,j} = \int_\Omega{\varphi}_i{\varphi}_j {\, \mathrm{d}x} = \sum_{e} A^{(e)}_{i,j},\quad

# A^{(e)}_{i,j}=\int_{\Omega^{(e)}} {\varphi}_i{\varphi}_j {\, \mathrm{d}x}

# {\thinspace .}

# \label{fem:approx:fe:elementwise:Asplit} \tag{74}

# \end{equation}

# $$

# Now, $A^{(e)}_{i,j}\neq 0$, if and only if, $i$ and $j$ are nodes in element

# $e$ (look at [Figure](#fem:approx:fe:fig:phi:i:im1) to realize this

# property, but the result also holds for all types of elements).

# Introduce $i=q(e,r)$ as the mapping of local node number $r$ in element

# $e$ to the global node number $i$. This is just a short mathematical notation

# for the expression `i=elements[e][r]` in a program.

# Let $r$ and $s$ be the local node numbers corresponding to the global

# node numbers $i=q(e,r)$ and

# $j=q(e,s)$. With $d+1$ nodes per element, all the nonzero matrix entries

# in $A^{(e)}_{i,j}$ arise from the integrals involving basis functions with

# indices corresponding to the global node numbers in element number $e$:

# $$

# \int_{\Omega^{(e)}}{\varphi}_{q(e,r)}{\varphi}_{q(e,s)} {\, \mathrm{d}x},

# \quad r,s=0,\ldots, d{\thinspace .}

# $$

# These contributions can be collected in a $(d+1)\times (d+1)$ matrix known as

# the *element matrix*. Let ${I_d}=\{0,\ldots,d\}$ be the valid indices

# of $r$ and $s$.

# We introduce the notation

# $$

# \tilde A^{(e)} = \{ \tilde A^{(e)}_{r,s}\},\quad

# r,s\in{I_d},

# $$

# for the element matrix. For P1 elements ($d=2$) we have

# $$

# \tilde A^{(e)} = \left\lbrack\begin{array}{ll}

# \tilde A^{(e)}_{0,0} & \tilde A^{(e)}_{0,1}\\

# \tilde A^{(e)}_{1,0} & \tilde A^{(e)}_{1,1}

# \end{array}\right\rbrack

# {\thinspace .}

# $$

# while P2 elements have a $3\times 3$ element matrix:

# $$

# \tilde A^{(e)} = \left\lbrack\begin{array}{lll}

# \tilde A^{(e)}_{0,0} & \tilde A^{(e)}_{0,1} & \tilde A^{(e)}_{0,2}\\

# \tilde A^{(e)}_{1,0} & \tilde A^{(e)}_{1,1} & \tilde A^{(e)}_{1,2}\\

# \tilde A^{(e)}_{2,0} & \tilde A^{(e)}_{2,1} & \tilde A^{(e)}_{2,2}

# \end{array}\right\rbrack

# {\thinspace .}

# $$

# ### Assembly of element matrices

#

# Given the numbers $\tilde A^{(e)}_{r,s}$,

# we should, according to ([74](#fem:approx:fe:elementwise:Asplit)),

# add the contributions to the global coefficient matrix by

#

#

#

# $$

# \begin{equation}

# A_{q(e,r),q(e,s)} := A_{q(e,r),q(e,s)} + \tilde A^{(e)}_{r,s},\quad

# r,s\in{I_d}{\thinspace .}

# \label{_auto34} \tag{75}

# \end{equation}

# $$

# This process of adding in elementwise contributions to the global matrix

# is called *finite element assembly* or simply *assembly*.

#

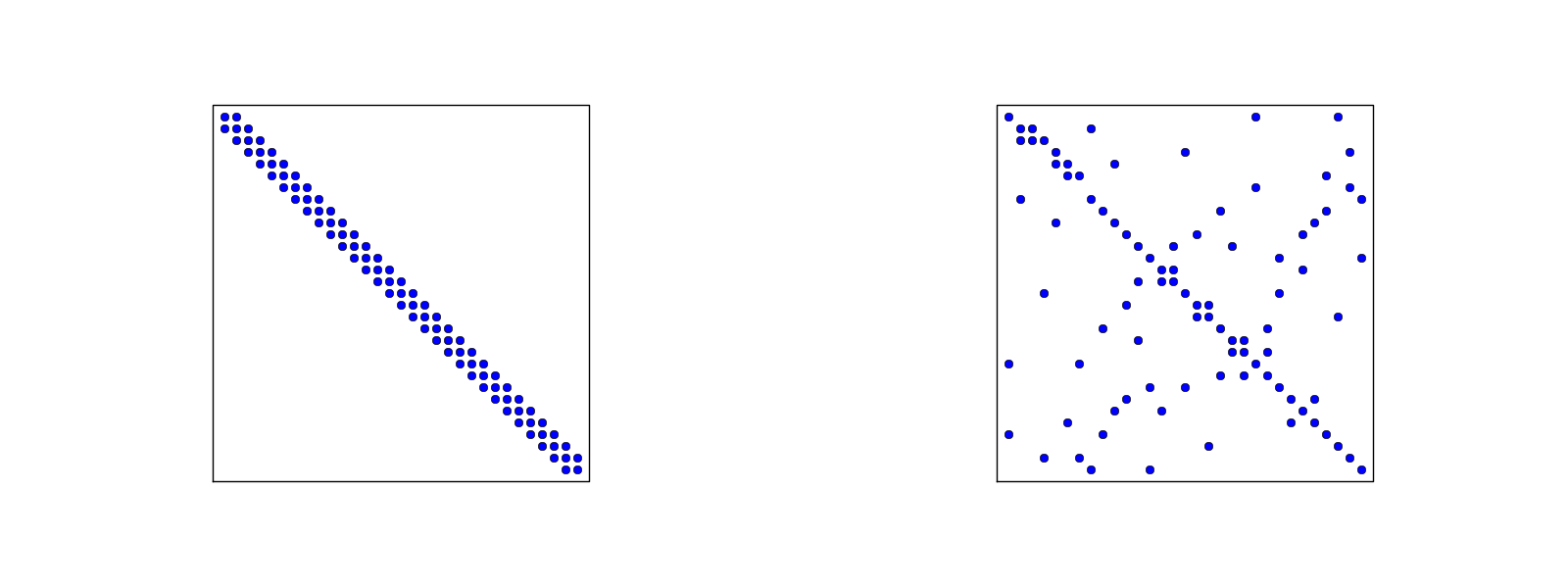

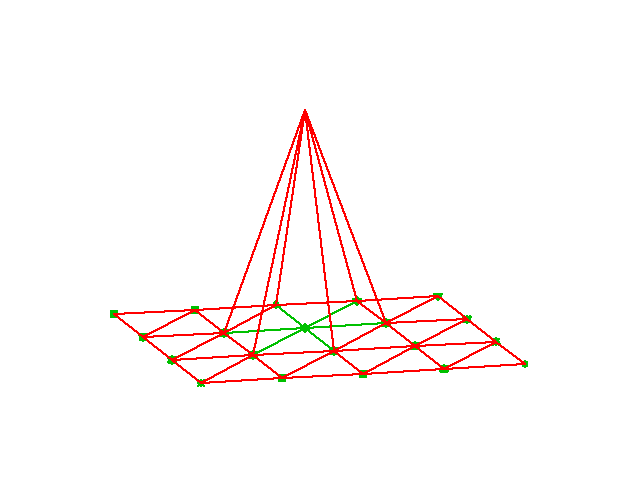

# [Figure](#fem:approx:fe:fig:assembly:2x2) illustrates how element matrices

# for elements with two nodes are added into the global matrix.

# More specifically, the figure shows how the element matrix associated with

# elements 1 and 2 assembled, assuming that global nodes are numbered

# from left to right in the domain. With regularly numbered P3 elements, where

# the element matrices have size $4\times 4$, the assembly of elements 1 and 2

# are sketched in [Figure](#fem:approx:fe:fig:assembly:4x4).

#

#

#

#

#

#

#

#

#

#

#

#

# ## Assembly of elementwise computations

#

#

# Our integral computations so far have been straightforward. However,

# with higher-degree polynomials and in higher dimensions (2D and 3D),

# integrating in the physical domain gets increasingly complicated. Instead,

# integrating over one element at a time, and transforming each element

# to a common standardized geometry in a new reference coordinate system,

# is technically easier. Almost all computer codes employ a finite element

# algorithm that calculates the linear system by integrating over one

# element at a time. We shall therefore explain this algorithm next.

# The amount of details might be overwhelming during a first reading, but

# once all those details are done right, one has a general

# finite element algorithm that can be applied to all sorts of elements,

# in any space dimension, no matter how geometrically complicated the domain

# is.

#

#

# ### The element matrix

#

# We start by splitting

# the integral over $\Omega$ into a sum of contributions from

# each element:

#

#

#

# $$

# \begin{equation}

# A_{i,j} = \int_\Omega{\varphi}_i{\varphi}_j {\, \mathrm{d}x} = \sum_{e} A^{(e)}_{i,j},\quad

# A^{(e)}_{i,j}=\int_{\Omega^{(e)}} {\varphi}_i{\varphi}_j {\, \mathrm{d}x}

# {\thinspace .}

# \label{fem:approx:fe:elementwise:Asplit} \tag{74}

# \end{equation}

# $$

# Now, $A^{(e)}_{i,j}\neq 0$, if and only if, $i$ and $j$ are nodes in element

# $e$ (look at [Figure](#fem:approx:fe:fig:phi:i:im1) to realize this

# property, but the result also holds for all types of elements).

# Introduce $i=q(e,r)$ as the mapping of local node number $r$ in element

# $e$ to the global node number $i$. This is just a short mathematical notation

# for the expression `i=elements[e][r]` in a program.

# Let $r$ and $s$ be the local node numbers corresponding to the global

# node numbers $i=q(e,r)$ and

# $j=q(e,s)$. With $d+1$ nodes per element, all the nonzero matrix entries

# in $A^{(e)}_{i,j}$ arise from the integrals involving basis functions with

# indices corresponding to the global node numbers in element number $e$:

# $$

# \int_{\Omega^{(e)}}{\varphi}_{q(e,r)}{\varphi}_{q(e,s)} {\, \mathrm{d}x},

# \quad r,s=0,\ldots, d{\thinspace .}

# $$

# These contributions can be collected in a $(d+1)\times (d+1)$ matrix known as

# the *element matrix*. Let ${I_d}=\{0,\ldots,d\}$ be the valid indices

# of $r$ and $s$.

# We introduce the notation

# $$

# \tilde A^{(e)} = \{ \tilde A^{(e)}_{r,s}\},\quad

# r,s\in{I_d},

# $$

# for the element matrix. For P1 elements ($d=2$) we have

# $$

# \tilde A^{(e)} = \left\lbrack\begin{array}{ll}

# \tilde A^{(e)}_{0,0} & \tilde A^{(e)}_{0,1}\\

# \tilde A^{(e)}_{1,0} & \tilde A^{(e)}_{1,1}

# \end{array}\right\rbrack

# {\thinspace .}

# $$

# while P2 elements have a $3\times 3$ element matrix:

# $$

# \tilde A^{(e)} = \left\lbrack\begin{array}{lll}

# \tilde A^{(e)}_{0,0} & \tilde A^{(e)}_{0,1} & \tilde A^{(e)}_{0,2}\\

# \tilde A^{(e)}_{1,0} & \tilde A^{(e)}_{1,1} & \tilde A^{(e)}_{1,2}\\

# \tilde A^{(e)}_{2,0} & \tilde A^{(e)}_{2,1} & \tilde A^{(e)}_{2,2}

# \end{array}\right\rbrack

# {\thinspace .}

# $$

# ### Assembly of element matrices

#

# Given the numbers $\tilde A^{(e)}_{r,s}$,

# we should, according to ([74](#fem:approx:fe:elementwise:Asplit)),

# add the contributions to the global coefficient matrix by

#

#

#

# $$

# \begin{equation}

# A_{q(e,r),q(e,s)} := A_{q(e,r),q(e,s)} + \tilde A^{(e)}_{r,s},\quad

# r,s\in{I_d}{\thinspace .}

# \label{_auto34} \tag{75}

# \end{equation}

# $$

# This process of adding in elementwise contributions to the global matrix

# is called *finite element assembly* or simply *assembly*.

#

# [Figure](#fem:approx:fe:fig:assembly:2x2) illustrates how element matrices

# for elements with two nodes are added into the global matrix.

# More specifically, the figure shows how the element matrix associated with

# elements 1 and 2 assembled, assuming that global nodes are numbered

# from left to right in the domain. With regularly numbered P3 elements, where

# the element matrices have size $4\times 4$, the assembly of elements 1 and 2

# are sketched in [Figure](#fem:approx:fe:fig:assembly:4x4).

#

#

#

#

#

# Illustration of matrix assembly: regularly numbered P1 elements.

# #

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

# Illustration of matrix assembly: regularly numbered P3 elements.

# #

#

#

#

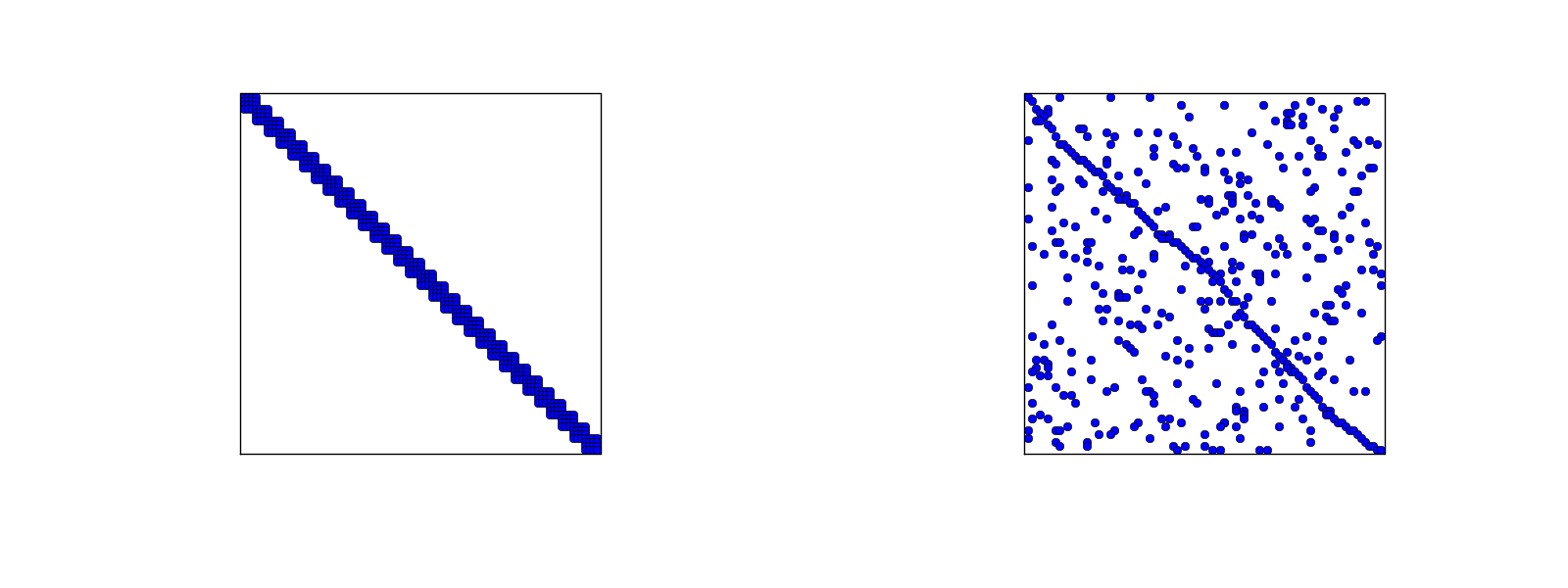

# ### Assembly of irregularly numbered elements and nodes

#

# After assembly of element matrices corresponding to regularly numbered elements

# and nodes are understood, it is wise to study the assembly process for

# irregularly numbered elements and nodes. [Figure](#fem:approx:fe:def:elements:nodes:fig:P1:irregular) shows a mesh where the `elements` array, or $q(e,r)$

# mapping in mathematical notation, is given as

# In[4]:

elements = [[2, 1], [4, 5], [0, 4], [3, 0], [5, 2]]

# The associated assembly of element matrices 1 and 2 is sketched in

# [Figure](#fem:approx:fe:fig:assembly:irr2x2).

#

# We have created [animations](${fem_doc}/mov/fe_assembly.html) to illustrate the assembly of

# P1 and P3 elements with regular numbering as well as P1 elements with

# irregular numbering. The reader is encouraged to develop a

# "geometric" understanding of how element matrix entries are added to

# the global matrix. This understanding is crucial for hand

# computations with the finite element method.

#

#

#

#

#

#

#

#

#

#

#

#

#

#

#

# ### Assembly of irregularly numbered elements and nodes

#

# After assembly of element matrices corresponding to regularly numbered elements

# and nodes are understood, it is wise to study the assembly process for

# irregularly numbered elements and nodes. [Figure](#fem:approx:fe:def:elements:nodes:fig:P1:irregular) shows a mesh where the `elements` array, or $q(e,r)$

# mapping in mathematical notation, is given as

# In[4]:

elements = [[2, 1], [4, 5], [0, 4], [3, 0], [5, 2]]

# The associated assembly of element matrices 1 and 2 is sketched in

# [Figure](#fem:approx:fe:fig:assembly:irr2x2).

#

# We have created [animations](${fem_doc}/mov/fe_assembly.html) to illustrate the assembly of

# P1 and P3 elements with regular numbering as well as P1 elements with

# irregular numbering. The reader is encouraged to develop a

# "geometric" understanding of how element matrix entries are added to

# the global matrix. This understanding is crucial for hand

# computations with the finite element method.

#

#

#

#

#

#

#

#

#

#

# Illustration of matrix assembly: irregularly numbered P1 elements.

# #

#

#

#

#

#

#

# ### The element vector

#

# The right-hand side of the linear system is also computed elementwise:

#

#

#

# $$

# \begin{equation}

# b_i = \int_\Omega f(x){\varphi}_i(x) {\, \mathrm{d}x} = \sum_{e} b^{(e)}_{i},\quad

# b^{(e)}_{i}=\int_{\Omega^{(e)}} f(x){\varphi}_i(x){\, \mathrm{d}x}

# {\thinspace .} \label{_auto35} \tag{76}

# \end{equation}

# $$

# We observe that

# $b_i^{(e)}\neq 0$ if and only if global node $i$ is a node in element $e$

# (look at [Figure](#fem:approx:fe:fig:phi:i:f) to realize this property).

# With $d$ nodes per element we can collect the $d+1$ nonzero contributions

# $b_i^{(e)}$, for $i=q(e,r)$, $r\in{I_d}$, in an *element vector*

# $$

# \tilde b_r^{(e)}=\{ \tilde b_r^{(e)}\},\quad r\in{I_d}{\thinspace .}

# $$

# These contributions are added to the

# global right-hand side by an assembly process similar to that for the

# element matrices:

#

#

#

# $$

# \begin{equation}

# b_{q(e,r)} := b_{q(e,r)} + \tilde b^{(e)}_{r},\quad

# r\in{I_d}{\thinspace .} \label{_auto36} \tag{77}

# \end{equation}

# $$

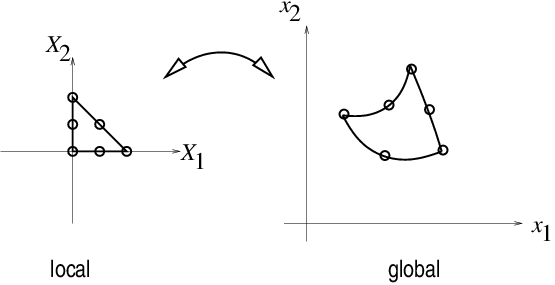

# ## Mapping to a reference element

#

#

#

# Instead of computing the integrals

# $$

# \tilde A^{(e)}_{r,s} = \int_{\Omega^{(e)}}{\varphi}_{q(e,r)}(x){\varphi}_{q(e,s)}(x){\, \mathrm{d}x}

# $$

# over some element

# $\Omega^{(e)} = [x_L, x_R]$ in the physical coordinate system,

# it turns out that it is considerably easier and more convenient

# to map the element domain $[x_L, x_R]$

# to a standardized reference element domain $[-1,1]$ and compute all

# integrals over the same domain $[-1,1]$.

# We have now introduced

# $x_L$ and $x_R$ as the left and right boundary points of an arbitrary element.

# With a natural, regular numbering of nodes and elements from left to right

# through the domain, we have $x_L=x_{e}$ and $x_R=x_{e+1}$ for P1 elements.

#

# ### The coordinate transformation

#

# Let $X\in [-1,1]$ be the coordinate

# in the reference element. A linear mapping, also known as an affine mapping,

# from $X$ to $x$ can be written

#

#

#

# $$

# \begin{equation}

# x = \frac{1}{2} (x_L + x_R) + \frac{1}{2} (x_R - x_L)X{\thinspace .}

# \label{fem:approx:fe:affine:mapping} \tag{78}

# \end{equation}

# $$

# This relation can alternatively be expressed as

#

#

#

# $$

# \begin{equation}

# x = x_m + {\frac{1}{2}}hX,

# \label{fem:approx:fe:affine:mapping2} \tag{79}

# \end{equation}

# $$

# where we have introduced the element midpoint $x_m=(x_L+x_R)/2$ and

# the element length $h=x_R-x_L$.

#

# ### Formulas for the element matrix and vector entries

#

# Integrating over the reference element is a matter of just changing the

# integration variable from $x$ to $X$. Let

#

#

#

# $$

# \begin{equation}

# {\tilde{\varphi}}_r(X) = {\varphi}_{q(e,r)}(x(X))

# \label{_auto37} \tag{80}

# \end{equation}

# $$

# be the basis function associated with local node number $r$ in the

# reference element. Switching from $x$ to $X$ as integration variable,

# using the rules from calculus, results in

#

#

#

# $$

# \begin{equation}

# \tilde A^{(e)}_{r,s} =

# \int_{\Omega^{(e)}}{\varphi}_{q(e,r)}(x){\varphi}_{q(e,s)}(x){\, \mathrm{d}x}

# = \int_{-1}^1 {\tilde{\varphi}}_r(X){\tilde{\varphi}}_s(X)\frac{{\, \mathrm{d}x}}{{\, \mathrm{d}X}}{\, \mathrm{d}X}

# {\thinspace .}

# \label{_auto38} \tag{81}

# \end{equation}

# $$

# In 2D and 3D, ${\, \mathrm{d}x}$ is transformed to $\hbox{det} J{\, \mathrm{d}X}$, where $J$ is

# the Jacobian of the mapping from $x$ to $X$. In 1D,

# $\hbox{det} J{\, \mathrm{d}X} = {\, \mathrm{d}x}/{\, \mathrm{d}X} = h/2$. To obtain a uniform

# notation for 1D, 2D, and 3D problems we therefore replace

# ${\, \mathrm{d}x}/{\, \mathrm{d}X}$ by $\det J$ now.

# The integration over the reference element is then written as

#

#

#

# $$

# \begin{equation}

# \tilde A^{(e)}_{r,s}

# = \int_{-1}^1 {\tilde{\varphi}}_r(X){\tilde{\varphi}}_s(X)\det J\,{\, \mathrm{d}X}

# \label{fem:approx:fe:mapping:Ae} \tag{82}

# {\thinspace .}

# \end{equation}

# $$

# The corresponding formula for the element vector entries becomes

#

#

#

# $$

# \begin{equation}

# \tilde b^{(e)}_{r} = \int_{\Omega^{(e)}}f(x){\varphi}_{q(e,r)}(x){\, \mathrm{d}x}

# = \int_{-1}^1 f(x(X)){\tilde{\varphi}}_r(X)\det J\,{\, \mathrm{d}X}

# \label{fem:approx:fe:mapping:be} \tag{83}

# {\thinspace .}

# \end{equation}

# $$

# **Why reference elements?**

#

# The great advantage of using reference elements is that

# the formulas for the basis functions, ${\tilde{\varphi}}_r(X)$, are the

# same for all elements and independent of the element geometry

# (length and location in the mesh). The geometric information

# is "factored out" in the simple mapping formula and the associated

# $\det J$ quantity. Also, the integration domain is the same for

# all elements. All these features contribute to simplify computer

# codes and make them more general.

#

#

#

#

#

#

#

#

#

#

#

#

#

#

# ### Formulas for local basis functions

#

# The ${\tilde{\varphi}}_r(x)$ functions are simply the Lagrange

# polynomials defined through the local nodes in the reference element.

# For $d=1$ and two nodes per element, we have the linear Lagrange

# polynomials

#

#

#

# $$

# \begin{equation}

# {\tilde{\varphi}}_0(X) = \frac{1}{2} (1 - X) ,

# \label{fem:approx:fe:mapping:P1:phi0} \tag{84}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# {\tilde{\varphi}}_1(X) = \frac{1}{2} (1 + X) .

# \label{fem:approx:fe:mapping:P1:phi1} \tag{85}

# \end{equation}

# $$

# Quadratic polynomials, $d=2$, have the formulas

#

#

#

# $$

# \begin{equation}

# {\tilde{\varphi}}_0(X) = \frac{1}{2} (X-1)X,

# \label{fem:approx:fe:mapping:P2:phi0} \tag{86}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# {\tilde{\varphi}}_1(X) = 1 - X^2,

# \label{fem:approx:fe:mapping:P2:phi1} \tag{87}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# {\tilde{\varphi}}_2(X) = \frac{1}{2} (X+1)X .

# \label{fem:approx:fe:mapping:P2:phi2} \tag{88}

# \end{equation}

# $$

# In general,

#

#

#

# $$

# \begin{equation}

# {\tilde{\varphi}}_r(X) = \prod_{s=0,s\neq r}^d \frac{X-X_{(s)}}{X_{(r)}-X_{(s)}},

# \label{_auto39} \tag{89}

# \end{equation}

# $$

# where $X_{(0)},\ldots,X_{(d)}$ are the coordinates of the local nodes in

# the reference element.

# These are normally uniformly spaced: $X_{(r)} = -1 + 2r/d$,

# $r\in{I_d}$.

#

#

#

# ## Example on integration over a reference element

#

#

# To illustrate the concepts from the previous section in a specific

# example, we now

# consider calculation of the element matrix and vector for a specific choice of

# $d$ and $f(x)$. A simple choice is $d=1$ (P1 elements) and $f(x)=x(1-x)$

# on $\Omega =[0,1]$. We have the general expressions

# ([82](#fem:approx:fe:mapping:Ae)) and ([83](#fem:approx:fe:mapping:be))

# for $\tilde A^{(e)}_{r,s}$ and $\tilde b^{(e)}_{r}$.

# Writing these out for the choices ([84](#fem:approx:fe:mapping:P1:phi0))

# and ([85](#fem:approx:fe:mapping:P1:phi1)), and using that $\det J = h/2$,

# we can do the following calculations of the element matrix entries:

# $$

# \tilde A^{(e)}_{0,0}

# = \int_{-1}^1 {\tilde{\varphi}}_0(X){\tilde{\varphi}}_0(X)\frac{h}{2} {\, \mathrm{d}X}\nonumber

# $$

#

#

#

# $$

# \begin{equation}

# =\int_{-1}^1 \frac{1}{2}(1-X)\frac{1}{2}(1-X) \frac{h}{2} {\, \mathrm{d}X} =

# \frac{h}{8}\int_{-1}^1 (1-X)^2 {\, \mathrm{d}X} = \frac{h}{3},

# \label{fem:approx:fe:intg:ref:Ae00} \tag{90}

# \end{equation}

# $$

# $$

# \tilde A^{(e)}_{1,0}

# = \int_{-1}^1 {\tilde{\varphi}}_1(X){\tilde{\varphi}}_0(X)\frac{h}{2} {\, \mathrm{d}X}\nonumber

# $$

#

#

#

# $$

# \begin{equation}

# =\int_{-1}^1 \frac{1}{2}(1+X)\frac{1}{2}(1-X) \frac{h}{2} {\, \mathrm{d}X} =

# \frac{h}{8}\int_{-1}^1 (1-X^2) {\, \mathrm{d}X} = \frac{h}{6},

# \label{fem:approx:fe:intg:ref:Ae10} \tag{91}

# \end{equation}

# $$

#

#

#

# $$

# \begin{equation}

# \tilde A^{(e)}_{0,1} = \tilde A^{(e)}_{1,0},

# \label{_auto40} \tag{92}

# \end{equation}

# $$

# $$

# \tilde A^{(e)}_{1,1}

# = \int_{-1}^1 {\tilde{\varphi}}_1(X){\tilde{\varphi}}_1(X)\frac{h}{2} {\, \mathrm{d}X}\nonumber

# $$

#

#

#

# $$

# \begin{equation}

# =\int_{-1}^1 \frac{1}{2}(1+X)\frac{1}{2}(1+X) \frac{h}{2} {\, \mathrm{d}X} =

# \frac{h}{8}\int_{-1}^1 (1+X)^2 {\, \mathrm{d}X} = \frac{h}{3}

# \label{fem:approx:fe:intg:ref:Ae11} \tag{93}

# {\thinspace .}

# \end{equation}

# $$

# The corresponding entries in the element vector becomes

# using ([79](#fem:approx:fe:affine:mapping2)))

# $$

# \tilde b^{(e)}_{0}

# = \int_{-1}^1 f(x(X)){\tilde{\varphi}}_0(X)\frac{h}{2} {\, \mathrm{d}X}\nonumber

# $$

# $$

# = \int_{-1}^1 (x_m + \frac{1}{2} hX)(1-(x_m + \frac{1}{2} hX))

# \frac{1}{2}(1-X)\frac{h}{2} {\, \mathrm{d}X} \nonumber

# $$

#

#

#

# $$

# \begin{equation}

# = - \frac{1}{24} h^{3} + \frac{1}{6} h^{2} x_{m} - \frac{1}{12} h^{2} - \frac{1}{2} h x_{m}^{2} + \frac{1}{2} h x_{m},

# \label{fem:approx:fe:intg:ref:be0} \tag{94}

# \end{equation}

# $$

# $$

# \tilde b^{(e)}_{1}

# = \int_{-1}^1 f(x(X)){\tilde{\varphi}}_1(X)\frac{h}{2} {\, \mathrm{d}X}\nonumber

# $$

# $$

# = \int_{-1}^1 (x_m + \frac{1}{2} hX)(1-(x_m + \frac{1}{2} hX))

# \frac{1}{2}(1+X)\frac{h}{2} {\, \mathrm{d}X} \nonumber

# $$

#

#

#

# $$

# \begin{equation}

# = - \frac{1}{24} h^{3} - \frac{1}{6} h^{2} x_{m} + \frac{1}{12} h^{2} -

# \frac{1}{2} h x_{m}^{2} + \frac{1}{2} h x_{m}

# {\thinspace .}

# \label{_auto41} \tag{95}

# \end{equation}

# $$

# In the last two expressions we have used the element midpoint $x_m$.

#

# Integration of lower-degree polynomials above is tedious,

# and higher-degree polynomials involve much more algebra, but `sympy`

# may help. For example, we can easily calculate

# ([90](#fem:approx:fe:intg:ref:Ae00)),

# ([91](#fem:approx:fe:intg:ref:Ae10)),

# and ([94](#fem:approx:fe:intg:ref:be0)) by

# In[5]:

import sympy as sym

x, x_m, h, X = sym.symbols('x x_m h X')

sym.integrate(h/8*(1-X)**2, (X, -1, 1))

# In[6]:

sym.integrate(h/8*(1+X)*(1-X), (X, -1, 1))

# In[7]:

x = x_m + h/2*X

b_0 = sym.integrate(h/4*x*(1-x)*(1-X), (X, -1, 1))

print(b_0)

# # Implementation

#

#

# Based on the experience from the previous example, it makes sense to

# write some code to automate the analytical integration process for any

# choice of finite element basis functions. In addition, we can automate

# the assembly process and the solution of the linear system. Another

# advantage is that the code for these purposes document all details of

# all steps in the finite element computational machinery. The complete

# code can be found in the module file [`fe_approx1D.py`](src/fe_approx1D.py).

#

#

# ## Integration

#

#

# First we need a Python function for

# defining ${\tilde{\varphi}}_r(X)$ in terms of a Lagrange polynomial

# of degree `d`:

# In[8]:

import sympy as sym

import numpy as np

def basis(d, point_distribution='uniform', symbolic=False):

"""

Return all local basis function phi as functions of the

local point X in a 1D element with d+1 nodes.

If symbolic=True, return symbolic expressions, else

return Python functions of X.

point_distribution can be 'uniform' or 'Chebyshev'.

"""

X = sym.symbols('X')

if d == 0:

phi_sym = [1]

else:

if point_distribution == 'uniform':

if symbolic:

# Compute symbolic nodes

h = sym.Rational(1, d) # node spacing

nodes = [2*i*h - 1 for i in range(d+1)]

else:

nodes = np.linspace(-1, 1, d+1)

elif point_distribution == 'Chebyshev':

# Just numeric nodes

nodes = Chebyshev_nodes(-1, 1, d)

phi_sym = [Lagrange_polynomial(X, r, nodes)

for r in range(d+1)]

# Transform to Python functions

phi_num = [sym.lambdify([X], phi_sym[r], modules='numpy')

for r in range(d+1)]

return phi_sym if symbolic else phi_num

def Lagrange_polynomial(x, i, points):

p = 1

for k in range(len(points)):

if k != i:

p *= (x - points[k])/(points[i] - points[k])

return p

# Observe how we construct the `phi_sym` list to be

# symbolic expressions for ${\tilde{\varphi}}_r(X)$ with `X` as a

# `Symbol` object from `sympy`. Also note that the