#!/usr/bin/env python

# coding: utf-8

# Week 5: Introduction to neural Networks

#

# CSCI-UA 9473 - Introduction to Machine Learning

#

# Solutions

# ## Perceptron learning rule

# This week, we will start working with neural networks. For each of the exercises below you can use the method of your choice but you should display the final boundary of your classifier.

# ### Exercise 1.

# As a first exercise, load the binary dataset below and code a few steps of the perceptron learning rule.

# In[387]:

import scipy.io as sio

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

cm_bright = ListedColormap(['#FF0000', '#0000FF'])

data1 = sio.loadmat('perceptron_data_class1.mat')

data2 = sio.loadmat('perceptron_data_class2.mat')

data1 = data1['perceptron_data_class1']

data2 = data2['perceptron_data_class2']

data = np.vstack((data1,data2))

target1 = np.ones((np.shape(data1)[0], 1))

target2 = -np.ones((np.shape(data2)[0], 1))

targets = np.vstack((target1, target2))

plt.scatter(data[:,0], data[:,1], c = targets, cmap = cm_bright)

plt.show()

# The code below fits a simple perceptron to the linearly separable dataset given above by repeatedly applying the perceptron learning rule until all the points are correctly classified.

# In[388]:

beta = np.random.normal(0,1,(3,))

# looking for the points sign(beta^T x^i) != t^i

Xtilde = np.hstack((np.ones((np.shape(data)[0], 1)) , data))

prediction = np.matmul(Xtilde, beta.reshape(-1,1))

indices_misclassified = np.squeeze(np.argwhere(np.squeeze(np.sign(prediction))\

!=np.squeeze(targets)))

# perceptron learning rule, beta <--- beta + eta* x^i * t^i

eta = .1

while indices_misclassified.size>0:

beta += np.squeeze(eta*Xtilde[indices_misclassified[0]] * \

targets[indices_misclassified[0]])

# re-evaluate the misclassified samples

prediction = np.matmul(Xtilde, beta.reshape(-1,1))

indices_misclassified = np.squeeze(np.argwhere(np.squeeze(np.sign(prediction))\

!=np.squeeze(targets)))

# We can then display the result using meshgrid and contourf.

# In[389]:

x1min = np.min(data[:,0])

x1max = np.max(data[:,0])

x2min = np.min(data[:,1])

x2max = np.max(data[:,1])

x1 = np.linspace(x1min, x1max, 500)

x2 = np.linspace(x2min, x2max, 500)

xx1, xx2 = np.meshgrid(x1, x2)

tmp = np.vstack((xx1.flatten(), xx2.flatten())).T

gridPoints = np.hstack((np.ones((len(xx1.flatten()), 1)), tmp))

prediction = np.sign(np.matmul(gridPoints, beta.reshape(-1,1)))

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), alpha=.2, cmap = cm_bright)

plt.scatter(data[:,0], data[:,1], c = targets, cmap = cm_bright)

plt.show()

# ### Exercise 2.

#

# __2a.__ Load the data below. Using the neural_network module from scikit-learn and its MLPClassifier model, learn a classifier, for the dataset below using

#

# - One hidden layer with a linear activation function and

# - One neuron

# - Two neurons

#

#

#

# - One hidden layer with a non linear activation function (take Relu for example or a binary step)

# - One neuron

# - Two neurons

#

# How many neurons, hidden layers do you need to learn the distribution of the data? Do you have an idea why?

#

# Try increasing the number of neurons and hidden layers. Then try different values of the learning rate.

#

# In[381]:

from sklearn.neural_network import MLPClassifier

import matplotlib.pyplot as plt

import scipy.io as sio

import numpy as np

data1 = sio.loadmat('data_Week6_class1_XOR.mat')

data2 = sio.loadmat('data_Week6_class2_XOR.mat')

data1 = data1['data_Week6_class1_XOR']

data2 = data2['data_Week6_class2_XOR']

plt.scatter(data1[:,0], data1[:,1], c= 'r')

plt.scatter(data2[:,0], data2[:,1], c= 'b')

plt.show()

# We start by learning a simple 1 hidden layer classifier with a single (linear) unit in the hidden layer classifier. We can learn this classifier with SGD (default solver provided by scikit learn) as shown below.

# In[400]:

data = np.vstack((data1, data2))

target1 = np.ones((np.shape(data1)[0], 1))

target2 = 0*np.ones((np.shape(data2)[0], 1))

targets = np.vstack((target1, target2))

from sklearn.neural_network import MLPClassifier

import warnings

from sklearn.exceptions import DataConversionWarning

warnings.filterwarnings(action='ignore')

# generating the grid

x1min = np.min(data[:,0])

x1max = np.max(data[:,0])

x2min = np.min(data[:,1])

x2max = np.max(data[:,1])

x1 = np.linspace(x1min, x1max, 500)

x2 = np.linspace(x2min, x2max, 500)

xx1, xx2 = np.meshgrid(x1, x2)

data_grid = np.vstack((xx1.flatten(), xx2.flatten())).T

# single neuron (on top of the sigmoid activation of the output unit)

my_model = MLPClassifier(hidden_layer_sizes = (1,), \

activation = 'identity')

my_model.fit(data, np.squeeze(targets.reshape(-1,1)))

prediction = my_model.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), \

alpha = 0.2, cmap =cm_bright)

plt.scatter(data1[:,0], data1[:,1], c= 'r')

plt.scatter(data2[:,0], data2[:,1], c= 'b')

plt.title('Solution found through a couple of SGD steps')

plt.show()

# We can then look at the value of the loss returned for this first classifier by using the 'loss_' attribute provided by scikit's MLPClassifier implementation.

# In[390]:

my_model.loss_

# In this simple framework (2D dataset with a very small dataset), we can in fact improve the search by relying on more powerful (quasi second order) solvers such as LBFGS. Although we might not be able to escape some of the local minimizers (see spiral example with the sigmoid activation), if the landscape is relatively smooth, we should be able to reach a better minimizer.

# In[403]:

from sklearn.neural_network import MLPClassifier

import matplotlib.pyplot as plt

import scipy.io as sio

import numpy as np

data1 = sio.loadmat('data_Week6_class1_XOR.mat')

data2 = sio.loadmat('data_Week6_class2_XOR.mat')

data1 = data1['data_Week6_class1_XOR']

data2 = data2['data_Week6_class2_XOR']

data = np.vstack((data1,data2))

target1 = np.ones((np.shape(data1)[0], 1))

target2 = 0*np.ones((np.shape(data2)[0], 1))

targets = np.vstack((target1, target2))

my_model = MLPClassifier(hidden_layer_sizes = (1,), \

activation = 'identity', solver = 'lbfgs')

my_model.fit(data, np.squeeze(targets.reshape(-1,1)))

x1min = np.min(data[:,0])

x1max = np.max(data[:,0])

x2min = np.min(data[:,1])

x2max = np.max(data[:,1])

x1 = np.linspace(x1min, x1max, 100)

x2 = np.linspace(x2min, x2max, 100)

xx1, xx2 = np.meshgrid(x1, x2)

data_grid = np.vstack((xx1.flatten(), xx2.flatten())).T

prediction = my_model.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), \

alpha = 0.2, cmap =cm_bright)

plt.scatter(data[:,0], data[:,1], c = targets, cmap = cm_bright)

plt.title('Solution found through LBFGS')

plt.show()

# To compare our LBFGS solution with the solution returned by the Stochastic gradient steps, we can again check the final value of the loss (bianry cross entropy in this case) using the 'loss_' attribute.

# In[404]:

print(my_model.loss_)

# In turns out that in this case, the best solution puts all the sample in a single class.

# We then increase the number of neurons and consider non linear activation functions

# In[407]:

# two neurons with linear activation

my_model = MLPClassifier(hidden_layer_sizes = (2,), activation = 'identity')

my_model.fit(data, np.squeeze(targets.reshape(-1,1)))

prediction = my_model.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), \

alpha = 0.2, cmap =cm_bright)

plt.scatter(data1[:,0], data1[:,1], c= 'r')

plt.scatter(data2[:,0], data2[:,1], c= 'b')

plt.show()

# Considering the Sigmoid function first..

# In[409]:

my_model = MLPClassifier(hidden_layer_sizes = (10,10), \

activation = 'logistic',

solver = 'lbfgs', max_iter=100000, alpha=.1)

my_model.fit(data, np.squeeze(targets.reshape(-1,1)))

prediction = my_model.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), \

alpha = 0.2, cmap =cm_bright)

plt.scatter(data1[:,0], data1[:,1], c= 'b')

plt.scatter(data2[:,0], data2[:,1], c= 'r')

plt.show()

# And then the relu

# In[408]:

my_model = MLPClassifier(hidden_layer_sizes = (10,10), \

activation = 'relu',

solver = 'lbfgs', max_iter=100000, alpha=.1)

my_model.fit(data, np.squeeze(targets.reshape(-1,1)))

prediction = my_model.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), \

alpha = 0.2, cmap =cm_bright)

plt.scatter(data1[:,0], data1[:,1], c= 'b')

plt.scatter(data2[:,0], data2[:,1], c= 'r')

plt.show()

# ### Exercise 3.

#

# __3a.__Load the data below. Try to build the best neural network you can for this dataset. Split the data between a training and a test set and evaluate the models you built. What is the best validation error you can get?

# In[410]:

from sklearn.neural_network import MLPClassifier

import matplotlib.pyplot as plt

import scipy.io as sio

import numpy as np

data1 = sio.loadmat('neural_net_ex2_class1.mat')

data2 = sio.loadmat('neural_net_ex2_class2.mat')

data1 = data1['neural_net_ex2_class1']

data2 = data2['neural_net_ex2_class2']

data = np.vstack((data1, data2))

target1 = np.ones((np.shape(data1)[0], 1))

target2 = np.zeros((np.shape(data2)[0], 1))

targets = np.vstack((target1, target2))

# scaling the data (centering and dividig by the variance)

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

scaler.fit(data)

scaled_data = scaler.transform(data)

plt.scatter(scaled_data[:,0], scaled_data[:,1], c= targets, cmap = cm_bright)

plt.show()

# We will successively train three neural networks with activations given by (1) the relu (2) the hypebolic tangent and (3) the sigmoid. For each of those MLP architecture, one can get a sense of the complexity of the landscape by playing with the 'random_state' parameter which controls weights and bias initialization. The quality of the solution also illustrates a difference in the convergence to local vs global solutions

# In[413]:

# learning a neural network with the relu activation function

my_neural_net = MLPClassifier(hidden_layer_sizes = (50,50),\

activation = 'relu', \

solver = 'lbfgs', max_iter = 15000, \

alpha=.005,random_state = 100)

my_neural_net.fit(scaled_data, np.squeeze(targets))

x1min=np.min(scaled_data[:,0])

x1max=np.max(scaled_data[:,0])

x2min=np.min(scaled_data[:,1])

x2max=np.max(scaled_data[:,1])

x1 = np.linspace(x1min, x1max)

x2 = np.linspace(x2min, x2max)

xx1, xx2 = np.meshgrid(x1, x2)

data_grid = np.vstack((xx1.flatten(), xx2.flatten())).T

prediction = my_neural_net.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), alpha =.2, cmap = cm_bright)

plt.scatter(scaled_data[:,0], scaled_data[:,1], c= targets, cmap = cm_bright)

plt.show()

# In[412]:

# learning the network with tanh

my_neural_net = MLPClassifier(hidden_layer_sizes = (30,30),max_iter=20000,\

learning_rate_init=.0001, alpha = .1, solver = 'lbfgs',\

activation = 'tanh', random_state = 100)

my_neural_net.fit(scaled_data, np.squeeze(targets))

x1min=np.min(scaled_data[:,0])

x1max=np.max(scaled_data[:,0])

x2min=np.min(scaled_data[:,1])

x2max=np.max(scaled_data[:,1])

x1 = np.linspace(x1min, x1max)

x2 = np.linspace(x2min, x2max)

xx1, xx2 = np.meshgrid(x1, x2)

data_grid = np.vstack((xx1.flatten(), xx2.flatten())).T

prediction = my_neural_net.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), alpha =.2, cmap = cm_bright)

plt.scatter(scaled_data[:,0], scaled_data[:,1], c= targets, cmap = cm_bright)

plt.show()

# In[411]:

# learning the network with a logistic activation

my_neural_net = MLPClassifier(hidden_layer_sizes = (10,10),max_iter=100000,\

alpha=1e-15, solver = 'lbfgs',\

activation = 'logistic', random_state = 100,max_fun = 1e7)

my_neural_net.fit(scaled_data, np.squeeze(targets))

x1min=np.min(scaled_data[:,0])

x1max=np.max(scaled_data[:,0])

x2min=np.min(scaled_data[:,1])

x2max=np.max(scaled_data[:,1])

x1 = np.linspace(x1min, x1max)

x2 = np.linspace(x2min, x2max)

xx1, xx2 = np.meshgrid(x1, x2)

data_grid = np.vstack((xx1.flatten(), xx2.flatten())).T

prediction = my_neural_net.predict(data_grid)

plt.contourf(xx1, xx2, prediction.reshape(np.shape(xx1)), alpha =.2, cmap = cm_bright)

plt.scatter(scaled_data[:,0], scaled_data[:,1], c= targets, cmap = cm_bright)

plt.show()

# __3b.__ With the same dataset, add additional features to your model, e.g. $\sin(x), \sin(y)$ or other monomials. Can you improve your classifier ?

# ### Exercise 4.

#

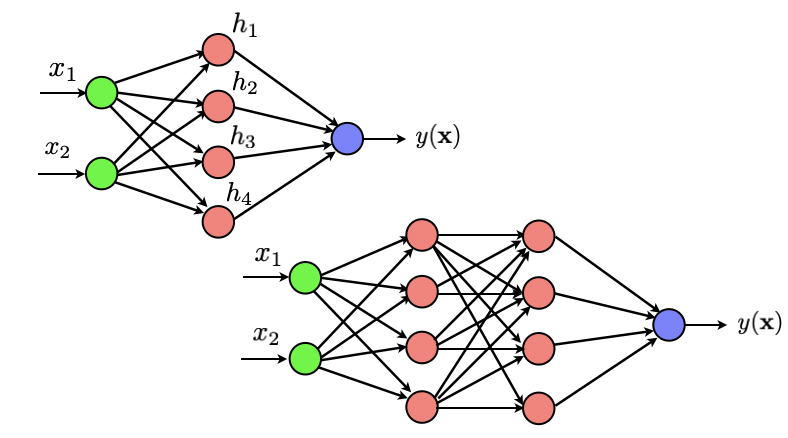

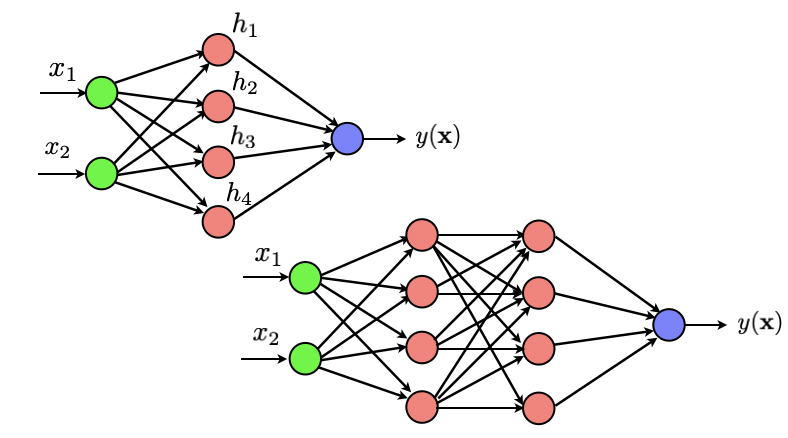

# Let us go back briefly to a simple dataset to make sure we understand how things work. As a first exercise, we will code a one hidden layer neural network that outputs a binary 0/1 variable indicating the class of our data. Throughout this exercise, we will use the notation $z^{\ell+1} = \sigma(a^{\ell+1})$ to denote the output of any neuron from the $(\ell+1)^{th}$ layer and where $a^{\ell+1} = \sum_{k} w_{\ell+1,k} z_k$ is the combination from the previous layer that is fed to the neuron.

#

#

#  #

# We will use the function ['minimize'](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.minimize.html) from scipy. Check the documentation of that function. We will set the 'jac' parameter of the optimizer to 'True', which implies that the (objective) function that we provide as an input should return both the value of the loss and the value of its gradient.

#

# For this first exercise, you are asked to write a function $f(W)$ wich takes as arguments a vector $W$ containing all the parameters of your network (as a first step consider building a network with only a few hidden units, in order to make sure the model is working). As indicated above, the function should return (1) the value of the binary cross entropy for the given set of weights and (2) the value of the gradient derived through Backpropagation (as we set the value of 'jac' to True).

#

# We will split the writing of your function into several steps. Once you have coded each step, you should gather them together in a single $fun(W)$ body that you will then pass as input to the minimize function.

#

# ### Exercise 4.a.

#

# Start Load the data using the lines below and plot it using scatter()

# In[ ]:

from scipy.optimize import minimize

import scipy.io as sio

data1 = sio.loadmat('pointsClass1Week6.mat')

data2 = sio.loadmat('pointsClass2Week6.mat')

from numpy import linalg as LA

data1 = data1['pointsClass1Week6']

data2 = data2['pointsClass2Week6']

# put your code here

# ### Exercise 4.b

#

# As indicated above, we want to apply the network to the simple binary dataset that you loaded above. We want to build an architecture similar to the one shown above, except that, since we only consider a 2D dataset, we only need 2 inputs. we want our activation function to be all sigmoid. Start by defining the function sigmoid and the gradient of this function. Once you are done, check your derivative. Also make sure you can compute the entrywise sigmoid on any numpy array if the input to your function is a numpy array.

# In[ ]:

# ### Exercise 4.c

#

# Now that we have the sigmoid and its gradient, we will code the loss. As you might remember from previous labs, the MLPClassifier from scikit-learn optimizes the 'log-loss function' (a.k.a Binary cross entropy) which reads for a set of $N$ prototypes $x_i$ with binary $0/1$ targets,

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) $$

#

# Here the probability $p_{W}(x_i)$ is the output of your network. Instead of minimizing this function directly, we will consider its $\ell_2$ regularized version

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) + \lambda \sum_{j\in\text{weights}\setminus \text{bias}} W^2_j$$

#

# Where the $W_j$ encode the weights. Code that function for a given labeled dataset $X,t$ such as given above, a set of weights stored in the vector $W$ (you can use a list or a dictionnary if you want but ultimately, you will need to store them in a numpy vector for use with the optimization routine). Note that one typically does not regularize the bias terms.

# In[ ]:

# ### Exercise 4.d.

#

# Towards Backpropagation. The example above is relatively simple so that backpropagation is not really needed. Compute the gradient of the Binary cross entropy loss with respect to the weights.

# In[ ]:

# ### Exercise 4.e.

#

# Combine all your previous steps into a single function and pass this function to the 'minimize' routine. To choose the initial value for the weights, a common heuristic is for the n^th layer to be initialized unformly at random in the interval $[-\varepsilon_n, \varepsilon_n]$ with $\epsilon_n$ defined as $\sqrt{\frac{2}{\text{size layer}_{\ell-1} + \text{size layer}_{\ell} }}$

#

#

# In[ ]:

# ### Bonus 1

#

# Change the activation to the Relu and reapeat the steps above. How do you compute the gradient in this case?

# In[ ]:

# ### Exercise 5.

#

# Coding a One hidden layer neural network is good to warm up but to really understand backpropagation, we will not add a few hidden layers. Still relying on the code that you developed above and using backpropagation, train a depth 4 neural network with 10 neurons in each layer on the binary dataset.

# ### Exercise 5a.

#

# In this exercise, because of the multiple layers of the network, you will get to really code backpropagation. To do this, follow the steps below

# (see [Bishop Pattern Recognition and Machine Learning](http://users.isr.ist.utl.pt/~wurmd/Livros/school/Bishop%20-%20Pattern%20Recognition%20And%20Machine%20Learning%20-%20Springer%20%202006.pdf)) for more details.

#

# - Take any of the sample (Once you have completed one step of backprop, you should re-apply it to the next sample to get the gradient contribution for that next sample and so on). Forward propagate that sample through the network and compute all the activations $z = \sigma(a_i)$ and input $a_i$ for each unit.

#

# - Evaluate the $\delta_k = y_k(x_n) - t_{n,k}$ for all the output units.

#

# - Backpropagate the $\delta_k$ using the formula

#

# $$\delta_j = \sigma'(a_j) \sum_{k} w_{k,j} \delta_k$$

#

# - Finally, compute the derivatives as

#

# $$\frac{\partial \text{loss}}{\partial w_{ij}} = \delta_j z_i$$

#

# Note that when coding the backpropagation algorithm, you don't need to account for the regularization part of the loss as you can just add the gradient of the regularization to the result of the backpropagation algorithm.

#

# In[ ]:

from scipy.optimize import minimize

# put your code here

# ### Exercise 6.

#

# Extending to multiple classes. Load the data using the lines given below, visualize it with scatter. How can you extend the Binary cross entropy function to the multiclass problem?

# In[7]:

import scipy.io as sio

data1 = sio.loadmat('xWeek6Ex3PointsClass1.mat')

data2 = sio.loadmat('xWeek6Ex3PointsClass2.mat')

data3 = sio.loadmat('xWeek6Ex3PointsClass3.mat')

from numpy import linalg as LA

data1 = data1['xWeek6Ex3PointsClass1']

data2 = data2['xWeek6Ex3PointsClass2']

data3 = data3['xWeek6Ex3PointsClass3']

# put your code here

#

# We will use the function ['minimize'](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.minimize.html) from scipy. Check the documentation of that function. We will set the 'jac' parameter of the optimizer to 'True', which implies that the (objective) function that we provide as an input should return both the value of the loss and the value of its gradient.

#

# For this first exercise, you are asked to write a function $f(W)$ wich takes as arguments a vector $W$ containing all the parameters of your network (as a first step consider building a network with only a few hidden units, in order to make sure the model is working). As indicated above, the function should return (1) the value of the binary cross entropy for the given set of weights and (2) the value of the gradient derived through Backpropagation (as we set the value of 'jac' to True).

#

# We will split the writing of your function into several steps. Once you have coded each step, you should gather them together in a single $fun(W)$ body that you will then pass as input to the minimize function.

#

# ### Exercise 4.a.

#

# Start Load the data using the lines below and plot it using scatter()

# In[ ]:

from scipy.optimize import minimize

import scipy.io as sio

data1 = sio.loadmat('pointsClass1Week6.mat')

data2 = sio.loadmat('pointsClass2Week6.mat')

from numpy import linalg as LA

data1 = data1['pointsClass1Week6']

data2 = data2['pointsClass2Week6']

# put your code here

# ### Exercise 4.b

#

# As indicated above, we want to apply the network to the simple binary dataset that you loaded above. We want to build an architecture similar to the one shown above, except that, since we only consider a 2D dataset, we only need 2 inputs. we want our activation function to be all sigmoid. Start by defining the function sigmoid and the gradient of this function. Once you are done, check your derivative. Also make sure you can compute the entrywise sigmoid on any numpy array if the input to your function is a numpy array.

# In[ ]:

# ### Exercise 4.c

#

# Now that we have the sigmoid and its gradient, we will code the loss. As you might remember from previous labs, the MLPClassifier from scikit-learn optimizes the 'log-loss function' (a.k.a Binary cross entropy) which reads for a set of $N$ prototypes $x_i$ with binary $0/1$ targets,

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) $$

#

# Here the probability $p_{W}(x_i)$ is the output of your network. Instead of minimizing this function directly, we will consider its $\ell_2$ regularized version

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) + \lambda \sum_{j\in\text{weights}\setminus \text{bias}} W^2_j$$

#

# Where the $W_j$ encode the weights. Code that function for a given labeled dataset $X,t$ such as given above, a set of weights stored in the vector $W$ (you can use a list or a dictionnary if you want but ultimately, you will need to store them in a numpy vector for use with the optimization routine). Note that one typically does not regularize the bias terms.

# In[ ]:

# ### Exercise 4.d.

#

# Towards Backpropagation. The example above is relatively simple so that backpropagation is not really needed. Compute the gradient of the Binary cross entropy loss with respect to the weights.

# In[ ]:

# ### Exercise 4.e.

#

# Combine all your previous steps into a single function and pass this function to the 'minimize' routine. To choose the initial value for the weights, a common heuristic is for the n^th layer to be initialized unformly at random in the interval $[-\varepsilon_n, \varepsilon_n]$ with $\epsilon_n$ defined as $\sqrt{\frac{2}{\text{size layer}_{\ell-1} + \text{size layer}_{\ell} }}$

#

#

# In[ ]:

# ### Bonus 1

#

# Change the activation to the Relu and reapeat the steps above. How do you compute the gradient in this case?

# In[ ]:

# ### Exercise 5.

#

# Coding a One hidden layer neural network is good to warm up but to really understand backpropagation, we will not add a few hidden layers. Still relying on the code that you developed above and using backpropagation, train a depth 4 neural network with 10 neurons in each layer on the binary dataset.

# ### Exercise 5a.

#

# In this exercise, because of the multiple layers of the network, you will get to really code backpropagation. To do this, follow the steps below

# (see [Bishop Pattern Recognition and Machine Learning](http://users.isr.ist.utl.pt/~wurmd/Livros/school/Bishop%20-%20Pattern%20Recognition%20And%20Machine%20Learning%20-%20Springer%20%202006.pdf)) for more details.

#

# - Take any of the sample (Once you have completed one step of backprop, you should re-apply it to the next sample to get the gradient contribution for that next sample and so on). Forward propagate that sample through the network and compute all the activations $z = \sigma(a_i)$ and input $a_i$ for each unit.

#

# - Evaluate the $\delta_k = y_k(x_n) - t_{n,k}$ for all the output units.

#

# - Backpropagate the $\delta_k$ using the formula

#

# $$\delta_j = \sigma'(a_j) \sum_{k} w_{k,j} \delta_k$$

#

# - Finally, compute the derivatives as

#

# $$\frac{\partial \text{loss}}{\partial w_{ij}} = \delta_j z_i$$

#

# Note that when coding the backpropagation algorithm, you don't need to account for the regularization part of the loss as you can just add the gradient of the regularization to the result of the backpropagation algorithm.

#

# In[ ]:

from scipy.optimize import minimize

# put your code here

# ### Exercise 6.

#

# Extending to multiple classes. Load the data using the lines given below, visualize it with scatter. How can you extend the Binary cross entropy function to the multiclass problem?

# In[7]:

import scipy.io as sio

data1 = sio.loadmat('xWeek6Ex3PointsClass1.mat')

data2 = sio.loadmat('xWeek6Ex3PointsClass2.mat')

data3 = sio.loadmat('xWeek6Ex3PointsClass3.mat')

from numpy import linalg as LA

data1 = data1['xWeek6Ex3PointsClass1']

data2 = data2['xWeek6Ex3PointsClass2']

data3 = data3['xWeek6Ex3PointsClass3']

# put your code here

#

# We will use the function ['minimize'](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.minimize.html) from scipy. Check the documentation of that function. We will set the 'jac' parameter of the optimizer to 'True', which implies that the (objective) function that we provide as an input should return both the value of the loss and the value of its gradient.

#

# For this first exercise, you are asked to write a function $f(W)$ wich takes as arguments a vector $W$ containing all the parameters of your network (as a first step consider building a network with only a few hidden units, in order to make sure the model is working). As indicated above, the function should return (1) the value of the binary cross entropy for the given set of weights and (2) the value of the gradient derived through Backpropagation (as we set the value of 'jac' to True).

#

# We will split the writing of your function into several steps. Once you have coded each step, you should gather them together in a single $fun(W)$ body that you will then pass as input to the minimize function.

#

# ### Exercise 4.a.

#

# Start Load the data using the lines below and plot it using scatter()

# In[ ]:

from scipy.optimize import minimize

import scipy.io as sio

data1 = sio.loadmat('pointsClass1Week6.mat')

data2 = sio.loadmat('pointsClass2Week6.mat')

from numpy import linalg as LA

data1 = data1['pointsClass1Week6']

data2 = data2['pointsClass2Week6']

# put your code here

# ### Exercise 4.b

#

# As indicated above, we want to apply the network to the simple binary dataset that you loaded above. We want to build an architecture similar to the one shown above, except that, since we only consider a 2D dataset, we only need 2 inputs. we want our activation function to be all sigmoid. Start by defining the function sigmoid and the gradient of this function. Once you are done, check your derivative. Also make sure you can compute the entrywise sigmoid on any numpy array if the input to your function is a numpy array.

# In[ ]:

# ### Exercise 4.c

#

# Now that we have the sigmoid and its gradient, we will code the loss. As you might remember from previous labs, the MLPClassifier from scikit-learn optimizes the 'log-loss function' (a.k.a Binary cross entropy) which reads for a set of $N$ prototypes $x_i$ with binary $0/1$ targets,

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) $$

#

# Here the probability $p_{W}(x_i)$ is the output of your network. Instead of minimizing this function directly, we will consider its $\ell_2$ regularized version

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) + \lambda \sum_{j\in\text{weights}\setminus \text{bias}} W^2_j$$

#

# Where the $W_j$ encode the weights. Code that function for a given labeled dataset $X,t$ such as given above, a set of weights stored in the vector $W$ (you can use a list or a dictionnary if you want but ultimately, you will need to store them in a numpy vector for use with the optimization routine). Note that one typically does not regularize the bias terms.

# In[ ]:

# ### Exercise 4.d.

#

# Towards Backpropagation. The example above is relatively simple so that backpropagation is not really needed. Compute the gradient of the Binary cross entropy loss with respect to the weights.

# In[ ]:

# ### Exercise 4.e.

#

# Combine all your previous steps into a single function and pass this function to the 'minimize' routine. To choose the initial value for the weights, a common heuristic is for the n^th layer to be initialized unformly at random in the interval $[-\varepsilon_n, \varepsilon_n]$ with $\epsilon_n$ defined as $\sqrt{\frac{2}{\text{size layer}_{\ell-1} + \text{size layer}_{\ell} }}$

#

#

# In[ ]:

# ### Bonus 1

#

# Change the activation to the Relu and reapeat the steps above. How do you compute the gradient in this case?

# In[ ]:

# ### Exercise 5.

#

# Coding a One hidden layer neural network is good to warm up but to really understand backpropagation, we will not add a few hidden layers. Still relying on the code that you developed above and using backpropagation, train a depth 4 neural network with 10 neurons in each layer on the binary dataset.

# ### Exercise 5a.

#

# In this exercise, because of the multiple layers of the network, you will get to really code backpropagation. To do this, follow the steps below

# (see [Bishop Pattern Recognition and Machine Learning](http://users.isr.ist.utl.pt/~wurmd/Livros/school/Bishop%20-%20Pattern%20Recognition%20And%20Machine%20Learning%20-%20Springer%20%202006.pdf)) for more details.

#

# - Take any of the sample (Once you have completed one step of backprop, you should re-apply it to the next sample to get the gradient contribution for that next sample and so on). Forward propagate that sample through the network and compute all the activations $z = \sigma(a_i)$ and input $a_i$ for each unit.

#

# - Evaluate the $\delta_k = y_k(x_n) - t_{n,k}$ for all the output units.

#

# - Backpropagate the $\delta_k$ using the formula

#

# $$\delta_j = \sigma'(a_j) \sum_{k} w_{k,j} \delta_k$$

#

# - Finally, compute the derivatives as

#

# $$\frac{\partial \text{loss}}{\partial w_{ij}} = \delta_j z_i$$

#

# Note that when coding the backpropagation algorithm, you don't need to account for the regularization part of the loss as you can just add the gradient of the regularization to the result of the backpropagation algorithm.

#

# In[ ]:

from scipy.optimize import minimize

# put your code here

# ### Exercise 6.

#

# Extending to multiple classes. Load the data using the lines given below, visualize it with scatter. How can you extend the Binary cross entropy function to the multiclass problem?

# In[7]:

import scipy.io as sio

data1 = sio.loadmat('xWeek6Ex3PointsClass1.mat')

data2 = sio.loadmat('xWeek6Ex3PointsClass2.mat')

data3 = sio.loadmat('xWeek6Ex3PointsClass3.mat')

from numpy import linalg as LA

data1 = data1['xWeek6Ex3PointsClass1']

data2 = data2['xWeek6Ex3PointsClass2']

data3 = data3['xWeek6Ex3PointsClass3']

# put your code here

#

# We will use the function ['minimize'](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.minimize.html) from scipy. Check the documentation of that function. We will set the 'jac' parameter of the optimizer to 'True', which implies that the (objective) function that we provide as an input should return both the value of the loss and the value of its gradient.

#

# For this first exercise, you are asked to write a function $f(W)$ wich takes as arguments a vector $W$ containing all the parameters of your network (as a first step consider building a network with only a few hidden units, in order to make sure the model is working). As indicated above, the function should return (1) the value of the binary cross entropy for the given set of weights and (2) the value of the gradient derived through Backpropagation (as we set the value of 'jac' to True).

#

# We will split the writing of your function into several steps. Once you have coded each step, you should gather them together in a single $fun(W)$ body that you will then pass as input to the minimize function.

#

# ### Exercise 4.a.

#

# Start Load the data using the lines below and plot it using scatter()

# In[ ]:

from scipy.optimize import minimize

import scipy.io as sio

data1 = sio.loadmat('pointsClass1Week6.mat')

data2 = sio.loadmat('pointsClass2Week6.mat')

from numpy import linalg as LA

data1 = data1['pointsClass1Week6']

data2 = data2['pointsClass2Week6']

# put your code here

# ### Exercise 4.b

#

# As indicated above, we want to apply the network to the simple binary dataset that you loaded above. We want to build an architecture similar to the one shown above, except that, since we only consider a 2D dataset, we only need 2 inputs. we want our activation function to be all sigmoid. Start by defining the function sigmoid and the gradient of this function. Once you are done, check your derivative. Also make sure you can compute the entrywise sigmoid on any numpy array if the input to your function is a numpy array.

# In[ ]:

# ### Exercise 4.c

#

# Now that we have the sigmoid and its gradient, we will code the loss. As you might remember from previous labs, the MLPClassifier from scikit-learn optimizes the 'log-loss function' (a.k.a Binary cross entropy) which reads for a set of $N$ prototypes $x_i$ with binary $0/1$ targets,

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) $$

#

# Here the probability $p_{W}(x_i)$ is the output of your network. Instead of minimizing this function directly, we will consider its $\ell_2$ regularized version

#

# $$−\frac{1}{N}\sum_{i=1}^N (y_i \log(p_{W}(x_i))+(1−y_i)\log(1−p_{W}(x_i))) + \lambda \sum_{j\in\text{weights}\setminus \text{bias}} W^2_j$$

#

# Where the $W_j$ encode the weights. Code that function for a given labeled dataset $X,t$ such as given above, a set of weights stored in the vector $W$ (you can use a list or a dictionnary if you want but ultimately, you will need to store them in a numpy vector for use with the optimization routine). Note that one typically does not regularize the bias terms.

# In[ ]:

# ### Exercise 4.d.

#

# Towards Backpropagation. The example above is relatively simple so that backpropagation is not really needed. Compute the gradient of the Binary cross entropy loss with respect to the weights.

# In[ ]:

# ### Exercise 4.e.

#

# Combine all your previous steps into a single function and pass this function to the 'minimize' routine. To choose the initial value for the weights, a common heuristic is for the n^th layer to be initialized unformly at random in the interval $[-\varepsilon_n, \varepsilon_n]$ with $\epsilon_n$ defined as $\sqrt{\frac{2}{\text{size layer}_{\ell-1} + \text{size layer}_{\ell} }}$

#

#

# In[ ]:

# ### Bonus 1

#

# Change the activation to the Relu and reapeat the steps above. How do you compute the gradient in this case?

# In[ ]:

# ### Exercise 5.

#

# Coding a One hidden layer neural network is good to warm up but to really understand backpropagation, we will not add a few hidden layers. Still relying on the code that you developed above and using backpropagation, train a depth 4 neural network with 10 neurons in each layer on the binary dataset.

# ### Exercise 5a.

#

# In this exercise, because of the multiple layers of the network, you will get to really code backpropagation. To do this, follow the steps below

# (see [Bishop Pattern Recognition and Machine Learning](http://users.isr.ist.utl.pt/~wurmd/Livros/school/Bishop%20-%20Pattern%20Recognition%20And%20Machine%20Learning%20-%20Springer%20%202006.pdf)) for more details.

#

# - Take any of the sample (Once you have completed one step of backprop, you should re-apply it to the next sample to get the gradient contribution for that next sample and so on). Forward propagate that sample through the network and compute all the activations $z = \sigma(a_i)$ and input $a_i$ for each unit.

#

# - Evaluate the $\delta_k = y_k(x_n) - t_{n,k}$ for all the output units.

#

# - Backpropagate the $\delta_k$ using the formula

#

# $$\delta_j = \sigma'(a_j) \sum_{k} w_{k,j} \delta_k$$

#

# - Finally, compute the derivatives as

#

# $$\frac{\partial \text{loss}}{\partial w_{ij}} = \delta_j z_i$$

#

# Note that when coding the backpropagation algorithm, you don't need to account for the regularization part of the loss as you can just add the gradient of the regularization to the result of the backpropagation algorithm.

#

# In[ ]:

from scipy.optimize import minimize

# put your code here

# ### Exercise 6.

#

# Extending to multiple classes. Load the data using the lines given below, visualize it with scatter. How can you extend the Binary cross entropy function to the multiclass problem?

# In[7]:

import scipy.io as sio

data1 = sio.loadmat('xWeek6Ex3PointsClass1.mat')

data2 = sio.loadmat('xWeek6Ex3PointsClass2.mat')

data3 = sio.loadmat('xWeek6Ex3PointsClass3.mat')

from numpy import linalg as LA

data1 = data1['xWeek6Ex3PointsClass1']

data2 = data2['xWeek6Ex3PointsClass2']

data3 = data3['xWeek6Ex3PointsClass3']

# put your code here