#!/usr/bin/env python

# coding: utf-8

# Fitting and model building

#

# #### **Quick intro to the following packages**

# - The core package `iminuit`.

# - Model building and a word on the Scikit-HEP affiliated project/package `zfit`.

#

#

#  #

# Python wrapper to Minuit2 minimization and error computation package

#

# The `iminuit` package provides Python bindings for the [C++ Minuit2 library](https://root.cern.ch/guides/minuit2-manual) maintained at CERN, which is effectively the only fitting engine used in HEP. The package has no external dependency apart from NumPy.

#

# `iminuit` gives the user full power of the engine internals. That can be really useful, but it often demands a certain level of expertise. It is hence no wonder that many fitting libraries around build atop it. That's in particular the case for Astronomy.

#

# Note: feel free to complement the introduction below with the several *great tutorials* available from the [GitHub repository](https://github.com/scikit-hep/iminuit). Indeed what is shown here is just an appetiser.

# ### **1. A very simple example**

#

# Minimisation of a function:

# In[ ]:

from iminuit import Minuit

def fcn(x, y, z):

return (x - 2) ** 2 + (y - 3) ** 2 + (z - 4) ** 2

fcn.errordef = Minuit.LEAST_SQUARES

m = Minuit(fcn, x=0, y=0, z=0)

m.migrad() # run optimiser

# In[ ]:

m.hesse() # run covariance estimator

# ### **2. A more evolved example**

#

# Let's look at a little sample of track information generated with a toy.

#

#

# Acknowledgements

#

# This mini-tutorial is kindly provided by Hans Dembinski (TU Dortmund), with minor modifications.

#

# In[ ]:

import uproot

f = uproot.open("data/sample_tracks.root")

event = f["event"]

# In[ ]:

event.show()

# Legend :-):

# * mc_trk_len: number of true tracks in event

# * mc_trk_px: x-component of true momentum of particle (variable-length array)

# * mc_trk_py: y-component of true momentum of particle (variable-length array)

# * mc_trk_pz: z-component of true momentum of particle (variable-length array)

# * trk_len: number of reconstructed tracks in event

# * trk_px: x-component of momentum of reconstructed track (variable-length array)

# * trk_py: y-component of momentum of reconstructed track (variable-length array)

# * trk_pz: z-component of momentum of reconstructed track (variable-length array)

# * trk_imc: index of matched true particle or -1 (variable-length array)

# Get the content of branches as arrays (don't use this in large trees - you will exhaust the computer memory):

# In[ ]:

trk_len = event["trk_len"].array()

mc_trk_len = event["mc_trk_len"].array()

#trk_mom = event.arrays(["trk_px", "trk_py", "trk_pz"], library="pd")

trk_px = event["trk_px"].array(library="pd")

# In[ ]:

# first ten entries, this is a normal numpy array

trk_len[:10]

# In[ ]:

import numpy as np

print(f"{np.sum(trk_len == 0)} events with zero tracks")

# In[ ]:

import matplotlib.pyplot as plt

plt.hist(trk_len);

# In[ ]:

# first ten entries, trk_px is a special jagged array

trk_px[:10]

# In[ ]:

plt.hist(trk_px);

# #### **Fits**

#

# * Typical analysis work flow:

# 1. Pre-select data and make compact data trees

# 2. Make histograms and profiles from tree data

# 3. Fit histograms and profiles to extract physical parameters

# * Many specialized fitting tools for individual purposes, e.g.:

# - [scipy.optimize.curve_fit](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.curve_fit.html#scipy.optimize.curve_fit)

# - [RooFit](https://root.cern.ch/roofit)

# * Generic method

# - Select mathematical model (PDF) which describes data

# - Use maximum-likelihood method to adapt model to data

# * Specialised methods give fast results for some types of problems

# * Generic method allows one to do advanced things not implemented in specialised methods

# Let's fit the $p_x$ distribution with a normal distribution to extract the parameters $\mu$ and $\sigma$.

#

# - To apply a maximum-likelihood method, we need a statistical model that describes the data

# - Assumption 1: original data before histogramming is normal distributed; pdf is $\mathcal{N}(\mu, \sigma)$ with parameters $\mu$ and $\sigma$

# - Assumption 2: count in histogram cell is Poisson distributed $P(n_i, \lambda_i)$

# - Expected content in a histogram cell is $\lambda_i = N \int_{x_i}^{x_{i+1}} \mathcal{N}(\mu, \sigma) \, \text{d}x$, where $N$ is total number of events

# - Likelihood is joint probability of data under model

# $L = \prod_i P(n_i, \lambda_i)$, need to maximize this by varying model parameters $\mu$ and $\sigma$

# - Technical step to achieve this: Minimize score $S(\mu,\sigma) = -2\ln L(\mu, \sigma) = -2\sum_i \ln P(n_i; \lambda_i)$

# In[ ]:

import boost_histogram as bh

xaxis = bh.axis.Regular(50, -2, 2)

h_px = bh.Histogram(xaxis)

h_px.fill(trk_px)

# In[ ]:

# get data from before

px_axis = h_px.axes[0]

cx = px_axis.centers

dx = px_axis.widths

xe = px_axis.edges

n = h_px.view()

plt.errorbar(cx, n, n**0.5, dx, fmt="o", label="data");

# In[ ]:

# scipy has efficient and correct implementations for most statistical distributions

from scipy.stats import norm, poisson

n_total = np.sum(n)

def score(mu, sigma):

cdf = norm(mu, sigma).cdf

lambdas = n_total * (cdf(xe[1:]) - cdf(xe[:-1]))

probs = poisson.pmf(n, lambdas)

return -2 * np.sum(np.log(probs + 1e-100)) # avoid taking log of zero

# In[ ]:

from matplotlib.ticker import LogLocator

mus = np.linspace(-1, 1, 20)

sigmas = np.linspace(1e-10, 2, 20)

g_mu, g_sigma = np.meshgrid(mus, sigmas)

g_score = np.vectorize(score)(g_mu, g_sigma)

plt.contourf(g_mu, g_sigma, g_score)

plt.xlabel("$\mu$")

plt.ylabel("$\sigma$")

plt.colorbar();

# In[ ]:

m = Minuit(score, mu=0, sigma=1)

m.errordef = Minuit.LEAST_SQUARES

m.migrad()

mu, sigma = m.values

s_mu, s_sigma = m.errors

plt.errorbar(cx, n, n ** 0.5, dx, fmt="o", label="data");

plt.plot(cx, norm(mu, sigma).pdf(cx) * n_total * dx, label="fit")

plt.title(f"$\mu = {mu:.3f} \pm {s_mu:.3f}, \quad \sigma = {sigma:.3f} \pm {s_sigma:.3f}$")

plt.legend();

# Check whether the fit is good:

# - by looking at *pull distribution*

# - $(n_i - \lambda_i) / \lambda_i$ for Poisson distribute data

# - by checking the $\chi^2$ value against the degrees of freedom

# - Simple check: $\chi^2/n_\text{dof}$ should be about 1

# - Better check: chance probability $\int_{\chi^2_\text{observed}}^{-\infty} P(\chi^2; n_\text{dof}) \, \text{d}\chi^2$ to obtain a higher value than the observed should not be too small

# In[ ]:

cdf = norm(mu, sigma).cdf

n_pred = (cdf(xe[1:]) - cdf(xe[:-1])) * n_total

n_sigma = n_pred ** 0.5 # for Poisson-distributed data

pull = (n - n_pred) / n_sigma

plt.errorbar(cx, pull, np.ones_like(pull), fmt="o")

plt.axhline(0, ls="--")

# degrees of freedom: number of fitted bins minus number of fitted parameters

n_dof = len(n) - 2 # need to subtract two fitted parameters

chi2_obs = np.sum(pull ** 2)

print(f"chi2/ndof = {chi2_obs} / {n_dof} = {chi2_obs / n_dof}")

from scipy.stats import chi2

chance_prob = 1 - chi2(n_dof).cdf(chi2_obs)

print(f"{chance_prob}")

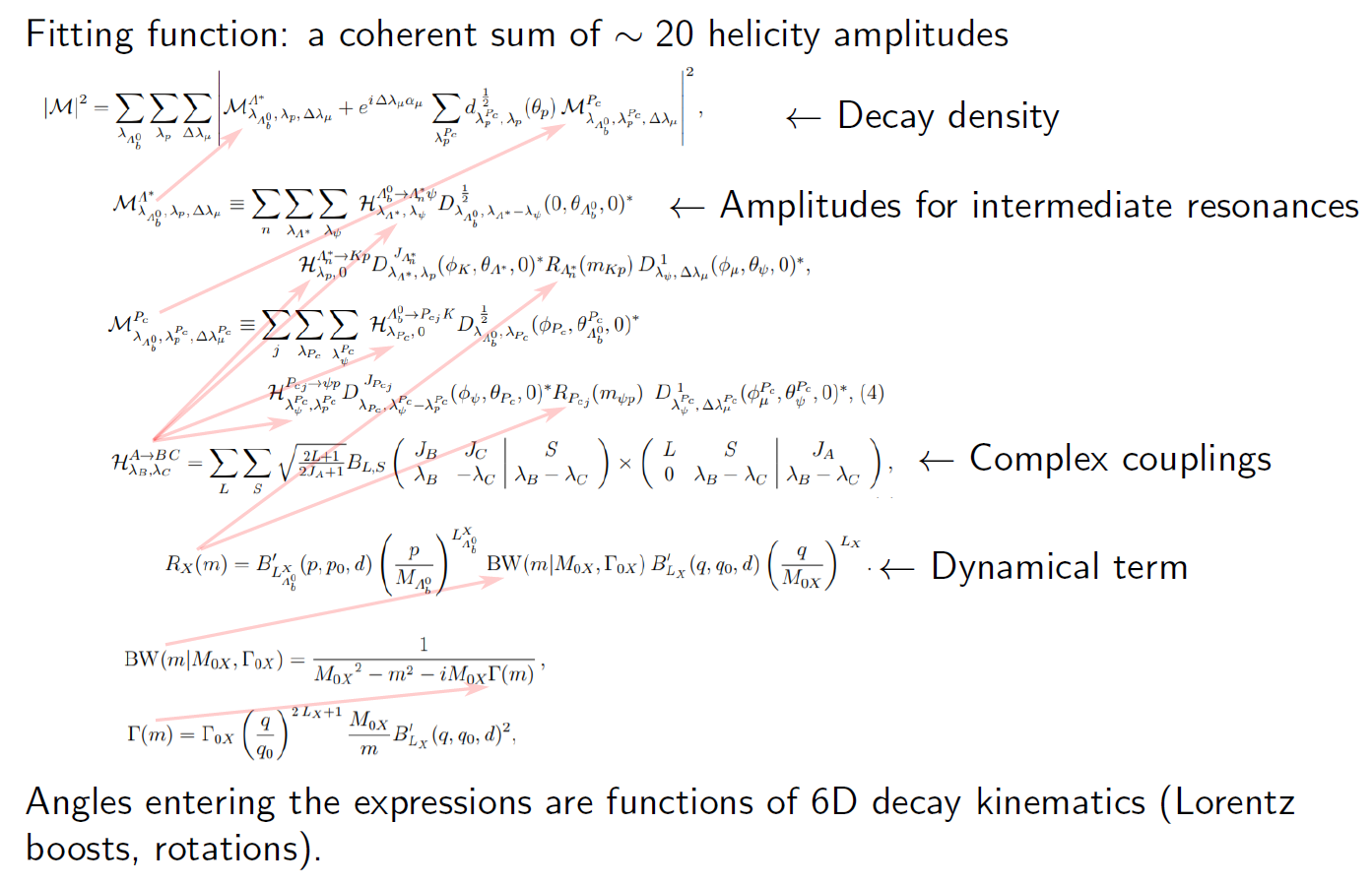

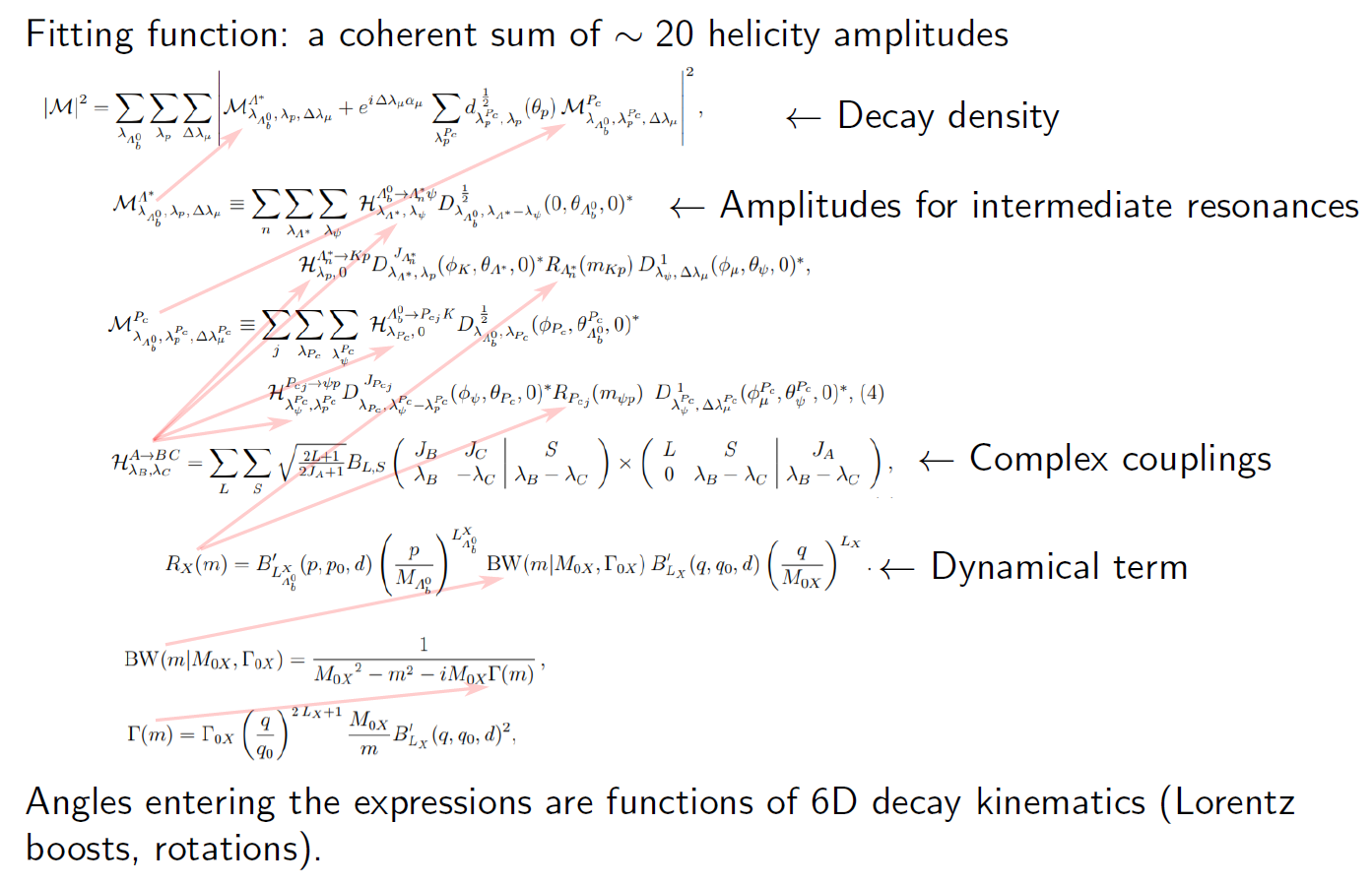

# ### **3. Towards realistic HEP data use-cases**

#

# HEP analyses typically involve far more sophisticated fitting work. It is often about **data model building** and performing **(un)binned maximum likelihood fits** to describe experimental distributions. Also, fitting is often performed to extract decay mode yields atop the model parameters.

#

# The `iminuit` package has functionality to performed so-called *simultaneous fits*,

# where the models being fitted to two or more datasets share at least a parameter.

# But it is not meant as a package providing commonly-used goodies and a "framework" for (complex) model building. Imagine how cumbersome it would be to verbosely describe the model below for an amplitude analysis ...!

# #### **A simple binned extended maximum-likelihood fit example**

#

# (Taken straight from one of the excellent `iminuit` tutorials.)

#

# The binned extended maximum-likelihood fit is strictly the binned equivalent of the corresponding unbinned fit. One sums the logarithm of Poisson probabilities for the observed counts as a function of the predicted counts in this case (times -1 to turn maximization into minimization).

#

# Instead of a density, you need to provide a cdf of the density in this case (which must be vectorized). There is no need to separately return the total integral like the unbinned case. The parameters are the same as in the unbinned extended fit.

# In[ ]:

from iminuit import cost

from scipy.stats import norm, uniform

xrange = -1, 1

rng = np.random.default_rng(1)

xdata = rng.normal(0, 0.1, size=400)

xdata = np.append(xdata, rng.uniform(*xrange, size=1000))

def model_pdf(x, z, mu, sigma):

return (z * norm.pdf(x, mu, sigma) +

(1 - z) * uniform.pdf(x, xrange[0], xrange[1] - xrange[0]))

c = cost.UnbinnedNLL(xdata, model_pdf)

m = Minuit(c, z=0.4, mu=0, sigma=0.2)

m.limits["sigma"] = (0, None)

m.limits["z"] = (0, 1)

m.limits["mu"] = (-1, 1)

m.migrad()

# In[ ]:

n, xe = np.histogram(xdata, bins=50, range=xrange)

cx = 0.5 * (xe[1:] + xe[:-1])

dx = np.diff(xe)

plt.errorbar(cx, n, n ** 0.5, fmt="ok")

xm = np.linspace(xe[0], xe[-1])

plt.plot(xm, model_pdf(xm, *[p.value for p in m.init_params]) * len(xdata) * dx[0],

ls=":", label="init")

plt.plot(xm, model_pdf(xm, *m.values) * len(xdata) * dx[0], label="fit")

plt.legend();

# #### **Scaling up in complexity**

#

# In spite of the example above being a *simple* example of an extended maximum-likelihood fit to binned data, the code is, honestly, very verbose.

# Clearly much of the above could be simplified for the general user. And now imagine that the (realistic example!) function to fit is not a uniform+normal distribution but rather this:

#  # [Example taken from a presentation by Anton Poluektov.]

# The ROOT framework provides `RooFit` as a model fitting library. The PyHEP ecosystem in particular has the `zfit` package, a model fitting library based on TensorFlow and optimised for simple and direct manipulation of probability density functions ...

# [Example taken from a presentation by Anton Poluektov.]

# The ROOT framework provides `RooFit` as a model fitting library. The PyHEP ecosystem in particular has the `zfit` package, a model fitting library based on TensorFlow and optimised for simple and direct manipulation of probability density functions ...

#

#  #

#