#!/usr/bin/env python

# coding: utf-8

# # **Building an Image Similarity System with 🤗 Transformers**

#

# In this notebook, you'll learn to build an image similarity system with 🤗 Transformers. Finding the similarity between a query image and potential candidates is an important use case for information retrieval systems such as reverse image search. At its core, the system tries to answer one question: given a _query_ image and a set of _candidate_ images, which candidates are the most similar to the query image?

#

# ## 🤗 Datasets library

#

# This notebook leverages the [`datasets` library](https://huggingface.co/docs/datasets/) as it seamlessly supports parallel processing, which will come in handy when building this system.

#

# ## Any model and dataset

#

# Although the notebook uses a ViT-based model ([`nateraw/vit-base-beans`](https://huggingface.co/nateraw/vit-base-beans)) and a particular dataset ([Beans](https://huggingface.co/datasets/beans)), it can easily be extended to other vision models and other image datasets. Some notable models you could try:

#

# * [Swin Transformer](https://huggingface.co/docs/transformers/model_doc/swin)

# * [ConvNeXT](https://huggingface.co/docs/transformers/model_doc/convnext)

# * [RegNet](https://huggingface.co/docs/transformers/model_doc/regnet)

#

# The approach presented in the notebook can potentially be extended to other modalities as well.

#

# ---

#

# Before we start, let's install the `datasets` and `transformers` libraries.

#

# In[1]:

get_ipython().system('pip install transformers datasets -q')

# If you're opening this notebook locally, make sure your environment has the latest versions of these libraries installed.

# We also quickly upload some telemetry - this tells us which examples and software versions are getting used so we know where to prioritize our maintenance efforts. We don't collect (or care about) any personally identifiable information, but if you'd prefer not to be counted, feel free to skip this step or delete this cell entirely.

# In[ ]:

from transformers.utils import send_example_telemetry

send_example_telemetry("image_similarity_notebook", framework="pytorch")

# ## Building an image similarity system

#

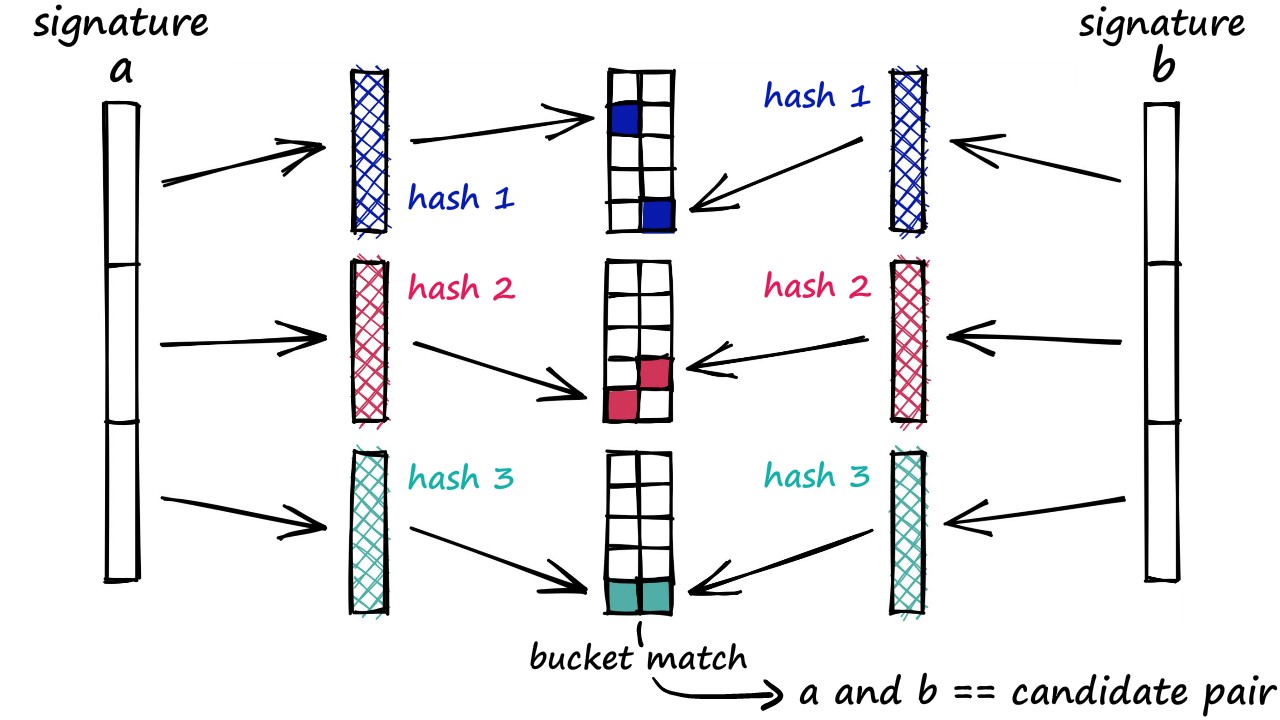

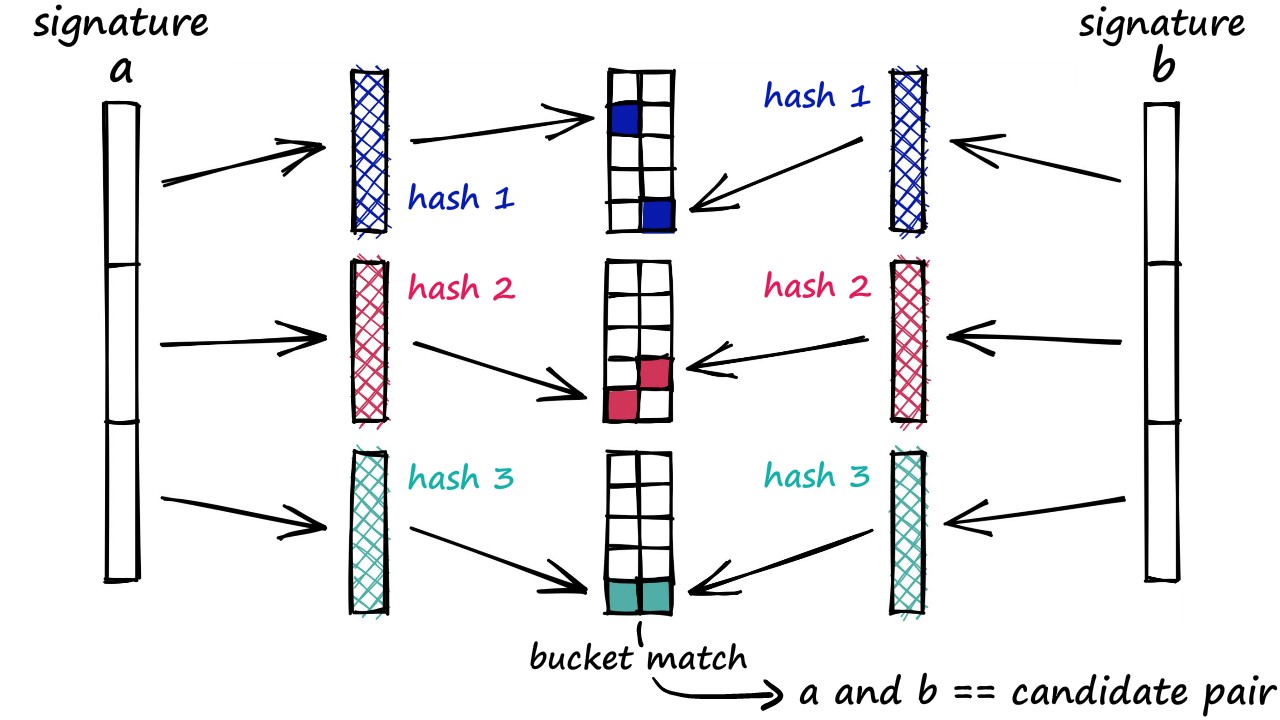

# To build this system, we first need to define how we want to compute the similarity between two images. A popular approach is to compute dense representations (embeddings) of the given images and then use the [cosine similarity metric](https://en.wikipedia.org/wiki/Cosine_similarity) to determine how similar the two images are.
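# As a minimal sketch of the metric itself (not the notebook's own code), cosine similarity is the dot product of two vectors divided by the product of their norms, so it ranges from -1 (opposite directions) to 1 (same direction) regardless of vector length. The toy 3-dimensional vectors are made up for illustration, and the helper name `cosine_similarity` is hypothetical.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two 1-D vectors: dot(a, b) / (||a|| * ||b||)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Toy "embeddings"; real ones would come from the image encoder.
query = np.array([1.0, 2.0, 3.0])
same_direction = np.array([2.0, 4.0, 6.0])  # positively scaled copy of the query
orthogonal = np.array([3.0, 0.0, -1.0])     # dot product with the query is 0

print(cosine_similarity(query, same_direction))  # approximately 1.0
print(cosine_similarity(query, orthogonal))      # approximately 0.0
```

# Because the metric ignores magnitude, two embeddings pointing in the same direction are treated as maximally similar even if one is much larger than the other.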

#

# For this tutorial, we'll use “embeddings” to represent images in vector space. This gives us a meaningful way to compress the high-dimensional pixel space of images (224 x 224 x 3, for example) into something much lower-dimensional (768, for example). The primary advantage of doing this is reduced computation time in the subsequent steps.
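# The size reduction in this example is easy to check with a little arithmetic; the numbers are just the example values from the paragraph above, not properties of every model.

```python
# Compare the number of values in a raw RGB image with the size of one embedding.
height, width, channels = 224, 224, 3  # example input resolution
embedding_dim = 768                     # example embedding size

num_pixel_values = height * width * channels
compression_ratio = num_pixel_values / embedding_dim

print(num_pixel_values)   # 150528
print(compression_ratio)  # 196.0
```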

#

# Don't worry if this doesn't fully make sense yet; we'll discuss these ideas in more detail shortly.

# ### Loading a base model to compute embeddings

#

# "Embeddings" encode the semantic information of images. To compute the embeddings from the images, we'll use a vision model that has some understanding of how to represent the input images in the vector space. Models of this type are also commonly referred to as image encoders.

#

# For loading the model, we leverage the [`AutoModel` class](https://huggingface.co/docs/transformers/model_doc/auto#transformers.AutoModel). It provides an interface for us to load any compatible model checkpoint from the Hugging Face Hub. Alongside the model, we also load the processor associated with the model for data preprocessing.

# In[2]:

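from transformers import AutoImageProcessor, AutoModel

model_ckpt = "nateraw/vit-base-beans"

# The image processor applies the preprocessing (resizing, rescaling, normalization) expected by this checkpoint.
extractor = AutoImageProcessor.from_pretrained(model_ckpt)

# AutoModel loads only the base encoder, without the classification head.
model = AutoModel.from_pretrained(model_ckpt)

# Dimensionality of the embeddings produced by the encoder.
hidden_dim = model.config.hidden_size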

# In this case, the checkpoint was obtained by fine-tuning a [Vision Transformer based model](https://huggingface.co/google/vit-base-patch16-224-in21k) on the [`beans` dataset](https://huggingface.co/datasets/beans). To learn more about the model, just click the model link and check out its model card.
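# The base model's name, `vit-base-patch16-224-in21k`, suggests that each 224 x 224 input is split into 16 x 16 patches. Assuming that reading (you can confirm it through `model.config.image_size` and `model.config.patch_size`), the encoder processes one token per patch plus a `[CLS]` token, each represented by a `hidden_dim`-sized vector:

```python
# Sequence length seen by a ViT encoder, ASSUMING 16x16 patches on 224x224 inputs
# (as the "patch16-224" part of the checkpoint name suggests).
image_size = 224
patch_size = 16

patches_per_side = image_size // patch_size  # 14 patches along each side
num_patches = patches_per_side ** 2          # 196 patches in total
seq_len = num_patches + 1                    # +1 for the [CLS] token

print(num_patches, seq_len)  # 196 197
```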

# When loading the model, Transformers warns that some weights from the checkpoint were not used: these belong to the `classifier` head, which `AutoModel` does not include. _Why did we not use `AutoModelForImageClassification`?_

#

# This is because we want to obtain dense representations of the images and not discrete categories, which are what `AutoModelForImageClassification` would have provided.
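# To make the difference concrete, here is a NumPy sketch with random arrays standing in for real model outputs. It assumes the encoder returns a `last_hidden_state` of shape `(batch_size, sequence_length, hidden_dim)` and that the `[CLS]` token (position 0) serves as the image embedding; that pooling choice is one common option, not necessarily the one used later in this notebook. The random 3-class "head" mirrors the three beans categories and is likewise only a stand-in for a trained classifier.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for an encoder's last_hidden_state (random values, illustration only).
batch_size, seq_len, hidden_dim = 4, 197, 768
last_hidden_state = rng.normal(size=(batch_size, seq_len, hidden_dim))

# Dense representation: keep the [CLS] token (position 0) as the image embedding.
embeddings = last_hidden_state[:, 0]
print(embeddings.shape)  # (4, 768)

# A classification head would instead map each embedding to one score per class.
num_classes = 3
classifier_weights = rng.normal(size=(hidden_dim, num_classes))
logits = embeddings @ classifier_weights
print(logits.shape)  # (4, 3)
```

# The 768 numbers per image keep fine-grained information that similarity search can exploit, whereas the 3 class scores only say which category an image most likely belongs to.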

#

# Then comes another question - _why this checkpoint in particular?_

#

# As mentioned earlier, we're building the system around a specific dataset. So, instead of using a generalist model (like the [ones trained on the ImageNet-1k dataset](https://huggingface.co/models?dataset=dataset:imagenet-1k&sort=downloads), for example), it's better to use a model that has been fine-tuned on that dataset. That way, the underlying model has a better understanding of the input images.

# Now that we have a model for computing the embeddings, we need some candidate images to query against.

# ### Loading the dataset for candidate images

#

# To find similar images, we need a set of candidate images to query against. We'll use the `train` split of the [`beans` dataset](https://huggingface.co/datasets/beans) for that purpose. To learn more about the dataset, follow the link and explore its dataset card.

# In[3]:

from datasets import load_dataset

dataset = load_dataset("beans")

# In[4]:

# Check a sample image.

dataset["train"][0]["image"]

# The dataset has three columns (features):

# In[5]:

dataset["train"].features

# Next, we set up two dictionaries for our upcoming utilities:

#

# * `label2id` which maps the class labels to integers.

# * `id2label`, which does the opposite of `label2id`.

# In[6]:

labels = dataset["train"].features["labels"].names

# Build both mappings in one pass over the class names.
label2id, id2label = dict(), dict()
for i, label in enumerate(labels):
    label2id[label] = i
    id2label[i] = label
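# The same mappings can also be written as dict comprehensions. The label names below are assumed to match the beans classes and use hypothetical `toy_` names so they don't overwrite the notebook's variables; the final `assert` checks that the two dictionaries invert each other.

```python
# Assumed label names, for illustration only; the notebook reads them from the dataset features.
toy_labels = ["angular_leaf_spot", "bean_rust", "healthy"]

# Equivalent to the loop above, expressed as dict comprehensions.
toy_label2id = {label: i for i, label in enumerate(toy_labels)}
toy_id2label = {i: label for i, label in enumerate(toy_labels)}

# Round trip: label -> id -> label must give back the original label.
assert all(toy_id2label[toy_label2id[label]] == label for label in toy_labels)

print(toy_label2id["healthy"])  # 2
print(toy_id2label[0])          # angular_leaf_spot
```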

# With these components, we can proceed to build our image similarity system. To demonstrate this, we'll use 100 samples from the candidate image dataset to keep the overall runtime short.

# In[7]:

num_samples = 100

seed = 42

candidate_subset = dataset["train"].shuffle(seed=seed).select(range(num_samples))
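# Conceptually, `shuffle(seed=seed).select(range(num_samples))` draws a reproducible random subset: permute all indices with a fixed seed, then keep the first `num_samples`. The standard-library sketch below illustrates only the idea; 🤗 Datasets uses its own random generator, so it will not pick the same indices, and both `seeded_subset` and `num_items=1000` are hypothetical.

```python
import random


def seeded_subset(num_items: int, num_samples: int, seed: int) -> list[int]:
    """Shuffle all indices with a fixed seed, then keep the first `num_samples`."""
    indices = list(range(num_items))
    random.Random(seed).shuffle(indices)  # local RNG, so global random state is untouched
    return indices[:num_samples]


first = seeded_subset(num_items=1000, num_samples=100, seed=42)
second = seeded_subset(num_items=1000, num_samples=100, seed=42)
print(first == second)  # True: same seed, same subset
```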

# Below, you can find a pictorial overview of the process for fetching similar images.

#
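# Whatever the exact pipeline, its final step ranks the candidates by how close their embeddings are to the query's embedding. Below is a minimal NumPy sketch of that ranking with cosine similarity, using random 768-dimensional vectors as stand-ins for real embeddings; the helper `top_k_similar` and the toy data are hypothetical. Normalizing the vectors first turns cosine similarity into a single matrix-vector product, which stays cheap even with many candidates.

```python
import numpy as np


def top_k_similar(query: np.ndarray, candidates: np.ndarray, k: int = 5) -> tuple[np.ndarray, np.ndarray]:
    """Return indices and cosine scores of the k candidates most similar to `query`.

    query:      (hidden_dim,) embedding of the query image
    candidates: (num_candidates, hidden_dim) embeddings of the candidate images
    """
    # L2-normalize so that a plain dot product equals cosine similarity.
    query_unit = query / np.linalg.norm(query)
    candidates_unit = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    scores = candidates_unit @ query_unit  # shape: (num_candidates,)
    top_indices = np.argsort(-scores)[:k]  # highest scores first
    return top_indices, scores[top_indices]


# Toy data: 100 random candidate embeddings and a query that is a slightly noisy copy of candidate 7.
rng = np.random.default_rng(42)
toy_candidates = rng.normal(size=(100, 768))
toy_query = toy_candidates[7] + 0.01 * rng.normal(size=768)

indices, scores = top_k_similar(toy_query, toy_candidates, k=3)
print(indices, scores)  # candidate 7 should come first, with a score close to 1
```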