#!/usr/bin/env python

# coding: utf-8

# # Using HEPData

# In[ ]:

import json

import pyhf

import pyhf.contrib.utils

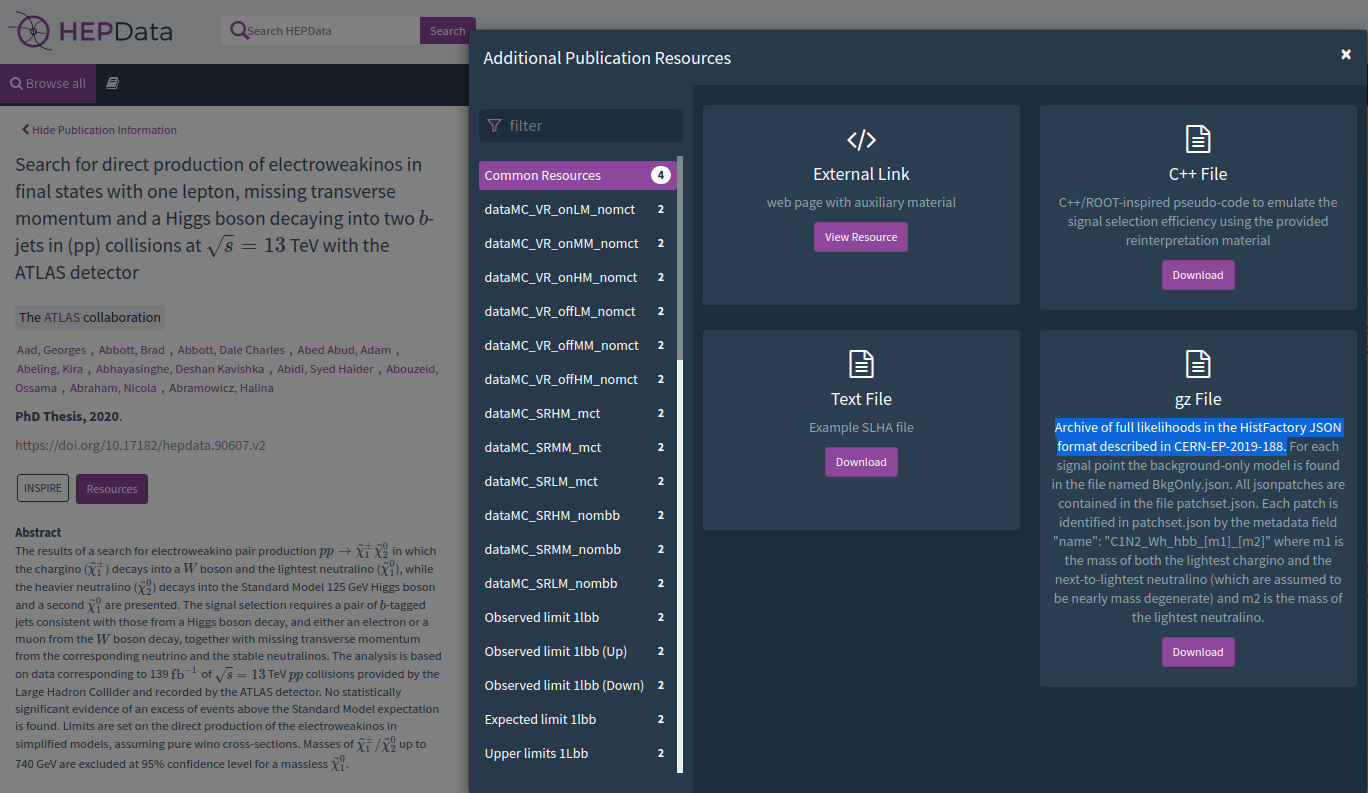

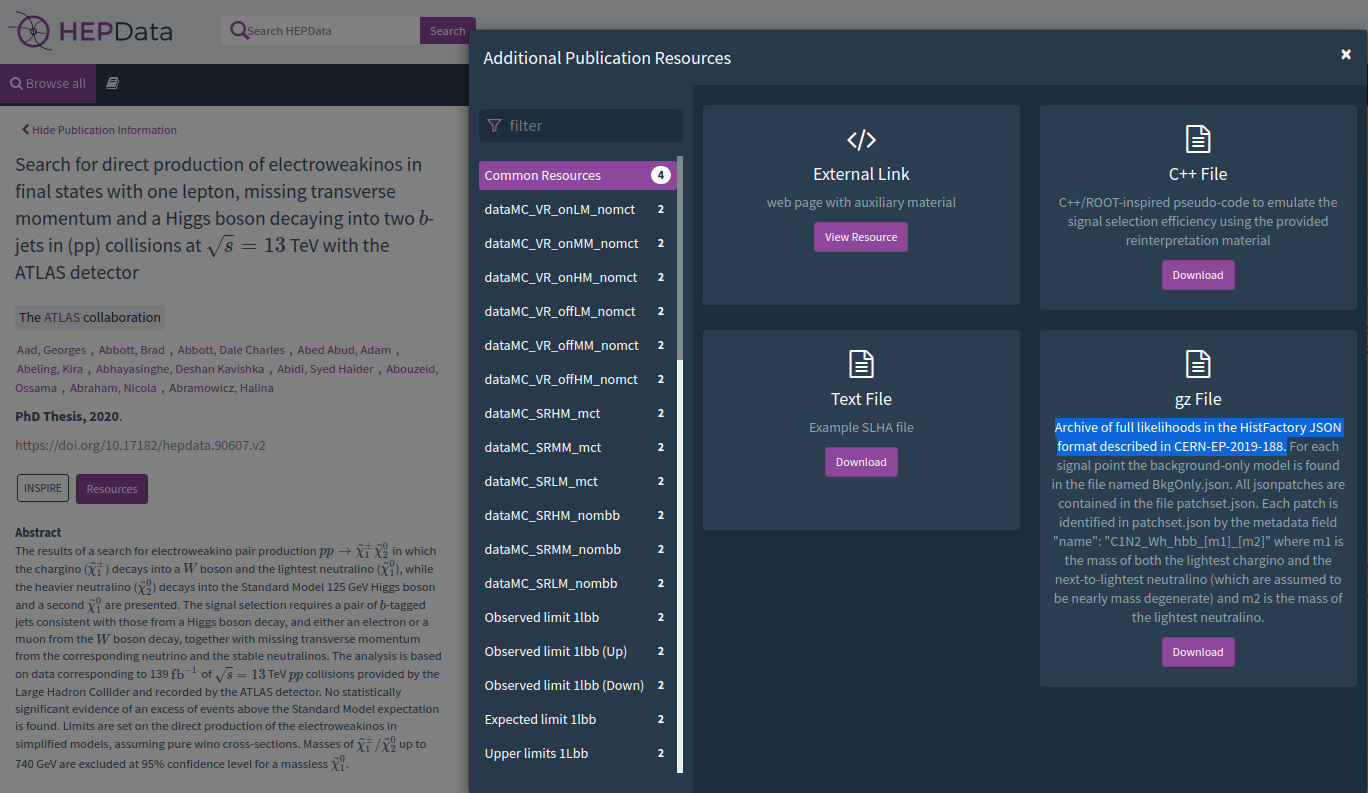

# ## Preserved on HEPData

# As of this tutorial, ATLAS has [published 7 full likelihoods to HEPData](https://pyhf.readthedocs.io/en/v0.6.1/citations.html#published-likelihoods).

#

# Let's explore the 1Lbb workspace a little bit, shall we?

# ### Getting the Data

#

# We'll use the `pyhf[contrib]` extra (which relies on `requests` and `tarfile`) to download the likelihoods from their HEPData-minted DOI and extract the files we need.

# In[ ]:

pyhf.contrib.utils.download(
    "https://doi.org/10.17182/hepdata.90607.v3/r3", "1Lbb-likelihoods"
)

# This will nicely download and extract everything we need.
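# For the curious, the extraction half of that step can be sketched with the standard library alone. This is a minimal sketch, not the `pyhf.contrib.utils.download` source: the helper name `extract_tarball_bytes` is hypothetical, fetching the bytes (which `pyhf[contrib]` does with `requests`) is left out, and it assumes the whole archive is already in memory. It also refuses archive members that would land outside the destination directory.

```python
import io
import tarfile
from pathlib import Path


def extract_tarball_bytes(data: bytes, dest: str) -> list:
    """Extract an in-memory (optionally compressed) tarball into dest.

    Hypothetical helper: covers only the extract step, not the download.
    Returns the sorted member names that were extracted.
    """
    dest_path = Path(dest).resolve()
    dest_path.mkdir(parents=True, exist_ok=True)
    # mode "r:*" lets tarfile auto-detect gzip/bz2/xz compression
    with tarfile.open(fileobj=io.BytesIO(data), mode="r:*") as tar:
        members = tar.getmembers()
        for member in members:
            # Reject members whose path would escape dest (path traversal)
            target = (dest_path / member.name).resolve()
            if target != dest_path and dest_path not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons ship extraction filters; "data" is the safe preset
            tar.extractall(dest_path, filter="data")
        else:
            tar.extractall(dest_path)
    return sorted(member.name for member in members)
```

# Checking every member path before extracting anything means a malicious archive fails as a whole instead of being half-written to disk.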

# In[ ]:

get_ipython().system('ls -lavh 1Lbb-likelihoods')

# ## Instantiate our objects

#

# We have a background-only workspace `BkgOnly.json` and a signal patchset collection `patchset.json`. Let's create our Python objects and play with them:

# In[ ]:

with open("1Lbb-likelihoods/BkgOnly.json") as bkg_file:
    spec = json.load(bkg_file)

with open("1Lbb-likelihoods/patchset.json") as patchset_file:
    patchset = pyhf.PatchSet(json.load(patchset_file))

# So what did the analyzers give us for signal patches?

# ## Patching in Signals

#

# Let's look at this [`pyhf.PatchSet`](https://pyhf.readthedocs.io/en/v0.6.1/_generated/pyhf.patchset.PatchSet.html#pyhf.patchset.PatchSet) object which provides a user-friendly way to interact with many signal patches at once.

#

# ### PatchSet

# In[ ]:

patchset

# Oh wow, we've got 125 patches. What information does it have?

# In[ ]:

print(f"description: {patchset.description}")
print(f"    digests: {patchset.digests}")
print(f"     labels: {patchset.labels}")
print(f" references: {patchset.references}")
print(f"    version: {patchset.version}")

# So we've got a useful description of the signal patches... there's a digest. Does that match the background-only workspace we have?

# In[ ]:

pyhf.utils.digest(spec)

# It does! In fact, this sort of verification check will be done automatically when applying patches using `pyhf.PatchSet` as we will see shortly. To manually verify, simply run `pyhf.PatchSet.verify` on the workspace. No error means everything is fine. It will loudly complain otherwise.

# In[ ]:

patchset.verify(spec)

# No error, whew. Let's move on.
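# The digest check boils down to hashing a canonical serialization of the JSON spec and comparing it with the value stored in the patchset. A minimal sketch, assuming (not confirmed here) that the hash is taken over a key-sorted JSON dump; `json_digest` and `verify_digest` are hypothetical names, not the `pyhf.utils.digest` or `PatchSet.verify` implementations.

```python
import hashlib
import json


def json_digest(obj, algorithm: str = "sha256") -> str:
    """Hash a JSON-serializable object independently of key order.

    Assumption: mirrors the idea behind pyhf.utils.digest (hash of a
    key-sorted JSON dump); it is not pyhf's source code.
    """
    stringified = json.dumps(obj, sort_keys=True, ensure_ascii=False).encode("utf8")
    return hashlib.new(algorithm, stringified).hexdigest()


def verify_digest(spec, digests: dict) -> None:
    """Raise ValueError if spec does not match every digest in digests.

    Hypothetical stand-in for PatchSet.verify: digests maps an algorithm
    name (e.g. "sha256") to the expected hex digest.
    """
    for algorithm, expected in digests.items():
        actual = json_digest(spec, algorithm)
        if actual != expected:
            raise ValueError(
                f"{algorithm} digest mismatch: expected {expected}, got {actual}"
            )
```

# Sorting keys before hashing is what makes two workspaces with the same content but different key order produce the same digest.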

#

# The labels `m1` and `m2` tell us that the signal patches are parametrized in a 2-dimensional space, likely as $m_1 = m(\tilde{\chi}_1^\pm)$ and $m_2 = m(\tilde{\chi}_1^0)$... but I guess we'll see?

#

# The references point to where this dataset is published, which for now is the HEPData record.

#

# Next, the version is the version of the `pyhf` schema that this patchset conforms to (`1.0.0`).

#

# And last, but certainly not least... its patches:

# In[ ]:

patchset.patches

# So we can see all the patches listed both by name, such as `C1N2_Wh_hbb_900_250`, and by a pair of values, such as `(900, 250)`. Why is this useful? The `PatchSet` object acts like a special dictionary that grabs the patch you need based on whichever unique key you provide.

#

# For example, we can look up by name

# In[ ]:

patchset["C1N2_Wh_hbb_900_250"]

# or by the pair of points

# In[ ]:

patchset[(900, 250)]
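# The two-way look-up can be sketched as a small dictionary wrapper that indexes every patch twice, once by name and once by its tuple of values. The `PatchLookup` class and the `EXAMPLE_PATCHSET` dict are hypothetical; the dict's layout is only modeled on the attributes printed earlier (description, digests, labels, references, version, and patches carrying a name and values), and its placeholders are not the real 1Lbb metadata.

```python
from typing import Any, Dict

# Hypothetical patchset-shaped dict; placeholder values, empty patch bodies
EXAMPLE_PATCHSET: Dict[str, Any] = {
    "metadata": {
        "description": "hypothetical signal patchset",
        "digests": {"sha256": "<digest of the background-only workspace>"},
        "labels": ["m1", "m2"],
        "references": {"hepdata": "<record id>"},
    },
    "patches": [
        {"metadata": {"name": "C1N2_Wh_hbb_900_250", "values": [900, 250]}, "patch": []},
        {"metadata": {"name": "C1N2_Wh_hbb_650_0", "values": [650, 0]}, "patch": []},
    ],
    "version": "1.0.0",
}


class PatchLookup:
    """Hypothetical dict-like look-up by patch name or by tuple of values."""

    def __init__(self, patchset: Dict[str, Any]):
        self.labels = patchset["metadata"]["labels"]
        self._by_name: Dict[str, Any] = {}
        self._by_values: Dict[tuple, Any] = {}
        for entry in patchset["patches"]:
            name = entry["metadata"]["name"]
            values = tuple(entry["metadata"]["values"])
            # Both keys must be unique, otherwise a look-up would be ambiguous
            if name in self._by_name or values in self._by_values:
                raise ValueError(f"duplicate patch key: {name} / {values}")
            self._by_name[name] = entry
            self._by_values[values] = entry

    def __getitem__(self, key):
        # Strings are names; any other sequence is a point in label space
        if isinstance(key, str):
            table, lookup_key = self._by_name, key
        else:
            table, lookup_key = self._by_values, tuple(key)
        try:
            return table[lookup_key]
        except KeyError:
            raise KeyError(f"no patch for key {key!r}") from None

    def __len__(self) -> int:
        return len(self._by_name)
```

# Refusing duplicate keys at construction time guarantees that either kind of key identifies exactly one patch.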

# ### Patches

#

# A `pyhf.PatchSet` is a collection of `pyhf.Patch` objects. What is a patch, exactly? It contains the information needed to apply a signal patch to the corresponding background-only workspace (matched by digest).

# In[ ]:

patch = patchset["C1N2_Wh_hbb_900_250"]

print(f"  name: {patch.name}")
print(f"values: {patch.values}")

# Most importantly, it contains the patch information itself. Specifically, `pyhf.Patch` inherits from `jsonpatch.JsonPatch`, a class from the third-party `jsonpatch` module that provides native support for JSON Patch in Python. That means we can simply apply the patch to our workspace directly!

# In[ ]:

print(f" samples pre-patch: {pyhf.Workspace(spec).samples}")

print(f"samples post-patch: {pyhf.Workspace(patch.apply(spec)).samples}")

# Or, more quickly, from the `PatchSet` object:

# In[ ]:

print(f" samples pre-patch: {pyhf.Workspace(spec).samples}")

print(f"samples post-patch: {pyhf.Workspace(patchset.apply(spec, (900, 250))).samples}")
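# Because `pyhf.Patch` builds on `jsonpatch.JsonPatch`, applying a signal patch is ordinary JSON Patch (RFC 6902) application. Below is a minimal sketch of that mechanism supporting only the `add` and `replace` operations; it is not the `jsonpatch` implementation, and `apply_patch` is a hypothetical name. It works on a deep copy, so the background-only spec is never modified.

```python
import copy
from typing import Any, List


def _parse_pointer(pointer: str) -> List[str]:
    """Split an RFC 6901 JSON Pointer into unescaped reference tokens."""
    if not pointer.startswith("/"):
        # "" (the whole document) is deliberately not handled in this sketch
        raise ValueError(f"unsupported JSON pointer: {pointer!r}")
    # RFC 6901: decode "~1" to "/" first, then "~0" to "~"
    return [t.replace("~1", "/").replace("~0", "~") for t in pointer[1:].split("/")]


def apply_patch(doc: Any, operations: List[dict]) -> Any:
    """Apply JSON Patch 'add'/'replace' operations to a deep copy of doc."""
    result = copy.deepcopy(doc)  # never mutate the caller's document
    for operation in operations:
        tokens = _parse_pointer(operation["path"])
        parent = result
        # Walk down to the container that holds the target location
        for token in tokens[:-1]:
            parent = parent[int(token)] if isinstance(parent, list) else parent[token]
        last = tokens[-1]
        value = copy.deepcopy(operation["value"])
        kind = operation["op"]
        if kind == "add" and isinstance(parent, list):
            # "-" appends; an index inserts before the element currently there
            index = len(parent) if last == "-" else int(last)
            if not 0 <= index <= len(parent):
                raise IndexError(f"index out of range: {last}")
            parent.insert(index, value)
        elif kind == "add":
            parent[last] = value  # adds or overwrites an object member
        elif kind == "replace":
            if isinstance(parent, list):
                parent[int(last)] = value
            elif last in parent:
                parent[last] = value
            else:
                raise KeyError(f"cannot replace missing member: {last}")
        else:
            raise NotImplementedError(f"operation not handled in this sketch: {kind}")
    return result
```

# Copying costs one deep copy per patch, but it means a single background-only spec can be reused for every signal point.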

# ### Patching via Model Creation

#

# One last way to apply a patch is to patch the model as we build it from the background-only workspace, rather than patching the workspace itself. This makes it easier to treat the background-only workspace as immutable and to patch in a signal model only when building the model. Check it out.

# In[ ]:

workspace = pyhf.Workspace(spec)

# First, load up our background-only spec into the workspace. Then let's create a model.

# In[ ]:

model = workspace.model(patches=[patchset["C1N2_Wh_hbb_900_250"]])

print(f"samples (workspace): {workspace.samples}")
print(f"samples (  model  ): {model.config.samples}")

# ## Doing Physics

#

# So we want to try to reproduce part of the contour, or at least convince ourselves we're doing *physics* and not *fauxsics*... Anyway, let's remind ourselves of the 1Lbb contour, as we don't have the photographic memory of the ATLAS SUSY conveners.

#


# So let's work around the 700-900 GeV $\tilde{\chi}_1^\pm/\tilde{\chi}_2^0$ mass region. We'll look at two points on either side of it:

#

# * `C1N2_Wh_hbb_650_0` `(650, 0)`, which is below the contour and excluded

# * `C1N2_Wh_hbb_1000_0` `(1000, 0)`, which is above the contour and not excluded

#

# Let's perform a "standard" hypothesis test (with the $\mu = 1$ signal hypothesis as the null) on both of these and use the $\text{CL}_\text{s}$ values to convince ourselves that we just did reproducible physics!?!
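# To see where a single $\text{CL}_\text{s}$ value comes from, here is the large-sample (asymptotic) approximation for the one-sided $q_\mu$ statistic: $\text{CL}_{\text{s+b}} = 1 - \Phi(\sqrt{q_\mu})$, $\text{CL}_\text{b} = \Phi(\sqrt{q_{\mu,A}} - \sqrt{q_\mu})$, and $\text{CL}_\text{s} = \text{CL}_{\text{s+b}} / \text{CL}_\text{b}$, where $q_{\mu,A}$ is the statistic evaluated on the Asimov dataset. This is a sketch under stated assumptions: it takes both test-statistic values as inputs instead of fitting the model, the `qtilde` statistic used below agrees with this form only when $q_\mu \le q_{\mu,A}$, and `asymptotic_cls` and `is_excluded` are hypothetical helpers, not pyhf's implementation.

```python
from statistics import NormalDist

_PHI = NormalDist().cdf  # standard normal CDF


def asymptotic_cls(q_mu: float, q_mu_asimov: float):
    """Return (CLs, CLs+b, CLb) for an observed q_mu and Asimov q_mu,A.

    Large-sample approximation for the one-sided q_mu statistic;
    assumes q_mu >= 0 and q_mu_asimov > 0.
    """
    if q_mu < 0 or q_mu_asimov <= 0:
        raise ValueError("need q_mu >= 0 and q_mu_asimov > 0")
    sqrt_q = q_mu ** 0.5
    sqrt_qa = q_mu_asimov ** 0.5
    cls_sb = 1.0 - _PHI(sqrt_q)     # p-value of the signal+background hypothesis
    cls_b = _PHI(sqrt_qa - sqrt_q)  # 1 minus the background-only p-value
    return cls_sb / cls_b, cls_sb, cls_b


def is_excluded(cls_value: float, alpha: float = 0.05) -> bool:
    """A signal point is excluded at (1 - alpha) confidence when CLs < alpha."""
    return cls_value < alpha
```

# With $q_\mu = q_{\mu,A}$ (the median background-only outcome), $\text{CL}_\text{b} = 0.5$ and $\text{CL}_\text{s}$ is simply twice $\text{CL}_{\text{s+b}}$.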

# ### Doing Physics, for real now

# In[ ]:

model_below = workspace.model(patches=[patchset["C1N2_Wh_hbb_650_0"]])

model_above = workspace.model(patches=[patchset["C1N2_Wh_hbb_1000_0"]])

# We've made our models. Let's test hypotheses!

#

# *Note: this will not be as instantaneous as our simple models... but it should still be pretty fast!*

# In[ ]:

test_poi = 1.0

result_below = pyhf.infer.hypotest(
    test_poi,
    workspace.data(model_below),
    model_below,
    test_stat="qtilde",
    return_expected_set=True,
)

print(
    f"Observed: {result_below[0]}, Expected band: {[exp.tolist() for exp in result_below[1]]}"
)

# In[ ]:

result_above = pyhf.infer.hypotest(
    test_poi,
    workspace.data(model_above),
    model_above,
    test_stat="qtilde",
    return_expected_set=True,
)

print(
    f"Observed: {result_above[0]}, Expected band: {[exp.tolist() for exp in result_above[1]]}"
)

# And as you can see, we're getting the results we generally expect. Excluded models are those for which $\text{CL}_\text{s} < 0.05$. Additionally, you can see that the expected $-2\sigma$ value for the $(1000, 0)$ point is just slightly below the observed result for the $(650, 0)$ point, which is what we observe in the figure above.
