#!/usr/bin/env python

# coding: utf-8

#

#

#

# # L2RPN 2019 Starting Kit

#

#

# ALL INFORMATION, SOFTWARE, DOCUMENTATION, AND DATA ARE PROVIDED "AS-IS". THE CDS, CHALEARN, AND/OR OTHER ORGANIZERS OR CODE AUTHORS DISCLAIM ANY EXPRESSED OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR ANY PARTICULAR PURPOSE, AND THE WARRANTY OF NON-INFRINGEMENT OF ANY THIRD PARTY'S INTELLECTUAL PROPERTY RIGHTS. IN NO EVENT SHALL AUTHORS AND ORGANIZERS BE LIABLE FOR ANY SPECIAL, INDIRECT OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES WHATSOEVER ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF SOFTWARE, DOCUMENTS, MATERIALS, PUBLICATIONS, OR INFORMATION MADE AVAILABLE FOR THE CHALLENGE.

#

#

# # Introduction

#

#

# The goal of this challenge is to apply Reinforcement Learning (RL) to power grid management by designing RL agents that automate the control of the power grid. We use the power network simulator Grid2Op, which can emulate a power grid of any size and electrical properties, subject to a set of temporal injections over discretized time steps.

#

# References and credits:

# Grid2Op was created by Benjamin Donnot. The competition was designed by Isabelle Guyon, Antoine Marot, Benjamin Donnot and Balthazar Donon. Luca Veyrin, Camillo Romero, Marvin Lerousseau and Kimang Khun are distinguished contributors who helped make the L2RPN challenge happen.

#

#

#

# # Step 1: Exploratory data analysis

#

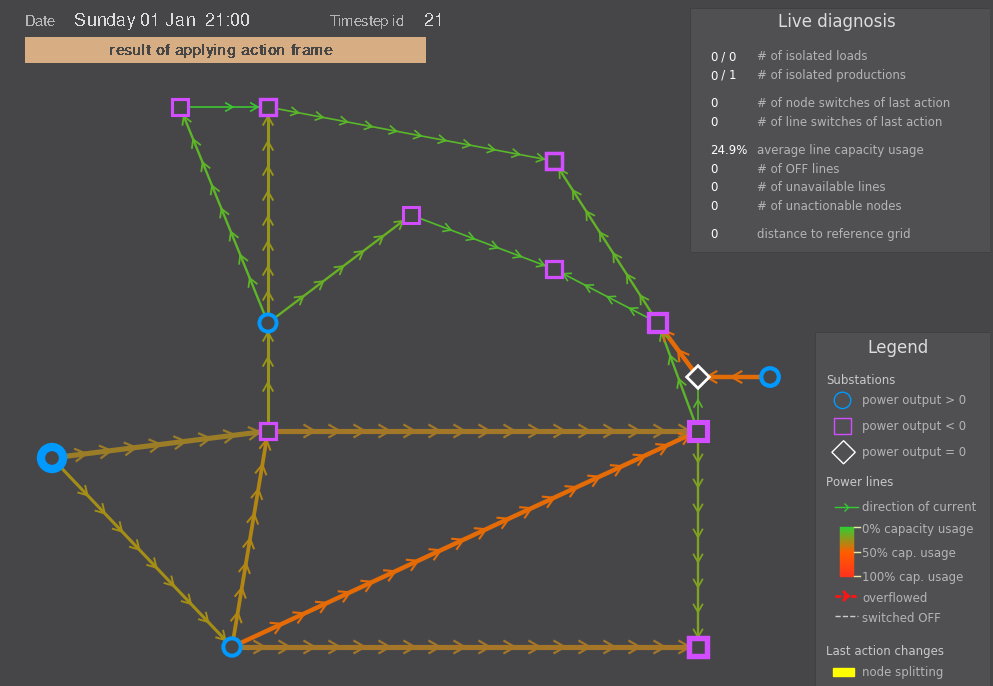

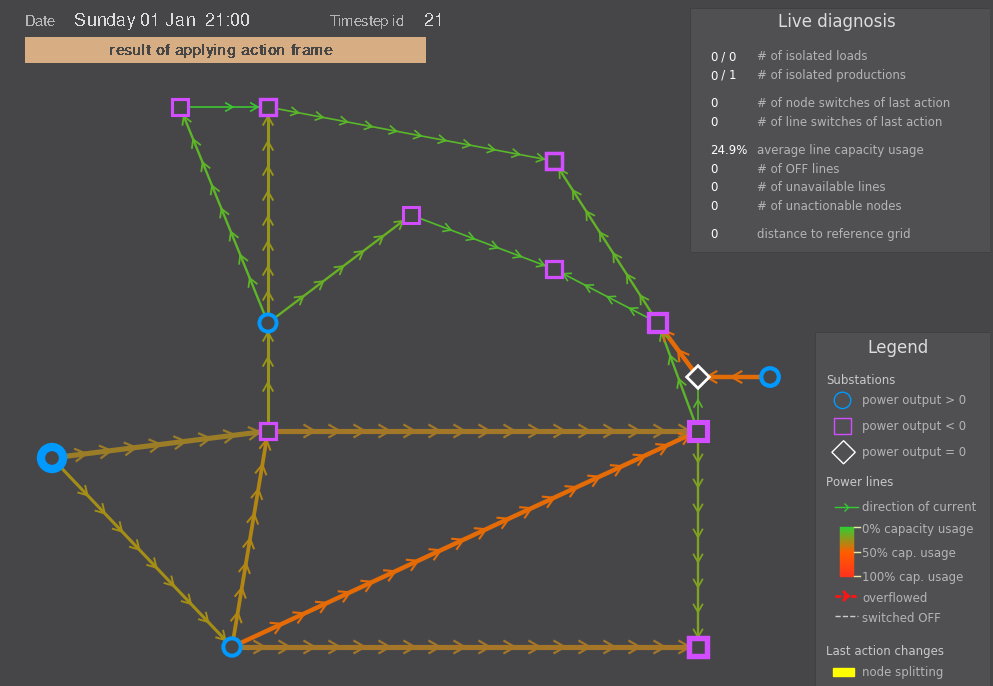

# ## Electrical grid

#

#

#

#

# (courtesy of Marvin Lerousseau)

#

#

# # Step 2: Building an agent

#

#

#

# ## Building an agent

#

# We provide simple example agents in the `starting-kit/example_submission` directory. Here we illustrate the simplest one: the lazy "do nothing" agent. To build your own agent, change the agent's `__init__` to load your own model, modify its `act` method so that your model chooses the action, and specify a reward, especially if you are doing reinforcement learning.
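# A minimal sketch of the "do nothing" agent and of a custom agent built on top of it. The class names, the `act(observation, reward, done)` signature, and the `action_space` callable are assumptions made for illustration and are loosely modeled on Grid2Op's agent interface. The `action_space` callable is assumed to turn a dict of requested grid changes into an action, with `{}` meaning "change nothing". The base class you actually need to subclass is the one used in `starting-kit/example_submission`.

```python
from typing import Any, Callable, Dict, Optional

# Hypothetical type: maps a dict of requested grid changes to an action object.
ActionSpace = Callable[[Dict[str, Any]], Any]


class DoNothingAgent:
    """The lazy baseline: whatever it observes, it asks for no change."""

    def __init__(self, action_space: ActionSpace):
        self.action_space = action_space

    def act(self, observation: Any, reward: float, done: bool = False) -> Any:
        # An empty dict is assumed to encode the "do nothing" action.
        return self.action_space({})


class MyAgent(DoNothingAgent):
    """Hypothetical custom agent: load a model in __init__, query it in act."""

    def __init__(self, action_space: ActionSpace,
                 model: Callable[[Any], Optional[Dict[str, Any]]]):
        super().__init__(action_space)
        self.model = model  # e.g. a policy loaded from a file in your submission

    def act(self, observation: Any, reward: float, done: bool = False) -> Any:
        proposal = self.model(observation)
        if not proposal:
            # Fall back to the do-nothing behaviour when the model has no suggestion.
            return super().act(observation, reward, done)
        return self.action_space(proposal)
```

# For a quick check without the simulator, you can use `dict` as a stand-in action space. With that, `MyAgent(dict, lambda obs: {"set_bus": obs}).act(3, 0.0)` returns `{"set_bus": 3}`, where `"set_bus"` is only a placeholder key.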

#

# The reward used for scoring, computed at each time step, is:

# \[
# R = \sum_{l \in \text{lines}} \max\left(\frac{i_{l_{max}} - i_l}{i_{l_{max}}}, 0\right)
# \]

#

# where $i_l$ is the flow on line $l$ and $i_{l_{max}}$ is the maximum allowed flow on that line.
# The final score on one chronic is the sum of the rewards over all of its time steps.

#

# For a better understanding of the reward calculation, see `public_data/reward_function.py`. For training purposes, you can modify this function.
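# A direct transcription of the formula above, useful for sanity-checking the per-step reward and the chronic score by hand. It normalizes each line's margin by that line's maximum flow $i_{l_{max}}$, which is our reading of the denominator. This is a sketch, not the code in `public_data/reward_function.py`, and that file remains the authoritative definition. The function names are hypothetical.

```python
from typing import Sequence


def timestep_reward(flows: Sequence[float], limits: Sequence[float]) -> float:
    """R = sum over lines of max((i_max - i_l) / i_max, 0).

    flows[l] is the flow i_l on line l and limits[l] its maximum allowed flow
    i_{l_max}; an overloaded line (i_l > i_max) contributes 0, not a penalty.
    """
    if len(flows) != len(limits):
        raise ValueError("flows and limits need one entry per line")
    return sum(max((i_max - i_l) / i_max, 0.0) for i_l, i_max in zip(flows, limits))


def chronic_score(flows_per_step: Sequence[Sequence[float]], limits: Sequence[float]) -> float:
    """Score of one chronic: the sum of the per-time-step rewards."""
    return sum(timestep_reward(flows, limits) for flows in flows_per_step)
```

# For example, consider two lines with limits `[100, 200]` and flows `[50, 250]`. The first line has half of its capacity free, and the second is overloaded and contributes nothing, so `timestep_reward([50, 250], [100, 200])` returns `0.5`.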

#

# ### Run an agent

#

# Once you have defined an agent, you can run it in an environment over a set of scenarios.
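# A minimal sketch of what running an agent over scenarios amounts to. It assumes a Gym-style environment whose `reset()` returns an observation and whose `step(action)` returns `(observation, reward, done, info)`. `ToyEnv`, `run_episode` and `run_scenarios` are hypothetical names. The toy environment simply replays fixed rewards, so the loop can run without the simulator; the starting kit's own runner is what you should use for actual evaluation.

```python
from typing import Any, Callable, Dict, List, Tuple


class ToyEnv:
    """Hypothetical stand-in for a simulator environment (gym-like interface).

    It ignores the agent's actions, replays a fixed cycle of rewards and ends
    the scenario after `max_steps` time steps.
    """

    def __init__(self, rewards: List[float], max_steps: int):
        self.rewards = rewards
        self.max_steps = max_steps
        self.t = 0

    def reset(self) -> int:
        self.t = 0
        return self.t  # the "observation" is simply the current time step

    def step(self, action: Any) -> Tuple[int, float, bool, Dict[str, Any]]:
        reward = self.rewards[self.t % len(self.rewards)]
        self.t += 1
        done = self.t >= self.max_steps
        return self.t, reward, done, {}


Act = Callable[[Any, float, bool], Any]


def run_episode(env: Any, act: Act) -> float:
    """Play one scenario to the end and return the sum of the rewards."""
    obs, reward, done, total = env.reset(), 0.0, False, 0.0
    while not done:
        action = act(obs, reward, done)
        obs, reward, done, _info = env.step(action)
        total += reward
    return total


def run_scenarios(make_env: Callable[[int], Any], act: Act, n_scenarios: int) -> List[float]:
    """Run the agent on `n_scenarios` fresh environments; one score per scenario."""
    return [run_episode(make_env(k), act) for k in range(n_scenarios)]
```

# For example, `run_scenarios(lambda k: ToyEnv([1.0, 2.0], max_steps=100), lambda obs, reward, done: None, 2)` runs 2 scenarios of 100 steps each and returns `[150.0, 150.0]`.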

# **WARNING**: the following cell, which computes the leaderboard score, will not run unless you have previously used the "augmented" runner.

#

#

# # Step 3: Making a submission

#

# ## Unit testing

#

# It is important to test your submission files before submitting them. To make a submission, create or modify the `Submission` class in the file `submission.py` in the `starting_kit/example_submission/` directory, then run the test below to make sure everything works. This is the program that will be run on the server to test your submission.

#

# Note that on Codalab, your local directory is `program/`. So, to load the file `model.dump`, run `open("program/model.dump")`, even if the file is at the root of your submission directory.
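# A small helper can hide this difference between Codalab and a local run. This is a sketch under the assumption, taken from the note above, that the submission is unpacked under `program/` on Codalab. `submission_path` is a hypothetical name. Falling back to the bare file name when no `program/` directory exists is our own design choice for local runs.

```python
import os


def submission_path(filename: str, codalab_dir: str = "program") -> str:
    """Return the path under which a file at the root of the submission can be opened.

    On Codalab the submission is assumed to live in `codalab_dir` (`program/`),
    so the file is opened as `program/<filename>`. When that directory does not
    exist (e.g. a local run from inside the submission directory), the bare
    file name is returned instead.
    """
    if os.path.isdir(codalab_dir):
        return os.path.join(codalab_dir, filename)
    return filename
```

# Usage: `with open(submission_path("model.dump"), "rb") as f: ...` works in both settings, provided your local working directory is the submission directory.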

#

# In[11]:

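import os
import sys

# utility_dir, path_train_set, tmp_outdir and model_dir are assumed to be set in earlier cells of this notebook.
print("The following cell will execute the command:\n"
      "{} {} {} {} {} {}".format(sys.executable, os.path.join(utility_dir, "ingestion.py"),
                                 path_train_set, tmp_outdir, utility_dir, model_dir))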

# In[12]:

get_ipython().system('$sys.executable $utility_dir/ingestion.py $path_train_set $tmp_outdir $utility_dir $model_dir')
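# `get_ipython()` only exists inside IPython/Jupyter. The sketch below runs the same command from a plain Python script using the standard-library `subprocess` module. It assumes the argument order printed above: `ingestion.py`, then the training-set path, output directory, utility directory and model directory. `ingestion_command` and `run_ingestion` are hypothetical names.

```python
import os
import subprocess
import sys
from typing import List


def ingestion_command(utility_dir: str, path_train_set: str,
                      tmp_outdir: str, model_dir: str) -> List[str]:
    """Build the same argument list as the `$sys.executable ...` shell line above."""
    return [sys.executable, os.path.join(utility_dir, "ingestion.py"),
            path_train_set, tmp_outdir, utility_dir, model_dir]


def run_ingestion(utility_dir: str, path_train_set: str,
                  tmp_outdir: str, model_dir: str) -> None:
    # check=True raises CalledProcessError if ingestion.py exits with a non-zero status.
    subprocess.run(ingestion_command(utility_dir, path_train_set, tmp_outdir, model_dir),
                   check=True)
```

# Usage: `run_ingestion(utility_dir, path_train_set, tmp_outdir, model_dir)`. Passing a list instead of a shell string avoids quoting problems when paths contain spaces.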

#

# The command above runs a program very close to the one Codalab runs to assess the performance of your agent. The resulting data (which you will not have access to for the real validation dataset) are written to a subdirectory of "output_notebook_2DevelopAndRunLocally/tmp_results".

#

#

# Because the data used on the Codalab platform to evaluate your code are hidden, here we simulate the evaluation with 2 scenarios taken from the training data, each limited to 100 time steps. The real length of the validation scenarios is variable and is not disclosed.

#

#

# We strongly recommend also testing the scoring program with the following script.

#

# In[13]:

get_ipython().system('$sys.executable $utility_dir/evaluate.py $tmp_outdir $output_dir')


#

#

# ## Preparing the submission

#

# Zip the contents of `sample_code_submission/` (without the directory itself), or download the challenge public_data and run the command in the previous cell after replacing `sample_data` with `public_data`.

# Then zip the contents of `sample_result_submission/` (without the directory).

# Do NOT zip the data with your submissions.

# In[14]:

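import datetime
import os
import zipfile


def zipdir(path, ziph):
    """Add every file under `path` to the open ZipFile handle `ziph`.

    Archive names are made relative to `path`, so the zip holds the
    directory's contents rather than the directory itself.
    """
    for root, _dirs, files in os.walk(path):
        for file in files:
            full_path = os.path.join(root, file)
            ziph.write(full_path, arcname=os.path.relpath(full_path, path))


the_date = datetime.datetime.now().strftime("%y%m%d_%H%M")
sample_code_submission = 'sample_code_submission_' + the_date + '.zip'

# model_dir is the submission directory set in an earlier cell of this notebook.
with zipfile.ZipFile(sample_code_submission, 'w', zipfile.ZIP_DEFLATED) as zipf:
    zipdir(model_dir, zipf)

print("Submit this file:\n\t- {}\n\t- Located at {}".format(sample_code_submission, os.path.abspath(".")))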
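# Before uploading, it can help to inspect the archive. This is a minimal sketch that assumes, as the instructions above suggest, that `submission.py` must sit at the root of the zip and that nothing from `public_data/` or `sample_data/` may be included. `check_submission_zip` is a hypothetical helper and is not part of the starting kit.

```python
import zipfile
from typing import Iterable, List


def check_submission_zip(zip_path: str,
                         forbidden_dirs: Iterable[str] = ("public_data", "sample_data")) -> List[str]:
    """Return a list of problems found in a submission archive (empty if none)."""
    forbidden = set(forbidden_dirs)
    with zipfile.ZipFile(zip_path) as zf:
        names = zf.namelist()
    problems = []
    if "submission.py" not in names:
        problems.append("submission.py is not at the root of the archive")
    for name in names:
        # The first path component tells us which top-level folder a member comes from.
        if name.split("/", 1)[0] in forbidden:
            problems.append("data file included: " + name)
    return problems
```

# Usage: `print(check_submission_zip(sample_code_submission) or "archive looks fine")`.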
