Introduction to Data Analysis with Python

##

Dr. Thomas Wiecki

#

Lead Data Scientist

# #

#  #

#  #

# Source: http://www.nutanix.com/2013/09/16/the-cup-has-been-flipped/

#

#

# Source: http://www.nutanix.com/2013/09/16/the-cup-has-been-flipped/

#  #

#  #

# * We back the best investment algorithms with investor capital, trading operations, and technology.

# * Do your research in our hosted IPython environment using stock price history, corporate fundamental data, and other data sets.

# * Write your algorithm in your browser. Then backtest it, for free, over 13 years of minute-level data.

# * When you enter the contest, your algorithm will also be considered for our hedge fund.

# * [We're hiring in Düsseldorf: Operations Engineer!](https://www.quantopian.com/about#op-37090-operations-engineer-israel)

# ## Why use Python for data analysis?

# * Python is a **general purpose language** -> No hodge-podge of perl, bash, matlab, fortran.

# * Very easy to learn.

# * Quality and quantity of data analysis libraries is very high and growing at a rapid pace.

# * What are the alternatives?

# - R: "The best thing about R is that it was written by statisticians. The worst thing about R is that it was written by statisticians." Bow Cogwill

# - Matlab: $$$, not open

# ## Jobs!

#

#

#

# * We back the best investment algorithms with investor capital, trading operations, and technology.

# * Do your research in our hosted IPython environment using stock price history, corporate fundamental data, and other data sets.

# * Write your algorithm in your browser. Then backtest it, for free, over 13 years of minute-level data.

# * When you enter the contest, your algorithm will also be considered for our hedge fund.

# * [We're hiring in Düsseldorf: Operations Engineer!](https://www.quantopian.com/about#op-37090-operations-engineer-israel)

# ## Why use Python for data analysis?

# * Python is a **general purpose language** -> No hodge-podge of perl, bash, matlab, fortran.

# * Very easy to learn.

# * Quality and quantity of data analysis libraries is very high and growing at a rapid pace.

# * What are the alternatives?

# - R: "The best thing about R is that it was written by statisticians. The worst thing about R is that it was written by statisticians." Bow Cogwill

# - Matlab: $$$, not open

# ## Jobs!

#

#  #

#

# # How to get started

#

# * Start by installing the [Anaconda Python distribution](http://continuum.io/downloads#py34) (use Python 3.4)

# * Install the jupyter notebook (former IPython)

# * Do a basic Python tutorial to get a handle on the syntax, e.g. [Learn Python the Hard Way](http://learnpythonthehardway.org/)

#

# # Python basics

# ### Interpreted and interactive

# In[1]:

3 * 4

# ### Lists

# In[2]:

x = [1, 2, 3]

print(x)

# In[3]:

x.append(4)

print(x)

# ### Dictionaries

# In[4]:

measurements = {'height': [1.70, 1.80, 1.50], 'weight': [60, 120, 50]}

measurements

# In[5]:

measurements['height']

# ### Comprehensions

# In[6]:

x = [1, 2, 3, 4]

[i**2 for i in x]

# In[7]:

def calc_bmi(weight, height):

return weight / height**2

[calc_bmi(w, h) for w, h in zip(measurements['weight'], measurements['height'])]

# ## Level 1: "The Pandas Wrangler"

#

#

# # How to get started

#

# * Start by installing the [Anaconda Python distribution](http://continuum.io/downloads#py34) (use Python 3.4)

# * Install the jupyter notebook (former IPython)

# * Do a basic Python tutorial to get a handle on the syntax, e.g. [Learn Python the Hard Way](http://learnpythonthehardway.org/)

#

# # Python basics

# ### Interpreted and interactive

# In[1]:

3 * 4

# ### Lists

# In[2]:

x = [1, 2, 3]

print(x)

# In[3]:

x.append(4)

print(x)

# ### Dictionaries

# In[4]:

measurements = {'height': [1.70, 1.80, 1.50], 'weight': [60, 120, 50]}

measurements

# In[5]:

measurements['height']

# ### Comprehensions

# In[6]:

x = [1, 2, 3, 4]

[i**2 for i in x]

# In[7]:

def calc_bmi(weight, height):

return weight / height**2

[calc_bmi(w, h) for w, h in zip(measurements['weight'], measurements['height'])]

# ## Level 1: "The Pandas Wrangler"

#

#  # ## How to become a "Pandas Wrangler"

#

# * Learn Pandas (data wrangling): http://pandas.pydata.org/pandas-docs/stable/tutorials.html

# * Learn Seaborn (data visualization): http://stanford.edu/~mwaskom/software/seaborn/

# ### Why not start with NumPy and Matplotlib?

# * These libraries have become core libraries.

# * Better results can be achieved starting with Pandas and Seaborn.

# * For more motivation, see http://twiecki.github.io/blog/2014/11/18/python-for-data-science/

# ## Pandas

# In[8]:

import pandas as pd

import numpy as np

# In[9]:

s = pd.Series([1,3,5,np.nan,6,8])

s

# In[10]:

dates = pd.date_range('20130101', periods=6)

df = pd.DataFrame(np.random.randn(6,4), index=dates, columns=list('ABCD'))

df

# In[11]:

df[df.A > 0]

# In[12]:

df.mean()

# In[13]:

df.mean(axis='columns')

# ## Mixed types

# In[14]:

df2 = pd.DataFrame({ 'A' : 1.,

'B' : pd.Timestamp('20130102'),

'C' : pd.Series(1,index=list(range(4)),dtype='float32'),

'D' : np.array([3] * 4,dtype='int32'),

'E' : pd.Categorical(["test","train","test","train"]),

'F' : 'foo' })

df2

# In[15]:

df2.dtypes

# ### Grouping

# In[16]:

df = pd.DataFrame({'A' : ['foo', 'bar', 'foo', 'bar',

'foo', 'bar', 'foo', 'foo'],

'B' : ['one', 'one', 'two', 'three',

'two', 'two', 'one', 'three'],

'C' : np.random.randn(8),

'D' : np.random.randn(8)})

# In[17]:

df

# In[18]:

df.groupby('A').sum()

# In[19]:

df.groupby(['A', 'B']).sum()

# ## Seaborn: Generating statistical plots

# In[20]:

get_ipython().run_line_magic('matplotlib', 'inline')

import seaborn as sns

# In[21]:

x = np.random.normal(size=100)

sns.distplot(x);

# ### 2D distributions

# In[22]:

mean, cov = [0, 1], [(1, .5), (.5, 1)]

data = np.random.multivariate_normal(mean, cov, 200)

df = pd.DataFrame(data, columns=["x", "y"])

df

# In[23]:

sns.jointplot(x="x", y="y", data=df, kind="kde");

# ### All pairwise combinations

# In[24]:

iris = sns.load_dataset("iris")

sns.pairplot(iris);

# ## Seaborn: Regressions

# In[25]:

tips = sns.load_dataset("tips")

# In[26]:

tips.head()

# In[27]:

sns.lmplot(x="total_bill", y="tip", hue="smoker", data=tips);

# In[28]:

sns.lmplot(x="total_bill", y="tip", col="day", data=tips,

col_wrap=2, size=3);

# In[29]:

sns.factorplot(x="time", y="total_bill", hue="smoker",

col="day", data=tips, kind="box", size=4, aspect=.5);

# ## Level 2: "The Kaggle top scorer"

#

#

# ## How to become a "Pandas Wrangler"

#

# * Learn Pandas (data wrangling): http://pandas.pydata.org/pandas-docs/stable/tutorials.html

# * Learn Seaborn (data visualization): http://stanford.edu/~mwaskom/software/seaborn/

# ### Why not start with NumPy and Matplotlib?

# * These libraries have become core libraries.

# * Better results can be achieved starting with Pandas and Seaborn.

# * For more motivation, see http://twiecki.github.io/blog/2014/11/18/python-for-data-science/

# ## Pandas

# In[8]:

import pandas as pd

import numpy as np

# In[9]:

s = pd.Series([1,3,5,np.nan,6,8])

s

# In[10]:

dates = pd.date_range('20130101', periods=6)

df = pd.DataFrame(np.random.randn(6,4), index=dates, columns=list('ABCD'))

df

# In[11]:

df[df.A > 0]

# In[12]:

df.mean()

# In[13]:

df.mean(axis='columns')

# ## Mixed types

# In[14]:

df2 = pd.DataFrame({ 'A' : 1.,

'B' : pd.Timestamp('20130102'),

'C' : pd.Series(1,index=list(range(4)),dtype='float32'),

'D' : np.array([3] * 4,dtype='int32'),

'E' : pd.Categorical(["test","train","test","train"]),

'F' : 'foo' })

df2

# In[15]:

df2.dtypes

# ### Grouping

# In[16]:

df = pd.DataFrame({'A' : ['foo', 'bar', 'foo', 'bar',

'foo', 'bar', 'foo', 'foo'],

'B' : ['one', 'one', 'two', 'three',

'two', 'two', 'one', 'three'],

'C' : np.random.randn(8),

'D' : np.random.randn(8)})

# In[17]:

df

# In[18]:

df.groupby('A').sum()

# In[19]:

df.groupby(['A', 'B']).sum()

# ## Seaborn: Generating statistical plots

# In[20]:

get_ipython().run_line_magic('matplotlib', 'inline')

import seaborn as sns

# In[21]:

x = np.random.normal(size=100)

sns.distplot(x);

# ### 2D distributions

# In[22]:

mean, cov = [0, 1], [(1, .5), (.5, 1)]

data = np.random.multivariate_normal(mean, cov, 200)

df = pd.DataFrame(data, columns=["x", "y"])

df

# In[23]:

sns.jointplot(x="x", y="y", data=df, kind="kde");

# ### All pairwise combinations

# In[24]:

iris = sns.load_dataset("iris")

sns.pairplot(iris);

# ## Seaborn: Regressions

# In[25]:

tips = sns.load_dataset("tips")

# In[26]:

tips.head()

# In[27]:

sns.lmplot(x="total_bill", y="tip", hue="smoker", data=tips);

# In[28]:

sns.lmplot(x="total_bill", y="tip", col="day", data=tips,

col_wrap=2, size=3);

# In[29]:

sns.factorplot(x="time", y="total_bill", hue="smoker",

col="day", data=tips, kind="box", size=4, aspect=.5);

# ## Level 2: "The Kaggle top scorer"

#

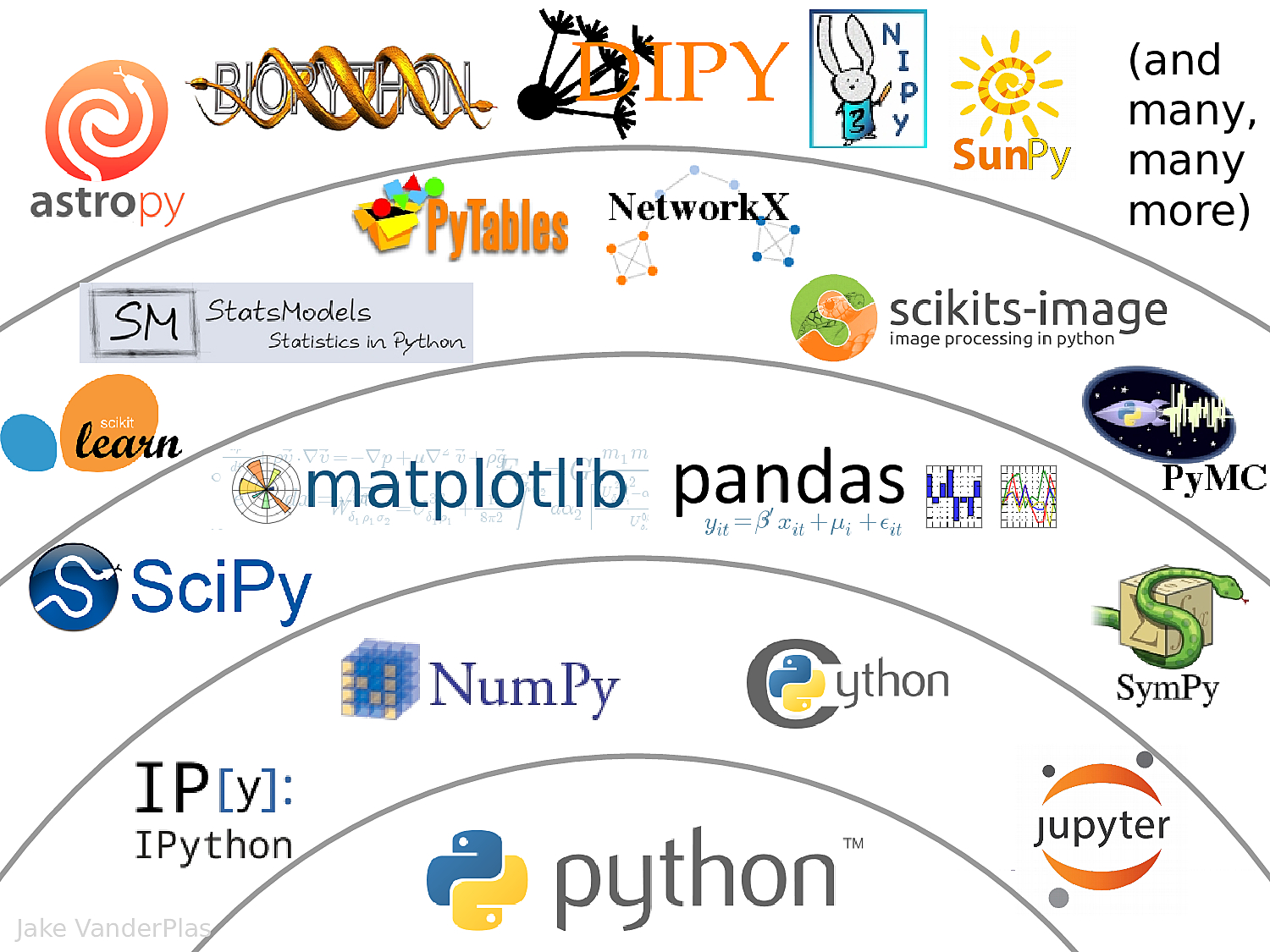

#  # ## Lots of machine learning and stats libraries

#

# * SciPy: comprehensive library of numerical routines like optimizers, integrators, FFT.

# * scikit-learn: **The** ML library out there

# * statsmodels: Frequentist statistics

# * SymPy: Symbolic Math

# * PyMC3: Probabilistic programming in Python

# ## scikit-learn

#

# Taken from http://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html

# In[30]:

from sklearn import svm

X = [[0, 0], [1, 1]]

y = [0, 1]

clf = svm.SVC()

clf.fit(X, y)

# In[31]:

clf.predict([[0, .5]])

# ### Advanced example: Grid Search with Cross-Validation to find hyper parameters

#

# Taken from http://scikit-learn.org/stable/auto_examples/grid_search_digits.html and http://scikit-learn.org/stable/auto_examples/datasets/plot_digits_last_image.html

# In[32]:

from sklearn import datasets

from sklearn.cross_validation import train_test_split

from sklearn.grid_search import GridSearchCV

from sklearn.metrics import confusion_matrix

from sklearn.svm import SVC

digits = datasets.load_digits()

# In[33]:

import matplotlib.pyplot as plt

#Display the first digit

plt.figure(1, figsize=(3, 3))

plt.imshow(digits.images[-1], cmap=plt.cm.gray_r, interpolation='nearest')

plt.grid('off')

# In[34]:

n_samples = len(digits.images)

X = digits.images.reshape((n_samples, -1))

y = digits.target

# Split the dataset in two equal parts

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.5, random_state=0)

# In[35]:

# Set the parameters by cross-validation

tuned_parameters = [{'kernel': ['rbf'], 'gamma': [1e-3, 1e-4],

'C': [1, 10, 100, 1000]},

{'kernel': ['linear'], 'C': [1, 10, 100, 1000]}]

clf = GridSearchCV(SVC(C=1), tuned_parameters, cv=5)

clf.fit(X_train, y_train)

# In[36]:

print(clf.best_params_)

# In[37]:

y_true, y_pred = y_test, clf.predict(X_test)

ax = sns.heatmap(confusion_matrix(y_true, y_pred))

ax.set(xlabel='true label', ylabel='predicted label');

# ## Level 3: "Lord of Speed"

#

#

# ## Lots of machine learning and stats libraries

#

# * SciPy: comprehensive library of numerical routines like optimizers, integrators, FFT.

# * scikit-learn: **The** ML library out there

# * statsmodels: Frequentist statistics

# * SymPy: Symbolic Math

# * PyMC3: Probabilistic programming in Python

# ## scikit-learn

#

# Taken from http://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html

# In[30]:

from sklearn import svm

X = [[0, 0], [1, 1]]

y = [0, 1]

clf = svm.SVC()

clf.fit(X, y)

# In[31]:

clf.predict([[0, .5]])

# ### Advanced example: Grid Search with Cross-Validation to find hyper parameters

#

# Taken from http://scikit-learn.org/stable/auto_examples/grid_search_digits.html and http://scikit-learn.org/stable/auto_examples/datasets/plot_digits_last_image.html

# In[32]:

from sklearn import datasets

from sklearn.cross_validation import train_test_split

from sklearn.grid_search import GridSearchCV

from sklearn.metrics import confusion_matrix

from sklearn.svm import SVC

digits = datasets.load_digits()

# In[33]:

import matplotlib.pyplot as plt

#Display the first digit

plt.figure(1, figsize=(3, 3))

plt.imshow(digits.images[-1], cmap=plt.cm.gray_r, interpolation='nearest')

plt.grid('off')

# In[34]:

n_samples = len(digits.images)

X = digits.images.reshape((n_samples, -1))

y = digits.target

# Split the dataset in two equal parts

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.5, random_state=0)

# In[35]:

# Set the parameters by cross-validation

tuned_parameters = [{'kernel': ['rbf'], 'gamma': [1e-3, 1e-4],

'C': [1, 10, 100, 1000]},

{'kernel': ['linear'], 'C': [1, 10, 100, 1000]}]

clf = GridSearchCV(SVC(C=1), tuned_parameters, cv=5)

clf.fit(X_train, y_train)

# In[36]:

print(clf.best_params_)

# In[37]:

y_true, y_pred = y_test, clf.predict(X_test)

ax = sns.heatmap(confusion_matrix(y_true, y_pred))

ax.set(xlabel='true label', ylabel='predicted label');

# ## Level 3: "Lord of Speed"

#

#  # ## Python is slow!

#

# * The interpreted language is indeed quite slow (just like matlab and R are slow)

# * Vectorize computations (i.e. the matlab way): leads to unreadable code.

#

# ## Great tools to generate C-code

#

# * Cython: Write Python-like syntax that can be translated to fast C-code and called from Python.

# * Numba: Directly write Python and auto-translate to LLVM.

# * Theano: Write numerical expressions in a NumPy-like syntax to build up compute-graph that can be compiled.

# * PyCUDA: GPU programming.

# ## Comparing Python, Cython and Numba

#

# Taken from https://jakevdp.github.io/blog/2013/06/15/numba-vs-cython-take-2/

# In[38]:

import numpy as np

X = np.random.random((1000, 3))

# In[39]:

def pairwise_python(X):

M = X.shape[0]

N = X.shape[1]

D = np.empty((M, M), dtype=np.float)

for i in range(M):

for j in range(M):

d = 0.0

for k in range(N):

tmp = X[i, k] - X[j, k]

d += tmp * tmp

D[i, j] = np.sqrt(d)

return D

get_ipython().run_line_magic('timeit', 'pairwise_python(X)')

# ## Cython

# In[40]:

get_ipython().run_line_magic('load_ext', 'cython')

# In[41]:

get_ipython().run_cell_magic('cython', '', 'import numpy as np\ncimport cython\nfrom libc.math cimport sqrt\n\n@cython.boundscheck(False)\n@cython.wraparound(False)\ndef pairwise_cython(double[:, ::1] X):\n cdef int M = X.shape[0]\n cdef int N = X.shape[1]\n cdef double tmp, d\n cdef double[:, ::1] D = np.empty((M, M), dtype=np.float64)\n for i in range(M):\n for j in range(M):\n d = 0.0\n for k in range(N):\n tmp = X[i, k] - X[j, k]\n d += tmp * tmp\n D[i, j] = sqrt(d)\n return np.asarray(D)\n')

# In[42]:

get_ipython().run_line_magic('timeit', 'pairwise_cython(X)')

# ## Numba

# In[43]:

from numba.decorators import autojit

pairwise_numba = autojit(pairwise_python)

# Run once to compile before timing

pairwise_numba(X)

get_ipython().run_line_magic('timeit', 'pairwise_numba(X)')

# # Level 4: "High Priest of Big Data"

#

#

# ## Python is slow!

#

# * The interpreted language is indeed quite slow (just like matlab and R are slow)

# * Vectorize computations (i.e. the matlab way): leads to unreadable code.

#

# ## Great tools to generate C-code

#

# * Cython: Write Python-like syntax that can be translated to fast C-code and called from Python.

# * Numba: Directly write Python and auto-translate to LLVM.

# * Theano: Write numerical expressions in a NumPy-like syntax to build up compute-graph that can be compiled.

# * PyCUDA: GPU programming.

# ## Comparing Python, Cython and Numba

#

# Taken from https://jakevdp.github.io/blog/2013/06/15/numba-vs-cython-take-2/

# In[38]:

import numpy as np

X = np.random.random((1000, 3))

# In[39]:

def pairwise_python(X):

M = X.shape[0]

N = X.shape[1]

D = np.empty((M, M), dtype=np.float)

for i in range(M):

for j in range(M):

d = 0.0

for k in range(N):

tmp = X[i, k] - X[j, k]

d += tmp * tmp

D[i, j] = np.sqrt(d)

return D

get_ipython().run_line_magic('timeit', 'pairwise_python(X)')

# ## Cython

# In[40]:

get_ipython().run_line_magic('load_ext', 'cython')

# In[41]:

get_ipython().run_cell_magic('cython', '', 'import numpy as np\ncimport cython\nfrom libc.math cimport sqrt\n\n@cython.boundscheck(False)\n@cython.wraparound(False)\ndef pairwise_cython(double[:, ::1] X):\n cdef int M = X.shape[0]\n cdef int N = X.shape[1]\n cdef double tmp, d\n cdef double[:, ::1] D = np.empty((M, M), dtype=np.float64)\n for i in range(M):\n for j in range(M):\n d = 0.0\n for k in range(N):\n tmp = X[i, k] - X[j, k]\n d += tmp * tmp\n D[i, j] = sqrt(d)\n return np.asarray(D)\n')

# In[42]:

get_ipython().run_line_magic('timeit', 'pairwise_cython(X)')

# ## Numba

# In[43]:

from numba.decorators import autojit

pairwise_numba = autojit(pairwise_python)

# Run once to compile before timing

pairwise_numba(X)

get_ipython().run_line_magic('timeit', 'pairwise_numba(X)')

# # Level 4: "High Priest of Big Data"

#

#  # # Lots of things happening!

#

# ## Big Data

# * Dask

# * Ibis

# * PySpark

# * bcolz

#

# ## Interactive data visualization

# * Bokeh

# * Plotly

# * pyxley

# ## Work interactively on Big Data with Dask

#

# Taken from https://jakevdp.github.io/blog/2015/08/14/out-of-core-dataframes-in-python/

# In[44]:

get_ipython().system('ls -lahL POIWorld.csv')

# In[45]:

from dask import dataframe as dd

columns = ["name", "amenity", "Longitude", "Latitude"]

data = dd.read_csv('POIWorld.csv', usecols=columns)

data

# In[46]:

with_name = data[data.name.notnull()]

# In[47]:

is_starbucks = with_name.name.str.contains('[Ss]tarbucks')

is_dunkin = with_name.name.str.contains('[Dd]unkin')

starbucks = with_name[is_starbucks]

dunkin = with_name[is_dunkin]

# In[48]:

from dask.diagnostics import ProgressBar

# In[49]:

with ProgressBar():

starbucks_count, dunkin_count = dd.compute(starbucks.name.count(), dunkin.name.count())

# In[50]:

starbucks_count, dunkin_count

# In[51]:

locs = dd.compute(starbucks.Longitude,

starbucks.Latitude,

dunkin.Longitude,

dunkin.Latitude)

# extract arrays of values fro the series:

lon_s, lat_s, lon_d, lat_d = [loc.values for loc in locs]

# In[52]:

import matplotlib.pyplot as plt

from mpl_toolkits.basemap import Basemap

def draw_USA():

"""initialize a basemap centered on the continental USA"""

plt.figure(figsize=(14, 10))

return Basemap(projection='lcc', resolution='l',

llcrnrlon=-119, urcrnrlon=-64,

llcrnrlat=22, urcrnrlat=49,

lat_1=33, lat_2=45, lon_0=-95,

area_thresh=10000)

m = draw_USA()

# Draw map background

m.fillcontinents(color='white', lake_color='#eeeeee')

m.drawstates(color='lightgray')

m.drawcoastlines(color='lightgray')

m.drawcountries(color='lightgray')

m.drawmapboundary(fill_color='#eeeeee')

# Plot the values in Starbucks Green and Dunkin Donuts Orange

style = dict(s=5, marker='o', alpha=0.5, zorder=2)

m.scatter(lon_s, lat_s, latlon=True,

label="Starbucks", color='#00592D', **style)

m.scatter(lon_d, lat_d, latlon=True,

label="Dunkin' Donuts", color='#FC772A', **style)

plt.legend(loc='lower left', frameon=False);

# ## Interactive data visualization with Bokeh

# In[55]:

from bokeh.io import output_notebook

from bokeh.resources import CDN

from bokeh.plotting import figure, show

output_notebook(resources=CDN)

from __future__ import print_function

from math import pi

from bokeh.browserlib import view

from bokeh.document import Document

from bokeh.embed import file_html

from bokeh.models.glyphs import Circle, Text

from bokeh.models import (

BasicTicker, ColumnDataSource, Grid, GridPlot, LinearAxis,

DataRange1d, PanTool, Plot, WheelZoomTool

)

from bokeh.resources import INLINE

from bokeh.sampledata.iris import flowers

from bokeh.plotting import show

colormap = {'setosa': 'red', 'versicolor': 'green', 'virginica': 'blue'}

flowers['color'] = flowers['species'].map(lambda x: colormap[x])

source = ColumnDataSource(

data=dict(

petal_length=flowers['petal_length'],

petal_width=flowers['petal_width'],

sepal_length=flowers['sepal_length'],

sepal_width=flowers['sepal_width'],

color=flowers['color']

)

)

text_source = ColumnDataSource(

data=dict(xcenter=[125], ycenter=[135])

)

xdr = DataRange1d()

ydr = DataRange1d()

def make_plot(xname, yname, xax=False, yax=False, text=None):

plot = Plot(

x_range=xdr, y_range=ydr, background_fill="#efe8e2",

border_fill='white', title="", min_border=2, h_symmetry=False, v_symmetry=False,

plot_width=150, plot_height=150)

circle = Circle(x=xname, y=yname, fill_color="color", fill_alpha=0.2, size=4, line_color="color")

r = plot.add_glyph(source, circle)

xdr.renderers.append(r)

ydr.renderers.append(r)

xticker = BasicTicker()

if xax:

xaxis = LinearAxis()

plot.add_layout(xaxis, 'below')

xticker = xaxis.ticker

plot.add_layout(Grid(dimension=0, ticker=xticker))

yticker = BasicTicker()

if yax:

yaxis = LinearAxis()

plot.add_layout(yaxis, 'left')

yticker = yaxis.ticker

plot.add_layout(Grid(dimension=1, ticker=yticker))

plot.add_tools(PanTool(), WheelZoomTool())

if text:

text = " ".join(text.split('_'))

text = Text(

x={'field':'xcenter', 'units':'screen'},

y={'field':'ycenter', 'units':'screen'},

text=[text], angle=pi/4, text_font_style="bold", text_baseline="top",

text_color="#ffaaaa", text_alpha=0.7, text_align="center", text_font_size="28pt"

)

plot.add_glyph(text_source, text)

return plot

xattrs = ["petal_length", "petal_width", "sepal_width", "sepal_length"]

yattrs = list(reversed(xattrs))

plots = []

for y in yattrs:

row = []

for x in xattrs:

xax = (y == yattrs[-1])

yax = (x == xattrs[0])

text = x if (x==y) else None

plot = make_plot(x, y, xax, yax, text)

row.append(plot)

plots.append(row)

grid = GridPlot(children=plots, title="iris_splom")

# In[56]:

show(grid)

# # Staying up-to-date

# * Get on [Twitter](https://twitter.com/twiecki)

# * Frequent [HackerNews](https://news.ycombinator.com)

# * Frequent [DataTau](https://datatau.com)

# * Visit [PyData conferences](http://pydata.org/) and the [SciPy conference](http://conference.scipy.org/)

#

# # Lots of things happening!

#

# ## Big Data

# * Dask

# * Ibis

# * PySpark

# * bcolz

#

# ## Interactive data visualization

# * Bokeh

# * Plotly

# * pyxley

# ## Work interactively on Big Data with Dask

#

# Taken from https://jakevdp.github.io/blog/2015/08/14/out-of-core-dataframes-in-python/

# In[44]:

get_ipython().system('ls -lahL POIWorld.csv')

# In[45]:

from dask import dataframe as dd

columns = ["name", "amenity", "Longitude", "Latitude"]

data = dd.read_csv('POIWorld.csv', usecols=columns)

data

# In[46]:

with_name = data[data.name.notnull()]

# In[47]:

is_starbucks = with_name.name.str.contains('[Ss]tarbucks')

is_dunkin = with_name.name.str.contains('[Dd]unkin')

starbucks = with_name[is_starbucks]

dunkin = with_name[is_dunkin]

# In[48]:

from dask.diagnostics import ProgressBar

# In[49]:

with ProgressBar():

starbucks_count, dunkin_count = dd.compute(starbucks.name.count(), dunkin.name.count())

# In[50]:

starbucks_count, dunkin_count

# In[51]:

locs = dd.compute(starbucks.Longitude,

starbucks.Latitude,

dunkin.Longitude,

dunkin.Latitude)

# extract arrays of values fro the series:

lon_s, lat_s, lon_d, lat_d = [loc.values for loc in locs]

# In[52]:

import matplotlib.pyplot as plt

from mpl_toolkits.basemap import Basemap

def draw_USA():

"""initialize a basemap centered on the continental USA"""

plt.figure(figsize=(14, 10))

return Basemap(projection='lcc', resolution='l',

llcrnrlon=-119, urcrnrlon=-64,

llcrnrlat=22, urcrnrlat=49,

lat_1=33, lat_2=45, lon_0=-95,

area_thresh=10000)

m = draw_USA()

# Draw map background

m.fillcontinents(color='white', lake_color='#eeeeee')

m.drawstates(color='lightgray')

m.drawcoastlines(color='lightgray')

m.drawcountries(color='lightgray')

m.drawmapboundary(fill_color='#eeeeee')

# Plot the values in Starbucks Green and Dunkin Donuts Orange

style = dict(s=5, marker='o', alpha=0.5, zorder=2)

m.scatter(lon_s, lat_s, latlon=True,

label="Starbucks", color='#00592D', **style)

m.scatter(lon_d, lat_d, latlon=True,

label="Dunkin' Donuts", color='#FC772A', **style)

plt.legend(loc='lower left', frameon=False);

# ## Interactive data visualization with Bokeh

# In[55]:

from bokeh.io import output_notebook

from bokeh.resources import CDN

from bokeh.plotting import figure, show

output_notebook(resources=CDN)

from __future__ import print_function

from math import pi

from bokeh.browserlib import view

from bokeh.document import Document

from bokeh.embed import file_html

from bokeh.models.glyphs import Circle, Text

from bokeh.models import (

BasicTicker, ColumnDataSource, Grid, GridPlot, LinearAxis,

DataRange1d, PanTool, Plot, WheelZoomTool

)

from bokeh.resources import INLINE

from bokeh.sampledata.iris import flowers

from bokeh.plotting import show

colormap = {'setosa': 'red', 'versicolor': 'green', 'virginica': 'blue'}

flowers['color'] = flowers['species'].map(lambda x: colormap[x])

source = ColumnDataSource(

data=dict(

petal_length=flowers['petal_length'],

petal_width=flowers['petal_width'],

sepal_length=flowers['sepal_length'],

sepal_width=flowers['sepal_width'],

color=flowers['color']

)

)

text_source = ColumnDataSource(

data=dict(xcenter=[125], ycenter=[135])

)

xdr = DataRange1d()

ydr = DataRange1d()

def make_plot(xname, yname, xax=False, yax=False, text=None):

plot = Plot(

x_range=xdr, y_range=ydr, background_fill="#efe8e2",

border_fill='white', title="", min_border=2, h_symmetry=False, v_symmetry=False,

plot_width=150, plot_height=150)

circle = Circle(x=xname, y=yname, fill_color="color", fill_alpha=0.2, size=4, line_color="color")

r = plot.add_glyph(source, circle)

xdr.renderers.append(r)

ydr.renderers.append(r)

xticker = BasicTicker()

if xax:

xaxis = LinearAxis()

plot.add_layout(xaxis, 'below')

xticker = xaxis.ticker

plot.add_layout(Grid(dimension=0, ticker=xticker))

yticker = BasicTicker()

if yax:

yaxis = LinearAxis()

plot.add_layout(yaxis, 'left')

yticker = yaxis.ticker

plot.add_layout(Grid(dimension=1, ticker=yticker))

plot.add_tools(PanTool(), WheelZoomTool())

if text:

text = " ".join(text.split('_'))

text = Text(

x={'field':'xcenter', 'units':'screen'},

y={'field':'ycenter', 'units':'screen'},

text=[text], angle=pi/4, text_font_style="bold", text_baseline="top",

text_color="#ffaaaa", text_alpha=0.7, text_align="center", text_font_size="28pt"

)

plot.add_glyph(text_source, text)

return plot

xattrs = ["petal_length", "petal_width", "sepal_width", "sepal_length"]

yattrs = list(reversed(xattrs))

plots = []

for y in yattrs:

row = []

for x in xattrs:

xax = (y == yattrs[-1])

yax = (x == xattrs[0])

text = x if (x==y) else None

plot = make_plot(x, y, xax, yax, text)

row.append(plot)

plots.append(row)

grid = GridPlot(children=plots, title="iris_splom")

# In[56]:

show(grid)

# # Staying up-to-date

# * Get on [Twitter](https://twitter.com/twiecki)

# * Frequent [HackerNews](https://news.ycombinator.com)

# * Frequent [DataTau](https://datatau.com)

# * Visit [PyData conferences](http://pydata.org/) and the [SciPy conference](http://conference.scipy.org/)

#