No Surprises

$$ \newcommand{\eg}{{\it e.g.}} \newcommand{\ie}{{\it i.e.}} \newcommand{\argmin}{\operatornamewithlimits{argmin}} \newcommand{\mc}{\mathcal} \newcommand{\mb}{\mathbb} \newcommand{\mf}{\mathbf} \newcommand{\minimize}{{\text{minimize}}} \newcommand{\diag}{{\text{diag}}} \newcommand{\cond}{{\text{cond}}} \newcommand{\rank}{{\text{rank }}} \newcommand{\range}{{\mathcal{R}}} \newcommand{\null}{{\mathcal{N}}} \newcommand{\tr}{{\text{trace}}} \newcommand{\dom}{{\text{dom}}} \newcommand{\dist}{{\text{dist}}} \newcommand{\R}{\mathbf{R}} \newcommand{\SM}{\mathbf{S}} \newcommand{\ball}{\mathcal{B}} \newcommand{\bmat}[1]{\begin{bmatrix}#1\end{bmatrix}} \newcommand{\loss}{\ell} \newcommand{\eloss}{\mc{L}} \newcommand{\abs}[1]{| #1 |} \newcommand{\norm}[1]{\| #1 \|} \newcommand{\tp}{T} $$

In this section, we will load a sound sample (a .wav file) and examine which musical notes (or chords) the song consists of. A table matching frequencies to notes can be found, for example, at https://en.wikipedia.org/wiki/Piano_key_frequencies.

We will first need to import the following modules: the third is for loading the .wav file, and the last is for playing it back.

import numpy as np

import matplotlib.pyplot as plt

from scipy.io import wavfile

from IPython.display import Audio

In this lecture, we will access data files that are uploaded to Google Drive. To do this, we first have to mount Google Drive.

Run the following cell. You will then be presented with a link and asked to enter your authorization code.

Click the link and log in with the Inha University account you are working with. You will then be shown the authorization code.

Copy the authorization code and paste it into the blank.

Note that this step is necessary ONLY when you work in the Google Colab environment.

import os

from google.colab import drive

drive.mount('/content/drive')

os.chdir('/content/drive/My Drive/Colab Notebooks/ase3001')

Mounted at /content/drive
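If you run the notebook locally instead of on Colab, the mounting cell above fails because google.colab is not installed there. A minimal sketch of a guard, assuming the same folder path as above; the function name setup_workdir is hypothetical:

```python
import os


def setup_workdir(colab_dir="/content/drive/My Drive/Colab Notebooks/ase3001"):
    """Mount Google Drive and cd into colab_dir when running on Colab.

    Returns True on Colab, False elsewhere (the working directory is left unchanged).
    """
    try:
        from google.colab import drive  # only importable inside Colab
    except ImportError:
        return False  # local Jupyter: keep the .wav file in the current directory
    drive.mount('/content/drive')
    os.chdir(colab_dir)
    return True


in_colab = setup_workdir()
```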

The following code loads the first 12 seconds of a song that you've probably heard.

The loaded data is a tuple containing the sampling rate, data[0], and the time-series signals from the two stereo channels, data[1], an array with one column per channel.

# The wave file can be downloaded from

# https://jonghank.github.io/ase3001/files/no_surprises_clip.wav

data = wavfile.read('no_surprises_clip.wav')

framerate = data[0]

sounddata = data[1]

t = np.arange(0,len(sounddata))/framerate

plt.figure(figsize=(12,4), dpi=100)

plt.plot(t,sounddata[:,0], label='Left channel')   # a stereo WAV stores the left channel first

plt.plot(t,sounddata[:,1], label='Right channel')

plt.xlabel(r'$t$ (s)')

plt.title('Stereo signal')

plt.legend()

plt.show()

Audio([sounddata[:,0], sounddata[:,1]], rate=framerate, autoplay=True)   # rows are [left, right]
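Because data[1] has shape (number of samples, 2), column 0 of sounddata is the first channel stored in the file, which for a standard stereo WAV is the left channel. The sketch below checks this round trip on a hypothetical synthetic tone written to a temporary file with scipy.io.wavfile.write; the file name and tone frequencies are illustrative only.

```python
import os
import tempfile

import numpy as np
from scipy.io import wavfile

# Hypothetical 0.5 s stereo test tone (not the song):
# left channel = 440 Hz, right channel = 660 Hz, stored as 16-bit PCM.
rate = 44100
t = np.arange(int(0.5 * rate)) / rate
left = 0.5 * np.sin(2 * np.pi * 440 * t)
right = 0.5 * np.sin(2 * np.pi * 660 * t)
stereo = (np.column_stack([left, right]) * 32767).astype(np.int16)

path = os.path.join(tempfile.mkdtemp(), "test_tone.wav")
wavfile.write(path, rate, stereo)

data = wavfile.read(path)       # returns a (sampling rate, samples) tuple
framerate, sounddata = data     # same as data[0], data[1]
print(framerate, sounddata.shape, sounddata.dtype)
```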

The code below merges the two stereo channels into a single mono signal simply by adding them. From now on, we will work with this mono signal.

mono = sounddata@[1,1]

plt.figure(figsize=(12,4), dpi=100)

plt.plot(t, mono)

plt.xlabel(r'$t$ (s)')

plt.title('Mono signal')

plt.show()

Audio(mono, rate=framerate, autoplay=True)
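One detail worth knowing about the mono mix: 16-bit .wav files load as int16 arrays, and adding two int16 arrays elementwise wraps around silently on overflow. The sketch below uses two hypothetical near-full-scale samples to compare a naive sum, a float sum, and the notebook's sounddata@[1,1]. The matrix product is safe here because the Python list [1,1] becomes a wider integer array, so the result is promoted beyond int16.

```python
import numpy as np

# Two hypothetical stereo samples near full scale, as stored in a 16-bit .wav.
stereo = np.array([[30000, 30000], [-20000, -20000]], dtype=np.int16)

naive = stereo[:, 0] + stereo[:, 1]            # int16 + int16 stays int16 and wraps around
safe = stereo.astype(np.float64).sum(axis=1)   # widen first, then add
via_matmul = stereo @ [1, 1]                   # the notebook's form; promoted to a wider int

print(naive, safe, via_matmul)
```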

(Problem 1) The sample repeats the same riff twice. Crop the sample to $0 \le t \le 6.5$ s and save it as a new time series, $x^\text{cut}(t)$. Through Fourier analysis, you should be able to identify several dominant musical notes in this part. What are they? List the five most dominant ones.

# your code here

# your code here
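As a hint of the mechanics only (not the answer), the sketch below crops a hypothetical two-tone test signal with a boolean mask on t, takes the one-sided spectrum with np.fft.rfft, and ranks the local maxima found by scipy.signal.find_peaks. Ranking local maxima rather than raw bins avoids counting the neighboring bins of one strong peak as separate notes. The 8 kHz sampling rate and the tone frequencies are assumptions for the demo.

```python
import numpy as np
from scipy.signal import find_peaks

framerate = 8000                          # hypothetical sampling rate (Hz)
t = np.arange(10 * framerate) / framerate
# Hypothetical 10 s test signal: A3 (220 Hz) plus a weaker E4 (329.63 Hz).
x = np.sin(2 * np.pi * 220 * t) + 0.5 * np.sin(2 * np.pi * 329.63 * t)

# Crop to 0 <= t <= 6.5 s with a boolean mask on the time axis.
x_cut = x[t <= 6.5]

# One-sided magnitude spectrum of the real-valued signal and its frequency axis.
mag = np.abs(np.fft.rfft(x_cut))
f = np.fft.rfftfreq(len(x_cut), d=1 / framerate)

# Keep only local maxima of the spectrum, then take the k strongest of them.
peaks, _ = find_peaks(mag)
k = 2
top = peaks[np.argsort(mag[peaks])[::-1][:k]]
print(np.round(f[top], 1))
```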

(Problem 2) The sample includes performances from two instruments. Separate the two performances using a threshold frequency of $f_\text{threshold}=300\text{ Hz}$: save the part of the sound below $f_\text{threshold}$ in $x_\text{bass}$ and the part above $f_\text{threshold}$ in $x_\text{guitar}$. Play both and check whether the sounds of the two instruments are well separated.

# your code here

# your code here
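One simple way to do the split, sketched here under assumptions, is an ideal (brick-wall) filter in the frequency domain: zero every rfft bin on the wrong side of $f_\text{threshold}$ and transform back with np.fft.irfft. The two test tones below are hypothetical stand-ins for bass and guitar; with a real recording, overtones of the bass that lie above 300 Hz will leak into x_guitar.

```python
import numpy as np

framerate = 8000                               # hypothetical sampling rate (Hz)
t = np.arange(2 * framerate) / framerate
low = np.sin(2 * np.pi * 110 * t)              # hypothetical "bass" tone
high = 0.5 * np.sin(2 * np.pi * 660 * t)       # hypothetical "guitar" tone
x = low + high

f_threshold = 300.0
X = np.fft.rfft(x)
f = np.fft.rfftfreq(len(x), d=1 / framerate)

# Ideal split: keep bins below / at-or-above the threshold, zero the rest,
# and return to the time domain with the original length.
x_bass = np.fft.irfft(np.where(f < f_threshold, X, 0), n=len(x))
x_guitar = np.fft.irfft(np.where(f >= f_threshold, X, 0), n=len(x))
```

In the notebook, Audio(x_bass, rate=framerate) and Audio(x_guitar, rate=framerate) would then play the two parts.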

(Problem 3) Now your job is to write software that takes a musical sound sample and generates sheet music for it. This can be achieved by performing Fourier analysis on moving windowed segments of the audio signal and displaying the results as a two-dimensional frequency-versus-time plot, as illustrated below.

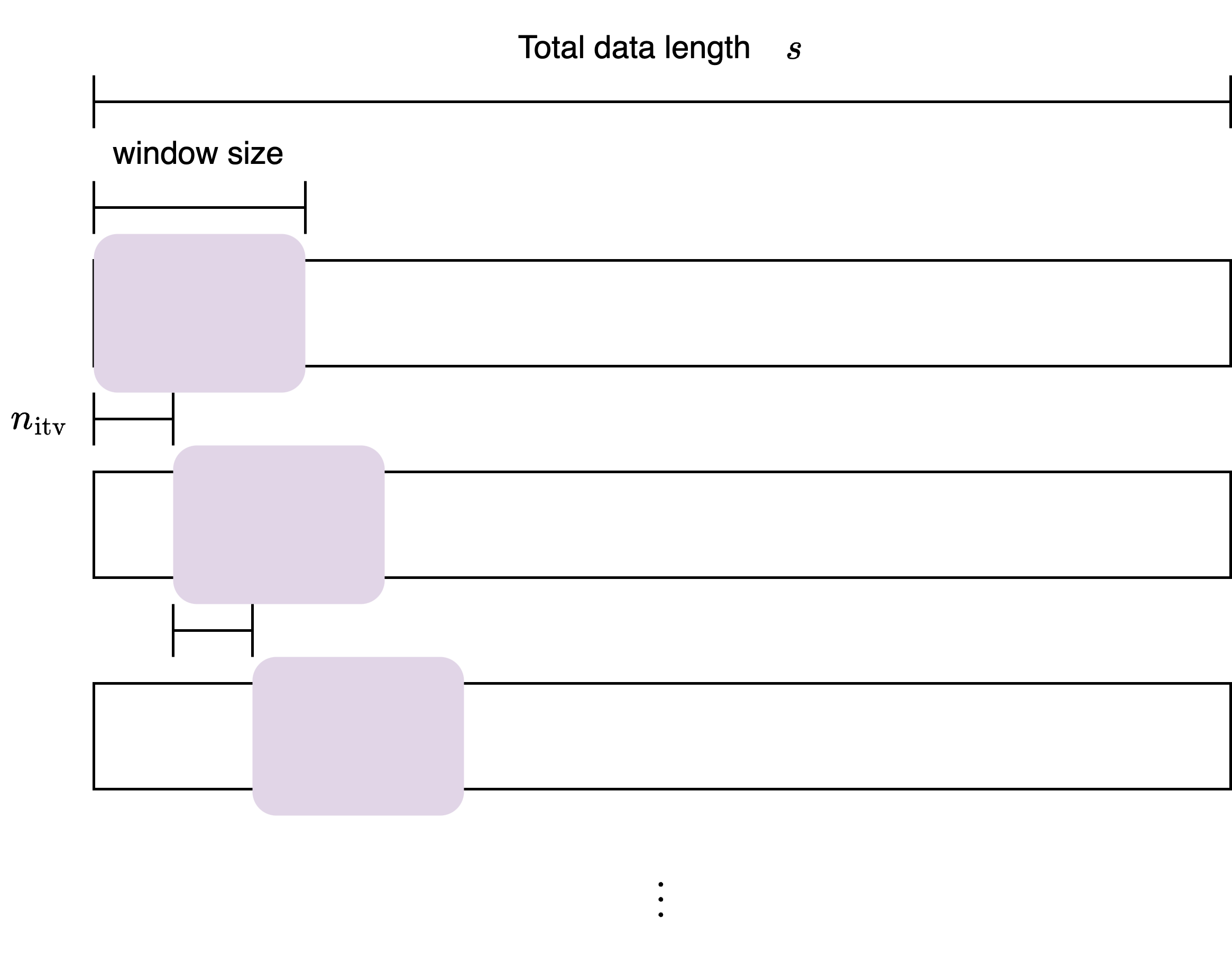

Divide the audio data $x^\text{cut}$ into segments of length n_wdw whose starting points are n_itv samples apart, and stack them as the columns of an array fft_in on which the Fourier transform is performed.

The input matrix for the Fourier transform is defined as:

$$ {\tt{fft}_\tt{in}} = \bmat{ y^{(0)} & y^{(n_\text{itv})} & y^{(2n_\text{itv})} & \cdots & y^{(mn_\text{itv})} } \in \mathbb{R}^{n_\text{wdw}\times(m+1)} $$

where

$$ \begin{aligned} y^{(k)} &= \bmat{ x^\text{cut}_k & x^\text{cut}_{k+1} & \cdots & x^\text{cut}_{k+n_\text{wdw}-1} }^T \in \mathbb{R}^{n_\text{wdw}}\\ m &= \left \lfloor \frac{s-n_\text{wdw}}{n_\text{itv}}\right \rfloor \end{aligned} $$

and $s$ is the number of samples in $x^\text{cut}$. Apply the Fourier transform to each column of fft_in and save the result into an array named fft_out.

# your code here
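A vectorized way to build fft_in exactly as defined above is to index x_cut with an n_wdw × (m+1) array of sample positions. The sketch below assumes x_cut is a 1-D NumPy array; the helper name frame_signal and the toy signal are hypothetical. It uses np.fft.rfft along axis 0, which keeps only the non-negative frequencies of each real-valued column (np.fft.fft would return all n_wdw bins).

```python
import numpy as np


def frame_signal(x_cut, n_wdw, n_itv):
    """Stack the windows y^(0), y^(n_itv), ..., y^(m n_itv) as columns."""
    s = len(x_cut)
    m = (s - n_wdw) // n_itv                       # floor((s - n_wdw) / n_itv)
    starts = n_itv * np.arange(m + 1)              # 0, n_itv, ..., m * n_itv
    # Entry (i, j) is the sample index starts[j] + i.
    idx = np.arange(n_wdw)[:, None] + starts[None, :]
    return x_cut[idx]                              # shape (n_wdw, m + 1)


x_cut = np.arange(10.0)                            # hypothetical toy signal, s = 10
fft_in = frame_signal(x_cut, n_wdw=4, n_itv=3)     # m = floor(6 / 3) = 2
fft_out = np.fft.rfft(fft_in, axis=0)              # FFT of each column (window)
print(fft_in)
```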

A spectrogram is a plot of frequency intensities, obtained through Fourier analysis, over time. It is created with the Short-Time Fourier Transform (STFT), which divides the signal into fixed-length segments (windows) and performs a Fourier transform on each segment to obtain the frequency content at that time.
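The window length sets a trade-off: with a rectangular window, FFT bins are framerate/n_wdw Hz apart, while consecutive columns are n_itv/framerate s apart. As a rough criterion, the bin spacing should be smaller than the semitone gap at the lowest note of interest. The sketch below assumes a 44.1 kHz sampling rate (check framerate from the file) and n_itv = 1024; both helper names are hypothetical.

```python
def stft_resolution(framerate, n_wdw, n_itv):
    """Frequency-bin spacing (Hz) and time step (s) of a rectangular-window STFT."""
    df = framerate / n_wdw     # spacing of FFT bins within one window
    dt = n_itv / framerate     # time between consecutive window starts
    return df, dt


def semitone_gap(N):
    """Gap (Hz) between piano key N and key N+1, using f_N = 440 * 2**((N-49)/12)."""
    f_N = 440 * 2 ** ((N - 49) / 12)
    return f_N * (2 ** (1 / 12) - 1)


for n_wdw in (2048, 4096, 8192):
    df, dt = stft_resolution(44100, n_wdw, n_itv=1024)
    # Does the bin spacing beat the semitone gap at key 37 (A3)?
    print(n_wdw, round(df, 2), round(dt, 4), df < semitone_gap(37))
```

Under these assumptions, 2048 samples give bins about 21.5 Hz apart, wider than the roughly 13.1 Hz gap between A3 and A#3, whereas 4096 samples give about 10.8 Hz at the cost of coarser time localization.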

Plot a spectrogram of the result saved in fft_out using matplotlib.pyplot.pcolormesh.

# your code here
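A minimal plotting sketch, assuming fft_out was produced by np.fft.rfft along axis 0 so that its rows line up with np.fft.rfftfreq(n_wdw, 1/framerate). Each column is placed at the center of its window, and the frequency axis is cropped to a hypothetical f_max of 2000 Hz; the helper plot_spectrogram and the 440 Hz test tone are illustrative only.

```python
import numpy as np
import matplotlib.pyplot as plt


def plot_spectrogram(fft_out, framerate, n_wdw, n_itv, f_max=2000):
    """Plot |fft_out| (rows: rfft bins, columns: windows) with pcolormesh."""
    n_frames = fft_out.shape[1]
    f = np.fft.rfftfreq(n_wdw, d=1 / framerate)                  # bin frequencies (Hz)
    t = (np.arange(n_frames) * n_itv + n_wdw / 2) / framerate    # window centers (s)
    keep = f <= f_max                                            # zoom in on the musical range
    fig, ax = plt.subplots(figsize=(12, 5), dpi=100)
    mesh = ax.pcolormesh(t, f[keep], np.abs(fft_out[keep, :]), shading='auto')
    ax.set_xlabel(r'$t$ (s)')
    ax.set_ylabel('Frequency (Hz)')
    fig.colorbar(mesh, ax=ax, label='|FFT|')
    return fig, ax, mesh


# Hypothetical demo: a 2 s A4 tone framed as in the definition of fft_in.
framerate, n_wdw, n_itv = 8000, 1024, 512
x = np.sin(2 * np.pi * 440 * np.arange(2 * framerate) / framerate)
m = (len(x) - n_wdw) // n_itv
fft_in = np.column_stack([x[k * n_itv : k * n_itv + n_wdw] for k in range(m + 1)])
fft_out = np.fft.rfft(fft_in, axis=0)
fig, ax, mesh = plot_spectrogram(fft_out, framerate, n_wdw, n_itv)
```

In the notebook, follow the call with plt.show() to display the figure.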

(Problem 4) The piano key number N identifies each key on a standard 88-key piano keyboard: key number 1 is the leftmost key (A0), which produces the lowest note, and key number 88 is the rightmost key (C8), which produces the highest note. A difference of one between key numbers corresponds to a half-step (semitone) difference in pitch (see the Piano key frequencies table linked above). For example, key number 49 is A4, the A above middle C.

More specifically, the frequency of the note produced by piano key number $N$ follows an exponential relationship:

$$ f_N = 440\times 2^{\frac{N-49}{12}}. $$

As $N$ increases, the note's frequency rises exponentially. Each octave consists of 12 keys, corresponding to the 12 semitones of Western music, and moving up one octave doubles the frequency.
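The formula and its inverse, $N = 49 + 12\log_2(f/440)$, can be coded directly. Rounding the inverse gives the nearest key for a measured peak frequency, and the rounding error in cents (1/100 of a semitone) indicates how far the note sits from standard tuning. A sketch with hypothetical helper names; the note-name rule assumes key 1 is A0 as described above.

```python
import numpy as np

NAMES = ['A', 'A#', 'B', 'C', 'C#', 'D', 'D#', 'E', 'F', 'F#', 'G', 'G#']


def key_to_freq(N):
    """Frequency (Hz) of piano key N: f_N = 440 * 2**((N - 49) / 12)."""
    return 440.0 * 2.0 ** ((np.asarray(N) - 49) / 12)


def freq_to_key(f):
    """Nearest piano key to frequency f (Hz), inverting the formula above."""
    return np.rint(49 + 12 * np.log2(np.asarray(f) / 440.0)).astype(int)


def cents_off(f):
    """Deviation (cents) of f from the nearest key; negative means flat."""
    return 1200 * np.log2(np.asarray(f) / key_to_freq(freq_to_key(f)))


def key_name(N):
    """Scientific pitch name of key N (key 1 = A0, key 40 = C4, key 88 = C8)."""
    return f"{NAMES[(N - 1) % 12]}{(N + 8) // 12}"


print(key_to_freq(37), int(freq_to_key(261.63)), key_name(40))
```

For instance, freq_to_key(261.63) rounds to key 40, whose name is C4 (middle C).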

Overlay the frequencies of the 36 piano keys spanning three octaves upward from key 37 (A3) on the spectrogram obtained earlier, and then identify the guitar notes that appear during the first 6.5 seconds of “No Surprises.” (Note that Ed O'Brien, the band's rhythm guitarist who recorded this song, very slightly down-tuned his guitar.)

# your code here
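For the overlay, one option is to draw a horizontal line at each $f_N$ on the spectrogram axes and label it with the note name. The sketch below assumes you have a Matplotlib Axes ax holding the spectrogram; the helper overlay_piano_keys and its offset_cents parameter (which shifts the whole grid, for example to test a slightly down-tuned guitar) are hypothetical.

```python
import numpy as np
import matplotlib.pyplot as plt


def overlay_piano_keys(ax, first_key=37, n_keys=36, offset_cents=0.0):
    """Draw a labeled horizontal line at f_N for keys first_key .. first_key+n_keys-1.

    offset_cents (hypothetical) shifts every line, e.g. -20 for a guitar tuned 20 cents flat.
    """
    names = ['A', 'A#', 'B', 'C', 'C#', 'D', 'D#', 'E', 'F', 'F#', 'G', 'G#']
    keys = np.arange(first_key, first_key + n_keys)
    freqs = 440.0 * 2.0 ** ((keys - 49) / 12 + offset_cents / 1200)
    for N, f in zip(keys, freqs):
        ax.axhline(f, color='w', lw=0.5, alpha=0.6)
        # x in axes coordinates (just right of the plot), y in data coordinates (Hz)
        ax.text(1.01, f, f"{names[(N - 1) % 12]}{(N + 8) // 12}",
                transform=ax.get_yaxis_transform(), fontsize=6, va='center')
    return freqs


fig, ax = plt.subplots(figsize=(12, 5), dpi=100)
ax.set_ylim(200, 1700)            # stand-in for the spectrogram axes
freqs = overlay_piano_keys(ax)
```

If the bright bands sit consistently a little below the lines, that is consistent with the down-tuning mentioned above, and a small negative offset_cents can be tried.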