TF from MQL¶

This notebook can read an MQL dump of a version of the BHSA Hebrew Text Database and transform it into a Text-Fabric resource.

Discussion¶

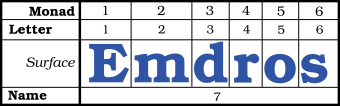

The principled way of going about such a conversion is to import the MQL source into an Emdros database, and use it to retrieve objects and features from there.

Because the syntax of an MQL file leaves some freedom, it is error prone to do a text-to-text conversion from MQL to something else.

Yet this is what we do, the error-prone thing. That way we avoid installing, configuring, and managing Emdros and MySQL/SQLite3. Apart from the upfront work to get that going, the conversion itself would also be much slower.

So here you are: a smallish script that does an awful lot of work, mostly correctly, if used carefully.

Caveat¶

This notebook makes use of a new feature of text-fabric, first present in 2.3.12. Make sure to upgrade first.

sudo

import os

import sys

from shutil import rmtree

from tf.fabric import Fabric

import utils

if "SCRIPT" not in locals():
    SCRIPT = False
    FORCE = True
    CORE_NAME = "bhsa"
    VERSION = "2021"
    RENAME = (
        ("g_suffix", "trailer"),
        ("g_suffix_utf8", "trailer_utf8"),
    )


def stop(good=False):
    if SCRIPT:
        sys.exit(0 if good else 1)

Setting up the context: source file and target directories¶

The conversion is executed in an environment of directories, so that sources, temp files and results are in convenient places and do not have to be shifted around.

repoBase = os.path.expanduser("~/github/etcbc")

thisRepo = "{}/{}".format(repoBase, CORE_NAME)

thisSource = "{}/source/{}".format(thisRepo, VERSION)

mqlzFile = "{}/{}.mql.bz2".format(thisSource, CORE_NAME)

thisTemp = "{}/_temp/{}".format(thisRepo, VERSION)

thisTempSource = "{}/source".format(thisTemp)

mqlFile = "{}/{}.mql".format(thisTempSource, CORE_NAME)

thisTempTf = "{}/tf".format(thisTemp)

thisTf = "{}/tf/{}".format(thisRepo, VERSION)
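The directory layout set up above can be summarized in one helper. This is only a sketch: `conversion_paths` is a hypothetical function that is not part of this notebook or of `utils`, and the base directory in the usage line is made up. It builds the same paths with `os.path.join`.

```python
import os


def conversion_paths(repo_base, core_name, version):
    """Return the source, temp, and target paths used by the conversion.

    Hypothetical helper: it mirrors the variables defined in the cell above.
    """
    this_repo = os.path.join(repo_base, core_name)
    this_source = os.path.join(this_repo, "source", version)
    this_temp = os.path.join(this_repo, "_temp", version)
    this_temp_source = os.path.join(this_temp, "source")
    return {
        "mqlzFile": os.path.join(this_source, core_name + ".mql.bz2"),
        "thisTempSource": this_temp_source,
        "mqlFile": os.path.join(this_temp_source, core_name + ".mql"),
        "thisTempTf": os.path.join(this_temp, "tf"),
        "thisTf": os.path.join(this_repo, "tf", version),
    }


# Made-up base directory, for illustration only
paths = conversion_paths("/home/me/github/etcbc", "bhsa", "2021")
```

Keeping the zipped source, the temporary files, and the delivered dataset in separate subtrees means a failed run never touches the previously delivered version.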

Test¶

Check whether this conversion is needed in the first place. Only when run as a script.

if SCRIPT:
    testFile = "{}/.tf/otype.tfx".format(thisTf)
    (good, work) = utils.mustRun(mqlzFile, testFile, force=FORCE)
    if not good:
        stop(good=False)
    if not work:
        stop(good=True)
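The implementation of `utils.mustRun` is not shown in this notebook. As a rough illustration of what such a check might do, the sketch below compares modification times of a source and a target file. The function `needs_rebuild` and its logic are assumptions, not the actual helper; it returns a `(good, work)` pair in the same shape as the call above.

```python
import os
import tempfile


def needs_rebuild(source, target, force=False):
    """Decide whether target must be regenerated from source.

    Returns (good, work): good is False when the source is missing,
    work is True when a conversion should run.
    Assumed logic, not the real utils.mustRun.
    """
    if not os.path.exists(source):
        return (False, False)
    if force or not os.path.exists(target):
        return (True, True)
    # Rebuild only when the source is newer than the target
    return (True, os.path.getmtime(source) > os.path.getmtime(target))


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "bhsa.mql.bz2")
    dst = os.path.join(tmp, "otype.tfx")
    missing = needs_rebuild(src, dst)  # source does not exist yet
    open(src, "w").close()
    first_run = needs_rebuild(src, dst)  # target does not exist yet
    open(dst, "w").close()
    os.utime(src, (1000, 1000))  # make the source older than the target
    os.utime(dst, (2000, 2000))
    up_to_date = needs_rebuild(src, dst)
    forced = needs_rebuild(src, dst, force=True)
```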

TF Settings¶

We add some custom information here.

- the MQL object type that corresponds to the Text-Fabric slot type, typically word;

- a piece of metadata that will go into every feature; the time will be added automatically;

- suitable text formats for the otext feature of TF.

The oText feature is very sensitive to what is available in the source MQL. It needs to be configured here. We save the configs we need per source and version. And we define a stripped down default version to start with.

slotType = "word"

featureMetaData = dict(

dataset="BHSA",

version=VERSION,

datasetName="Biblia Hebraica Stuttgartensia Amstelodamensis",

author="Eep Talstra Centre for Bible and Computer",

encoders="Constantijn Sikkel (QDF), Ulrik Petersen (MQL) and Dirk Roorda (TF)",

website="https://shebanq.ancient-data.org",

email="shebanq@ancient-data.org",

)

oText = {

"": {

"": """

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

@fmt:text-orig-full={g_word_utf8}{g_suffix_utf8}

""",

},

"_temp": """

@fmt:lex-orig-full={g_lex_utf8}

@fmt:lex-orig-plain={lex_utf8}

@fmt:lex-trans-full={g_lex}

@fmt:lex-trans-plain={lex}

@fmt:text-orig-full={g_word_utf8}{trailer_utf8}

@fmt:text-orig-plain={g_cons_utf8}{trailer_utf8}

@fmt:text-trans-full={g_word}{trailer}

@fmt:text-trans-plain={g_cons}{trailer}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

"2021": """

@fmt:lex-orig-full={g_lex_utf8}

@fmt:lex-orig-plain={lex_utf8}

@fmt:lex-trans-full={g_lex}

@fmt:lex-trans-plain={lex}

@fmt:text-orig-full={g_word_utf8}{trailer_utf8}

@fmt:text-orig-plain={g_cons_utf8}{trailer_utf8}

@fmt:text-trans-full={g_word}{trailer}

@fmt:text-trans-plain={g_cons}{trailer}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

"2017": """

@fmt:lex-orig-full={g_lex_utf8}

@fmt:lex-orig-plain={lex_utf8}

@fmt:lex-trans-full={g_lex}

@fmt:lex-trans-plain={lex}

@fmt:text-orig-full={g_word_utf8}{trailer_utf8}

@fmt:text-orig-plain={g_cons_utf8}{trailer_utf8}

@fmt:text-trans-full={g_word}{trailer}

@fmt:text-trans-plain={g_cons}{trailer}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

"2016": """

@fmt:lex-orig-full={g_lex_utf8}

@fmt:lex-orig-plain={lex_utf8}

@fmt:lex-trans-full={g_lex}

@fmt:lex-trans-plain={lex}

@fmt:text-orig-full={g_word_utf8}{trailer_utf8}

@fmt:text-orig-plain={g_cons_utf8}{trailer_utf8}

@fmt:text-trans-full={g_word}{trailer}

@fmt:text-trans-plain={g_cons}{trailer}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

"4b": """

@fmt:lex-orig-full={g_lex_utf8}

@fmt:lex-orig-plain={lex_utf8}

@fmt:lex-trans-full={g_lex}

@fmt:lex-trans-plain={lex}

@fmt:text-orig-full={g_word_utf8}{trailer_utf8}

@fmt:text-orig-full-ketiv={g_word_utf8}{trailer_utf8}

@fmt:text-orig-plain={g_cons_utf8}{trailer_utf8}

@fmt:text-trans-full={g_word}

@fmt:text-trans-full-ketiv={g_word}

@fmt:text-trans-plain={g_cons}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

"4": """

@fmt:lex-orig-full={g_lex_utf8}

@fmt:lex-orig-plain={lex_utf8}

@fmt:lex-trans-full={g_lex}

@fmt:lex-trans-plain={lex}

@fmt:text-orig-full={g_word_utf8}{trailer_utf8}

@fmt:text-orig-full-ketiv={g_word_utf8}{trailer_utf8}

@fmt:text-orig-plain={g_cons_utf8}{trailer_utf8}

@fmt:text-trans-full={g_word}

@fmt:text-trans-full-ketiv={g_word}

@fmt:text-trans-plain={g_cons}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

"3": """

@fmt:lex-orig-full={graphical_lexeme_utf8}

@fmt:lex-orig-plain={lexeme_utf8}

@fmt:lex-trans-full={graphical_lexeme}

@fmt:lex-trans-plain={lexeme}

@fmt:text-orig-full={text}{suffix}

@fmt:text-orig-plain={surface_consonants_utf8}{suffix}

@fmt:text-trans-full={graphical_word}

@fmt:text-trans-plain={surface_consonants}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

"c": """

@fmt:lex-orig-full={g_lex_utf8}

@fmt:lex-orig-plain={lex_utf8}

@fmt:lex-trans-full={g_lex}

@fmt:lex-trans-plain={lex}

@fmt:text-orig-full={g_word_utf8}{trailer_utf8}

@fmt:text-orig-plain={g_cons_utf8}{trailer_utf8}

@fmt:text-trans-full={g_word}{trailer}

@fmt:text-trans-plain={g_cons}{trailer}

@sectionFeatures=book,chapter,verse

@sectionTypes=book,chapter,verse

""", # noqa W291

}

The next cell selects the proper otext material, falling back on a default if nothing appropriate has been specified in oText.

thisOtext = oText.get(VERSION, oText[""])

if thisOtext is oText[""]:
    utils.caption(
        0, "WARNING: no otext feature info provided, using a meager default value"
    )
    otextInfo = {}
else:
    utils.caption(0, "INFO: otext feature information found")
    otextInfo = dict(
        line[1:].split("=", 1) for line in thisOtext.strip("\n").split("\n")
    )
    for x in sorted(otextInfo.items()):
        utils.caption(0, '\t{:<20} = "{}"'.format(*x))

| 0.00s INFO: otext feature information found

| 0.00s fmt:lex-orig-full = "{g_lex_utf8} "

| 0.00s fmt:lex-orig-plain = "{lex_utf8} "

| 0.00s fmt:lex-trans-full = "{g_lex} "

| 0.00s fmt:lex-trans-plain = "{lex} "

| 0.00s fmt:text-orig-full = "{g_word_utf8}{trailer_utf8}"

| 0.00s fmt:text-orig-plain = "{g_cons_utf8}{trailer_utf8}"

| 0.00s fmt:text-trans-full = "{g_word}{trailer}"

| 0.00s fmt:text-trans-plain = "{g_cons}{trailer}"

| 0.00s sectionFeatures = "book,chapter,verse"

| 0.00s sectionTypes = "book,chapter,verse"
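The parsing step above strips the leading `@` from each line and splits on the first `=`. The sketch below isolates that step on a two-line sample. `parse_otext` is a hypothetical name, and the extra check that skips blank or malformed lines is an addition that the notebook cell does not have. The final line uses Python's `str.format` only to show what the placeholders stand for; Text-Fabric applies its own template logic.

```python
def parse_otext(block):
    """Turn '@key=value' lines into a dict, splitting on the first '='."""
    info = {}
    for line in block.strip("\n").split("\n"):
        if not line.startswith("@") or "=" not in line:
            continue  # skip blank or malformed lines instead of failing
        key, value = line[1:].split("=", 1)
        info[key] = value
    return info


sample = """
@fmt:text-trans-plain={g_cons}{trailer}
@sectionFeatures=book,chapter,verse
"""
otext_info = parse_otext(sample)

# Illustration only: fill the placeholders with the values of one word
rendered = otext_info["fmt:text-trans-plain"].format(g_cons="BR>CJT", trailer=" ")
```

Splitting on the first `=` only keeps any later `=` characters inside the value intact.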

Overview¶

The program has several stages:

- prepare the source (utils.bunzip if needed)

- convert the MQL file into a Text-Fabric dataset

- differences (informational)

- deliver the TF data at its destination directory

- compile all TF features to binary format

Prepare¶

Check the source, utils.bunzip it if needed, empty the result directory.

if not os.path.exists(thisTempSource):
    os.makedirs(thisTempSource)

utils.caption(0, "bunzipping {} ...".format(mqlzFile))
utils.bunzip(mqlzFile, mqlFile)
utils.caption(0, "Done")

if os.path.exists(thisTempTf):
    rmtree(thisTempTf)
os.makedirs(thisTempTf)

| 2.01s bunzipping /Users/dirk/github/etcbc/bhsa/source/2021/bhsa.mql.bz2 ...
| 2.01s NOTE: Using existing unzipped file which is newer than bzipped one
| 2.01s Done
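The log shows that an existing unzipped file is reused when it is newer than the bzipped one. A minimal sketch of that behaviour with the standard `bz2` module follows. `bunzip_if_stale` is a hypothetical stand-in for `utils.bunzip`, whose real code is not shown here, and the file content is a placeholder.

```python
import bz2
import os
import shutil
import tempfile


def bunzip_if_stale(bz2_path, out_path):
    """Decompress bz2_path to out_path unless out_path is already up to date.

    Returns True when decompression ran, False when it was skipped.
    Assumed behaviour, modelled on the NOTE in the log output.
    """
    if os.path.exists(out_path) and (
        os.path.getmtime(out_path) >= os.path.getmtime(bz2_path)
    ):
        return False
    with bz2.open(bz2_path, "rb") as src, open(out_path, "wb") as dst:
        shutil.copyfileobj(src, dst)  # stream, so large dumps fit in memory
    return True


with tempfile.TemporaryDirectory() as tmp:
    packed = os.path.join(tmp, "bhsa.mql.bz2")
    unpacked = os.path.join(tmp, "bhsa.mql")
    with bz2.open(packed, "wb") as fh:
        fh.write(b"placeholder content\n")
    first = bunzip_if_stale(packed, unpacked)  # unpacks
    second = bunzip_if_stale(packed, unpacked)  # reuses the existing file
    with open(unpacked, "rb") as fh:
        content = fh.read()
```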

MQL to Text-Fabric¶

Transform the collected information into feature-like data structures, and write it all out to .tf files.

TF = Fabric(locations=thisTempTf, silent=True)

TF.importMQL(mqlFile, slotType=slotType, otext=otextInfo, meta=featureMetaData)

Rename features¶

We rename the features listed in RENAME, a tuple of (source, destination) pairs.

if RENAME is None:
    utils.caption(4, "Rename features: nothing to do")
else:
    utils.caption(4, "Renaming {} features in {}".format(len(RENAME), thisTempTf))
    for (srcFeature, dstFeature) in RENAME:
        srcPath = "{}/{}.tf".format(thisTempTf, srcFeature)
        dstPath = "{}/{}.tf".format(thisTempTf, dstFeature)
        if os.path.exists(srcPath):
            os.rename(srcPath, dstPath)
            utils.caption(0, "\trenamed {} to {}".format(srcFeature, dstFeature))
        else:
            utils.caption(0, "\tsource feature {} does not exist.".format(srcFeature))
            utils.caption(
                0, "\tdestination feature {} will not be created.".format(dstFeature)
            )

..............................................................................................
. 3m 41s Renaming 2 features in /Users/dirk/github/etcbc/bhsa/_temp/2021/tf .
..............................................................................................
| 3m 41s renamed g_suffix to trailer
| 3m 41s renamed g_suffix_utf8 to trailer_utf8

Diffs¶

Check differences with previous versions.

The new dataset has been created in a temporary directory, and has not yet been copied to its destination.

Here is your opportunity to compare the newly created features with the older features. You expect some differences in some features.

We check the differences between the previous version of the features and what has been generated. We list features that will be added and deleted and changed. For each changed feature we show the first line where the new feature differs from the old one. We ignore changes in the metadata, because the timestamp in the metadata will always change.

utils.checkDiffs(thisTempTf, thisTf)

..............................................................................................
. 3m 43s Check differences with previous version .
..............................................................................................
| 3m 43s 75 features to add
| 3m 43s     book
| 3m 43s     chapter
| 3m 43s     code
| 3m 43s     det
| 3m 43s     dist
| 3m 43s     dist_unit
| 3m 43s     distributional_parent
| 3m 43s     domain
| 3m 43s     function
| 3m 43s     functional_parent
| 3m 43s     g_cons
| 3m 43s     g_cons_utf8
| 3m 43s     g_lex
| 3m 43s     g_lex_utf8
| 3m 43s     g_nme
| 3m 43s     g_nme_utf8
| 3m 43s     g_pfm
| 3m 43s     g_pfm_utf8
| 3m 43s     g_prs
| 3m 43s     g_prs_utf8
| 3m 43s     g_uvf
| 3m 43s     g_uvf_utf8
| 3m 43s     g_vbe
| 3m 43s     g_vbe_utf8
| 3m 43s     g_vbs
| 3m 43s     g_vbs_utf8
| 3m 43s     g_voc_lex
| 3m 43s     g_voc_lex_utf8
| 3m 43s     g_word
| 3m 43s     g_word_utf8
| 3m 43s     gn
| 3m 43s     is_root
| 3m 43s     kind
| 3m 43s     kq_hybrid
| 3m 43s     kq_hybrid_utf8
| 3m 43s     label
| 3m 43s     language
| 3m 43s     lex
| 3m 43s     lex_utf8
| 3m 43s     lexeme_count
| 3m 43s     ls
| 3m 43s     mother
| 3m 43s     mother_object_type
| 3m 43s     nme
| 3m 43s     nu
| 3m 43s     number
| 3m 43s     oslots
| 3m 43s     otext
| 3m 43s     otype
| 3m 43s     pdp
| 3m 43s     pfm
| 3m 43s     prs
| 3m 43s     prs_gn
| 3m 43s     prs_nu
| 3m 43s     prs_ps
| 3m 43s     ps
| 3m 43s     qere
| 3m 43s     qere_utf8
| 3m 43s     rela
| 3m 43s     sp
| 3m 43s     st
| 3m 43s     suffix_gender
| 3m 43s     suffix_number
| 3m 43s     suffix_person
| 3m 43s     tab
| 3m 43s     trailer
| 3m 43s     trailer_utf8
| 3m 43s     txt
| 3m 43s     typ
| 3m 43s     uvf
| 3m 43s     vbe
| 3m 43s     vbs
| 3m 43s     verse
| 3m 43s     vs
| 3m 43s     vt
| 3m 43s no features to delete
| 3m 43s 0 features in common
| 3m 43s Done
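The comparison described above (features added, deleted, and changed, ignoring metadata) could be sketched as follows. This is not the code of `utils.checkDiffs`, which is not shown here; all function names are hypothetical. The sketch assumes that a `.tf` file starts with a header of lines beginning with `@` and skips that header before comparing.

```python
import os
import tempfile
from itertools import dropwhile


def data_lines(path):
    """Return the lines of a .tf file after its leading '@' metadata lines."""
    with open(path, encoding="utf8") as fh:
        lines = fh.read().splitlines()
    return list(dropwhile(lambda line: line.startswith("@"), lines))


def feature_names(directory):
    """Names of the .tf files in a directory; empty if it does not exist."""
    if not os.path.isdir(directory):
        return set()
    return {name[:-3] for name in os.listdir(directory) if name.endswith(".tf")}


def compare_feature_dirs(new_dir, old_dir):
    """Return (added, deleted, changed) for the features in two directories.

    changed maps a feature name to the 1-based position of the first
    differing line after the metadata header (the blank separator counts).
    """
    new, old = feature_names(new_dir), feature_names(old_dir)
    changed = {}
    for feat in sorted(new & old):
        a = data_lines(os.path.join(new_dir, feat + ".tf"))
        b = data_lines(os.path.join(old_dir, feat + ".tf"))
        if a != b:
            changed[feat] = next(
                (i for i, (x, y) in enumerate(zip(a, b), start=1) if x != y),
                min(len(a), len(b)) + 1,  # one file is a prefix of the other
            )
    return sorted(new - old), sorted(old - new), changed


def write(directory, name, text):
    os.makedirs(directory, exist_ok=True)
    with open(os.path.join(directory, name), "w", encoding="utf8") as fh:
        fh.write(text)


with tempfile.TemporaryDirectory() as tmp:
    new_dir, old_dir = os.path.join(tmp, "new"), os.path.join(tmp, "old")
    write(new_dir, "book.tf", "@node\n\nGenesis\n")
    write(new_dir, "lex.tf", "@node\n@dateWritten=2021\n\nB\nR>CJT/\n")
    write(old_dir, "lex.tf", "@node\n@dateWritten=2017\n\nB\nR>CJT\n")
    write(old_dir, "gn.tf", "@node\n\nm\n")
    added, deleted, changed = compare_feature_dirs(new_dir, old_dir)
```

When the destination directory does not exist yet, as in the run logged above, every feature is reported as added and none are in common.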

Deliver¶

Copy the new TF dataset from the temporary location where it has been created to its final destination.

utils.deliverDataset(thisTempTf, thisTf)

..............................................................................................
. 3m 46s Deliver data set to /Users/dirk/github/etcbc/bhsa/tf/2021 .
..............................................................................................
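A delivery step of this kind can be as simple as replacing the destination directory with a copy of the temporary one. The sketch below assumes exactly that; `deliver` is a hypothetical function and `utils.deliverDataset` may work differently. Removing the destination first also discards any previously compiled files in its `.tf` subdirectory, so they cannot go stale.

```python
import os
import shutil
import tempfile


def deliver(src_dir, dst_dir):
    """Replace dst_dir with a fresh copy of src_dir (assumed behaviour)."""
    if os.path.exists(dst_dir):
        shutil.rmtree(dst_dir)
    shutil.copytree(src_dir, dst_dir)  # also creates missing parent directories


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "_temp", "tf")
    dst = os.path.join(tmp, "tf", "2021")
    os.makedirs(src)
    with open(os.path.join(src, "otype.tf"), "w") as fh:
        fh.write("@node\n")
    # Simulate an older delivery that left a compiled file behind
    os.makedirs(os.path.join(dst, ".tf"))
    open(os.path.join(dst, ".tf", "otype.tfx"), "w").close()
    deliver(src, dst)
    delivered = sorted(os.listdir(dst))
```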

Compile TF¶

Just to see whether everything loads and the pre-computing of extra information works out. Moreover, if you want to work with these features, then the pre-computing has already been done, and everything is quicker in subsequent runs.

We issue a load statement to trigger the pre-computing of extra data.

Note that all features mentioned in the text formats of the otext config feature will be loaded, as well as the features for sections.

At that point we have access to the full list of features. We grab them and are going to load them all!

utils.caption(4, "Load and compile standard TF features")

TF = Fabric(locations=thisTf, modules=[""])

api = TF.load("")

utils.caption(4, "Load and compile all other TF features")

allFeatures = TF.explore(silent=False, show=True)

loadableFeatures = allFeatures["nodes"] + allFeatures["edges"]

api = TF.load(loadableFeatures)

api.makeAvailableIn(globals())

..............................................................................................

. 3m 53s Load and compile standard TF features .

..............................................................................................

This is Text-Fabric 8.5.13

Api reference : https://annotation.github.io/text-fabric/tf/cheatsheet.html

75 features found and 0 ignored

0.00s loading features ...

| 0.50s T otype from ~/github/etcbc/bhsa/tf/2021

| 6.76s T oslots from ~/github/etcbc/bhsa/tf/2021

| 0.00s Dataset without structure sections in otext:no structure functions in the T-API

| 0.84s T lex from ~/github/etcbc/bhsa/tf/2021

| 0.99s T g_word_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.90s T g_cons_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.90s T g_lex_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.68s T trailer_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.03s T chapter from ~/github/etcbc/bhsa/tf/2021

| 0.93s T g_word from ~/github/etcbc/bhsa/tf/2021

| 0.84s T g_cons from ~/github/etcbc/bhsa/tf/2021

| 0.85s T g_lex from ~/github/etcbc/bhsa/tf/2021

| 0.88s T lex_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.65s T trailer from ~/github/etcbc/bhsa/tf/2021

| 0.04s T verse from ~/github/etcbc/bhsa/tf/2021

| 0.05s T book from ~/github/etcbc/bhsa/tf/2021

| | 0.64s C __levels__ from otype, oslots, otext

| | 12s C __order__ from otype, oslots, __levels__

| | 0.63s C __rank__ from otype, __order__

| | 12s C __levUp__ from otype, oslots, __rank__

| | 8.14s C __levDown__ from otype, __levUp__, __rank__

| | 3.33s C __boundary__ from otype, oslots, __rank__

| | 0.07s C __sections__ from otype, oslots, otext, __levUp__, __levels__, book, chapter, verse

53s All features loaded/computed - for details use loadLog()

..............................................................................................

. 4m 46s Load and compile all other TF features .

..............................................................................................

| 0.00s Feature overview: 70 for nodes; 4 for edges; 1 configs; 8 computed

0.00s loading features ...

| 0.00s Dataset without structure sections in otext:no structure functions in the T-API

| 0.13s T code from ~/github/etcbc/bhsa/tf/2021

| 0.96s T det from ~/github/etcbc/bhsa/tf/2021

| 1.01s T dist from ~/github/etcbc/bhsa/tf/2021

| 1.16s T dist_unit from ~/github/etcbc/bhsa/tf/2021

| 3.50s T distributional_parent from ~/github/etcbc/bhsa/tf/2021

| 0.16s T domain from ~/github/etcbc/bhsa/tf/2021

| 0.48s T function from ~/github/etcbc/bhsa/tf/2021

| 4.83s T functional_parent from ~/github/etcbc/bhsa/tf/2021

| 0.63s T g_nme from ~/github/etcbc/bhsa/tf/2021

| 0.62s T g_nme_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.46s T g_pfm from ~/github/etcbc/bhsa/tf/2021

| 0.46s T g_pfm_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.47s T g_prs from ~/github/etcbc/bhsa/tf/2021

| 0.47s T g_prs_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.43s T g_uvf from ~/github/etcbc/bhsa/tf/2021

| 0.42s T g_uvf_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.50s T g_vbe from ~/github/etcbc/bhsa/tf/2021

| 0.45s T g_vbe_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.44s T g_vbs from ~/github/etcbc/bhsa/tf/2021

| 0.43s T g_vbs_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.84s T g_voc_lex from ~/github/etcbc/bhsa/tf/2021

| 0.91s T g_voc_lex_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.79s T gn from ~/github/etcbc/bhsa/tf/2021

| 0.17s T is_root from ~/github/etcbc/bhsa/tf/2021

| 0.16s T kind from ~/github/etcbc/bhsa/tf/2021

| 0.42s T kq_hybrid from ~/github/etcbc/bhsa/tf/2021

| 0.43s T kq_hybrid_utf8 from ~/github/etcbc/bhsa/tf/2021

| 0.13s T label from ~/github/etcbc/bhsa/tf/2021

| 0.79s T language from ~/github/etcbc/bhsa/tf/2021

| 0.58s T lexeme_count from ~/github/etcbc/bhsa/tf/2021

| 0.79s T ls from ~/github/etcbc/bhsa/tf/2021

| 0.95s T mother from ~/github/etcbc/bhsa/tf/2021

| 0.42s T mother_object_type from ~/github/etcbc/bhsa/tf/2021

| 0.71s T nme from ~/github/etcbc/bhsa/tf/2021

| 0.78s T nu from ~/github/etcbc/bhsa/tf/2021

| 1.63s T number from ~/github/etcbc/bhsa/tf/2021

| 0.83s T pdp from ~/github/etcbc/bhsa/tf/2021

| 0.80s T pfm from ~/github/etcbc/bhsa/tf/2021

| 0.82s T prs from ~/github/etcbc/bhsa/tf/2021

| 0.78s T prs_gn from ~/github/etcbc/bhsa/tf/2021

| 0.79s T prs_nu from ~/github/etcbc/bhsa/tf/2021

| 0.80s T prs_ps from ~/github/etcbc/bhsa/tf/2021

| 0.80s T ps from ~/github/etcbc/bhsa/tf/2021

| 0.42s T qere from ~/github/etcbc/bhsa/tf/2021

| 0.41s T qere_utf8 from ~/github/etcbc/bhsa/tf/2021

| 1.37s T rela from ~/github/etcbc/bhsa/tf/2021

| 0.81s T sp from ~/github/etcbc/bhsa/tf/2021

| 0.77s T st from ~/github/etcbc/bhsa/tf/2021

| 0.77s T suffix_gender from ~/github/etcbc/bhsa/tf/2021

| 0.79s T suffix_number from ~/github/etcbc/bhsa/tf/2021

| 0.80s T suffix_person from ~/github/etcbc/bhsa/tf/2021

| 0.12s T tab from ~/github/etcbc/bhsa/tf/2021

| 0.16s T txt from ~/github/etcbc/bhsa/tf/2021

| 1.33s T typ from ~/github/etcbc/bhsa/tf/2021

| 0.80s T uvf from ~/github/etcbc/bhsa/tf/2021

| 0.75s T vbe from ~/github/etcbc/bhsa/tf/2021

| 0.82s T vbs from ~/github/etcbc/bhsa/tf/2021

| 0.80s T vs from ~/github/etcbc/bhsa/tf/2021

| 0.81s T vt from ~/github/etcbc/bhsa/tf/2021

47s All features loaded/computed - for details use loadLog()

[('Computed',

'computed-data',

('C Computed', 'Call AllComputeds', 'Cs ComputedString')),

('Features', 'edge-features', ('E Edge', 'Eall AllEdges', 'Es EdgeString')),

('Fabric', 'loading', ('TF',)),

('Locality', 'locality', ('L Locality',)),

('Nodes', 'navigating-nodes', ('N Nodes',)),

('Features',

'node-features',

('F Feature', 'Fall AllFeatures', 'Fs FeatureString')),

('Search', 'search', ('S Search',)),

('Text', 'text', ('T Text',))]

Examples¶

utils.caption(4, "Basic test")

utils.caption(4, "First verse in all formats")

for fmt in T.formats:
    utils.caption(0, "{}".format(fmt), continuation=True)
    utils.caption(0, "\t{}".format(T.text(range(1, 12), fmt=fmt)), continuation=True)

..............................................................................................
. 5m 37s Basic test .
..............................................................................................
..............................................................................................
. 5m 37s First verse in all formats .
..............................................................................................
lex-orig-full
    בְּ רֵאשִׁית בָּרָא אֱלֹה אֵת הַ שָּׁמַי וְ אֵת הָ אָרֶץ
lex-orig-plain
    ב ראשׁית֜ ברא אלהים֜ את ה שׁמים֜ ו את ה ארץ֜
lex-trans-full
    B.:- R;>CIJT B.@R@> >:ELOH >;T HA- C.@MAJ W:- >;T H@- >@REY
lex-trans-plain
    B R>CJT/ BR>[ >LHJM/ >T H CMJM/ W >T H >RY/
text-orig-full
    בְּרֵאשִׁ֖ית בָּרָ֣א אֱלֹהִ֑ים אֵ֥ת הַשָּׁמַ֖יִם וְאֵ֥ת הָאָֽרֶץ׃
text-orig-plain
    בראשׁית ברא אלהים את השׁמים ואת הארץ׃
text-trans-full
    B.:-R;>CI73JT B.@R@74> >:ELOHI92JM >;71T HA-C.@MA73JIM W:->;71T H@->@75REY00
text-trans-plain
    BR>CJT BR> >LHJM >T HCMJM W>T H>RY00

if SCRIPT:
    stop(good=True)

f = "subphrase_type"

print("`" + "` `".join(sorted(str(x[0]) for x in Fs(f).freqList())) + "`")

15s Node feature "subphrase_type" not loaded

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-16-ecb467d6013a> in <module>
      1 f = 'subphrase_type'
----> 2 print('`' + '` `'.join(sorted(str(x[0]) for x in Fs(f).freqList())) + '`')

AttributeError: 'NoneType' object has no attribute 'freqList'
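The traceback shows that `Fs(f)` yields `None` for a feature that is not loaded, so the chained `.freqList()` call fails. One way to avoid the error is to test for `None` first. The helper below is a sketch with hypothetical names: `value_list` takes the lookup function as an argument, and `FakeFeature` is a stand-in that lets the example run without a loaded dataset.

```python
def value_list(feature_lookup, name):
    """Return the sorted values of a feature, or [] when it is not loaded.

    feature_lookup stands in for Fs; it is expected to return None for
    a feature that is not loaded, as the traceback indicates.
    """
    feature = feature_lookup(name)
    if feature is None:
        return []
    return sorted(str(value) for (value, _count) in feature.freqList())


class FakeFeature:
    """Stand-in for a loaded feature object; the data is made up."""

    def freqList(self):
        return (("NP", 3), ("VP", 1))


lookup = {"typ": FakeFeature()}.get  # returns None for unknown names
loaded = value_list(lookup, "typ")
not_loaded = value_list(lookup, "subphrase_type")
```

In the notebook itself, the call would be `value_list(Fs, "subphrase_type")`.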