Literature / Useful References¶

Outline¶

- Motivation

- Many Samples

- Difficult Samples

- Training / Learning

- Thresholding

- Automated Methods

- Hysteresis Method

- Feature Vectors

- K-Means Clustering

- Superpixels

- Probabalistic Models

- Working with Segmented Images

- Contouring

Previously on QBI¶

- Image acquisition and representations

- Enhancement and noise reduction

- Understanding models and interpreting histograms

- Ground Truth and ROC Curves

- Choosing a threshold

- Examining more complicated, multivariate data sets

- Improving segementation with morphological operations

- Filling holes

- Connecting objects

- Removing Noise

- Partial Volume Effect

Where segmentation fails¶

Segmentation using a single threshold is sensitive to different conditions...

%matplotlib inline

from skimage.io import imread

import matplotlib.pyplot as plt

import numpy as np

files = ['class_art_in.png','class_wgn_in.png','class_cupped_in.png','class_wgn_smooth_in.png']

titles = ['Artefacts','Noise','Gradients','Noise and unsharpness']

plt.figure(figsize=[15,9])

for i in range(4) :

img = imread('../common/data/'+files[i])

plt.subplot(3,4,i+1), plt.imshow(img), plt.title(titles[i])

plt.subplot(3,4,i+5), plt.hist(img.ravel(),bins=50)

plt.subplot(3,4,i+9), plt.imshow(120<img)

Inconsitent illumination on real data¶

cell_img = imread("../common/figures/Cell_Colony.jpg")/255.0

np.random.seed(2018)

m_cell_imgs = [cell_img+k for k in np.random.uniform(-0.25, 0.25, size = 8)]

fig, m_axs = plt.subplots(3, 3, figsize = (12, 12), dpi = 72)

ax1 = m_axs[0,0]

for i, (c_ax, c_img) in enumerate(zip(m_axs.flatten()[1:], m_cell_imgs)):

ax1.hist(c_img.ravel(), np.linspace(0, 1, 20),

label = '{}'.format(i), alpha = 0.5, histtype = 'step')

c_ax.imshow(c_img, cmap = 'bone', vmin = 0, vmax = 1)

c_ax.axis('off')

ax1.set_yscale('log', nonposy = 'clip')

ax1.set_ylabel('Pixel Count (Log)')

ax1.set_xlabel('Intensity')

ax1.legend();

Apply a treshold¶

A constant threshold of 0.6 for the different illuminations

fig, m_axs = plt.subplots(len(m_cell_imgs), 2,

figsize = (4*2, 4*len(m_cell_imgs)),

dpi = 72)

THRESH_VAL = 0.6

for i, ((ax1, ax2), c_img) in enumerate(zip(m_axs,

m_cell_imgs)):

ax1.hist(c_img.ravel()[c_img.ravel()>THRESH_VAL],

np.linspace(0, 1, 100),

label = 'Above Threshold',

alpha = 0.5)

ax1.hist(c_img.ravel()[c_img.ravel()<THRESH_VAL],

np.linspace(0, 1, 100),

label = 'Below Threshold',

alpha = 0.5)

ax1.set_yscale('log', nonposy = 'clip')

ax1.set_ylabel('Pixel Count (Log)')

ax1.set_xlabel('Intensity')

ax1.legend()

ax2.imshow(c_img<THRESH_VAL,

cmap = 'bone',

vmin = 0, vmax = 1)

ax2.set_title('Volume Fraction (%2.2f%%)' % (100*np.mean(c_img.ravel()<THRESH_VAL)))

ax2.axis('off')

Where segmentation fails: Canaliculi¶

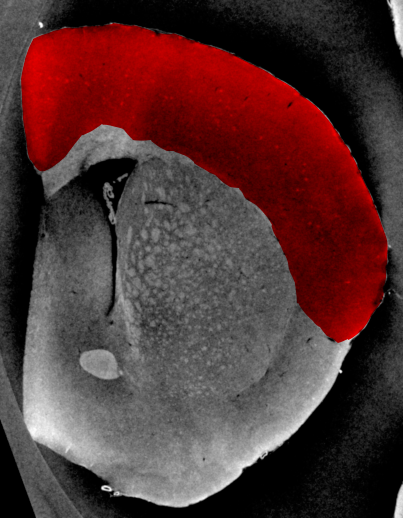

Here is a bone slice¶

- Find the larger cellular structures (osteocyte lacunae)

- Find the small channels which connect them together

The first task¶

is easy using a threshold and size criteria (we know how big the cells should be)

The second¶

is much more difficult because the small channels having radii on the same order of the pixel size are obscured by partial volume effects and noise.

Where segmentation fails: Brain Cortex¶

- The cortex is barely visible to the human eye

- Tiny structures hint at where cortex is located

- A simple threshold is insufficient to finding the cortical structures

- Other filtering techniques are unlikely to magicially fix this problem

Apply thresholds¶

%matplotlib inline

from skimage.io import imread

import matplotlib.pyplot as plt

import numpy as np

cortex_img = imread("ext-figures/cortex.png")

np.random.seed(2018)

fig, (ax1, ax2, ax3) = plt.subplots(1, 3,

figsize = (12, 4), dpi = 72)

ax1.imshow(cortex_img, cmap = 'bone')

thresh_vals = np.linspace(cortex_img.min(), cortex_img.max(), 4+2)[:-1]

out_img = np.zeros_like(cortex_img)

for i, (t_start, t_end) in enumerate(zip(thresh_vals, thresh_vals[1:])):

thresh_reg = (cortex_img>t_start) & (cortex_img<t_end)

ax2.hist(cortex_img.ravel()[thresh_reg.ravel()])

out_img[thresh_reg] = i

ax3.imshow(out_img, cmap = 'gist_earth');

Automated Threshold Selection¶

Given that applying a threshold is such a common and signficant step, there have been many tools developed to automatically (unsupervised) perform it. A particularly important step in setups where images are rarely consistent such as outdoor imaging which has varying lighting (sun, clouds). The methods are based on several basic principles.

Automated Methods¶

Histogram-based methods¶

Just like we visually inspect a histogram an algorithm can examine the histogram and find local minimums between two peaks, maximum / minimum entropy and other factors

- Otsu, Isodata, Intermodes, etc

Image based Methods¶

These look at the statistics of the thresheld image themselves (like entropy) to estimate the threshold

Results-based Methods¶

These search for a threshold which delivers the desired results in the final objects. For example if you know you have an image of cells and each cell is between 200-10000 pixels the algorithm runs thresholds until the objects are of the desired size

- More specific requirements need to be implemented manually

Histogram Methods¶

Taking a typical image of a bone slice, we can examine the variations in calcification density in the image

We can see in the histogram that there are two peaks, one at 0 (no absorption / air) and one at 0.5 (stronger absorption / bone)

%matplotlib inline

from skimage.io import imread

import matplotlib.pyplot as plt

import numpy as np

bone_img = imread("ext-figures/bonegfiltslice.png")/255.0

np.random.seed(2018)

fig, (ax1, ax2) = plt.subplots(1, 2,figsize = (12, 4), dpi = 72)

ax1.imshow(bone_img, cmap = 'bone')

ax1.axis('off')

ax2.hist(bone_img.ravel(), bins=256);

Histogram Methods¶

Intermodes¶

- Take the point between the two modes (peaks) in the histogram

Otsu¶

Search and minimize intra-class (within) variance $$\sigma^2_w(t)=\omega_{bg}(t)\sigma^2_{bg}(t)+\omega_{fg}(t)\sigma^2_{fg}(t)$$

Isodata¶

- $\textrm{thresh}= \frac{\max(img)+\min(img)}{2}$

- while the thresh is changing

- $bg = img<\textrm{thresh}, obj = img>\textrm{thresh}$

- $\textrm{thresh} = (\textrm{avg}(bg) + \textrm{avg}(obj))/2$

Try All Thresholds¶

There are many methods and they can be complicated to implement yourself.

Both Fiji and scikit-image offers many of them as built in functions so you can automatically try all of them on your image.

%matplotlib inline

from skimage.io import imread

import matplotlib.pyplot as plt

import numpy as np

bone_img = imread("ext-figures/bonegfiltslice.png")/255.0

from skimage.filters import try_all_threshold

fig, ax = try_all_threshold(bone_img, figsize=(10, 8), verbose=True);

skimage.filters.thresholding.threshold_isodata skimage.filters.thresholding.threshold_li skimage.filters.thresholding.threshold_mean skimage.filters.thresholding.threshold_minimum skimage.filters.thresholding.threshold_otsu skimage.filters.thresholding.threshold_triangle skimage.filters.thresholding.threshold_yen

Pitfalls¶

While an incredibly useful tool, there are many potential pitfalls to these automated techniques.

Histogram-based¶

These methods are very sensitive to the distribution of pixels in your image and may work really well on images with equal amounts of each phase but work horribly on images which have very high amounts of one phase compared to the others

Image-based¶

These methods are sensitive to noise and a large noise content in the image can change statistics like entropy significantly.

Results-based¶

These methods are inherently biased by the expectations you have.

- If you want to find objects between 200 and 1000 pixels you will,

- they just might not be anything meaningful.

Realistic Approaches for Dealing with these Shortcomings¶

Imaging science rarely represents the ideal world and will never be 100% perfect. At some point we need to write our master's thesis, defend, or publish a paper. These are approaches for more qualitative assessment we will later cover how to do this a bit more robustly with quantitative approaches

Model-based¶

One approach is to try and simulate everything (including noise) as well as possible and to apply these techniques to many realizations of the same image and qualitatively keep track of how many of the results accurately identify your phase or not. Hint: >95% seems to convince most biologists

Sample-based¶

Apply the methods to each sample and keep track of which threshold was used for each one. Go back and apply each threshold to each sample in the image and keep track of how many of them are correct enough to be used for further study.

Worst-case Scenario¶

Come up with the worst-case scenario (noise, misalignment, etc) and assess how inacceptable the results are. Then try to estimate the quartiles range (75% - 25% of images).

Hysteresis Thresholding¶

For some images a single threshold does not work

- large structures are very clearly defined

- smaller structures are difficult to differentiate (see partial volume effect)

%matplotlib inline

from skimage.io import imread

import matplotlib.pyplot as plt

import numpy as np

bone_img = imread("ext-figures/bonegfiltslice.png")

np.random.seed(2018)

fig, (ax1, ax2, ax3) = plt.subplots(1, 3,

figsize = (12, 4), dpi = 150)

cmap = plt.cm.nipy_spectral_r

ax1.imshow(bone_img, cmap = 'bone')

thresh_vals = [0, 70, 110, 255]

out_img = np.zeros_like(bone_img)

for i, (t_start, t_end) in enumerate(zip(thresh_vals, thresh_vals[1:])):

thresh_reg = (bone_img>t_start) & (bone_img<t_end)

ax2.hist(bone_img.ravel()[thresh_reg.ravel()],

color = cmap(i/(len(thresh_vals))))

out_img[thresh_reg] = i

ax2.set_yscale("log", nonposy='clip')

th_ax = ax3.imshow(out_img,

cmap = cmap,

vmin = 0,

vmax = len(thresh_vals))

plt.colorbar(th_ax)

<matplotlib.colorbar.Colorbar at 0x7ffe0a742650>

Goldilocks Situation¶

Here we end up with a goldilocks situation

- Mama bear and Papa Bear

- one is too low and the other is too high

fig, (ax1, ax2,ax3) = plt.subplots(1, 3, figsize = (15, 4), dpi = 100)

ax1.imshow(bone_img<thresh_vals[1], cmap = 'bone_r')

ax1.set_title('Threshold $<$ %d' % (thresh_vals[1]))

ax2.imshow(bone_img<thresh_vals[2], cmap = 'bone_r')

ax2.set_title('Threshold $<$ %d' % (thresh_vals[2]));

import scipy.stats as stats

ax3.hist(bone_img.ravel(),bins=50);

x=np.linspace(-100,255,100)

ax3.plot(x,4.5e5*stats.norm.pdf(x,128,16),label="Bone")

ax3.plot(x,5e4*stats.norm.pdf(x,20,30),label="Pores (amplified)")

ax3.plot([70,70],[0,4000],'r', label="Threshold @ 70")

ax3.plot([110,110],[0,4000],'lightgreen',label="Threshold @ 110")

ax3.legend();

Baby Bear¶

We can thus follow a process for ending up with a happy medium of the two

Hysteresis Thresholding: Reducing Pixels¶

Now we apply the following steps.

- Take the first threshold image with the highest (more strict) threshold

- Remove the objects which are not cells (too small) using an opening operation.

- Take a second threshold image with the higher value

- Combine both threshold images

- Keep the between pixels which are connected (by looking again at a neighborhood $\mathcal{N}$) to the air voxels and ignore the other ones. This goes back to our original supposition that the smaller structures are connected to the larger structures

%matplotlib inline

from skimage.morphology import dilation, opening, disk

from collections import OrderedDict

step_list = OrderedDict()

step_list['Strict Threshold'] = bone_img<thresh_vals[1]

step_list['Remove Small Objects'] = opening(step_list['Strict Threshold'], disk(1))

step_list['Looser Threshold'] = bone_img<thresh_vals[2]

step_list['Both Thresholds'] = 1.0*step_list['Looser Threshold'] + 1.0*step_list['Remove Small Objects']

# the tricky part keeping the between images

step_list['Connected Thresholds'] = step_list['Remove Small Objects']

for i in range(10):

step_list['Connected Thresholds'] = dilation(step_list['Connected Thresholds'] ,

disk(1.8)) & step_list['Looser Threshold']

fig, ax_steps = plt.subplots(1, len(step_list),

figsize = (15, 5),

dpi = 72)

for i, (c_ax, (c_title, c_img)) in enumerate(zip(ax_steps.flatten(), step_list.items()),1):

c_ax.imshow(c_img, cmap = 'bone_r' if c_img.max()<=1 else 'viridis')

c_ax.set_title('%d) %s' % (i, c_title))

c_ax.axis('off')

More Complicated Images¶

As we briefly covered last time, many measurement techniques produce quite rich data.

- Digital cameras produce 3 channels of color for each pixel (rather than just one intensity)

- MRI produces dozens of pieces of information for every voxel which are used when examining different contrasts in the system.

- Raman-shift imaging produces an entire spectrum for each pixel

- Coherent diffraction techniques produce 2- (sometimes 3) diffraction patterns for each point.

Feature Vectors¶

A pairing between spatial information (position) and some other kind of information (value). $$ \vec{x} \rightarrow \vec{f} $$

We are used to seeing images in a grid format where the position indicates the row and column in the grid and the intensity (absorption, reflection, tip deflection, etc) is shown as a different color. We take an example here of text on a page.

%matplotlib inline

from skimage.io import imread

import matplotlib.pyplot as plt

import numpy as np

from skimage.data import page

import pandas as pd

from skimage.filters import gaussian, median, threshold_triangle

page_image = page()

just_text = median(page_image, np.ones((2,2)))-255*gaussian(page_image, 20.0)

plt.figure(figsize=[15,5])

plt.subplot(1,2,1),plt.imshow(page_image, cmap = 'bone'); plt.title('Original');

plt.subplot(1,2,2),plt.imshow(just_text, cmap = 'bone'); plt.title('Just text');

Let's create a feature table¶

xx, yy = np.meshgrid(np.arange(page_image.shape[1]),

np.arange(page_image.shape[0]))

page_table = pd.DataFrame(dict(x = xx.ravel(),

y = yy.ravel(),

intensity = page_image.ravel(),

is_text = just_text.ravel()>0))

page_table.sample(5)

| x | y | intensity | is_text | |

|---|---|---|---|---|

| 38169 | 153 | 99 | 174 | True |

| 17741 | 77 | 46 | 161 | True |

| 38819 | 35 | 101 | 107 | True |

| 24130 | 322 | 62 | 234 | True |

| 15369 | 9 | 40 | 120 | True |

Inspect the features - Intensity vs. IsText¶

fig, ax1 = plt.subplots(1,1)

for c_cat, c_df in page_table.groupby(['is_text']):

ax1.hist(c_df['intensity'],

np.arange(150),

label = 'Text: {}'.format(c_cat),

alpha = 0.5)

ax1.set_yscale("log", nonposy='clip')

ax1.legend();

What does the ROC curve look like?¶

from sklearn.metrics import roc_curve, roc_auc_score

fpr, tpr, _ = roc_curve(page_table['is_text'], page_table['intensity'])

roc_auc = roc_auc_score(page_table['is_text'], page_table['intensity'])

fig, ax = plt.subplots(1,1)

ax.plot(fpr, tpr, label='ROC curve (area = {0:0.2})'.format(roc_auc))

ax.plot([0, 1], [0, 1], 'k--',label='Random guess')

ax.set_xlim([0.0, 1.0]), ax.set_ylim([0.0, 1.05])

ax.set_xlabel('False Positive Rate')

ax.set_ylabel('True Positive Rate')

ax.set_title('Receiver operating characteristic example')

ax.legend(loc="lower right");

Adding Information¶

Here we can improve the results by adding information.

As we discussed in the second lecture on enhancement, edge-enhancing filters can be very useful for classifying images.

How about: $f_{DoG}= G_{\sigma_1}*f - G_{\sigma_2}*f,\quad \sigma_1<\sigma_2$

def dog_filter(in_img, sig_1, sig_2):

return gaussian(page_image, sig_1) - gaussian(page_image, sig_2)

fig, (ax1, ax2) = plt.subplots(1,2, figsize = (15, 4))

page_edges = dog_filter(page_image, 0.5, 10)

ax1.imshow(page_image, cmap = 'bone')

ax2.imshow(page_edges, cmap = 'bone')

page_table['edges'] = page_edges.ravel()

page_table.sample(5)

| x | y | intensity | is_text | edges | |

|---|---|---|---|---|---|

| 31063 | 343 | 80 | 234 | True | 0.101055 |

| 17677 | 13 | 46 | 115 | True | 0.010855 |

| 11181 | 45 | 29 | 110 | False | -0.041728 |

| 55242 | 330 | 143 | 229 | True | 0.001557 |

| 17891 | 227 | 46 | 218 | True | 0.053318 |

fig, ax1 = plt.subplots(1,1)

for c_cat, c_df in page_table.groupby(['is_text']):

ax1.hist(c_df['edges'],

label = 'Text: {}'.format(c_cat),

alpha = 0.5, bins=100)

ax1.set_yscale("log", nonposy='clip')

ax1.legend();

from sklearn.metrics import roc_curve, roc_auc_score

fpr2, tpr2, _ = roc_curve(page_table['is_text'],

page_table['intensity']/1000.0+page_table['edges'])

roc_auc2 = roc_auc_score(page_table['is_text'],

page_table['intensity']/1000.0+page_table['edges'])

fig, ax = plt.subplots(1,1)

ax.plot(fpr, tpr, label='Intensity curve (area = %0.2f)' % roc_auc)

ax.plot(fpr2, tpr2, label='Combined curve (area = %0.2f)' % roc_auc2)

ax.plot([0, 1], [0, 1], 'k--')

ax.set_xlim([0.0, 1.0])

ax.set_ylim([0.0, 1.05])

ax.set_xlabel('False Positive Rate')

ax.set_ylabel('True Positive Rate')

ax.set_title('Receiver operating characteristic example')

ax.legend(loc="lower right");

Why does the second filter perform better?¶

fig, (ax1, ax2) = plt.subplots(1,2, figsize = (15, 5))

for c_cat, c_df in page_table.groupby(['is_text']):

ax2.hist(c_df['edges'],

label = 'Text: {}'.format(c_cat),

alpha = 0.5, bins=100)

ax2.set_yscale("log", nonposy='clip')

ax2.legend();

for c_cat, c_df in page_table.groupby(['is_text']):

ax1.hist(c_df['intensity'],

np.arange(255),

label = 'Text: {}'.format(c_cat),

alpha = 0.5)

ax1.set_yscale("log", nonposy='clip')

ax1.legend();

K-Means Clustering / Classification (Unsupervised)¶

- Automatic clustering of multidimensional data into groups based on a distance metric

- Fast and scalable to petabytes of data (Google, Facebook, Twitter, etc. use it regularly to classify customers, advertisements, queries)

- Input = feature vectors, distance metric, number of groups

- Output = a classification for each feature vector to a group

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import make_blobs

%matplotlib inline

test_pts = pd.DataFrame(make_blobs(n_samples=200, random_state=2018)[

0], columns=['x', 'y'])

plt.plot(test_pts.x, test_pts.y, 'r.')

test_pts.sample(5)

| x | y | |

|---|---|---|

| 190 | 7.504196 | -7.803367 |

| 96 | 8.428999 | -7.504711 |

| 135 | 8.877641 | -6.836999 |

| 73 | 5.160910 | -6.364902 |

| 81 | 10.318225 | -3.544903 |

from sklearn.cluster import KMeans

km = KMeans(n_clusters=2, random_state=2018)

n_grp = km.fit_predict(test_pts)

plt.scatter(test_pts.x, test_pts.y, c=n_grp)

grp_pts = test_pts.copy()

grp_pts['group'] = n_grp

grp_pts.groupby(['group']).apply(lambda x: x.sample(5))

| x | y | group | ||

|---|---|---|---|---|

| group | ||||

| 0 | 174 | -1.104400 | 1.871711 | 0 |

| 8 | -0.737558 | 2.935987 | 0 | |

| 101 | -0.693470 | 1.900714 | 0 | |

| 122 | -0.578495 | 3.237306 | 0 | |

| 27 | -0.678995 | 2.546154 | 0 | |

| 1 | 81 | 10.318225 | -3.544903 | 1 |

| 57 | 8.529693 | -3.438141 | 1 | |

| 74 | 6.436361 | -8.220122 | 1 | |

| 193 | 10.677735 | -4.628090 | 1 | |

| 96 | 8.428999 | -7.504711 | 1 |

K-Means Algorithm¶

We give as an initial parameter the number of groups we want to find and possible a criteria for removing groups that are too similar

- Randomly create center points (groups) in vector space

- Assigns group to data point by the “closest” center

- Recalculate centers from mean point in each group

- Go back to step 2 until the groups stop changing

What vector space do we have?¶

- Sometimes represent physical locations (classify swiss people into cities)

- Can include intensity or color (K-means can be used as a thresholding technique when you give it image intensity as the vector and tell it to find two or more groups)

- Can also include orientation, shape, or in extreme cases full spectra (chemically sensitive imaging)

Note: If you look for N groups you will almost always find N groups with K-Means, whether or not they make any sense¶

from sklearn.cluster import KMeans

km = KMeans(n_clusters=3, random_state=2018)

n_grp = km.fit_predict(test_pts)

plt.scatter(test_pts.x, test_pts.y, c=n_grp)

grp_pts = test_pts.copy()

grp_pts['group'] = n_grp

grp_pts.groupby(['group']).apply(lambda x: x.sample(3))

| x | y | group | ||

|---|---|---|---|---|

| group | ||||

| 0 | 26 | -1.428551 | 3.810784 | 0 |

| 129 | -2.166615 | 3.444121 | 0 | |

| 191 | -1.203880 | 2.430533 | 0 | |

| 1 | 61 | 7.834443 | -3.383261 | 1 |

| 124 | 7.623013 | -3.395907 | 1 | |

| 34 | 8.217555 | -4.503802 | 1 | |

| 2 | 146 | 9.992937 | -7.760523 | 2 |

| 102 | 8.157207 | -8.078576 | 2 | |

| 181 | 7.233435 | -8.989808 | 2 |

K-Means Applied to Cortex Image¶

In this example we use position and intensity as feature vectors.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from skimage.io import imread

%matplotlib inline

cortex_img = imread("ext-figures/cortex.png")[::3, ::3]/1000.0

np.random.seed(2018)

fig, (ax1) = plt.subplots(1, 1,

figsize=(8, 8), dpi=72)

ax1.imshow(cortex_img, cmap='bone')

xx, yy = np.meshgrid(np.arange(cortex_img.shape[1]),

np.arange(cortex_img.shape[0]))

cortex_df = pd.DataFrame(dict(x=xx.ravel(),

y=yy.ravel(),

intensity=cortex_img.ravel()))

cortex_df.sample(5)

| x | y | intensity | |

|---|---|---|---|

| 18621 | 126 | 137 | 6.015 |

| 8830 | 55 | 65 | 18.961 |

| 23217 | 132 | 171 | 57.508 |

| 7605 | 45 | 56 | 5.810 |

| 13164 | 69 | 97 | 18.338 |

First segmentation attempt¶

from sklearn.cluster import KMeans

km = KMeans(n_clusters=4, random_state=2018)

cortex_df['group'] = km.fit_predict(cortex_df[['x', 'y', 'intensity']].values)

fig, (ax1, ax2) = plt.subplots(1, 2,

figsize=(12, 8), dpi=72)

ax1.imshow(cortex_img, cmap='bone')

ax2.imshow(cortex_df['group'].values.reshape(

cortex_img.shape), cmap='gist_earth')

cortex_df.groupby(['group']).apply(lambda x: x.sample(3))

| x | y | intensity | group | ||

|---|---|---|---|---|---|

| group | |||||

| 0 | 14955 | 105 | 110 | 20.207 | 0 |

| 15903 | 108 | 117 | 31.614 | 0 | |

| 17637 | 87 | 130 | 14.963 | 0 | |

| 1 | 7213 | 58 | 53 | 13.598 | 1 |

| 3118 | 13 | 23 | 48.637 | 1 | |

| 4371 | 51 | 32 | 25.322 | 1 | |

| 2 | 897 | 87 | 6 | 5.111 | 2 |

| 10073 | 83 | 74 | 21.232 | 2 | |

| 8361 | 126 | 61 | 2.654 | 2 | |

| 3 | 15531 | 6 | 115 | 1.537 | 3 |

| 14582 | 2 | 108 | 7.722 | 3 | |

| 20040 | 60 | 148 | 30.661 | 3 |

Why is the image segmented like this?¶

Rescaling components¶

Since the distance is currently calculated by $||\vec{v}_i-\vec{v}_j||$ and the values for the position is much larger than the values for the Intensity , Sobel or Gaussian they need to be rescaled so they all fit on the same axis $$\vec{v} = \left\{\frac{x}{10}, \frac{y}{10}, \textrm{Intensity}\right\}$$

$N_{clusters}$=4

km = KMeans(n_clusters=4, random_state=2018)

scale_cortex_df = cortex_df.copy()

scale_cortex_df.x = scale_cortex_df.x/10

scale_cortex_df.y = scale_cortex_df.y/10

scale_cortex_df['group'] = km.fit_predict(

scale_cortex_df[['x', 'y', 'intensity']].values)

fig, (ax1, ax2) = plt.subplots(1, 2,

figsize=(12, 8), dpi=72)

ax1.imshow(cortex_img, cmap='bone')

ax2.imshow(scale_cortex_df['group'].values.reshape(cortex_img.shape),

cmap='gist_earth')

scale_cortex_df.groupby(['group']).apply(lambda x: x.sample(3))

| x | y | intensity | group | ||

|---|---|---|---|---|---|

| group | |||||

| 0 | 14399 | 8.9 | 10.6 | 16.835 | 0 |

| 13412 | 4.7 | 9.9 | 17.121 | 0 | |

| 17780 | 9.5 | 13.1 | 27.618 | 0 | |

| 1 | 21802 | 6.7 | 16.1 | 32.274 | 1 |

| 16891 | 1.6 | 12.5 | 33.947 | 1 | |

| 19258 | 8.8 | 14.2 | 30.419 | 1 | |

| 2 | 3903 | 12.3 | 2.8 | 5.592 | 2 |

| 4697 | 10.7 | 3.4 | 0.000 | 2 | |

| 2556 | 12.6 | 1.8 | 2.847 | 2 | |

| 3 | 21425 | 9.5 | 15.8 | 0.000 | 3 |

| 16458 | 12.3 | 12.1 | 4.903 | 3 | |

| 22883 | 6.8 | 16.9 | 0.000 | 3 |

Let's try a different position scaling¶

$$\vec{v} = \left\{\frac{x}{5}, \frac{y}{5}, \textrm{Intensity}\right\}$$$N_{clusters}$=5

km = KMeans(n_clusters=5, random_state=2019)

scale_cortex_df = cortex_df.copy()

scale_cortex_df.x = scale_cortex_df.x/5

scale_cortex_df.y = scale_cortex_df.y/5

scale_cortex_df['group'] = km.fit_predict(

scale_cortex_df[['x', 'y', 'intensity']].values)

fig, (ax1, ax2) = plt.subplots(1, 2,

figsize=(12, 8), dpi=72)

ax1.imshow(cortex_img, cmap='bone')

ax2.imshow(scale_cortex_df['group'].values.reshape(cortex_img.shape),

cmap='nipy_spectral')

scale_cortex_df.groupby(['group']).apply(lambda x: x.sample(3))

| x | y | intensity | group | ||

|---|---|---|---|---|---|

| group | |||||

| 0 | 14190 | 3.0 | 21.0 | 26.988 | 0 |

| 18521 | 5.2 | 27.4 | 25.967 | 0 | |

| 20994 | 13.8 | 31.0 | 30.576 | 0 | |

| 1 | 5129 | 26.8 | 7.4 | 6.033 | 1 |

| 12963 | 0.6 | 19.2 | 1.713 | 1 | |

| 199 | 12.8 | 0.2 | 0.622 | 1 | |

| 2 | 2055 | 6.0 | 3.0 | 38.202 | 2 |

| 5615 | 16.0 | 8.2 | 25.909 | 2 | |

| 5621 | 17.2 | 8.2 | 25.009 | 2 | |

| 3 | 11070 | 0.0 | 16.4 | 8.713 | 3 |

| 13246 | 3.2 | 19.6 | 22.331 | 3 | |

| 14405 | 19.0 | 21.2 | 21.069 | 3 | |

| 4 | 20506 | 24.2 | 30.2 | 3.644 | 4 |

| 21570 | 21.0 | 31.8 | 1.358 | 4 | |

| 22136 | 26.2 | 32.6 | 0.000 | 4 |

Quad tree example¶

Superpixels¶

An approach for simplifying images by performing a clustering and forming super-pixels from groups of similar pixels. https://ivrl.epfl.ch/research/superpixels

|

|

|

Why use superpixels¶

Super pixels

- Drastically reduced data size,

- Serves as an initial segmentation showing spatially meaningful groups

We start with an example of shale with multiple phases

- rock

- clay

- pore

Basic tresholds¶

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from skimage.io import imread

%matplotlib inline

shale_img = imread("../common/figures/shale-slice.tiff")

np.random.seed(2018)

fig, (ax1, ax2, ax3) = plt.subplots(1, 3,

figsize=(12, 4), dpi=100)

ax1.imshow(shale_img, cmap='bone')

thresh_vals = np.linspace(shale_img.min(), shale_img.max(), 5+2)[:-1]

out_img = np.zeros_like(shale_img)

for i, (t_start, t_end) in enumerate(zip(thresh_vals, thresh_vals[1:])):

thresh_reg = (shale_img > t_start) & (shale_img < t_end)

ax2.hist(shale_img.ravel()[thresh_reg.ravel()])

out_img[thresh_reg] = i

ax3.imshow(out_img, cmap='gist_earth')

<matplotlib.image.AxesImage at 0x7ffdf9a40410>

Super pixels¶

Using the SLIC algorithm

from skimage.segmentation import slic, mark_boundaries

fig, (ax1, ax2, ax3) = plt.subplots(1, 3, figsize=(12, 4), dpi=100)

shale_segs = slic(shale_img,

n_segments=100,

compactness=5e-2,

sigma=3.0)

ax1.imshow(shale_img, cmap='bone')

ax1.set_title('Original Image')

ax2.imshow(shale_segs, cmap='gist_earth')

ax2.set_title('Superpixels')

ax3.imshow(mark_boundaries(shale_img, shale_segs))

ax3.set_title('Superpixel Overlay')

Text(0.5, 1.0, 'Superpixel Overlay')

Merging super pixels¶

flat_shale_img = shale_img.copy()

for s_idx in np.unique(shale_segs.ravel()):

flat_shale_img[shale_segs == s_idx] = np.mean(

flat_shale_img[shale_segs == s_idx])

fig, (ax1,ax2,ax3) = plt.subplots(1, 3, figsize=(15, 5))

ax1.imshow(mark_boundaries(shale_img, shale_segs))

ax1.set_title('Superpixel Overlay')

ax2.imshow(flat_shale_img, cmap='bone'); ax2.set_title('Merged super pixels')

ax3.imshow(flat_shale_img, cmap='Paired'); ax3.set_title('Merged super pixels (alternative color)');

Segmentation using super pixels¶

fig, (ax1, ax2, ax3) = plt.subplots(1, 3,

figsize=(12, 4), dpi=100)

ax1.imshow(shale_img, cmap='viridis')

thresh_vals = np.linspace(flat_shale_img.min(), flat_shale_img.max(), 5+2)[:-1]

sp_out_img = np.zeros_like(flat_shale_img)

for i, (t_start, t_end) in enumerate(zip(thresh_vals, thresh_vals[1:])):

thresh_reg = (flat_shale_img > t_start) & (flat_shale_img < t_end)

sp_out_img[thresh_reg] = i

ax2.imshow(out_img, cmap='tab10')

ax2 .set_title('Pixel Segmentation')

ax3.imshow(sp_out_img, cmap='tab10')

ax3.set_title('Superpixel Segmentation');

Probabilistic Models of Segmentation¶

A more general approach is to use a probabilistic model to segmentation. We start with our image $I(\vec{x}) \forall \vec{x}\in \mathbb{R}^N$ and we classify it into two phases $\alpha$ and $\beta$

$$P(\{\vec{x} , I(\vec{x})\} | \alpha) \propto P(\alpha) + P(I(\vec{x}) | \alpha)+ P(\sum_{x^{\prime} \in \mathcal{N}} I(\vec{x^{\prime}}) | \alpha)$$- $P(\{\vec{x} , f(\vec{x})\} | \alpha)$ the probability a given pixel is in phase $\alpha$ given we know it's position and value (what we are trying to estimate)

- $P(\alpha)$ probability of any pixel in an image being part of the phase (expected volume fraction of that phase)

- $P(I(\vec{x}) | \alpha)$ probability adjustment based on knowing the value of $I$ at the given point (standard threshold)

- $P(f(\vec{x^{\prime}}) | \alpha)$ are the collective probability adjustments based on knowing the value of a pixels neighbors (very simple version of Markov Random Field approaches)