In [ ]:

# ! apt-get update

# ! apt-get install g++ openjdk-8-jdk

# ! pip3 install nltk konlpy matplotlib gensim

# ! apt-get install fonts-nanum-eco

# ! apt-get install fontconfig

# ! fc-cache -fv

# ! cp /usr/share/fonts/truetype/nanum/Nanum* /usr/local/lib/python3.6/dist-packages/matplotlib/mpl-data/fonts/ttf/

# ! rm -rf /content/.cache/matplotlib/*
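The `cp` command above hard-codes a Python 3.6 `dist-packages` path, and that path changes with the Python version. As an alternative, you can ask matplotlib for its own bundled-font directory; `matplotlib.get_data_path()` returns the `mpl-data` folder. This is a minimal sketch, assuming a standard matplotlib install. You could copy the Nanum `.ttf` files there, or register them at runtime with `matplotlib.font_manager.fontManager.addfont` (matplotlib 3.2+).

```python
import os
import matplotlib

# Directory that holds matplotlib's bundled TrueType fonts for this installation
ttf_dir = os.path.join(matplotlib.get_data_path(), 'fonts', 'ttf')
print(ttf_dir)
```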

In [ ]:

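import pandas as pd

# Load only the needed columns; low_memory=False parses the file in one pass and avoids mixed-dtype warnings
movies = pd.read_csv('../data/movies_metadata.csv', usecols=['original_title', 'overview', 'title'], low_memory=False)

# Drop rows with any missing value, then renumber rows 0..n-1 so that
# row positions in the TF-IDF and similarity matrices match the index labels
movies = movies.dropna(axis=0).reset_index(drop=True)

print(movies.shape)

movie_plot_li = movies['overview']  # plot summaries (model input)

movie_info_li = movies['title']  # titles, used when printing recommendations

movies.head(3)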
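After `dropna`, pandas keeps the original row labels. That means `series[i]` looks up the label `i`, not the i-th row. The similarity matrix built later is indexed by position, so labels and positions need to agree. Calling `reset_index(drop=True)` or using `.iloc` keeps them aligned. The sketch below uses a made-up three-row frame:

```python
import pandas as pd

# Hypothetical toy data: the middle row has a missing overview
df = pd.DataFrame({'title': ['A', 'B', 'C'],
                   'overview': ['plot a', None, 'plot c']})

dropped = df.dropna(axis=0)
print(dropped.index.tolist())    # labels survive dropna: [0, 2]
print(dropped['title'].iloc[1])  # second remaining row by position: C
# dropped['title'][1] would raise KeyError, because label 1 was dropped

renumbered = df.dropna(axis=0).reset_index(drop=True)
print(renumbered.index.tolist())  # [0, 1]
print(renumbered['title'][1])     # label 1 is now also position 1: C
```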

2 Text preprocessing and model building

In [ ]:

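import nltk
from nltk.stem import WordNetLemmatizer
from nltk.tokenize import RegexpTokenizer

nltk.download('wordnet')  # WordNet data needed by WordNetLemmatizer
nltk.download('omw-1.4')  # also required by some NLTK versions

class LemmaTokenizer(object):
    def __init__(self):
        self.wnl = WordNetLemmatizer()
        # Keep runs of ASCII letters only ([A-z] would also match [ \ ] ^ _ `)
        self.tokenizer = RegexpTokenizer(r'[A-Za-z]+')

    def __call__(self, doc):  # runs on every call, i.e. once per document when TfidfVectorizer tokenizes
        return [self.wnl.lemmatize(t) for t in self.tokenizer.tokenize(doc)]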
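A character class written as `[A-z]` is a common pitfall. In ASCII the range from `A` (65) to `z` (122) also covers the six punctuation characters between `Z` and `a`: `[ \ ] ^ _ `` ` ``. The check below uses Python's `re` module on a made-up string:

```python
import re

text = "snake_case [draft] and^more"

# [A-z] spans ASCII 65..122, so brackets, caret and underscore leak into tokens
loose = re.findall(r'[A-z]+', text)
# [A-Za-z] matches letters only
strict = re.findall(r'[A-Za-z]+', text)

print(loose)   # ['snake_case', '[draft]', 'and^more']
print(strict)  # ['snake', 'case', 'draft', 'and', 'more']
```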

In [ ]:

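# Pass the tokenizer defined above to scikit-learn's TfidfVectorizer.
from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(min_df=3,                    # ignore terms found in fewer than 3 documents
                             tokenizer=LemmaTokenizer(),  # custom lemmatizing tokenizer
                             token_pattern=None,          # unused when a tokenizer is given; None silences the warning
                             stop_words='english')        # drop scikit-learn's built-in English stop words

X = vectorizer.fit_transform(movie_plot_li[:10000])  # only the first 10,000 plots, to avoid memory errors

vocabulary = vectorizer.get_feature_names_out()  # use get_feature_names() on scikit-learn < 1.0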
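With its defaults (`smooth_idf=True`, `norm='l2'`, raw counts as term frequency), `TfidfVectorizer` weights term t in document d as tf(t, d) · idf(t). Here idf(t) = ln((1 + n) / (1 + df(t))) + 1, and n is the number of documents. Each row is then scaled to unit length. The dependency-free sketch below reproduces that computation on an already-tokenized toy corpus. It ignores `min_df` and stop words, and both the corpus and the `tfidf` helper are hypothetical.

```python
import math
from collections import Counter

def tfidf(docs):
    """docs: list of token lists. Returns (sorted vocabulary, L2-normalized TF-IDF rows)."""
    n = len(docs)
    vocab = sorted({t for doc in docs for t in doc})
    # Document frequency: number of documents containing each term
    df = Counter(t for doc in docs for t in set(doc))
    # Smoothed idf, matching smooth_idf=True
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1 for t in vocab}
    rows = []
    for doc in docs:
        tf = Counter(doc)  # raw term counts
        row = [tf[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(v * v for v in row))
        rows.append([v / norm for v in row] if norm else row)
    return vocab, rows

docs = [['space', 'war', 'war'], ['space', 'love'], ['love', 'story']]
vocab, rows = tfidf(docs)
print(vocab)  # ['love', 'space', 'story', 'war']
```

In this output, 'war' appears in only one document and twice in it, so it outweighs 'space' in the first row.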

In [ ]:

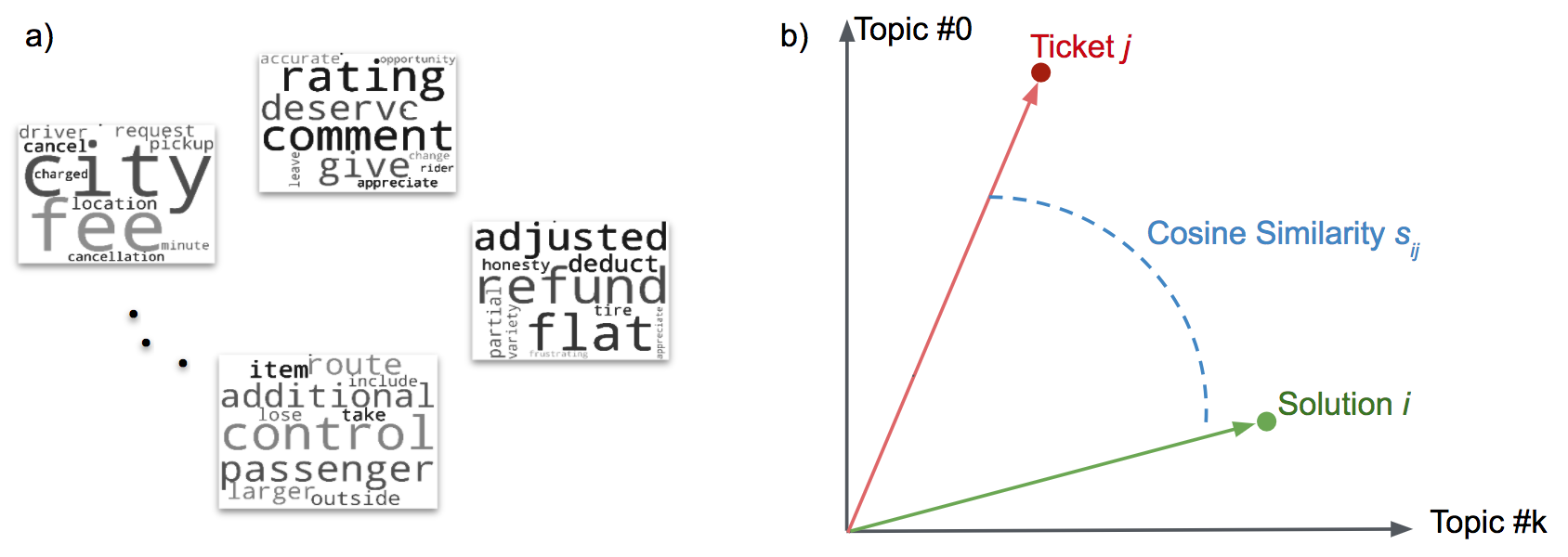

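# Build a cosine-similarity model that recommends movies with similar plots
from sklearn.metrics.pairwise import cosine_similarity

movie_sim = cosine_similarity(X)  # dense (n_docs x n_docs) matrix of pairwise similarities

print(movie_sim.shape)

movie_sim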
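Cosine similarity is the dot product of two vectors divided by the product of their lengths. Because TF-IDF rows are already L2-normalized, it reduces to a plain dot product here. The result is a dense n × n matrix, which is likely why the input was capped at 10,000 plots. At 8 bytes per float64 entry, 10,000² × 8 = 800,000,000 bytes, roughly 0.8 GB. Below is a dependency-free sketch; the vectors and both helper names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length numeric vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def dense_matrix_bytes(n, itemsize=8):
    """Memory needed for a dense n x n matrix (itemsize=8 for float64)."""
    return n * n * itemsize

u, v = [1.0, 0.0, 1.0], [1.0, 1.0, 0.0]
print(cosine(u, v))                # 1 / (sqrt(2) * sqrt(2)), i.e. 0.5 up to rounding
print(dense_matrix_bytes(10_000))  # 800000000
```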

3 Using the cosine similarity table

In [ ]:

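# Print the movies whose plots are most similar to a given movie
def similar_recommend_by_movie_id(movielens_id, rank=8):
    # movielens_id is a 1-based row number in `movies` (not a MovieLens/TMDB id);
    # it must be <= 10000 because only the first 10,000 plots were vectorized
    movie_index = movielens_id - 1
    # Pair every movie position with its similarity to the query, highest first
    similar_movies = sorted(enumerate(movie_sim[movie_index]), key=lambda x: x[1], reverse=True)
    # Drop the query movie itself instead of assuming it lands at position 0
    similar_movies = [m for m in similar_movies if m[0] != movie_index]
    print("----- Recommended movies for viewers of {} -------".format(movie_info_li.iloc[movie_index]))
    # Print the top `rank` movies, numbered from 1
    for no, (idx, _) in enumerate(similar_movies[:rank], start=1):
        print('Recommendation #{}: {}'.format(no, movie_info_li.iloc[idx]))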
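The function above sorts an entire similarity row, O(n log n) per query, even though it prints only a few results. An alternative uses the standard-library `heapq.nlargest` to select the top k directly in O(n log k) and skips the query movie itself. This is a sketch: `top_k_similar` and the toy matrix are hypothetical. A row of `movie_sim` could be passed the same way, since enumerating a NumPy row yields (position, score) pairs.

```python
import heapq

def top_k_similar(sim_matrix, index, k):
    """Return (position, score) pairs for the k rows most similar to `index`, excluding itself."""
    row = sim_matrix[index]
    candidates = ((j, s) for j, s in enumerate(row) if j != index)
    return heapq.nlargest(k, candidates, key=lambda pair: pair[1])

# Hypothetical 4x4 symmetric similarity matrix
sim = [
    [1.0, 0.2, 0.9, 0.4],
    [0.2, 1.0, 0.1, 0.3],
    [0.9, 0.1, 1.0, 0.5],
    [0.4, 0.3, 0.5, 1.0],
]
print(top_k_similar(sim, 0, 2))  # [(2, 0.9), (3, 0.4)]
```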

In [ ]:

similar_recommend_by_movie_id(1, rank=20)