在这个快速入门中,您将学习如何使用OpenAI的向量嵌入和Apache Cassandra®,或者等效地使用DataStax Astra DB通过CQL,作为数据持久化的向量存储,构建一个“哲学名言查找器和生成器”。

本笔记本的基本工作流程如下所示。您将评估并存储一些著名哲学家的名言的向量嵌入,使用它们来构建一个强大的搜索引擎,甚至可以生成新的名言!

该笔记本展示了一些向量搜索的标准使用模式,同时演示了使用Cassandra / Astra DB通过CQL的向量功能有多么容易入门。

有关使用向量搜索和文本嵌入构建问答系统的背景,请查看这个优秀的实践笔记本:使用嵌入进行问答。

选择您的框架¶

请注意,本笔记本使用Cassandra驱动程序并直接运行CQL(Cassandra查询语言)语句,但我们也涵盖了其他选择的技术来完成相同的任务。请查看此文件夹的README以了解其他选项。本笔记本可以作为Colab笔记本或常规Jupyter笔记本运行。

目录:

- 设置

- 获取数据库连接

- 连接到OpenAI

- 将名言加载到向量存储中

- 用例1:名言搜索引擎

- 用例2:名言生成器

- (可选)利用向量存储中的分区

工作原理¶

索引

每个引用都被转换为一个嵌入向量,使用OpenAI的Embedding。这些向量被保存在向量存储中,以便以后用于搜索。一些元数据,包括作者的姓名和一些其他预先计算的标签,也被存储在旁边,以允许搜索定制。

搜索

为了找到与提供的搜索引用相似的引用,后者被即时转换为一个嵌入向量,并且这个向量被用来查询存储中类似的向量...即先前索引的相似引用。搜索可以选择性地通过附加元数据进行约束(“找到与这个引用相似的斯宾诺莎的引用...”)。

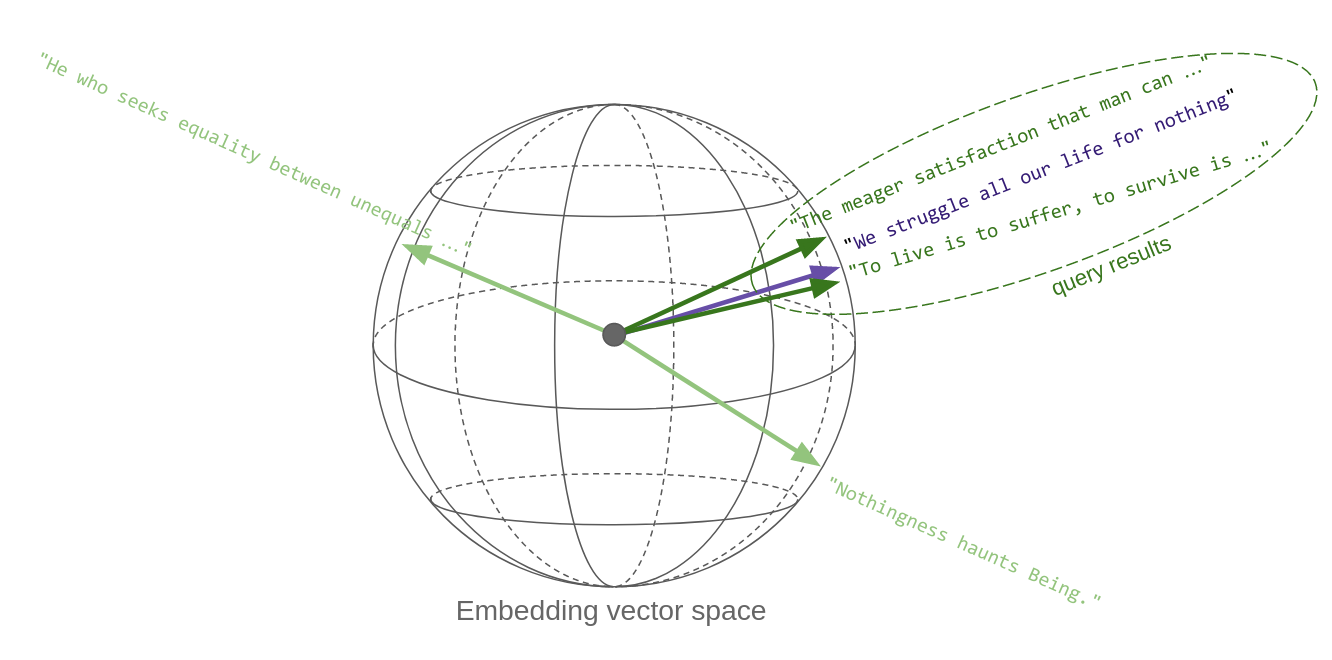

这里的关键点是,“内容相似的引用”在向量空间中转化为彼此在度量上接近的向量:因此,向量相似性搜索有效地实现了语义相似性。这就是向量嵌入如此强大的关键原因。

下面的草图试图传达这个想法。每个引用一旦被转换为一个向量,就是空间中的一个点。嗯,在这种情况下,它在一个球上,因为OpenAI的嵌入向量,像大多数其他向量一样,被归一化为_单位长度_。哦,这个球实际上不是三维的,而是1536维的!

因此,本质上,向量空间中的相似性搜索返回与查询向量最接近的向量:

生成

给定一个建议(一个主题或一个暂定的引用),执行搜索步骤,并将第一个返回的结果(引用)馈送到LLM提示中,该提示要求生成模型根据传递的示例和初始建议创造一个新的文本。

设置¶

安装并导入必要的依赖项:

!pip install --quiet "cassandra-driver>=0.28.0" "openai>=1.0.0" datasets

import os

from uuid import uuid4

from getpass import getpass

from collections import Counter

from cassandra.cluster import Cluster

from cassandra.auth import PlainTextAuthProvider

import openai

from datasets import load_dataset

不要太在意接下来的单元格,我们需要它来检测Colabs并让您上传SCB文件(见下文):

try:

from google.colab import files

IS_COLAB = True

except ModuleNotFoundError:

IS_COLAB = False

获取数据库连接¶

创建Session对象(连接到Astra DB实例)需要一些秘钥。

(注意:在Google Colab和本地Jupyter上,一些步骤会略有不同,因此笔记本将检测运行时类型。)

# Your database's Secure Connect Bundle zip file is needed:

if IS_COLAB:

print('Please upload your Secure Connect Bundle zipfile: ')

uploaded = files.upload()

if uploaded:

astraBundleFileTitle = list(uploaded.keys())[0]

ASTRA_DB_SECURE_BUNDLE_PATH = os.path.join(os.getcwd(), astraBundleFileTitle)

else:

raise ValueError(

'Cannot proceed without Secure Connect Bundle. Please re-run the cell.'

)

else:

# you are running a local-jupyter notebook:

ASTRA_DB_SECURE_BUNDLE_PATH = input("Please provide the full path to your Secure Connect Bundle zipfile: ")

ASTRA_DB_APPLICATION_TOKEN = getpass("Please provide your Database Token ('AstraCS:...' string): ")

ASTRA_DB_KEYSPACE = input("Please provide the Keyspace name for your Database: ")

Please provide the full path to your Secure Connect Bundle zipfile: /path/to/secure-connect-DatabaseName.zip

Please provide your Database Token ('AstraCS:...' string): ········

Please provide the Keyspace name for your Database: my_keyspace

# Don't mind the "Closing connection" error after "downgrading protocol..." messages you may see,

# 这其实只是一个警告:连接将会顺畅无阻。

cluster = Cluster(

cloud={

"secure_connect_bundle": ASTRA_DB_SECURE_BUNDLE_PATH,

},

auth_provider=PlainTextAuthProvider(

"token",

ASTRA_DB_APPLICATION_TOKEN,

),

)

session = cluster.connect()

keyspace = ASTRA_DB_KEYSPACE

create_table_statement = f"""CREATE TABLE IF NOT EXISTS {keyspace}.philosophers_cql (

quote_id UUID PRIMARY KEY,

body TEXT,

embedding_vector VECTOR<FLOAT, 1536>,

author TEXT,

tags SET<TEXT>

);"""

将此语句传递给您的数据库会话以执行:

session.execute(create_table_statement)

<cassandra.cluster.ResultSet at 0x7feee37b3460>

为ANN搜索添加向量索引¶

为了在表中的向量上运行ANN(近似最近邻)搜索,您需要在embedding_vector列上创建一个特定的索引。

在创建索引时,您可以选择使用的“相似度函数”来计算向量距离:对于单位长度向量(例如来自OpenAI的向量),"余弦差异"与"点积"相同,您将使用计算上更便宜的后者。

运行以下CQL语句:

create_vector_index_statement = f"""CREATE CUSTOM INDEX IF NOT EXISTS idx_embedding_vector

ON {keyspace}.philosophers_cql (embedding_vector)

USING 'org.apache.cassandra.index.sai.StorageAttachedIndex'

WITH OPTIONS = {{'similarity_function' : 'dot_product'}};

"""

# Note: the double '{{' and '}}' are just the F-string escape sequence for '{' and '}'

session.execute(create_vector_index_statement)

<cassandra.cluster.ResultSet at 0x7feeefd3da00>

为作者和标签过滤添加索引¶

这已足够在表上运行向量搜索了...但您可能希望能够选择性地指定作者和/或一些标签来限制引用搜索。创建另外两个索引来支持这一点:

create_author_index_statement = f"""创建自定义索引(如果不存在)名为 idx_author

在 {keyspace}.philosophers_cql 表上基于 author 列

使用 'org.apache.cassandra.index.sai.StorageAttachedIndex' 索引方式。

"""

session.execute(create_author_index_statement)

create_tags_index_statement = f"""创建自定义索引(如果不存在)名为 idx_tags

在 {keyspace}.philosophers_cql 表上(基于 tags 列的值)

使用 'org.apache.cassandra.index.sai.StorageAttachedIndex' 方法;

"""

session.execute(create_tags_index_statement)

<cassandra.cluster.ResultSet at 0x7fef2c64af70>

连接到OpenAI¶

设置您的秘钥¶

OPENAI_API_KEY = getpass("Please enter your OpenAI API Key: ")

Please enter your OpenAI API Key: ········

获取嵌入向量的测试调用¶

快速检查如何为一组输入文本获取嵌入向量:

client = openai.OpenAI(api_key=OPENAI_API_KEY)

embedding_model_name = "text-embedding-3-small"

result = client.embeddings.create(

input=[

"This is a sentence",

"A second sentence"

],

model=embedding_model_name,

)

注意:以上是针对OpenAI v1.0+的语法。如果使用之前的版本,获取嵌入向量的代码会有所不同。

print(f"len(result.data) = {len(result.data)}")

print(f"result.data[1].embedding = {str(result.data[1].embedding)[:55]}...")

print(f"len(result.data[1].embedding) = {len(result.data[1].embedding)}")

len(result.data) = 2 result.data[1].embedding = [-0.0108176339417696, 0.0013546717818826437, 0.00362232... len(result.data[1].embedding) = 1536

将报价加载到向量存储中¶

获取一个带有引语的数据集。(我们从Kaggle数据集中调整和增补了数据,以便在此演示中使用。)

philo_dataset = load_dataset("datastax/philosopher-quotes")["train"]

快速检查:

print("An example entry:")

print(philo_dataset[16])

An example entry:

{'author': 'aristotle', 'quote': 'Love well, be loved and do something of value.', 'tags': 'love;ethics'}

检查数据集大小:

author_count = Counter(entry["author"] for entry in philo_dataset)

print(f"Total: {len(philo_dataset)} quotes. By author:")

for author, count in author_count.most_common():

print(f" {author:<20}: {count} quotes")

Total: 450 quotes. By author:

aristotle : 50 quotes

schopenhauer : 50 quotes

spinoza : 50 quotes

hegel : 50 quotes

freud : 50 quotes

nietzsche : 50 quotes

sartre : 50 quotes

plato : 50 quotes

kant : 50 quotes

将引用插入向量存储库¶

您将计算引用的嵌入,并将其保存到向量存储库中,同时保存文本本身和以后使用的元数据。

为了优化速度并减少调用次数,您将对嵌入OpenAI服务进行批处理调用。

DB写入是通过CQL语句完成的。但由于您将多次运行此特定插入操作(尽管使用不同的值),最好是_准备_语句,然后反复运行它。

(注意:为了更快的插入,Cassandra驱动程序可以让您执行并发插入,但我们在这里没有这样做,以便更简单地演示代码。)

prepared_insertion = session.prepare(

f"INSERT INTO {keyspace}.philosophers_cql (quote_id, author, body, embedding_vector, tags) VALUES (?, ?, ?, ?, ?);"

)

BATCH_SIZE = 20

num_batches = ((len(philo_dataset) + BATCH_SIZE - 1) // BATCH_SIZE)

quotes_list = philo_dataset["quote"]

authors_list = philo_dataset["author"]

tags_list = philo_dataset["tags"]

print("Starting to store entries:")

for batch_i in range(num_batches):

b_start = batch_i * BATCH_SIZE

b_end = (batch_i + 1) * BATCH_SIZE

# 计算这一批数据的嵌入向量

b_emb_results = client.embeddings.create(

input=quotes_list[b_start : b_end],

model=embedding_model_name,

)

# 准备插入的行

print("B ", end="")

for entry_idx, emb_result in zip(range(b_start, b_end), b_emb_results.data):

if tags_list[entry_idx]:

tags = {

tag

for tag in tags_list[entry_idx].split(";")

}

else:

tags = set()

author = authors_list[entry_idx]

quote = quotes_list[entry_idx]

quote_id = uuid4() # 为每条引用生成一个新的随机ID。在实际应用中,您需要更精细的控制……

session.execute(

prepared_insertion,

(quote_id, author, quote, emb_result.embedding, tags),

)

print("*", end="")

print(f" done ({len(b_emb_results.data)})")

print("\nFinished storing entries.")

Starting to store entries: B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ******************** done (20) B ********** done (10) Finished storing entries.

用例1:报价搜索引擎¶

对于引用搜索功能,您首先需要将输入的引用转换为向量,然后使用它来查询存储库(除了处理可选的元数据到搜索调用中)。

将搜索引擎功能封装到一个函数中,以便于重复使用:

def find_quote_and_author(query_quote, n, author=None, tags=None):

query_vector = client.embeddings.create(

input=[query_quote],

model=embedding_model_name,

).data[0].embedding

# 根据传递的条件不同,语句中的 WHERE 子句可能会有所变化。

where_clauses = []

where_values = []

if author:

where_clauses += ["author = %s"]

where_values += [author]

if tags:

for tag in tags:

where_clauses += ["tags CONTAINS %s"]

where_values += [tag]

# 上述两个列表存在的原因是,在执行CQL搜索语句时,传递的值

# must match the sequence of "?" marks in the statement.

if where_clauses:

search_statement = f"""SELECT body, author FROM {keyspace}.philosophers_cql

WHERE {' AND '.join(where_clauses)}

ORDER BY embedding_vector ANN OF %s

LIMIT %s;

"""

else:

search_statement = f"""SELECT body, author FROM {keyspace}.philosophers_cql

ORDER BY embedding_vector ANN OF %s

LIMIT %s;

"""

# 为了获得最佳性能,应保持一个已准备语句的缓存(参见上面的插入代码示例)。

# 对于此处使用的各种可能的陈述。

# (我们留给读者作为练习,这样可以避免代码过长。

# 记住:准备语句时,使用 '?' 代替 '%s'。)

query_values = tuple(where_values + [query_vector] + [n])

result_rows = session.execute(search_statement, query_values)

return [

(result_row.body, result_row.author)

for result_row in result_rows

]

进行搜索测试¶

传递一个引用:

find_quote_and_author("We struggle all our life for nothing", 3)

[('Life to the great majority is only a constant struggle for mere existence, with the certainty of losing it at last.',

'schopenhauer'),

('We give up leisure in order that we may have leisure, just as we go to war in order that we may have peace.',

'aristotle'),

('Perhaps the gods are kind to us, by making life more disagreeable as we grow older. In the end death seems less intolerable than the manifold burdens we carry',

'freud')]

搜索限定于作者:

find_quote_and_author("We struggle all our life for nothing", 2, author="nietzsche")

[('To live is to suffer, to survive is to find some meaning in the suffering.',

'nietzsche'),

('What makes us heroic?--Confronting simultaneously our supreme suffering and our supreme hope.',

'nietzsche')]

搜索限定为标签(从先前用引号保存的标签中选择):

find_quote_and_author("We struggle all our life for nothing", 2, tags=["politics"])

[('Mankind will never see an end of trouble until lovers of wisdom come to hold political power, or the holders of power become lovers of wisdom',

'plato'),

('Everything the State says is a lie, and everything it has it has stolen.',

'nietzsche')]

剔除不相关的结果¶

向量相似性搜索通常会返回与查询最接近的向量,即使这意味着如果没有更好的结果,可能会返回一些不太相关的结果。

为了控制这个问题,您可以获取查询与每个结果之间的实际“相似度”,然后设置一个阈值,有效地丢弃超出该阈值的结果。正确调整此阈值并不是一件容易的问题:在这里,我们只是向您展示一种方法。

为了感受一下这是如何工作的,请尝试以下查询,并尝试选择引号和阈值以比较结果:

注(对于数学倾向者):此值是两个向量之间余弦差异的重新缩放值,介于零和一之间,即标量积除以两个向量的范数的乘积。换句话说,对于面对相反的向量,此值为0,对于平行向量,此值为+1。对于其他相似度度量,请查看文档 — 并牢记SELECT查询中使用的度量应与之前创建索引时使用的度量相匹配,以获得有意义的有序结果。

quote = "Animals are our equals."

# 引文 = "Be good."

# 引文 = "This teapot is strange."

similarity_threshold = 0.92

quote_vector = client.embeddings.create(

input=[quote],

model=embedding_model_name,

).data[0].embedding

# 再次强调:为了获得更佳的性能,请记得在生产环境中准备好你的声明……

search_statement = f"""SELECT body, similarity_dot_product(embedding_vector, %s) as similarity

FROM {keyspace}.philosophers_cql

ORDER BY embedding_vector ANN OF %s

LIMIT %s;

"""

query_values = (quote_vector, quote_vector, 8)

result_rows = session.execute(search_statement, query_values)

results = [

(result_row.body, result_row.similarity)

for result_row in result_rows

if result_row.similarity >= similarity_threshold

]

print(f"{len(results)} quotes within the threshold:")

for idx, (r_body, r_similarity) in enumerate(results):

print(f" {idx}. [similarity={r_similarity:.3f}] \"{r_body[:70]}...\"")

3 quotes within the threshold:

0. [similarity=0.927] "The assumption that animals are without rights, and the illusion that ..."

1. [similarity=0.922] "Animals are in possession of themselves; their soul is in possession o..."

2. [similarity=0.920] "At his best, man is the noblest of all animals; separated from law and..."

使用案例2:报价生成器¶

对于这个任务,您需要从OpenAI获取另一个组件,即一个LLM来为我们生成报价(基于通过查询向量存储获取的输入)。

您还需要一个用于提示模板,该模板将用于填充生成报价LLM完成任务。

completion_model_name = "gpt-3.5-turbo"

generation_prompt_template = """"Generate a single short philosophical quote on the given topic,

similar in spirit and form to the provided actual example quotes.

Do not exceed 20-30 words in your quote.

REFERENCE TOPIC: "{topic}"

ACTUAL EXAMPLES:

{examples}

"""

与搜索功能类似,这个功能最好封装到一个方便的函数中(内部使用搜索功能):

def generate_quote(topic, n=2, author=None, tags=None):

quotes = find_quote_and_author(query_quote=topic, n=n, author=author, tags=tags)

if quotes:

prompt = generation_prompt_template.format(

topic=topic,

examples="\n".join(f" - {quote[0]}" for quote in quotes),

)

# 少量日志记录:

print("** quotes found:")

for q, a in quotes:

print(f"** - {q} ({a})")

print("** end of logging")

#

response = client.chat.completions.create(

model=completion_model_name,

messages=[{"role": "user", "content": prompt}],

temperature=0.7,

max_tokens=320,

)

return response.choices[0].message.content.replace('"', '').strip()

else:

print("** no quotes found.")

return None

注意:与嵌入计算的情况类似,在OpenAI v1.0之前,Chat Completion API的代码会略有不同。

测试引文生成¶

只是传递一段文本(一个“引用”,但实际上可以只是建议一个主题,因为它的向量嵌入最终仍会出现在向量空间中的正确位置):

q_topic = generate_quote("politics and virtue")

print("\nA new generated quote:")

print(q_topic)

** quotes found: ** - Happiness is the reward of virtue. (aristotle) ** - Our moral virtues benefit mainly other people; intellectual virtues, on the other hand, benefit primarily ourselves; therefore the former make us universally popular, the latter unpopular. (schopenhauer) ** end of logging A new generated quote: True politics is not the pursuit of power, but the cultivation of virtue for the betterment of all.

从一个哲学家的灵感中获得启发:

q_topic = generate_quote("animals", author="schopenhauer")

print("\nA new generated quote:")

print(q_topic)

** quotes found: ** - Because Christian morality leaves animals out of account, they are at once outlawed in philosophical morals; they are mere 'things,' mere means to any ends whatsoever. They can therefore be used for vivisection, hunting, coursing, bullfights, and horse racing, and can be whipped to death as they struggle along with heavy carts of stone. Shame on such a morality that is worthy of pariahs, and that fails to recognize the eternal essence that exists in every living thing, and shines forth with inscrutable significance from all eyes that see the sun! (schopenhauer) ** - The assumption that animals are without rights, and the illusion that our treatment of them has no moral significance, is a positively outrageous example of Western crudity and barbarity. Universal compassion is the only guarantee of morality. (schopenhauer) ** end of logging A new generated quote: Do not judge the worth of a soul by its outward form, for within every animal lies an eternal essence that deserves our compassion and respect.

(可选)分区¶

在完成这个快速入门之前,有一个有趣的主题需要研究。一般来说,标签和引用可以有任何关系(例如,引用可以有多个标签),但是_作者_实际上是一个精确的分组(它们在引用集合上定义了一个“不相交的分区”):每个引用都有一个作者(至少对我们来说是这样)。

现在,假设您事先知道您的应用程序通常(或总是)会对_单个作者_运行查询。那么,您可以充分利用底层数据库结构:如果您将引用分组在分区中(每个作者一个分区),仅对一个作者进行向量查询将使用更少的资源并且返回速度更快。

我们不会在这里深入讨论细节,这些细节涉及Cassandra存储内部:重要的信息是如果您的查询在一个组内运行,请考虑相应地进行分区以提高性能。

现在,您将看到这个选择的实际效果。

为每位作者创建分区需要一个新的表模式:创建一个名为"philosophers_cql_partitioned"的新表,以及必要的索引:

create_table_p_statement = f"""CREATE TABLE IF NOT EXISTS {keyspace}.philosophers_cql_partitioned (

author TEXT,

quote_id UUID,

body TEXT,

embedding_vector VECTOR<FLOAT, 1536>,

tags SET<TEXT>,

PRIMARY KEY ( (author), quote_id )

) WITH CLUSTERING ORDER BY (quote_id ASC);"""

session.execute(create_table_p_statement)

create_vector_index_p_statement = f"""CREATE CUSTOM INDEX IF NOT EXISTS idx_embedding_vector_p

ON {keyspace}.philosophers_cql_partitioned (embedding_vector)

USING 'org.apache.cassandra.index.sai.StorageAttachedIndex'

WITH OPTIONS = {{'similarity_function' : 'dot_product'}};

"""

session.execute(create_vector_index_p_statement)

create_tags_index_p_statement = f"""如果索引 `idx_tags_p` 不存在,则在键空间 `{keyspace}` 的表 `philosophers_cql_partitioned` 上创建自定义索引 `idx_tags_p`,该索引基于 `tags` 列的值,并使用 `org.apache.cassandra.index.sai.StorageAttachedIndex` 作为索引实现。

"""

session.execute(create_tags_index_p_statement)

<cassandra.cluster.ResultSet at 0x7fef149d7940>

现在在新表上重复执行计算嵌入并插入的步骤。

您可以使用与之前相同的插入代码,因为差异是隐藏在“幕后”的:数据库将根据这个新表的分区方案以不同的方式存储插入的行。

然而,为了演示,您将利用Cassandra驱动程序提供的一个方便的功能,可以轻松地并发运行多个查询(在本例中是INSERT)。这是Cassandra / Astra DB通过CQL非常好支持的功能,可以显著加快速度,而客户端代码几乎没有什么变化。

(注:此外,可以额外地缓存先前计算的嵌入以节省一些API令牌 -- 但在这里,我们希望保持代码更易于检查。)

from cassandra.concurrent import execute_concurrent_with_args

prepared_insertion = session.prepare(

f"INSERT INTO {keyspace}.philosophers_cql_partitioned (quote_id, author, body, embedding_vector, tags) VALUES (?, ?, ?, ?, ?);"

)

BATCH_SIZE = 50

num_batches = ((len(philo_dataset) + BATCH_SIZE - 1) // BATCH_SIZE)

quotes_list = philo_dataset["quote"]

authors_list = philo_dataset["author"]

tags_list = philo_dataset["tags"]

print("Starting to store entries:")

for batch_i in range(num_batches):

print("[...", end="")

b_start = batch_i * BATCH_SIZE

b_end = (batch_i + 1) * BATCH_SIZE

# 计算这一批数据的嵌入向量

b_emb_results = client.embeddings.create(

input=quotes_list[b_start : b_end],

model=embedding_model_name,

)

# prepare this batch's entries for insertion

tuples_to_insert = []

for entry_idx, emb_result in zip(range(b_start, b_end), b_emb_results.data):

if tags_list[entry_idx]:

tags = {

tag

for tag in tags_list[entry_idx].split(";")

}

else:

tags = set()

author = authors_list[entry_idx]

quote = quotes_list[entry_idx]

quote_id = uuid4() # a new random ID for each quote. In a production app you'll want to have better control...

# append a *tuple* to the list, and in the tuple the values are ordered to match "?" in the prepared statement:

tuples_to_insert.append((quote_id, author, quote, emb_result.embedding, tags))

# 通过驱动程序的并发原语一次性插入批次

conc_results = execute_concurrent_with_args(

session,

prepared_insertion,

tuples_to_insert,

)

# 检查所有插入操作是否成功(最好始终进行此项检查):

if any([not success for success, _ in conc_results]):

print("Something failed during the insertions!")

else:

print(f"{len(b_emb_results.data)}] ", end="")

print("\nFinished storing entries.")

Starting to store entries: [...50] [...50] [...50] [...50] [...50] [...50] [...50] [...50] [...50] Finished storing entries.

尽管表结构不同,但在相似性搜索背后的数据库查询本质上是相同的:

def find_quote_and_author_p(query_quote, n, author=None, tags=None):

query_vector = client.embeddings.create(

input=[query_quote],

model=embedding_model_name,

).data[0].embedding

# 根据传递的条件不同,语句中的 WHERE 子句可能会有所变化。

# 相应地构建它:

where_clauses = []

where_values = []

if author:

where_clauses += ["author = %s"]

where_values += [author]

if tags:

for tag in tags:

where_clauses += ["tags CONTAINS %s"]

where_values += [tag]

if where_clauses:

search_statement = f"""从 {keyspace}.philosophers_cql_partitioned 中选择 body 和 author

其中 {' AND '.join(where_clauses)}

按 %s 的 embedding_vector 进行 ANN 排序

限制为 %s;

"""

else:

search_statement = f"""从 {keyspace}.philosophers_cql_partitioned 中选择 body 和 author,

按照 embedding_vector 对 %s 进行近似最近邻排序,

限制结果数量为 %s;

"""

query_values = tuple(where_values + [query_vector] + [n])

result_rows = session.execute(search_statement, query_values)

return [

(result_row.body, result_row.author)

for result_row in result_rows

]

就是这样:新表仍然支持“通用”相似性搜索没错...

find_quote_and_author_p("We struggle all our life for nothing", 3)

[('Life to the great majority is only a constant struggle for mere existence, with the certainty of losing it at last.',

'schopenhauer'),

('We give up leisure in order that we may have leisure, just as we go to war in order that we may have peace.',

'aristotle'),

('Perhaps the gods are kind to us, by making life more disagreeable as we grow older. In the end death seems less intolerable than the manifold burdens we carry',

'freud')]

...但是当指定作者时,您会注意到一个巨大的性能优势:

find_quote_and_author_p("We struggle all our life for nothing", 2, author="nietzsche")

[('To live is to suffer, to survive is to find some meaning in the suffering.',

'nietzsche'),

('What makes us heroic?--Confronting simultaneously our supreme suffering and our supreme hope.',

'nietzsche')]

嗯,如果你有一个真实大小的数据集,你会注意到性能的提升。在这个演示中,只有几十个条目,并没有明显的差异,但你可以理解其中的道理。

结论¶

恭喜!您已经学会了如何使用OpenAI进行向量嵌入和使用Astra DB / Cassandra进行存储,以构建一个复杂的哲学搜索引擎和引用生成器。

这个示例使用了Cassandra drivers,并直接运行CQL(Cassandra Query Language)语句来与向量存储进行交互 - 但这并不是唯一的选择。查看README以了解其他选项和与流行框架的集成。

要了解更多关于Astra DB的向量搜索功能如何成为您的ML/GenAI应用程序中的关键要素,请访问Astra DB关于这个主题的网页。

清理¶

如果您想要删除此演示中使用的所有资源,请运行此单元格(_警告:这将删除表和其中插入的数据!_):

session.execute(f"DROP TABLE IF EXISTS {keyspace}.philosophers_cql;")

session.execute(f"DROP TABLE IF EXISTS {keyspace}.philosophers_cql_partitioned;")

<cassandra.cluster.ResultSet at 0x7fef149096a0>