# Reveal.js

from notebook.services.config import ConfigManager

cm = ConfigManager()

cm.update('livereveal', {

'theme': 'white',

'transition': 'none',

'controls': 'false',

'progress': 'true',

})

{'theme': 'white',

'transition': 'none',

'controls': 'false',

'progress': 'true'}

%%capture

%load_ext autoreload

%autoreload 2

# %cd ..

import sys

sys.path.append("..")

import statnlpbook.util as util

util.execute_notebook('language_models.ipynb')

%%html

<script>

function code_toggle() {

if (code_shown){

$('div.input').hide('500');

$('#toggleButton').val('Show Code')

} else {

$('div.input').show('500');

$('#toggleButton').val('Hide Code')

}

code_shown = !code_shown

}

$( document ).ready(function(){

code_shown=false;

$('div.input').hide()

});

</script>

<form action="javascript:code_toggle()"><input type="submit" id="toggleButton" value="Show Code"></form>

from IPython.display import Image

import random

Contextualised Word Representations

What makes a good word representation?

- Representations are distinct

- Similar words have similar representations
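
One common way to make "similar words have similar representations" concrete is cosine similarity between vectors. The sketch below is only an illustration: the three-dimensional vectors and the word choices are made up, not taken from any trained model.

```python
import numpy as np

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

# Hypothetical toy embeddings, invented for illustration only.
toy_embeddings = {
    "trout":  np.array([0.9, 0.1, 0.0]),
    "salmon": np.array([0.8, 0.2, 0.1]),
    "guitar": np.array([0.0, 0.2, 0.9]),
}

sim_fish = cosine_similarity(toy_embeddings["trout"], toy_embeddings["salmon"])
sim_mixed = cosine_similarity(toy_embeddings["trout"], toy_embeddings["guitar"])

# Distinct words get distinct vectors, but related words should score higher.
print(f"trout vs salmon: {sim_fish:.2f}")
print(f"trout vs guitar: {sim_mixed:.2f}")
```

With these toy vectors the two fish score higher with each other than a fish does with an instrument, while no pair is identical.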

What does this mean?

- "Yesterday I saw a bass ..."

Image(url='../img/bass_1.jpg'+'?'+str(random.random()), width=300)

Image(url='../img/bass_2.svg'+'?'+str(random.random()), width=100)

Contextualised Representations Example

- a) "Yesterday I saw a bass swimming in the lake"

Image(url='../img/bass_1.jpg'+'?'+str(random.random()), width=300)

- b) "Yesterday I saw a bass in the music shop"

Image(url='../img/bass_2.svg'+'?'+str(random.random()), width=100)

Contextualised Representations Example

- a) "Yesterday I saw a bass swimming in the lake".

- b) "Yesterday I saw a bass in the music shop".

Image(url='../img/bass_visualisation.jpg'+'?'+str(random.random()), width=500)

What makes a good representation?

- Representations are distinct

- Similar words have similar representations

Additional criterion:

- Representations take context into account

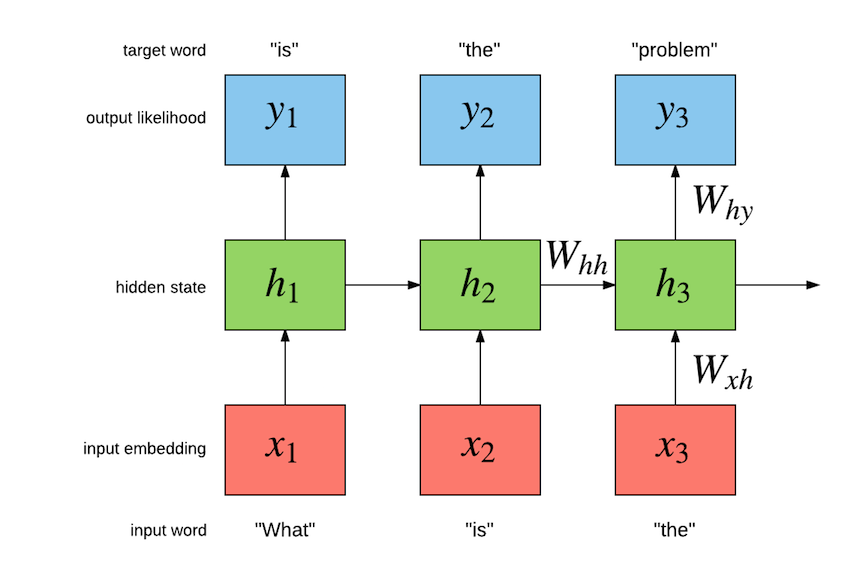

How to train contextualised representations

Basically like word2vec: predict a word from its context (or vice versa).

We can no longer just use a lookup table (i.e., an embedding matrix): it returns the same vector for a word regardless of its context.

Train a network with the sequence as input! Does this remind you of anything?

The hidden state of an RNN LM is a contextualised word representation!
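
A minimal sketch of this idea, assuming a plain (Elman-style) RNN with random, untrained weights: the lookup table returns the same vector for "bass" in both sentences, while the hidden state at "bass" differs because it depends on the preceding words. The two short sentences, the dimensions, and all parameter names are made up for illustration; a real RNN LM would be trained to predict the next word.

```python
import numpy as np

rng = np.random.default_rng(0)
EMB_DIM, HID_DIM = 4, 5

# Hypothetical example sentences with different left context for "bass".
sentences = [
    "he caught a bass".split(),
    "he played a bass".split(),
]
vocab = sorted({w for s in sentences for w in s})
word_to_id = {w: i for i, w in enumerate(vocab)}

# Untrained, randomly initialised parameters (illustration only).
E = 0.5 * rng.normal(size=(len(vocab), EMB_DIM))   # static lookup table
W_xh = 0.5 * rng.normal(size=(EMB_DIM, HID_DIM))   # input-to-hidden weights
W_hh = 0.5 * rng.normal(size=(HID_DIM, HID_DIM))   # hidden-to-hidden weights

def rnn_states(tokens):
    """Return one hidden state per token, reading left to right."""
    h = np.zeros(HID_DIM)
    states = []
    for tok in tokens:
        x = E[word_to_id[tok]]
        h = np.tanh(x @ W_xh + h @ W_hh)
        states.append(h)
    return np.stack(states)

# Static representation: a table lookup, identical in every sentence.
static_a = E[word_to_id["bass"]]
static_b = E[word_to_id["bass"]]

# Contextualised representation: the hidden state at the position of "bass".
ctx_a = rnn_states(sentences[0])[-1]
ctx_b = rnn_states(sentences[1])[-1]

print("static vectors equal:    ", np.allclose(static_a, static_b))
print("contextual vectors equal:", np.allclose(ctx_a, ctx_b))
```

Note that a left-to-right RNN only sees preceding words, so two sentences that share the same prefix up to "bass" would still get the same hidden state at that position.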

Image(url='../img/elmo_1.png'+'?'+str(random.random()), width=800)

"Let's stick to improvisation in this skit"

Image credit: http://jalammar.github.io/illustrated-bert/

Bidirectional RNN LM

An RNN (or LSTM) LM only considers preceding context.

ELMo (Embeddings from Language Models) is based on a biLM: bidirectional language model (Peters et al., 2018).

Image(url='../img/elmo_2.png'+'?'+str(random.random()), width=1200)

Image(url='../img/elmo_3.png'+'?'+str(random.random()), width=1200)

Solution

Run a forward LM and a backward LM over the sequence and combine their hidden states. This prevents a word from being used to predict itself, while still allowing the model to consider both preceding and following words.
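
A rough sketch of the bidirectional idea, using the two "bass" sentences from the earlier slides. It assumes two independent plain RNNs with random, untrained weights (ELMo itself uses trained LSTMs; the dimensions and names here are hypothetical). Because both sentences share the prefix "yesterday i saw a", the forward states at "bass" are identical; only the backward states, which read the following words, tell the two senses apart.

```python
import numpy as np

rng = np.random.default_rng(1)
EMB_DIM, HID_DIM = 4, 5

sent_a = "yesterday i saw a bass swimming in the lake".split()
sent_b = "yesterday i saw a bass in the music shop".split()
vocab = sorted(set(sent_a) | set(sent_b))
word_to_id = {w: i for i, w in enumerate(vocab)}

# Untrained, randomly initialised parameters (illustration only).
E = 0.5 * rng.normal(size=(len(vocab), EMB_DIM))

def init_rnn():
    """Random input-to-hidden and hidden-to-hidden weight matrices."""
    return (0.5 * rng.normal(size=(EMB_DIM, HID_DIM)),
            0.5 * rng.normal(size=(HID_DIM, HID_DIM)))

fwd_params, bwd_params = init_rnn(), init_rnn()

def run_rnn(tokens, params):
    """Return the list of hidden states, one per token, in reading order."""
    W_xh, W_hh = params
    h = np.zeros(HID_DIM)
    states = []
    for tok in tokens:
        h = np.tanh(E[word_to_id[tok]] @ W_xh + h @ W_hh)
        states.append(h)
    return states

def bi_states(tokens):
    """Forward states and backward states, both aligned to token order."""
    fwd = run_rnn(tokens, fwd_params)
    bwd = run_rnn(tokens[::-1], bwd_params)[::-1]  # re-align to original order
    return fwd, bwd

pos = sent_a.index("bass")  # "bass" is at the same position in both sentences
fwd_a, bwd_a = bi_states(sent_a)
fwd_b, bwd_b = bi_states(sent_b)

same_forward = np.allclose(fwd_a[pos], fwd_b[pos])    # identical left context
same_backward = np.allclose(bwd_a[pos], bwd_b[pos])   # different right context

# Contextualised representation: concatenation of both directions.
bass_a = np.concatenate([fwd_a[pos], bwd_a[pos]])
bass_b = np.concatenate([fwd_b[pos], bwd_b[pos]])

print("forward states equal: ", same_forward)
print("backward states equal:", same_backward)
```

When such a model is trained as an LM, the forward direction predicts word t from the words before it and the backward direction from the words after it, so neither direction sees the word it is predicting.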

Problem: Long-Term Dependencies

LSTMs have longer-term memory, but they still forget.

Solution: transformers! (Vaswani et al., 2017)

- In 2022, all state-of-the-art LMs are transformers.

- Yes, also GPT-3
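
The core operation of the transformer is scaled dot-product self-attention, in which every position attends directly to every other position instead of passing information through a chain of recurrent steps. Below is a minimal single-head sketch in NumPy with random, untrained projection matrices; it leaves out multiple heads, positional encodings, masking, and the feed-forward layers, and the sizes are arbitrary.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X has shape (seq_len, d_model); returns the outputs of shape
    (seq_len, d_k) and the attention weights of shape (seq_len, seq_len).
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # similarity of every pair of positions
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 9, 8, 4               # arbitrary sizes for illustration
X = rng.normal(size=(seq_len, d_model))       # stand-in for input token vectors
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

outputs, weights = self_attention(X, W_q, W_k, W_v)
print(outputs.shape)         # one contextualised vector per position
print(weights.sum(axis=1))   # attention weights per position sum to 1
```

Each output row is a weighted mix of all positions in the sequence, so the first and last tokens are one step apart regardless of sequence length.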

Image(url='../img/transformers.png'+'?'+str(random.random()), width=400)

Summary

- Static word embeddings do not differ depending on context

- Contextualised representations are dynamic

Additional Reading