import keras

keras.__version__

Using TensorFlow backend.

'2.0.8'

Using a pre-trained convnet

This notebook contains the code sample found in Chapter 5, Section 3 of Deep Learning with Python. Note that the original text features far more content, in particular further explanations and figures: in this notebook, you will only find source code and related comments.

A common and highly effective approach to deep learning on small image datasets is to leverage a pre-trained network. A pre-trained network is simply a saved network previously trained on a large dataset, typically on a large-scale image classification task. If this original dataset is large enough and general enough, then the spatial feature hierarchy learned by the pre-trained network can effectively act as a generic model of our visual world, and hence its features can prove useful for many different computer vision problems, even though these new problems might involve completely different classes from those of the original task. For instance, one might train a network on ImageNet (where classes are mostly animals and everyday objects) and then re-purpose this trained network for something as remote as identifying furniture items in images. Such portability of learned features across different problems is a key advantage of deep learning compared to many older shallow learning approaches, and it makes deep learning very effective for small-data problems.

In our case, we will consider a large convnet trained on the ImageNet dataset (1.4 million labeled images and 1000 different classes). ImageNet contains many animal classes, including different species of cats and dogs, and we can thus expect to perform very well on our cat vs. dog classification problem.

We will use the VGG16 architecture, developed by Karen Simonyan and Andrew Zisserman in 2014, a simple and widely used convnet architecture for ImageNet. Although it is a bit of an older model, far from the current state of the art and somewhat heavier than many other recent models, we chose it because its architecture is similar to what you are already familiar with, and easy to understand without introducing any new concepts. This may be your first encounter with one of these cutesie model names -- VGG, ResNet, Inception, Inception-ResNet, Xception... you will get used to them, as they will come up frequently if you keep doing deep learning for computer vision.

There are two ways to leverage a pre-trained network: feature extraction and fine-tuning. We will cover both of them. Let's start with feature extraction.

Feature extraction

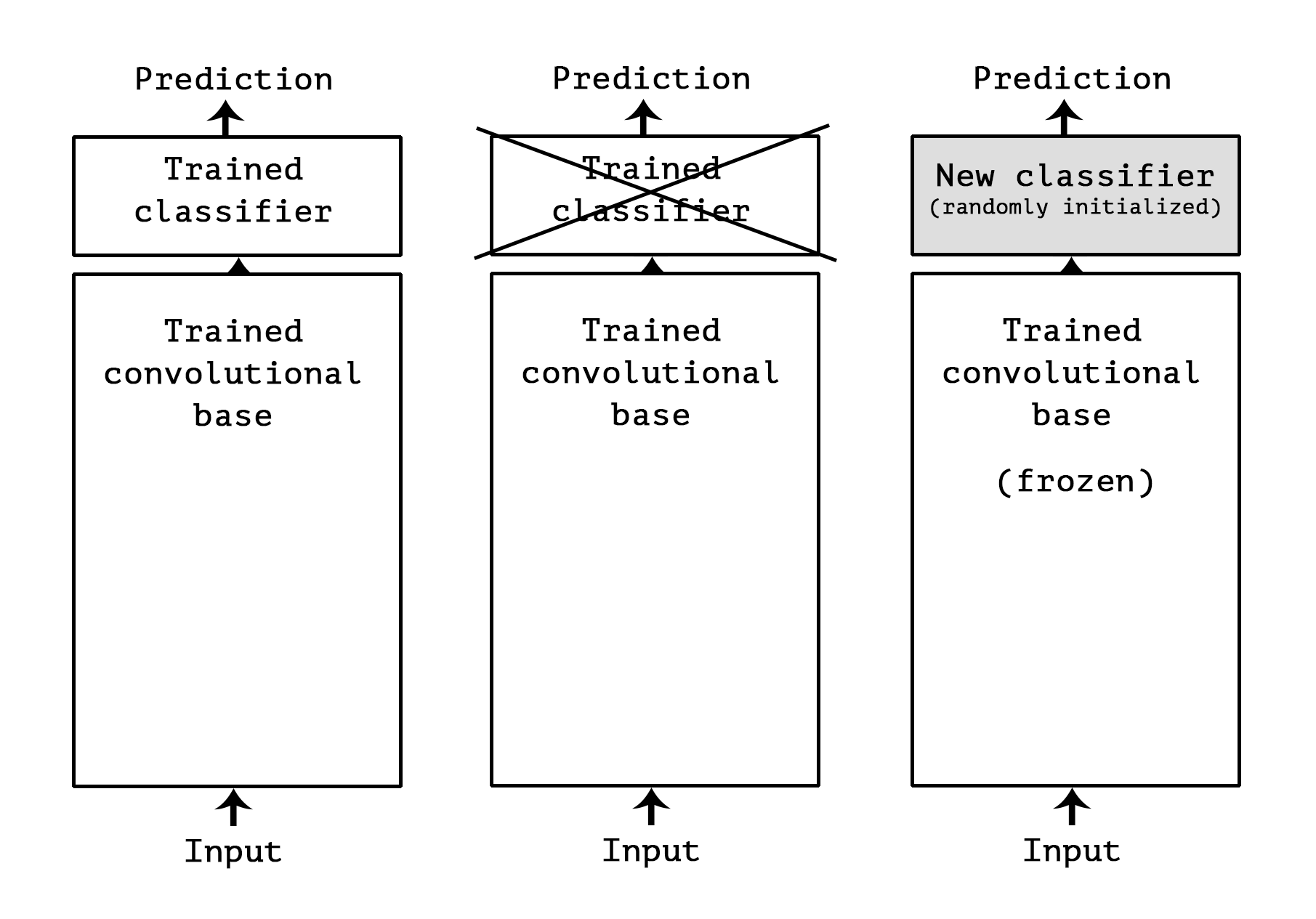

Feature extraction consists of using the representations learned by a previous network to extract interesting features from new samples. These features are then run through a new classifier, which is trained from scratch.

As we saw previously, convnets used for image classification comprise two parts: they start with a series of pooling and convolution layers, and they end with a densely-connected classifier. The first part is called the "convolutional base" of the model. In the case of convnets, "feature extraction" will simply consist of taking the convolutional base of a previously-trained network, running the new data through it, and training a new classifier on top of the output.

Why only reuse the convolutional base? Could we reuse the densely-connected classifier as well? In general, this should be avoided. The reason is simply that the representations learned by the convolutional base are likely to be more generic and therefore more reusable: the feature maps of a convnet are presence maps of generic concepts over a picture, which are likely to be useful regardless of the computer vision problem at hand. On the other hand, the representations learned by the classifier will necessarily be very specific to the set of classes that the model was trained on -- they will only contain information about the presence probability of this or that class in the entire picture. Additionally, representations found in densely-connected layers no longer contain any information about where objects are located in the input image: these layers get rid of the notion of space, whereas the object location is still described by convolutional feature maps. For problems where object location matters, densely-connected features would be largely useless.

Note that the level of generality (and therefore reusability) of the representations extracted by specific convolution layers depends on the depth of the layer in the model. Layers that come earlier in the model extract local, highly generic feature maps (such as visual edges, colors, and textures), while layers higher-up extract more abstract concepts (such as "cat ear" or "dog eye"). So if your new dataset differs a lot from the dataset that the original model was trained on, you may be better off using only the first few layers of the model to do feature extraction, rather than using the entire convolutional base.

In our case, since the ImageNet class set did contain multiple dog and cat classes, it is likely that it would be beneficial to reuse the information contained in the densely-connected layers of the original model. However, we will choose not to, in order to cover the more general case where the class set of the new problem does not overlap with the class set of the original model.

Let's put this in practice by using the convolutional base of the VGG16 network, trained on ImageNet, to extract interesting features from our cat and dog images, and then training a cat vs. dog classifier on top of these features.

The VGG16 model, among others, comes pre-packaged with Keras. You can import it from the keras.applications module. Here's the list of image classification models (all pre-trained on the ImageNet dataset) that are available as part of keras.applications:

- Xception

- InceptionV3

- ResNet50

- VGG16

- VGG19

- MobileNet

Let's instantiate the VGG16 model:

from keras.applications import VGG16

conv_base = VGG16(weights='imagenet',
                  include_top=False,
                  input_shape=(150, 150, 3))

We passed three arguments to the constructor:

- weights, to specify which weight checkpoint to initialize the model from.

- include_top, which refers to including or not the densely-connected classifier on top of the network. By default, this densely-connected classifier would correspond to the 1000 classes from ImageNet. Since we intend to use our own densely-connected classifier (with only two classes, cat and dog), we don't need to include it.

- input_shape, the shape of the image tensors that we will feed to the network. This argument is purely optional: if we don't pass it, then the network will be able to process inputs of any size.

Here's the detail of the architecture of the VGG16 convolutional base: it's very similar to the simple convnets that you are already familiar with.

conv_base.summary()

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | (None, 150, 150, 3) | 0 |
| block1_conv1 (Conv2D) | (None, 150, 150, 64) | 1792 |
| block1_conv2 (Conv2D) | (None, 150, 150, 64) | 36928 |
| block1_pool (MaxPooling2D) | (None, 75, 75, 64) | 0 |
| block2_conv1 (Conv2D) | (None, 75, 75, 128) | 73856 |
| block2_conv2 (Conv2D) | (None, 75, 75, 128) | 147584 |
| block2_pool (MaxPooling2D) | (None, 37, 37, 128) | 0 |
| block3_conv1 (Conv2D) | (None, 37, 37, 256) | 295168 |
| block3_conv2 (Conv2D) | (None, 37, 37, 256) | 590080 |
| block3_conv3 (Conv2D) | (None, 37, 37, 256) | 590080 |
| block3_pool (MaxPooling2D) | (None, 18, 18, 256) | 0 |
| block4_conv1 (Conv2D) | (None, 18, 18, 512) | 1180160 |
| block4_conv2 (Conv2D) | (None, 18, 18, 512) | 2359808 |
| block4_conv3 (Conv2D) | (None, 18, 18, 512) | 2359808 |
| block4_pool (MaxPooling2D) | (None, 9, 9, 512) | 0 |
| block5_conv1 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_conv2 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_conv3 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_pool (MaxPooling2D) | (None, 4, 4, 512) | 0 |

Total params: 14,714,688

Trainable params: 14,714,688

Non-trainable params: 0

The final feature map has shape (4, 4, 512). That's the feature on top of which we will stick a densely-connected classifier.
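The spatial sizes in the summary can be reproduced by hand. The sketch below assumes what the summary shows: the convolution layers keep height and width unchanged, and each of the five pooling layers halves them, rounding down. `feature_map_size` is a hypothetical helper written for this illustration, not a Keras function.

```python
def feature_map_size(input_size, num_pool_stages=5):
    """Spatial size after each 2x2, stride-2 max-pooling stage (floor division)."""
    sizes = [input_size]
    for _ in range(num_pool_stages):
        sizes.append(sizes[-1] // 2)
    return sizes

sizes = feature_map_size(150)
print(sizes)  # [150, 75, 37, 18, 9, 4]

# The last block has 512 filters, hence the final (4, 4, 512) feature map.
final_shape = (sizes[-1], sizes[-1], 512)
print(final_shape)  # (4, 4, 512)
```

The odd sizes (75, 37, 9) are where the floor division discards a row and a column.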

At this point, there are two ways we could proceed:

- Running the convolutional base over our dataset, recording its output to a Numpy array on disk, then using this data as input to a standalone densely-connected classifier similar to those you have seen in the first chapters of this book. This solution is very fast and cheap to run, because it only requires running the convolutional base once for every input image, and the convolutional base is by far the most expensive part of the pipeline. However, for the exact same reason, this technique would not allow us to leverage data augmentation at all.

- Extending the model we have (conv_base) by adding Dense layers on top, and running the whole thing end-to-end on the input data. This allows us to use data augmentation, because every input image is going through the convolutional base every time it is seen by the model. However, for this same reason, this technique is far more expensive than the first one.
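To make the cost difference between the two options concrete, here is a rough count of forward passes through the convolutional base. It is only a back-of-the-envelope sketch: it uses the 2000 training images and 30 epochs that appear later in this notebook, and it ignores the validation and test images.

```python
train_images = 2000   # size of the training set used in this notebook
epochs = 30           # number of epochs used in the training runs

# Option 1: each image goes through the convolutional base exactly once.
passes_precomputed = train_images

# Option 2: each image goes through the convolutional base once per epoch.
passes_end_to_end = train_images * epochs

ratio = passes_end_to_end // passes_precomputed
print(passes_precomputed, passes_end_to_end, ratio)  # 2000 60000 30
```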

We will cover both techniques. Let's walk through the code required to set up the first one: recording the output of conv_base on our data and using these outputs as inputs to a new model.

We will start by simply running instances of the previously-introduced ImageDataGenerator to extract images as Numpy arrays as well as their labels. We will extract features from these images simply by calling the predict method of the conv_base model.

import os
import numpy as np
from keras.preprocessing.image import ImageDataGenerator

base_dir = '/Users/fchollet/Downloads/cats_and_dogs_small'
train_dir = os.path.join(base_dir, 'train')
validation_dir = os.path.join(base_dir, 'validation')
test_dir = os.path.join(base_dir, 'test')

datagen = ImageDataGenerator(rescale=1./255)
batch_size = 20

def extract_features(directory, sample_count):
    features = np.zeros(shape=(sample_count, 4, 4, 512))
    labels = np.zeros(shape=(sample_count))
    generator = datagen.flow_from_directory(
        directory,
        target_size=(150, 150),
        batch_size=batch_size,
        class_mode='binary')
    i = 0
    for inputs_batch, labels_batch in generator:
        features_batch = conv_base.predict(inputs_batch)
        features[i * batch_size : (i + 1) * batch_size] = features_batch
        labels[i * batch_size : (i + 1) * batch_size] = labels_batch
        i += 1
        if i * batch_size >= sample_count:
            # Note that since generators yield data indefinitely in a loop,
            # we must `break` after every image has been seen once.
            break
    return features, labels

train_features, train_labels = extract_features(train_dir, 2000)
validation_features, validation_labels = extract_features(validation_dir, 1000)
test_features, test_labels = extract_features(test_dir, 1000)

Found 2000 images belonging to 2 classes. Found 1000 images belonging to 2 classes. Found 1000 images belonging to 2 classes.
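The slicing and the `break` in `extract_features` are easier to follow without Keras in the way. The sketch below replaces the directory iterator with a hypothetical stand-in, `endless_batches`, which yields batches of sample indices forever and (unlike the real generator) does not shuffle. `collect_once` is also a name made up for this illustration.

```python
import itertools

def endless_batches(num_samples, batch_size):
    """Stand-in for a Keras directory iterator: yields index batches forever."""
    indices = list(range(num_samples))
    batches = [indices[i:i + batch_size] for i in range(0, num_samples, batch_size)]
    return itertools.cycle(batches)

def collect_once(num_samples, batch_size):
    """Fill a preallocated buffer one batch at a time, stopping after one full pass."""
    seen = [None] * num_samples
    i = 0
    for batch in endless_batches(num_samples, batch_size):
        seen[i * batch_size:(i + 1) * batch_size] = batch
        i += 1
        if i * batch_size >= num_samples:
            break  # without this, the loop would never terminate
    return seen, i

seen, num_batches = collect_once(2000, 20)
print(num_batches)  # 100
```

With 2000 samples and a batch size of 20, the loop stops after exactly 100 batches, and every slot of the buffer has been written once.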

The extracted features are currently of shape (samples, 4, 4, 512). We will feed them to a densely-connected classifier, so first we must flatten them to (samples, 8192):

train_features = np.reshape(train_features, (2000, 4 * 4 * 512))

validation_features = np.reshape(validation_features, (1000, 4 * 4 * 512))

test_features = np.reshape(test_features, (1000, 4 * 4 * 512))
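As a quick check of the flattened size, and of where each feature ends up, here is a small sketch. It assumes the default row-major (C-order) layout that `np.reshape` uses; `flat_index` is a hypothetical helper, not a NumPy function.

```python
def flat_index(h, w, c, width=4, channels=512):
    """Position of element (h, w, c) after a row-major (C-order) flatten."""
    return (h * width + w) * channels + c

flat_size = 4 * 4 * 512
first = flat_index(0, 0, 0)
last = flat_index(3, 3, 511)
print(flat_size, first, last)  # 8192 0 8191
```

The 512 channel values of one spatial position stay contiguous in the flattened vector.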

At this point, we can define our densely-connected classifier (note the use of dropout for regularization), and train it on the data and labels that we just recorded:

from keras import models
from keras import layers
from keras import optimizers

model = models.Sequential()
model.add(layers.Dense(256, activation='relu', input_dim=4 * 4 * 512))
model.add(layers.Dropout(0.5))
model.add(layers.Dense(1, activation='sigmoid'))

model.compile(optimizer=optimizers.RMSprop(lr=2e-5),
              loss='binary_crossentropy',
              metrics=['acc'])

history = model.fit(train_features, train_labels,
                    epochs=30,
                    batch_size=20,
                    validation_data=(validation_features, validation_labels))

Train on 2000 samples, validate on 1000 samples Epoch 1/30 2000/2000 [==============================] - 1s - loss: 0.6253 - acc: 0.6455 - val_loss: 0.4526 - val_acc: 0.8300 Epoch 2/30 2000/2000 [==============================] - 0s - loss: 0.4490 - acc: 0.7965 - val_loss: 0.3784 - val_acc: 0.8450 Epoch 3/30 2000/2000 [==============================] - 0s - loss: 0.3670 - acc: 0.8490 - val_loss: 0.3327 - val_acc: 0.8660 Epoch 4/30 2000/2000 [==============================] - 0s - loss: 0.3176 - acc: 0.8705 - val_loss: 0.3115 - val_acc: 0.8820 Epoch 5/30 2000/2000 [==============================] - 0s - loss: 0.3017 - acc: 0.8800 - val_loss: 0.2926 - val_acc: 0.8820 Epoch 6/30 2000/2000 [==============================] - 0s - loss: 0.2674 - acc: 0.8960 - val_loss: 0.2799 - val_acc: 0.8880 Epoch 7/30 2000/2000 [==============================] - 0s - loss: 0.2510 - acc: 0.9040 - val_loss: 0.2732 - val_acc: 0.8890 Epoch 8/30 2000/2000 [==============================] - 0s - loss: 0.2414 - acc: 0.9030 - val_loss: 0.2644 - val_acc: 0.8950 Epoch 9/30 2000/2000 [==============================] - 0s - loss: 0.2307 - acc: 0.9070 - val_loss: 0.2583 - val_acc: 0.8890 Epoch 10/30 2000/2000 [==============================] - 0s - loss: 0.2174 - acc: 0.9205 - val_loss: 0.2577 - val_acc: 0.8930 Epoch 11/30 2000/2000 [==============================] - 0s - loss: 0.1997 - acc: 0.9235 - val_loss: 0.2500 - val_acc: 0.8970 Epoch 12/30 2000/2000 [==============================] - 0s - loss: 0.1962 - acc: 0.9280 - val_loss: 0.2470 - val_acc: 0.8950 Epoch 13/30 2000/2000 [==============================] - 0s - loss: 0.1864 - acc: 0.9275 - val_loss: 0.2460 - val_acc: 0.8980 Epoch 14/30 2000/2000 [==============================] - 0s - loss: 0.1796 - acc: 0.9325 - val_loss: 0.2473 - val_acc: 0.8950 Epoch 15/30 2000/2000 [==============================] - 0s - loss: 0.1760 - acc: 0.9380 - val_loss: 0.2450 - val_acc: 0.8960 Epoch 16/30 2000/2000 [==============================] - 0s - loss: 
0.1612 - acc: 0.9400 - val_loss: 0.2543 - val_acc: 0.8940 Epoch 17/30 2000/2000 [==============================] - 0s - loss: 0.1595 - acc: 0.9425 - val_loss: 0.2392 - val_acc: 0.9010 Epoch 18/30 2000/2000 [==============================] - 0s - loss: 0.1534 - acc: 0.9470 - val_loss: 0.2385 - val_acc: 0.9000 Epoch 19/30 2000/2000 [==============================] - 0s - loss: 0.1494 - acc: 0.9490 - val_loss: 0.2453 - val_acc: 0.9000 Epoch 20/30 2000/2000 [==============================] - 0s - loss: 0.1409 - acc: 0.9515 - val_loss: 0.2394 - val_acc: 0.9030 Epoch 21/30 2000/2000 [==============================] - 0s - loss: 0.1304 - acc: 0.9535 - val_loss: 0.2379 - val_acc: 0.9010 Epoch 22/30 2000/2000 [==============================] - 0s - loss: 0.1294 - acc: 0.9550 - val_loss: 0.2376 - val_acc: 0.9010 Epoch 23/30 2000/2000 [==============================] - 0s - loss: 0.1269 - acc: 0.9535 - val_loss: 0.2473 - val_acc: 0.8970 Epoch 24/30 2000/2000 [==============================] - 0s - loss: 0.1234 - acc: 0.9635 - val_loss: 0.2372 - val_acc: 0.9020 Epoch 25/30 2000/2000 [==============================] - 0s - loss: 0.1159 - acc: 0.9635 - val_loss: 0.2380 - val_acc: 0.9030 Epoch 26/30 2000/2000 [==============================] - 0s - loss: 0.1093 - acc: 0.9665 - val_loss: 0.2409 - val_acc: 0.9030 Epoch 27/30 2000/2000 [==============================] - 0s - loss: 0.1069 - acc: 0.9605 - val_loss: 0.2477 - val_acc: 0.9000 Epoch 28/30 2000/2000 [==============================] - 0s - loss: 0.1071 - acc: 0.9670 - val_loss: 0.2486 - val_acc: 0.9010 Epoch 29/30 2000/2000 [==============================] - 0s - loss: 0.0988 - acc: 0.9695 - val_loss: 0.2437 - val_acc: 0.9030 Epoch 30/30 2000/2000 [==============================] - 0s - loss: 0.0968 - acc: 0.9680 - val_loss: 0.2428 - val_acc: 0.9030

Training is very fast, since we only have to deal with two Dense layers -- an epoch takes less than one second even on CPU.

Let's take a look at the loss and accuracy curves during training:

import matplotlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

We reach a validation accuracy of about 90%, much better than what we could achieve in the previous section with our small model trained from scratch. However, our plots also indicate that we are overfitting almost from the start -- despite using dropout with a fairly large rate. This is because this technique does not leverage data augmentation, which is essential to preventing overfitting with small image datasets.
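One way to read the overfitting off the numbers rather than the plots is to track the gap between validation loss and training loss. The sketch below uses four rows copied from the training log of this run. The negative gap at epoch 1 is not a sign of a healthy model: it is most likely because the training loss is computed with dropout active and averaged over the whole epoch.

```python
# (epoch, training loss, validation loss), copied from the training log.
log = [
    (1, 0.6253, 0.4526),
    (10, 0.2174, 0.2577),
    (20, 0.1409, 0.2394),
    (30, 0.0968, 0.2428),
]

gaps = [(epoch, round(val_loss - train_loss, 4)) for epoch, train_loss, val_loss in log]
for epoch, gap in gaps:
    print(f"epoch {epoch:2d}: val_loss - loss = {gap:+.4f}")
```

The training loss keeps falling while the validation loss stalls around 0.24, so the gap widens steadily.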

Now, let's review the second technique we mentioned for doing feature extraction, which is much slower and more expensive, but which allows us to leverage data augmentation during training: extending the conv_base model and running it end-to-end on the inputs. Note that this technique is so expensive that you should only attempt it if you have access to a GPU: it is far too slow to be practical on CPU. If you cannot run your code on GPU, then the previous technique is the way to go.

Because models behave just like layers, you can add a model (like our conv_base) to a Sequential model just like you would add a layer. So you can do the following:

from keras import models

from keras import layers

model = models.Sequential()

model.add(conv_base)

model.add(layers.Flatten())

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

This is what our model looks like now:

model.summary()

| Layer (type) | Output Shape | Param # |
|---|---|---|
| vgg16 (Model) | (None, 4, 4, 512) | 14714688 |
| flatten_1 (Flatten) | (None, 8192) | 0 |
| dense_3 (Dense) | (None, 256) | 2097408 |
| dense_4 (Dense) | (None, 1) | 257 |

Total params: 16,812,353

Trainable params: 16,812,353

Non-trainable params: 0

As you can see, the convolutional base of VGG16 has 14,714,688 parameters, which is very large. The classifier we are adding on top has 2 million parameters.
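These totals can be recomputed from the layer shapes. The sketch below assumes 3x3 kernels with one bias per filter, which matches the per-layer counts in the summary. `conv2d_params` and `dense_params` are hypothetical helpers for this illustration.

```python
def conv2d_params(in_channels, out_channels, kernel=3):
    """Weights plus biases of a Conv2D layer with a square kernel."""
    return kernel * kernel * in_channels * out_channels + out_channels

def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units

# (input channels, output channels) of the 13 convolution layers in the summary.
vgg16_convs = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

conv_base_params = sum(conv2d_params(i, o) for i, o in vgg16_convs)
classifier_params = dense_params(4 * 4 * 512, 256) + dense_params(256, 1)

print(conv_base_params)                      # 14714688
print(classifier_params)                     # 2097665
print(conv_base_params + classifier_params)  # 16812353
```

Almost all of the classifier's parameters sit in the first Dense layer, because it connects 8192 inputs to 256 units.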

Before we compile and train our model, a very important thing to do is to freeze the convolutional base. "Freezing" a layer or set of layers means preventing their weights from getting updated during training. If we don't do this, then the representations that were previously learned by the convolutional base would get modified during training. Since the Dense layers on top are randomly initialized, very large weight updates would be propagated through the network, effectively destroying the representations previously learned.

In Keras, freezing a network is done by setting its trainable attribute to False:

print('This is the number of trainable weights '
      'before freezing the conv base:', len(model.trainable_weights))

This is the number of trainable weights before freezing the conv base: 30

conv_base.trainable = False

print('This is the number of trainable weights '
      'after freezing the conv base:', len(model.trainable_weights))

This is the number of trainable weights after freezing the conv base: 4

With this setup, only the weights from the two Dense layers that we added will be trained. That's a total of four weight tensors: two per layer (the main weight matrix and the bias vector). Note that in order for these changes to take effect, we must first compile the model. If you ever modify weight trainability after compilation, you should then re-compile the model, or these changes would be ignored.
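The counts 30 and 4 follow from simple bookkeeping: 13 convolution layers and 2 Dense layers, each with a kernel and a bias. The sketch below mimics that bookkeeping with a toy class. `ToyLayer` and `count_trainable` are made up for this illustration and are not part of Keras.

```python
class ToyLayer:
    """Minimal stand-in for a layer: a name, a weight-tensor count, a trainable flag."""
    def __init__(self, name, num_weight_tensors):
        self.name = name
        self.num_weight_tensors = num_weight_tensors
        self.trainable = True

def count_trainable(layers):
    """Number of weight tensors held by layers that are still trainable."""
    return sum(layer.num_weight_tensors for layer in layers if layer.trainable)

# 13 convolution layers (kernel + bias each); pooling layers hold no weights.
conv_layers = [ToyLayer(f"conv_{i}", 2) for i in range(13)]
dense_layers = [ToyLayer("dense_1", 2), ToyLayer("dense_2", 2)]
all_layers = conv_layers + dense_layers

before = count_trainable(all_layers)
for layer in conv_layers:      # plays the role of conv_base.trainable = False
    layer.trainable = False
after = count_trainable(all_layers)
print(before, after)  # 30 4
```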

Now we can start training our model, with the same data augmentation configuration that we used in our previous example:

from keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')

# Note that the validation data should not be augmented!
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    # This is the target directory
    train_dir,
    # All images will be resized to 150x150
    target_size=(150, 150),
    batch_size=20,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(
    validation_dir,
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')

model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(lr=2e-5),
              metrics=['acc'])

history = model.fit_generator(
    train_generator,
    steps_per_epoch=100,
    epochs=30,
    validation_data=validation_generator,
    validation_steps=50,
    verbose=2)

Found 2000 images belonging to 2 classes. Found 1000 images belonging to 2 classes. Epoch 1/30 74s - loss: 0.4465 - acc: 0.7810 - val_loss: 0.2056 - val_acc: 0.9120 Epoch 2/30 72s - loss: 0.2738 - acc: 0.8905 - val_loss: 0.1239 - val_acc: 0.9550 Epoch 3/30 72s - loss: 0.2088 - acc: 0.9145 - val_loss: 0.1194 - val_acc: 0.9560 Epoch 4/30 72s - loss: 0.1835 - acc: 0.9280 - val_loss: 0.1025 - val_acc: 0.9550 Epoch 5/30 72s - loss: 0.1642 - acc: 0.9330 - val_loss: 0.0903 - val_acc: 0.9680 Epoch 6/30 72s - loss: 0.1360 - acc: 0.9410 - val_loss: 0.0794 - val_acc: 0.9740 Epoch 7/30 72s - loss: 0.1426 - acc: 0.9465 - val_loss: 0.0968 - val_acc: 0.9560 Epoch 8/30 72s - loss: 0.1013 - acc: 0.9580 - val_loss: 0.1411 - val_acc: 0.9430 Epoch 9/30 72s - loss: 0.1177 - acc: 0.9500 - val_loss: 0.2105 - val_acc: 0.9310 Epoch 10/30 72s - loss: 0.0949 - acc: 0.9620 - val_loss: 0.0900 - val_acc: 0.9710 Epoch 11/30 72s - loss: 0.0915 - acc: 0.9655 - val_loss: 0.1204 - val_acc: 0.9630 Epoch 12/30 72s - loss: 0.0782 - acc: 0.9645 - val_loss: 0.0995 - val_acc: 0.9650 Epoch 13/30 72s - loss: 0.0717 - acc: 0.9755 - val_loss: 0.1269 - val_acc: 0.9580 Epoch 14/30 72s - loss: 0.0670 - acc: 0.9715 - val_loss: 0.0994 - val_acc: 0.9680 Epoch 15/30 71s - loss: 0.0718 - acc: 0.9735 - val_loss: 0.0558 - val_acc: 0.9790 Epoch 16/30 72s - loss: 0.0612 - acc: 0.9780 - val_loss: 0.0870 - val_acc: 0.9690 Epoch 17/30 71s - loss: 0.0693 - acc: 0.9765 - val_loss: 0.0972 - val_acc: 0.9720 Epoch 18/30 71s - loss: 0.0596 - acc: 0.9785 - val_loss: 0.0832 - val_acc: 0.9730 Epoch 19/30 71s - loss: 0.0497 - acc: 0.9800 - val_loss: 0.1160 - val_acc: 0.9610 Epoch 20/30 71s - loss: 0.0546 - acc: 0.9780 - val_loss: 0.1057 - val_acc: 0.9660 Epoch 21/30 71s - loss: 0.0568 - acc: 0.9825 - val_loss: 0.2012 - val_acc: 0.9500 Epoch 22/30 71s - loss: 0.0493 - acc: 0.9830 - val_loss: 0.1384 - val_acc: 0.9610 Epoch 23/30 71s - loss: 0.0328 - acc: 0.9905 - val_loss: 0.1281 - val_acc: 0.9640 Epoch 24/30 71s - loss: 0.0524 - acc: 
0.9860 - val_loss: 0.0846 - val_acc: 0.9760 Epoch 25/30 71s - loss: 0.0422 - acc: 0.9845 - val_loss: 0.1002 - val_acc: 0.9670 Epoch 26/30 71s - loss: 0.0617 - acc: 0.9825 - val_loss: 0.0858 - val_acc: 0.9760 Epoch 27/30 71s - loss: 0.0568 - acc: 0.9830 - val_loss: 0.0889 - val_acc: 0.9700 Epoch 28/30 71s - loss: 0.0296 - acc: 0.9915 - val_loss: 0.1406 - val_acc: 0.9620 Epoch 29/30 71s - loss: 0.0432 - acc: 0.9890 - val_loss: 0.1535 - val_acc: 0.9650 Epoch 30/30 71s - loss: 0.0354 - acc: 0.9885 - val_loss: 0.1832 - val_acc: 0.9510

model.save('cats_and_dogs_small_3.h5')
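The values steps_per_epoch=100 and validation_steps=50 used above are not arbitrary. Because the generators yield batches endlessly, the training loop has to be told how many batches make up one pass over the data. A minimal sketch of that arithmetic, with `steps_for` as a hypothetical helper:

```python
import math

def steps_for(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)

steps_per_epoch = steps_for(2000, 20)    # 2000 training images
validation_steps = steps_for(1000, 20)   # 1000 validation images
print(steps_per_epoch, validation_steps)  # 100 50
```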

Let's plot our results again:

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

As you can see, we reach a validation accuracy of about 96%. This is much better than our small convnet trained from scratch.

Fine-tuning

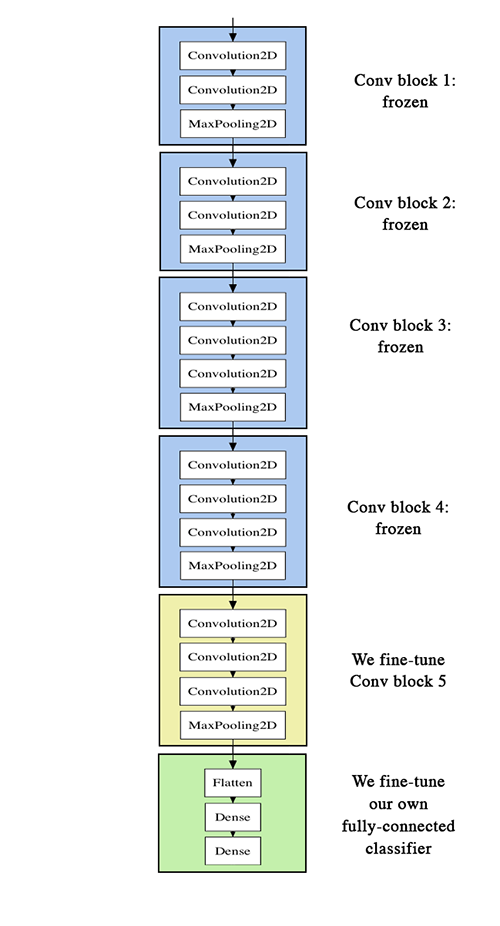

Another widely used technique for model reuse, complementary to feature extraction, is fine-tuning. Fine-tuning consists in unfreezing a few of the top layers of a frozen model base used for feature extraction, and jointly training both the newly added part of the model (in our case, the fully-connected classifier) and these top layers. This is called "fine-tuning" because it slightly adjusts the more abstract representations of the model being reused, in order to make them more relevant for the problem at hand.

We have stated before that it was necessary to freeze the convolutional base of VGG16 in order to be able to train a randomly initialized classifier on top. For the same reason, it is only possible to fine-tune the top layers of the convolutional base once the classifier on top has already been trained. If the classifier wasn't already trained, then the error signal propagating through the network during training would be too large, and the representations previously learned by the layers being fine-tuned would be destroyed. Thus the steps for fine-tuning a network are as follows:

1. Add your custom network on top of an already trained base network.

2. Freeze the base network.

3. Train the part you added.

4. Unfreeze some layers in the base network.

5. Jointly train both these layers and the part you added.

We have already completed the first 3 steps when doing feature extraction. Let's proceed with the 4th step: we will unfreeze our conv_base, and then freeze individual layers inside of it.

As a reminder, this is what our convolutional base looks like:

conv_base.summary()

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | (None, 150, 150, 3) | 0 |
| block1_conv1 (Conv2D) | (None, 150, 150, 64) | 1792 |
| block1_conv2 (Conv2D) | (None, 150, 150, 64) | 36928 |
| block1_pool (MaxPooling2D) | (None, 75, 75, 64) | 0 |
| block2_conv1 (Conv2D) | (None, 75, 75, 128) | 73856 |
| block2_conv2 (Conv2D) | (None, 75, 75, 128) | 147584 |
| block2_pool (MaxPooling2D) | (None, 37, 37, 128) | 0 |
| block3_conv1 (Conv2D) | (None, 37, 37, 256) | 295168 |
| block3_conv2 (Conv2D) | (None, 37, 37, 256) | 590080 |
| block3_conv3 (Conv2D) | (None, 37, 37, 256) | 590080 |
| block3_pool (MaxPooling2D) | (None, 18, 18, 256) | 0 |
| block4_conv1 (Conv2D) | (None, 18, 18, 512) | 1180160 |
| block4_conv2 (Conv2D) | (None, 18, 18, 512) | 2359808 |
| block4_conv3 (Conv2D) | (None, 18, 18, 512) | 2359808 |
| block4_pool (MaxPooling2D) | (None, 9, 9, 512) | 0 |
| block5_conv1 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_conv2 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_conv3 (Conv2D) | (None, 9, 9, 512) | 2359808 |
| block5_pool (MaxPooling2D) | (None, 4, 4, 512) | 0 |

Total params: 14,714,688

Trainable params: 0

Non-trainable params: 14,714,688

We will fine-tune the last 3 convolutional layers, which means that all layers up until block4_pool should be frozen, and the layers block5_conv1, block5_conv2 and block5_conv3 should be trainable.

Why not fine-tune more layers? Why not fine-tune the entire convolutional base? We could. However, we need to consider that:

- Earlier layers in the convolutional base encode more generic, reusable features, while layers higher up encode more specialized features. It is more useful to fine-tune the more specialized features, as these are the ones that need to be repurposed on our new problem. There would be fast-decreasing returns in fine-tuning lower layers.

- The more parameters we are training, the more we are at risk of overfitting. The convolutional base has 15M parameters, so it would be risky to attempt to train it on our small dataset.

Thus, in our situation, it is a good strategy to only fine-tune the top 2 to 3 layers in the convolutional base.
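It is worth putting a number on what "only the top 3 layers" means in parameters. The sketch below takes the per-layer count for the block5 convolutions and the total from conv_base.summary(); the variable names are made up for this illustration.

```python
block5_conv = 3 * 3 * 512 * 512 + 512   # parameters in one block5 convolution layer
fine_tuned = 3 * block5_conv            # block5_conv1, block5_conv2, block5_conv3
conv_base_total = 14714688              # total reported by conv_base.summary()

share = fine_tuned / conv_base_total
print(block5_conv, fine_tuned, f"{share:.1%}")  # 2359808 7079424 48.1%
```

Because the widest layers sit at the top of VGG16, the three fine-tuned layers still account for roughly half of the convolutional base, about 7 million parameters.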

Let's set this up, starting from where we left off in the previous example:

conv_base.trainable = True

set_trainable = False
for layer in conv_base.layers:
    if layer.name == 'block5_conv1':
        set_trainable = True
    if set_trainable:
        layer.trainable = True
    else:
        layer.trainable = False
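To see which layers the loop above leaves trainable, the same flag logic can be run on plain objects that only carry a name and a trainable attribute. This is a sketch with stand-in objects (`toy_layers`), not Keras layers. The layer names are those listed in the summary.

```python
from types import SimpleNamespace

layer_names = [
    "input_1",
    "block1_conv1", "block1_conv2", "block1_pool",
    "block2_conv1", "block2_conv2", "block2_pool",
    "block3_conv1", "block3_conv2", "block3_conv3", "block3_pool",
    "block4_conv1", "block4_conv2", "block4_conv3", "block4_pool",
    "block5_conv1", "block5_conv2", "block5_conv3", "block5_pool",
]
toy_layers = [SimpleNamespace(name=name, trainable=False) for name in layer_names]

set_trainable = False
for layer in toy_layers:
    if layer.name == "block5_conv1":
        set_trainable = True   # flips once and stays on for all later layers
    layer.trainable = set_trainable

trainable_names = [layer.name for layer in toy_layers if layer.trainable]
print(trainable_names)
# ['block5_conv1', 'block5_conv2', 'block5_conv3', 'block5_pool']
```

block5_pool is also marked trainable, which is harmless since pooling layers have no weights.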

Now we can start fine-tuning our network. We will do this with the RMSprop optimizer, using a very low learning rate. The reason for using a low learning rate is that we want to limit the magnitude of the modifications we make to the representations of the 3 layers that we are fine-tuning. Updates that are too large may harm these representations.
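To get a feel for how the learning rate bounds the size of an update, here is a sketch of the first RMSprop step for a single weight. It assumes the textbook form of the update (a running average of squared gradients starting at zero, decay rho=0.9, a small eps added to the denominator). The exact constants in a given Keras version may differ, and `rmsprop_first_step` is a hypothetical helper.

```python
import math

def rmsprop_first_step(grad, lr, rho=0.9, eps=1e-7):
    """Size of the first RMSprop update for one weight (accumulator starts at 0)."""
    avg_sq = (1 - rho) * grad ** 2
    return lr * grad / (math.sqrt(avg_sq) + eps)

large_step = rmsprop_first_step(grad=2.0, lr=1e-3)  # a typical default learning rate
small_step = rmsprop_first_step(grad=2.0, lr=1e-5)  # the rate used for fine-tuning
print(large_step, small_step)
```

Since the gradient is divided by its own running magnitude, the step is roughly proportional to the learning rate and nearly independent of the gradient's scale: going from 1e-3 to 1e-5 shrinks the step by a factor of 100.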

Now let's proceed with fine-tuning:

model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(lr=1e-5),
              metrics=['acc'])

history = model.fit_generator(
    train_generator,
    steps_per_epoch=100,
    epochs=100,
    validation_data=validation_generator,
    validation_steps=50)

Epoch 1/100 100/100 [==============================] - 32s - loss: 0.0215 - acc: 0.9935 - val_loss: 0.0980 - val_acc: 0.9720
Epoch 2/100 100/100 [==============================] - 32s - loss: 0.0131 - acc: 0.9960 - val_loss: 0.1247 - val_acc: 0.9700
Epoch 3/100 100/100 [==============================] - 32s - loss: 0.0140 - acc: 0.9940 - val_loss: 0.1044 - val_acc: 0.9790
Epoch 4/100 100/100 [==============================] - 33s - loss: 0.0102 - acc: 0.9965 - val_loss: 0.1259 - val_acc: 0.9770
Epoch 5/100 100/100 [==============================] - 33s - loss: 0.0137 - acc: 0.9945 - val_loss: 0.1036 - val_acc: 0.9800
Epoch 6/100 100/100 [==============================] - 33s - loss: 0.0183 - acc: 0.9935 - val_loss: 0.1260 - val_acc: 0.9750
Epoch 7/100 100/100 [==============================] - 33s - loss: 0.0141 - acc: 0.9945 - val_loss: 0.1575 - val_acc: 0.9690
Epoch 8/100 100/100 [==============================] - 33s - loss: 0.0094 - acc: 0.9965 - val_loss: 0.0935 - val_acc: 0.9780
Epoch 9/100 100/100 [==============================] - 33s - loss: 0.0079 - acc: 0.9985 - val_loss: 0.1452 - val_acc: 0.9760
Epoch 10/100 100/100 [==============================] - 33s - loss: 0.0127 - acc: 0.9970 - val_loss: 0.1027 - val_acc: 0.9790
Epoch 11/100 100/100 [==============================] - 33s - loss: 0.0097 - acc: 0.9965 - val_loss: 0.1463 - val_acc: 0.9720
Epoch 12/100 100/100 [==============================] - 33s - loss: 0.0055 - acc: 0.9980 - val_loss: 0.1361 - val_acc: 0.9720
Epoch 13/100 100/100 [==============================] - 33s - loss: 0.0274 - acc: 0.9955 - val_loss: 0.1446 - val_acc: 0.9740
Epoch 14/100 100/100 [==============================] - 33s - loss: 0.0043 - acc: 0.9985 - val_loss: 0.1123 - val_acc: 0.9790
Epoch 15/100 100/100 [==============================] - 33s - loss: 0.0057 - acc: 0.9975 - val_loss: 0.1912 - val_acc: 0.9700
Epoch 16/100 100/100 [==============================] - 33s - loss: 0.0144 - acc: 0.9960 - val_loss: 0.1415 - val_acc: 0.9780
Epoch 17/100 100/100 [==============================] - 33s - loss: 0.0048 - acc: 0.9990 - val_loss: 0.1231 - val_acc: 0.9780
Epoch 18/100 100/100 [==============================] - 33s - loss: 0.0188 - acc: 0.9965 - val_loss: 0.1551 - val_acc: 0.9720
Epoch 19/100 100/100 [==============================] - 33s - loss: 0.0160 - acc: 0.9970 - val_loss: 0.2155 - val_acc: 0.9740
Epoch 20/100 100/100 [==============================] - 33s - loss: 0.0047 - acc: 0.9965 - val_loss: 0.1559 - val_acc: 0.9730
Epoch 21/100 100/100 [==============================] - 33s - loss: 0.0132 - acc: 0.9980 - val_loss: 0.1518 - val_acc: 0.9740
Epoch 22/100 100/100 [==============================] - 33s - loss: 0.0086 - acc: 0.9965 - val_loss: 0.1517 - val_acc: 0.9790
Epoch 23/100 100/100 [==============================] - 33s - loss: 0.0070 - acc: 0.9980 - val_loss: 0.1887 - val_acc: 0.9670
Epoch 24/100 100/100 [==============================] - 33s - loss: 0.0044 - acc: 0.9985 - val_loss: 0.1818 - val_acc: 0.9740
Epoch 25/100 100/100 [==============================] - 33s - loss: 0.0159 - acc: 0.9970 - val_loss: 0.1860 - val_acc: 0.9680
Epoch 26/100 100/100 [==============================] - 33s - loss: 0.0056 - acc: 0.9980 - val_loss: 0.1657 - val_acc: 0.9740
Epoch 27/100 100/100 [==============================] - 33s - loss: 0.0118 - acc: 0.9980 - val_loss: 0.1542 - val_acc: 0.9760
Epoch 28/100 100/100 [==============================] - 33s - loss: 0.0031 - acc: 0.9990 - val_loss: 0.1493 - val_acc: 0.9770
Epoch 29/100 100/100 [==============================] - 33s - loss: 0.0114 - acc: 0.9965 - val_loss: 0.1921 - val_acc: 0.9680
Epoch 30/100 100/100 [==============================] - 33s - loss: 0.0031 - acc: 0.9990 - val_loss: 0.1188 - val_acc: 0.9830
Epoch 31/100 100/100 [==============================] - 33s - loss: 0.0068 - acc: 0.9985 - val_loss: 0.1814 - val_acc: 0.9740
Epoch 32/100 100/100 [==============================] - 33s - loss: 0.0096 - acc: 0.9985 - val_loss: 0.2034 - val_acc: 0.9760
Epoch 33/100 100/100 [==============================] - 33s - loss: 0.0072 - acc: 0.9985 - val_loss: 0.1970 - val_acc: 0.9730
Epoch 34/100 100/100 [==============================] - 33s - loss: 0.0047 - acc: 0.9990 - val_loss: 0.2349 - val_acc: 0.9680
Epoch 35/100 100/100 [==============================] - 33s - loss: 0.0066 - acc: 0.9990 - val_loss: 0.1865 - val_acc: 0.9740
Epoch 36/100 100/100 [==============================] - 33s - loss: 0.0115 - acc: 0.9975 - val_loss: 0.1933 - val_acc: 0.9750
Epoch 37/100 100/100 [==============================] - 33s - loss: 0.0101 - acc: 0.9980 - val_loss: 0.1779 - val_acc: 0.9780
Epoch 38/100 100/100 [==============================] - 33s - loss: 0.0101 - acc: 0.9975 - val_loss: 0.1887 - val_acc: 0.9700
Epoch 39/100 100/100 [==============================] - 33s - loss: 0.0093 - acc: 0.9980 - val_loss: 0.2159 - val_acc: 0.9720
Epoch 40/100 100/100 [==============================] - 33s - loss: 0.0049 - acc: 0.9990 - val_loss: 0.1412 - val_acc: 0.9790
Epoch 41/100 100/100 [==============================] - 33s - loss: 0.0052 - acc: 0.9985 - val_loss: 0.2066 - val_acc: 0.9690
Epoch 42/100 100/100 [==============================] - 33s - loss: 0.0043 - acc: 0.9990 - val_loss: 0.1860 - val_acc: 0.9770
Epoch 43/100 100/100 [==============================] - 33s - loss: 0.0031 - acc: 0.9985 - val_loss: 0.2361 - val_acc: 0.9680
Epoch 44/100 100/100 [==============================] - 33s - loss: 0.0012 - acc: 0.9995 - val_loss: 0.2440 - val_acc: 0.9680
Epoch 45/100 100/100 [==============================] - 33s - loss: 0.0035 - acc: 0.9985 - val_loss: 0.1428 - val_acc: 0.9820
Epoch 46/100 100/100 [==============================] - 33s - loss: 0.0111 - acc: 0.9970 - val_loss: 0.1822 - val_acc: 0.9720
Epoch 47/100 100/100 [==============================] - 33s - loss: 0.0047 - acc: 0.9990 - val_loss: 0.1726 - val_acc: 0.9720
Epoch 48/100 100/100 [==============================] - 33s - loss: 0.0039 - acc: 0.9995 - val_loss: 0.2164 - val_acc: 0.9730
Epoch 49/100 100/100 [==============================] - 33s - loss: 0.0060 - acc: 0.9970 - val_loss: 0.1856 - val_acc: 0.9810
Epoch 50/100 100/100 [==============================] - 33s - loss: 0.0126 - acc: 0.9980 - val_loss: 0.1824 - val_acc: 0.9720
Epoch 51/100 100/100 [==============================] - 33s - loss: 0.0155 - acc: 0.9965 - val_loss: 0.1867 - val_acc: 0.9710
Epoch 52/100 100/100 [==============================] - 33s - loss: 0.0059 - acc: 0.9985 - val_loss: 0.2287 - val_acc: 0.9700
Epoch 53/100 100/100 [==============================] - 33s - loss: 0.0046 - acc: 0.9980 - val_loss: 0.2337 - val_acc: 0.9650
Epoch 54/100 100/100 [==============================] - 33s - loss: 0.0087 - acc: 0.9970 - val_loss: 0.1168 - val_acc: 0.9820
Epoch 55/100 100/100 [==============================] - 33s - loss: 0.0046 - acc: 0.9985 - val_loss: 0.1496 - val_acc: 0.9790
Epoch 56/100 100/100 [==============================] - 33s - loss: 0.0067 - acc: 0.9985 - val_loss: 0.1615 - val_acc: 0.9750
Epoch 57/100 100/100 [==============================] - 33s - loss: 0.0066 - acc: 0.9975 - val_loss: 0.2520 - val_acc: 0.9630
Epoch 58/100 100/100 [==============================] - 33s - loss: 0.0017 - acc: 0.9990 - val_loss: 0.1899 - val_acc: 0.9740
Epoch 59/100 100/100 [==============================] - 33s - loss: 0.0022 - acc: 0.9990 - val_loss: 0.2321 - val_acc: 0.9680
Epoch 60/100 100/100 [==============================] - 33s - loss: 0.0091 - acc: 0.9975 - val_loss: 0.1416 - val_acc: 0.9790
Epoch 61/100 100/100 [==============================] - 33s - loss: 0.0054 - acc: 0.9985 - val_loss: 0.1749 - val_acc: 0.9720
Epoch 62/100 100/100 [==============================] - 33s - loss: 0.0028 - acc: 0.9995 - val_loss: 0.2065 - val_acc: 0.9740
Epoch 63/100 100/100 [==============================] - 33s - loss: 0.0058 - acc: 0.9985 - val_loss: 0.1749 - val_acc: 0.9750
Epoch 64/100 100/100 [==============================] - 33s - loss: 0.0076 - acc: 0.9980 - val_loss: 0.1542 - val_acc: 0.9760
Epoch 65/100 100/100 [==============================] - 33s - loss: 0.0081 - acc: 0.9980 - val_loss: 0.2627 - val_acc: 0.9660
Epoch 66/100 100/100 [==============================] - 33s - loss: 0.0107 - acc: 0.9980 - val_loss: 0.1748 - val_acc: 0.9740
Epoch 67/100 100/100 [==============================] - 33s - loss: 5.1450e-04 - acc: 1.0000 - val_loss: 0.1922 - val_acc: 0.9710
Epoch 68/100 100/100 [==============================] - 33s - loss: 0.0054 - acc: 0.9985 - val_loss: 0.2299 - val_acc: 0.9680
Epoch 69/100 100/100 [==============================] - 33s - loss: 0.0091 - acc: 0.9965 - val_loss: 0.1533 - val_acc: 0.9730
Epoch 70/100 100/100 [==============================] - 33s - loss: 0.0190 - acc: 0.9965 - val_loss: 0.2232 - val_acc: 0.9690
Epoch 71/100 100/100 [==============================] - 33s - loss: 0.0058 - acc: 0.9985 - val_loss: 0.1773 - val_acc: 0.9710
Epoch 72/100 100/100 [==============================] - 33s - loss: 0.0072 - acc: 0.9990 - val_loss: 0.1660 - val_acc: 0.9760
Epoch 73/100 100/100 [==============================] - 33s - loss: 0.0036 - acc: 0.9990 - val_loss: 0.2354 - val_acc: 0.9700
Epoch 74/100 100/100 [==============================] - 33s - loss: 0.0023 - acc: 0.9995 - val_loss: 0.1904 - val_acc: 0.9700
Epoch 75/100 100/100 [==============================] - 33s - loss: 0.0137 - acc: 0.9955 - val_loss: 0.1363 - val_acc: 0.9810
Epoch 76/100 100/100 [==============================] - 33s - loss: 0.0055 - acc: 0.9990 - val_loss: 0.2119 - val_acc: 0.9710
Epoch 77/100 100/100 [==============================] - 33s - loss: 9.2985e-04 - acc: 1.0000 - val_loss: 0.1777 - val_acc: 0.9760
Epoch 78/100 100/100 [==============================] - 33s - loss: 0.0032 - acc: 0.9990 - val_loss: 0.2568 - val_acc: 0.9710
Epoch 79/100 100/100 [==============================] - 33s - loss: 0.0112 - acc: 0.9970 - val_loss: 0.2066 - val_acc: 0.9720
Epoch 80/100 100/100 [==============================] - 33s - loss: 0.0055 - acc: 0.9975 - val_loss: 0.1793 - val_acc: 0.9700
Epoch 81/100 100/100 [==============================] - 33s - loss: 0.0045 - acc: 0.9980 - val_loss: 0.2082 - val_acc: 0.9690
Epoch 82/100 100/100 [==============================] - 33s - loss: 0.0095 - acc: 0.9985 - val_loss: 0.1985 - val_acc: 0.9700
Epoch 83/100 100/100 [==============================] - 33s - loss: 0.0032 - acc: 0.9985 - val_loss: 0.1992 - val_acc: 0.9710
Epoch 84/100 100/100 [==============================] - 33s - loss: 0.0019 - acc: 0.9995 - val_loss: 0.2285 - val_acc: 0.9690
Epoch 85/100 100/100 [==============================] - 33s - loss: 0.0066 - acc: 0.9970 - val_loss: 0.1833 - val_acc: 0.9760
Epoch 86/100 100/100 [==============================] - 33s - loss: 0.0066 - acc: 0.9990 - val_loss: 0.2054 - val_acc: 0.9690
Epoch 87/100 100/100 [==============================] - 33s - loss: 0.0072 - acc: 0.9980 - val_loss: 0.2084 - val_acc: 0.9730
Epoch 88/100 100/100 [==============================] - 33s - loss: 0.0043 - acc: 0.9980 - val_loss: 0.1840 - val_acc: 0.9690
Epoch 89/100 100/100 [==============================] - 33s - loss: 0.0101 - acc: 0.9980 - val_loss: 0.1953 - val_acc: 0.9700
Epoch 90/100 100/100 [==============================] - 33s - loss: 0.0092 - acc: 0.9990 - val_loss: 0.1996 - val_acc: 0.9740
Epoch 91/100 100/100 [==============================] - 33s - loss: 0.0081 - acc: 0.9965 - val_loss: 0.2136 - val_acc: 0.9750
Epoch 92/100 100/100 [==============================] - 33s - loss: 0.0055 - acc: 0.9985 - val_loss: 0.1775 - val_acc: 0.9790
Epoch 93/100 100/100 [==============================] - 33s - loss: 3.4598e-04 - acc: 1.0000 - val_loss: 0.2102 - val_acc: 0.9760
Epoch 94/100 100/100 [==============================] - 33s - loss: 0.0109 - acc: 0.9985 - val_loss: 0.2081 - val_acc: 0.9760
Epoch 95/100 100/100 [==============================] - 33s - loss: 0.0028 - acc: 0.9985 - val_loss: 0.2033 - val_acc: 0.9720
Epoch 96/100 100/100 [==============================] - 33s - loss: 0.0074 - acc: 0.9990 - val_loss: 0.2118 - val_acc: 0.9750
Epoch 97/100 100/100 [==============================] - 33s - loss: 0.0034 - acc: 0.9990 - val_loss: 0.2621 - val_acc: 0.9740
Epoch 98/100 100/100 [==============================] - 33s - loss: 0.0054 - acc: 0.9975 - val_loss: 0.2589 - val_acc: 0.9650
Epoch 99/100 100/100 [==============================] - 33s - loss: 0.0060 - acc: 0.9990 - val_loss: 0.2242 - val_acc: 0.9700
Epoch 100/100 100/100 [==============================] - 33s - loss: 0.0010 - acc: 0.9995 - val_loss: 0.2403 - val_acc: 0.9750

model.save('cats_and_dogs_small_4.h5')

Let's plot our results using the same plotting code as before:

acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.legend()

plt.show()

These curves look very noisy. To make them more readable, we can smooth them by replacing every loss and accuracy with exponential moving averages of these quantities. Here's a trivial utility function to do this:

def smooth_curve(points, factor=0.8):
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous = smoothed_points[-1]
            smoothed_points.append(previous * factor + point * (1 - factor))
        else:
            smoothed_points.append(point)
    return smoothed_points
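
To see what the function above does on concrete numbers, here is a small standalone sketch. It repeats `smooth_curve` so that it runs on its own; the `raw` input and the `factor=0.5` value are made up for illustration and were chosen so the arithmetic is easy to follow by hand.

```python
def smooth_curve(points, factor=0.8):
    """Exponential moving average: each output mixes the previous output with the new point."""
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous = smoothed_points[-1]
            smoothed_points.append(previous * factor + point * (1 - factor))
        else:
            # The first point has no history, so it is kept unchanged.
            smoothed_points.append(point)
    return smoothed_points

# A sudden jump from 0 to 1 is spread over several steps.
raw = [0.0, 1.0, 1.0, 1.0]
smoothed = smooth_curve(raw, factor=0.5)
print(smoothed)  # [0.0, 0.5, 0.75, 0.875]
```

A larger `factor` gives more weight to the history and therefore a smoother, slower-reacting curve.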

plt.plot(epochs,
         smooth_curve(acc), 'bo', label='Smoothed training acc')
plt.plot(epochs,
         smooth_curve(val_acc), 'b', label='Smoothed validation acc')
plt.title('Training and validation accuracy')
plt.legend()

plt.figure()

plt.plot(epochs,
         smooth_curve(loss), 'bo', label='Smoothed training loss')
plt.plot(epochs,
         smooth_curve(val_loss), 'b', label='Smoothed validation loss')
plt.title('Training and validation loss')
plt.legend()

plt.show()

These curves look much cleaner and more stable. We are seeing a nice 1% absolute improvement in accuracy.

Note that the loss curve does not show any real improvement (in fact, it is deteriorating). You may wonder, how could accuracy improve if the loss isn't decreasing? The answer is simple: what we display is an average of pointwise loss values, but what actually matters for accuracy is the distribution of the loss values, not their average, since accuracy is the result of a binary thresholding of the class probability predicted by the model. The model may still be improving even if this isn't reflected in the average loss.
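
The point about averages versus thresholding can be checked on a toy example. The sketch below uses four made-up samples (the labels and predicted probabilities are invented for illustration, and `binary_crossentropy` and `accuracy` are small helper functions written here, not Keras calls). Between "before" and "after", two borderline predictions cross the 0.5 threshold in the right direction, while one prediction becomes confidently wrong and dominates the average loss.

```python
import math

def binary_crossentropy(labels, probs):
    """Mean binary cross-entropy over all samples."""
    losses = [-(y * math.log(p) + (1 - y) * math.log(1 - p))
              for y, p in zip(labels, probs)]
    return sum(losses) / len(losses)

def accuracy(labels, probs, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    correct = sum(int(p > threshold) == y for y, p in zip(labels, probs))
    return correct / len(labels)

labels = [1, 1, 0, 0]
probs_before = [0.90, 0.45, 0.10, 0.55]  # two samples sit on the wrong side of 0.5
probs_after = [0.99, 0.55, 0.45, 0.99]   # one sample is now confidently wrong

acc_before = accuracy(labels, probs_before)
acc_after = accuracy(labels, probs_after)
loss_before = binary_crossentropy(labels, probs_before)
loss_after = binary_crossentropy(labels, probs_after)

print('accuracy: %.2f -> %.2f' % (acc_before, acc_after))
print('mean loss: %.3f -> %.3f' % (loss_before, loss_after))
```

In this toy case accuracy rises from 0.50 to 0.75 while the mean loss roughly triples, because a single very confident mistake contributes a large loss value without changing more than one thresholded prediction.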

We can now finally evaluate this model on the test data:

test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')

test_loss, test_acc = model.evaluate_generator(test_generator, steps=50)
print('test acc:', test_acc)

Found 1000 images belonging to 2 classes.
test acc: 0.967999992371
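
A side note on the `steps=50` argument used above: with batches of 20 images and 1000 test images found, 50 steps correspond to one full pass over the test set. The arithmetic can be sketched as follows; `steps_to_cover` is a hypothetical helper introduced here for illustration, not part of Keras.

```python
import math

def steps_to_cover(num_samples, batch_size):
    """Number of generator batches needed to see every sample at least once."""
    return math.ceil(num_samples / batch_size)

# 1000 test images in batches of 20, as in the evaluation above.
test_steps = steps_to_cover(1000, 20)
print(test_steps)  # 50
```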

Here we get a test accuracy of about 97%. In the original Kaggle competition around this dataset, this would have been one of the top results. Yet, using modern deep learning techniques, we managed to reach this result using only a very small fraction of the training data available (about 10%). There is a huge difference between being able to train on 20,000 samples and being able to train on only 2,000 samples!

Take-aways: using convnets with small datasets

Here's what you should take away from the exercises of these past two sections:

- Convnets are the best type of machine learning models for computer vision tasks. It is possible to train one from scratch even on a very small dataset, with decent results.

- On a small dataset, overfitting will be the main issue. Data augmentation is a powerful way to fight overfitting when working with image data.

- It is easy to reuse an existing convnet on a new dataset, via feature extraction. This is a very valuable technique for working with small image datasets.

- As a complement to feature extraction, one may use fine-tuning, which adapts to a new problem some of the representations previously learned by an existing model. This pushes performance a bit further.

Now you have a solid set of tools for dealing with image classification problems, in particular with small datasets.