Run the code cell below with the ▶| button above to set up this notebook, or type SHIFT-ENTER:

from datascience import *

import pandas as pd

import geojson

import numpy as np

import matplotlib.pyplot as plt

import folium

from IPython.display import HTML, display, IFrame

from folium import plugins

%matplotlib inline

from scipy import stats

import ipywidgets as widgets

from soc_module import *

import warnings

warnings.filterwarnings('ignore')

from datetime import datetime

import re

Sociology 130 Module: "The Neighborhood Project"¶

Welcome to the data science part of your project! You have gathered data and entered it here from your assigned census tracts. Now it's time to explore our class data and quantify our observations using Python, a popular programming language used in data science.

You won't need any prior programming knowledge to do this! The purpose of this module is not to teach you programming, but rather to show you the power of these tools and give you the intuition for how they work. It also allows us to quickly produce summarizations of our data!

Table of Contents¶

Completing the Notebooks¶

QUESTION cells are in blue and ask you to answers questions or fill in code cells. To receive full credit for your assignment, you must complete all QUESTION cells.

Part 0: Introduction to Python and Jupyter Notebooks: ¶

1. Cells, Arithmetic, and Code¶

In a notebook, each rectangle containing text or code is called a cell.

Cells (like this one) can be edited by double-clicking on them. This cell is a text cell, written in a simple format called Markdown to add formatting and section headings. You don't need to worry about Markdown today, but it's a pretty fun+easy tool to learn.

After you edit a cell, click the "run cell" button at the top that looks like ▶| to confirm any changes. (Try not to delete the instructions.) You can also press SHIFT-ENTER to run any cell or progress from one cell to the next.

Other cells contain code in the Python programming language. Running a code cell will execute all of the code it contains.

Try running this cell:

print("Hello, World!")

We will now quickly go through some very basic functionality of Python, which we'll be using throughout the rest of this notebook.

1.1 Arithmetic¶

Quantitative information arises everywhere in data science. In addition to representing commands to print out lines, expressions can represent numbers and methods of combining numbers.

The expression 3.2500 evaluates to the number 3.25. (Run the cell and see.)

3.2500

3.25

We don't necessarily always need to say "print", because Jupyter always prints the last line in a code cell. If you want to print more than one line, though, do specify "print".

print(3)

4

5

3

5

Many basic arithmetic operations are built in to Python, like * (multiplication), + (addition), - (subtraction), and / (division). There are many others, which you can find information about here. Use parenthesis to specify the order of operations, which act according to PEMDAS, just as you may have learned in school. Use parentheses for a happy new year!

2 + (6 * 5 - (6 * 3)) ** 2 * (( 2 ** 3 ) / 4 * 7)

2018.0

1.2 Variables¶

We sometimes want to work with the result of some computation more than once. To be able to do that without repeating code everywhere we want to use it, we can store it in a variable with assignment statements, which have the variable name on the left, an equals sign, and the expression to be evaluated and stored on the right. In the cell below, (3 * 11 + 5) / 2 - 9 evaluates to 10, and gets stored in the variable result.

result = (3 * 11 + 5) / 2 - 9

result

10.0

2. Functions¶

One important form of an expression is the call expression, which first names a function and then describes its arguments. The function returns some value, based on its arguments. Some important mathematical functions are:

| Function | Description |

|---|---|

abs |

Returns the absolute value of its argument |

max |

Returns the maximum of all its arguments |

min |

Returns the minimum of all its arguments |

round |

Round its argument to the nearest integer |

Here are two call expressions that both evaluate to 3

abs(2 - 5)

max(round(2.8), min(pow(2, 10), -1 * pow(2, 10)))

These function calls first evaluate the expressions in the arguments (inside the parentheses), then evaluate the function on the results. abs(2-5) evaluates first to abs(3), then returns 3.

A statement is a whole line of code. Some statements are just expressions, like the examples above, that can be broken down into its subexpressions which get evaluated individually before evaluating the statement as a whole.

2.1 Calling functions¶

The most common way to combine or manipulate values in Python is by calling functions. Python comes with many built-in functions that perform common operations.

For example, the abs function takes a single number as its argument and returns the absolute value of that number. The absolute value of a number is its distance from 0 on the number line, so abs(5) is 5 and abs(-5) is also 5.

abs(5)

5

abs(-5)

5

Functions can be called as above, putting the argument in parentheses at the end, or by using "dot notation", and calling the function after finding the arguments, as in the cell immediately below.

nums = [1, 2, 5] # a list of items, in this case, numbers

nums.reverse() # reverses the item order

nums

[5, 2, 1]

Part 1: Class Data¶

We can read in the data you submitted through the form by asking Google for the form information and turning it into a table.

image_data = Table.read_table("data/image_data.csv")

class_data = Table.read_table("data/class_data.csv")

image_data["Census Tract"] = [str(i) for i in image_data["Census Tract"]]

class_data["Census Tract"] = [str(i) for i in class_data["Census Tract"]]

sub = lambda s: re.sub(r"\.0$", "", s)

image_data["Census Tract"] = image_data.apply(sub, "Census Tract")

class_data["Census Tract"] = class_data.apply(sub, "Census Tract")

image_data.show(5)

class_data.show(5)

That's a lot of columns! Now that our data is inside the class_data variable, we can ask that varible for some information. We can get a list of the column names with the .labels attribute of the table:

class_data.labels

('Timestamp',

'Census Tract',

'Amount of Beer/Liquor Bottles (1 to 5 scale)',

'Amount of Cigarette/Cigar Butts (1 to 5 scale)',

'Amount of Condoms (1 to 5 scale)',

'Amount of Garbage (1 to 5 scale)',

'Abandoned Cars (Y?N)',

'Treatment (1 (Friendly) to 5 (Suspicion) scale)',

'Condition of Buildings (1 (Good) to 4 (Poor) scale)',

'Graffiti (Y/N)',

'Fenching (1 (None) to 4 (High mesh) scale)',

'Renovations (Y/N)',

'Types of Establishments',

'Amount of Trees Linked the Block Fence (1 (Few) to 3 (Most) scale)',

'Public Transporation Available (Y/N)',

'Private Security Visible (Y/N)',

'Police Visible (Y/N)')

Let's get some summary statistics and do some plotting.

How many of you reported on which census tracts?

class_data.group("Census Tract").show()

We can use the .plot.barh() method to this to visualize the counts:

class_data.group("Census Tract").barh("Census Tract")

We can write a short function, bar_chart_column, to plot the counts for any of our columns in the table. All we have to do is select the column label in the dropdown.

def bar_chart_column(col):

bar_chart_data.group(col).barh(col)

bar_chart_data = class_data.drop("What kinds of establishments are there on the block face? Select all that apply.")

dropdown = widgets.Dropdown(options=bar_chart_data.labels[1:], description="Column")

display(widgets.interactive(bar_chart_column, col=dropdown))

interactive(children=(Dropdown(description='Column', options=('Census Tract', 'Amount of Beer/Liquor Bottles (…

We can then ask for these columns and plot their means too.

class_data.to_df().iloc[:,2:].mean().plot.barh();

One of the questions had checkbox answers that listed all of the establishments that were observed by each student in their assigned census tract. Let's create a seperate column for each of the possible options. A value of 1 in the column indicates that the estalishment was observed. A value of 0 indicates that the establishment was not observed.

establishments = pd.Series([item for sublist in [response.split(', ') for response in class_data.to_df().iloc[:, 12] if not pd.isnull(response)] for item in sublist])

ests_table = Table.empty().with_column("Types of Establishments", class_data["Types of Establishments"])

for establishment in establishments.unique():

establishment_data = []

for row in class_data.rows:

ests = row.item('Types of Establishments')

if not pd.isnull(ests):

row_establishments = ests.split(', ')

if establishment in row_establishments:

establishment_data.append(1)

else:

establishment_data.append(0)

ests_table[establishment] = establishment_data

ests_table.show(5)

col_sums = []

for col in ests_table.drop(0).labels:

col_sums.append(sum(ests_table[col]))

establishment_counts = pd.Series(col_sums, index = ests_table.drop(0).labels)

establishment_counts = establishment_counts.drop("N/A")

establishment_counts.plot.barh()

plt.title(ests_table.labels[0])

plt.show()

Mapping¶

We can also visualize how your responses mapped out over the census tracts. We'll use a library called folium to map your observations onto a map of the census tracts, and include popups with your comments and photos.

oak = geojson.load(open("CAOakland1937.geojson"))

oak['features'][10]['properties']

{'name': None,

'holc_id': 'A8',

'holc_grade': 'A',

'area_description_data': {'1': 'Piedmont, California High Green A-8',

'2': 'Characterized by steep sloped and rolling hills, which lend themselves to view home locations and landscaping. Streets platted to minimize grades.',

'3': 'New and modern mansions, set on large homesites. Zoned to single-family homes; high restricted; contains highest priced new homes in East Bay area and is considered the very best district.',

'4': 'Distance to local and San Francisco transportation, shopping centers and schools.',

'7': '15000 - 50000 22500 125 - 300 200 1933-34 10000 - 25000 15000 69% 90 - 200 135 67.50% 1937 15000 - 35000 20000 91% 125 - 250 175 87.50% 1927 102% 1927 100%',

'13': 'upward',

'14': 'District known as Upper Piedmont Estates and St. James Wood, highly restricted - all modern. District can hardly become any better, but desirability could be increased by improved transportation and building a local shool and providing more adequate shopping facilities.',

'15': 'R.H. Barrett, Building Inspector, City of Piedmont; Ralph E. Prentice; Zura E. Bells 6/15 7',

'10a': 'fair',

'10b': 'mansion - 175',

'10c': 'slow',

'11a': 'Mansions average 19,000 including lot, etc',

'11b': '27 in 1936',

'12a': 'ample',

'12b': 'ample',

'5a': 'Business executives and professional men',

'5b': '6000 - 100000',

'5c': 'no concentration N/A',

'5d': 'none N/A',

'5e': 'lower grades - no',

'5f': 'none',

'5g': 'moderately ',

'6a': 'mansions',

'6b': 'frame, stucco, masonry',

'6c': '5 (new to 10) years',

'6d': 'excellent',

'8a': '30%',

'8b': '100%',

'8c': '95%',

'9a': 'good',

'9b': 'mansion - 17,500',

'9c': 'fair'}}

import geojson

alameda = geojson.load(open("data/alameda-2010.geojson"))

myMap = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4)

map_data(myMap, alameda, image_data).save("maps/map1.html")

IFrame('maps/map1.html', width=700, height=400)

--------------------------------------------------------------------------- NameError Traceback (most recent call last) <ipython-input-1-7f2c0ecb7d3e> in <module> 1 import geojson 2 alameda = geojson.load(open("data/alameda-2010.geojson")) ----> 3 myMap = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4) 4 5 map_data(myMap, alameda, image_data).save("maps/map1.html") NameError: name 'folium' is not defined

Click around census tracts near yours to see if the other students' observations are similar and see if you can eyeball any trends. Check out other areas on the map and see if there are trends for tracts in specific areas.

QUESTION: Do specific characteristics cluster in different areas? Which ones? Which characteristsics seem to cluster together? What types of data do you think will correlate with socioeconomic characteristics like median income, poverty rate, education? Why?

Type your answer here, replacing this text.

Part 2: Our Metrics¶

Now that you have made some predictions, we can compare our data with socioeconomic data from the U.S. Census for the different tracts we visited and see if we can find evidence to support them. From your data, we can create some point scales that measure different aspects of a neighborhood.

For example, we can make a scale called “social disorder” for the first part of your responses. Let's first subeset our data:

social_disorder = class_data.select(range(1, 12))

social_disorder.show(5)

Now we'll need to scale the values because all responses were not on the same scale. But for this part, the higher the value the more negative the social disorder was:

social_disorder = scale_values(social_disorder, np.arange(1, 11))

social_disorder.show(5)

Now that our values are scaled, we can take the mean across all observation for a given census tract for a given column, and then take the mean across columns:

class_data.show(5)

| Timestamp | Census Tract | Amount of Beer/Liquor Bottles (1 to 5 scale) | Amount of Cigarette/Cigar Butts (1 to 5 scale) | Amount of Condoms (1 to 5 scale) | Amount of Garbage (1 to 5 scale) | Abandoned Cars (Y?N) | Treatment (1 (Friendly) to 5 (Suspicion) scale) | Condition of Buildings (1 (Good) to 4 (Poor) scale) | Graffiti (Y/N) | Fenching (1 (None) to 4 (High mesh) scale) | Renovations (Y/N) | Types of Establishments | Amount of Trees Linked the Block Fence (1 (Few) to 3 (Most) scale) | Public Transporation Available (Y/N) | Private Security Visible (Y/N) | Police Visible (Y/N) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 10/21/2018 22:10:14 | 4229 | 2 | 2 | 1 | 2 | 0 | 4 | 2 | 1 | 2 | 1 | Eating places/restaurants, Professional offices (doctor ... | 2 | 1 | 0 | 0 |

| 10/21/2018 22:23:08 | 4006 | 1 | 1 | 1 | 2 | 0 | 4 | 2 | 1 | 2 | 0 | Liquor stores or Marijuana Dispensaries | 2 | 1 | 0 | 1 |

| 10/22/2018 0:01:58 | 4224 | 3 | 2 | 1 | 3 | 1 | 3 | 2 | 0 | 3 | 1 | Bars and alcoholic beverage services, Eating places/rest ... | 2 | 1 | 0 | 0 |

| 10/22/2018 0:08:49 | 4031 | 1 | 3 | 1 | 3 | 0 | 2 | 2 | 1 | 1 | 0 | Bodega deli corner-store convenience store, Eating place ... | 1 | 0 | 0 | 0 |

| 10/22/2018 0:09:49 | 4239.01 | 1 | 1 | 1 | 3 | 0 | 2 | 2 | 0 | 1 | 1 | Bars and alcoholic beverage services, Professional offic ... | 2 | 1 | 1 | 0 |

... (134 rows omitted)

means = social_disorder.group("Census Tract", np.mean).drop("Census Tract").values.mean(axis=1)

social_disorder = Table().with_columns(

"Census Tract", np.unique(social_disorder.column("Census Tract")),

"Social Disorder", means

)

social_disorder

| Census Tract | Social Disorder |

|---|---|

| 4005 | 0.328333 |

| 4006 | 0.234722 |

| 4007 | 0.340476 |

| 401 | 0.347222 |

| 4014 | 0.465476 |

| 4027 | 0.406944 |

| 4028 | 0.263333 |

| 403 | 0.306667 |

| 4031 | 0.286111 |

| 4033 | 0.323611 |

... (14 rows omitted)

Remember, the higher the value the more negative we perceived the census tract to be.

We can do the same for our amenities part:

amenities = ests_table.with_columns(

"Census Tract", class_data.column("Census Tract"),

"Trees", class_data.column("Amount of Trees Linked the Block Fence (1 (Few) to 3 (Most) scale)")

)

amenities["Trees"] = [(0, 1)[value > 1] for value in amenities["Trees"]]

amenities.show(5)

Certain amenities are positive and indicate desirable conditions in a neighborhood. These characteristics include things like School or Daycares, and supermarkets. Let's create a table containing only positive amenities

positive_amenities = amenities.select(

'Census Tract',

'Banks or credit unions',

'Chain retail stores',

'Community center',

'Eating places/restaurants',

'Fire station',

'Parks',

'Playgrounds',

'Public library',

'Post office',

'Professional offices (doctor dentist lawyer accountant real estate)',

'Schools or daycare centers',

'Supermarkets/grocery stores',

'Trees'

)

positive_amenities.show(5)

To make positive amenities comparable between census tracts, we can find the mean of positive amenities for each census tract. A higher value indicates a more positive census tract

means = positive_amenities.group("Census Tract", np.mean).drop("Census Tract").values.mean(axis=1)

positive_amenities = Table().with_columns(

"Census Tract", np.unique(positive_amenities.column("Census Tract")),

"Positive Amenities", means

)

positive_amenities

| Census Tract | Positive Amenities |

|---|---|

| 4005 | 0.107692 |

| 4006 | 0.141026 |

| 4007 | 0.120879 |

| 401 | 0.0769231 |

| 4014 | 0.131868 |

| 4027 | 0.141026 |

| 4028 | 0.138462 |

| 403 | 0.338462 |

| 4031 | 0.192308 |

| 4033 | 0.25641 |

... (14 rows omitted)

Certain amenities are negative and indicate undesirable conditions in a neighborhood. These characteristics include things like Bars or Fast Food Restaurants. Let's create a Data Frame with only negative amenities

negative_amenities = amenities.select(

'Census Tract',

'Auto repair/auto body shop',

'Bars and alcoholic beverage services',

'Bodega deli corner-store convenience store',

'Fast food or take-out places',

'Gas station',

'Liquor stores or Marijuana Dispensaries',

'Manufacturing' ,

'Payday lenders check cashers or pawn shops',

'Warehouses'

)

negative_amenities.show(5)

To make negative amenities comparable between census tracts, we can find the mean of negative amenities for each census tract. A higher value indicates a more negative census tract

means = negative_amenities.group("Census Tract", np.mean).drop("Census Tract").values.mean(axis=1)

negative_amenities = Table().with_columns(

"Census Tract", np.unique(negative_amenities.column("Census Tract")),

"Negative Amenities", means

)

negative_amenities

| Census Tract | Negative Amenities |

|---|---|

| 4005 | 0.0222222 |

| 4006 | 0.0555556 |

| 4007 | 0.111111 |

| 401 | 0.0740741 |

| 4014 | 0.0793651 |

| 4027 | 0.185185 |

| 4028 | 0.0666667 |

| 403 | 0.0444444 |

| 4031 | 0.111111 |

| 4033 | 0.12963 |

... (14 rows omitted)

official_data = Table.read_table("data/merged-census.csv")

official_data["Census Tract"] = [str(i) for i in official_data["Census Tract"]]

official_data["Census Tract"] = official_data.apply(sub, "Census Tract")

official_data

| Census Tract | Unemployment % | Household Median Income | Bachelor's Degree or higher % | % families with income under poverty line | % families with public assistance income | median house value | % white | % black | % asian | % hispanic/latino | % foreign born | % single mother family |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4005 | 6.5 | 76038 | 61.9 | 6.1 | 4.5 | 611300 | 51.7 | 24.3 | 4.8 | 14.2 | 16.8 | 27 |

| 4006 | 14.9 | 60804 | 55.3 | 3 | 3.6 | 466700 | 34.8 | 38.7 | 6.5 | 12.3 | 13 | 25 |

| 4007 | 10.5 | 39614 | 40.9 | 14.5 | 1.2 | 572300 | 35.7 | 40.3 | 2.9 | 13 | 11 | 40 |

| 4010 | 9.2 | 44766 | 33.2 | 21.3 | 7.1 | 358100 | 31.4 | 38.5 | 4.3 | 19.6 | 12.2 | 41 |

| 4014 | 16.2 | 28532 | 18.6 | 34.6 | 11.8 | 333700 | 13.8 | 45.1 | 10.9 | 23.6 | 23.4 | 36.8 |

| 4027 | 8.3 | 40169 | 28.9 | 36.6 | 13.4 | 364300 | 27.3 | 45.7 | 8.2 | 16.4 | 11 | 41.8 |

| 4028 | 14.9 | 17278 | 33.8 | 27.1 | 6.3 | 517600 | 30.8 | 40.7 | 15.9 | 8 | 29 | 27 |

| 4030 | 12.6 | 17609 | 24.6 | 14.3 | 1.1 | 344700 | 6.8 | 2.4 | 85.2 | 2.5 | 68.8 | 17.8 |

| 4031 | 7 | 59250 | 38.5 | 25.4 | 0.9 | 386000 | 25.1 | 17.5 | 38.1 | 13.4 | 36.9 | 11.8 |

| 4033 | 8.9 | 57064 | 46.5 | 19.9 | 4.7 | 338300 | 26.2 | 9 | 54.9 | 4.7 | 52.5 | 24.1 |

... (14 rows omitted)

We can add our columns to this table to put it all in one place:

joined_data = (official_data

.join("Census Tract", social_disorder)

.join("Census Tract", positive_amenities)

.join("Census Tract", negative_amenities)

)

joined_data

| Census Tract | Unemployment % | Household Median Income | Bachelor's Degree or higher % | % families with income under poverty line | % families with public assistance income | median house value | % white | % black | % asian | % hispanic/latino | % foreign born | % single mother family | Social Disorder | Positive Amenities | Negative Amenities |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4005 | 6.5 | 76038 | 61.9 | 6.1 | 4.5 | 611300 | 51.7 | 24.3 | 4.8 | 14.2 | 16.8 | 27 | 0.328333 | 0.107692 | 0.0222222 |

| 4006 | 14.9 | 60804 | 55.3 | 3 | 3.6 | 466700 | 34.8 | 38.7 | 6.5 | 12.3 | 13 | 25 | 0.234722 | 0.141026 | 0.0555556 |

| 4007 | 10.5 | 39614 | 40.9 | 14.5 | 1.2 | 572300 | 35.7 | 40.3 | 2.9 | 13 | 11 | 40 | 0.340476 | 0.120879 | 0.111111 |

| 4014 | 16.2 | 28532 | 18.6 | 34.6 | 11.8 | 333700 | 13.8 | 45.1 | 10.9 | 23.6 | 23.4 | 36.8 | 0.465476 | 0.131868 | 0.0793651 |

| 4027 | 8.3 | 40169 | 28.9 | 36.6 | 13.4 | 364300 | 27.3 | 45.7 | 8.2 | 16.4 | 11 | 41.8 | 0.406944 | 0.141026 | 0.185185 |

| 4028 | 14.9 | 17278 | 33.8 | 27.1 | 6.3 | 517600 | 30.8 | 40.7 | 15.9 | 8 | 29 | 27 | 0.263333 | 0.138462 | 0.0666667 |

| 4031 | 7 | 59250 | 38.5 | 25.4 | 0.9 | 386000 | 25.1 | 17.5 | 38.1 | 13.4 | 36.9 | 11.8 | 0.286111 | 0.192308 | 0.111111 |

| 4033 | 8.9 | 57064 | 46.5 | 19.9 | 4.7 | 338300 | 26.2 | 9 | 54.9 | 4.7 | 52.5 | 24.1 | 0.323611 | 0.25641 | 0.12963 |

| 4201 | 8 | 117083 | 75.9 | 2 | 0 | 806800 | 66 | 2.9 | 15.2 | 9.3 | 19.1 | 18.5 | 0.147619 | 0.175824 | 0 |

| 4202 | 8.9 | 82206 | 69.4 | 7.6 | 1 | 714600 | 44 | 7.8 | 25.4 | 13.7 | 26.1 | 25.9 | 0.117857 | 0.186813 | 0.0634921 |

... (12 rows omitted)

# add zeros for all features in tracts for which we did not collect data

unobserved_tracts = [str(alameda["features"][x]['properties']['name10']) for x in range(len(alameda["features"])) \

if alameda["features"][x]['properties']['name10'] not in list(joined_data["Census Tract"])]

unobserved_data = Table().with_column("Census Tract", unobserved_tracts)

for col in joined_data.labels[1:]:

unobserved_data[col] = np.zeros(len(unobserved_tracts))

joined_data = joined_data.append(unobserved_data)

joined_data.show(5)

Mapping Exploration¶

Before we quantify the relationship between the census data and our own metrics, let's do some exploratory mapping. We can now add our social disorder and amenities metrics to the popup too!

First we'll map a choropleth of unemployment:

map2 = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4)

folium.features.Choropleth(geo_data=alameda,

name='unemployment',

data=joined_data.to_df(),

columns=['Census Tract', 'Unemployment %'],

key_on='feature.properties.name10',

fill_color='BuPu',

fill_opacity=0.7,

line_opacity=0.2,

legend_name='Unemployment Rate (%)'

).add_to(map2)

folium.LayerControl().add_to(map2)

map2.save("maps/map2.html")

IFrame('maps/map2.html', width=700, height=400)

Household Median Income:

map3 = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4)

folium.Choropleth(geo_data=alameda,

name='household median income',

data=joined_data.to_df(),

columns=['Census Tract', 'Household Median Income'],

key_on='feature.properties.name10',

fill_color='BuPu',

fill_opacity=0.7,

line_opacity=0.2,

legend_name='Household Median Income'

).add_to(map3)

map3.save("maps/map3.html")

IFrame('maps/map3.html', width=700, height=400)

Bachelor's Degree or higher %:

map10 = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4)

folium.Choropleth(geo_data=alameda,

name='>= bachelors %"',

data=joined_data.to_df(),

columns=['Census Tract', "Bachelor's Degree or higher %"],

key_on='feature.properties.name10',

fill_color='BuPu',

fill_opacity=0.7,

line_opacity=0.2,

legend_name='Bachelor's Degree or higher %'

).add_to(map10)

map10.save("maps/map10.html")

IFrame('maps/map10.html', width=700, height=400)

File "<ipython-input-35-7baacbe61304>", line 10 legend_name='Bachelor's Degree or higher %' ^ SyntaxError: invalid syntax

Now our "social disorder":

map5 = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4)

folium.Choropleth(geo_data=alameda,

name='social disorder',

data=joined_data.to_df(),

columns=['Census Tract', "Social Disorder"],

key_on='feature.properties.name10',

fill_color='BuPu',

fill_opacity=0.7,

line_opacity=0.2,

legend_name="Social Disorder"

).add_to(map5)

map5.save("maps/map5.html")

IFrame('maps/map5.html', width=700, height=400)

Now "Positive Amenities":

map6 = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4)

folium.Choropleth(geo_data=alameda,

name='positive amenities',

data=joined_data.to_df(),

columns=['Census Tract', "Positive Amenities"],

key_on='feature.properties.name10',

fill_color='BuPu',

fill_opacity=0.7,

line_opacity=0.2,

legend_name="Positive Amenities"

).add_to(map6)

map6.save("maps/map6.html")

IFrame('maps/map6.html', width=700, height=400)

Finally, "Negative Amenities"

map7 = folium.Map(location=(37.8044, -122.2711), zoom_start=11.4)

folium.Choropleth(geo_data=alameda,

name='negative amenities',

data=joined_data.to_df(),

columns=['Census Tract', "Negative Amenities"],

key_on='feature.properties.name10',

fill_color='BuPu',

fill_opacity=0.7,

line_opacity=0.2,

legend_name="Negative Amenities"

).add_to(map7)

map7.save("maps/map7.html")

IFrame('maps/map7.html', width=700, height=400)

QUESTION: What do you notice?

Type your answer here, replacing this text.

QUESTION: Try copying and pasting one of the mapping cells above and change the column_name variable to a different variable (column in our data) you'd like to map, then run the cell!

...

Variable Distributions¶

We can also visualize the distributions of these variables according to census tract with histograms. A histogram will create bins, or ranges, within a variable and then count up the frequency for that bin. If we look at household median income, we may have bins of $10,000, and then we'd count how many tracts fall within that bin:

def viz_dist(var_name, tract):

x = joined_data.where(var_name, lambda x: not pd.isna(x))[var_name]

plt.hist(x)

plt.axvline(x=joined_data.where("Census Tract", tract)[var_name], color = "RED")

plt.xlabel(var_name, fontsize=18)

plt.show()

display(widgets.interactive(viz_dist, var_name=list(joined_data.labels[1:]),

tract=list(joined_data["Census Tract"])))

interactive(children=(Dropdown(description='var_name', options=('Unemployment %', 'Household Median Income', "…

QUESTION: What do these distributions tell you?

Type your answer here, replacing this text.

Part 4: Correlation¶

Let's first analyze income levels. We have sorted the data according to income level. Compare the income levels to the level of social disorder and amenities. Is there a correlation you can spot(as one increases or decreases, does the other do the same)?

(joined_data

.sort("Household Median Income", descending=True)

.select("Household Median Income", "Social Disorder", "Positive Amenities", "Negative Amenities")

).show()

| Household Median Income | Social Disorder | Positive Amenities | Negative Amenities |

|---|---|---|---|

| 150673 | 0.127778 | 0.166667 | 0.0185185 |

| 135556 | 0.143333 | 0.2 | 0.0666667 |

| 132250 | 0.134722 | 0.102564 | 0 |

| 117083 | 0.147619 | 0.175824 | 0 |

| 107875 | 0.0883333 | 0.184615 | 0 |

| 85119 | 0.181944 | 0.102564 | 0 |

| 82206 | 0.117857 | 0.186813 | 0.0634921 |

| 80404 | 0.193056 | 0.24359 | 0 |

| 76038 | 0.328333 | 0.107692 | 0.0222222 |

| 68190 | 0.163889 | 0.24359 | 0.037037 |

| 61543 | 0.236111 | 0.102564 | 0.111111 |

| 60804 | 0.234722 | 0.141026 | 0.0555556 |

| 59250 | 0.286111 | 0.192308 | 0.111111 |

| 57064 | 0.323611 | 0.25641 | 0.12963 |

| 54554 | 0.241667 | 0.128205 | 0.111111 |

| 42813 | 0.294444 | 0.141026 | 0.0925926 |

| 40169 | 0.406944 | 0.141026 | 0.185185 |

| 39614 | 0.340476 | 0.120879 | 0.111111 |

| 38689 | 0.180556 | 0.230769 | 0.0740741 |

| 28532 | 0.465476 | 0.131868 | 0.0793651 |

| 24702 | 0.22 | 0.353846 | 0.0888889 |

| 17278 | 0.263333 | 0.138462 | 0.0666667 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

QUESTION: Did you look at the whole table? A common mistake is to assume that since the top 10 results follow or do not follow a pattern, the rest don't. Real life data is often messy and not clean. Does the correlation continue throughout the whole table (a.k.a. as income decreases the points decrease) or is there no pattern? What does this mean about the data?

Type your answer here, replacing this text.

Eyeballing patterns is not the same as a statisical measure of a correlation; you must quantify it with numbers and statistics to prove your thoughts. Looking at the tables is not a very statistical measure of how much a variable correlates to the results. What does it mean for a variable "income" to match 7 out of the top 15 social disorder points? Does this correlate to the rest of the results? How well does it correlate?

The correlation coefficient - r¶

The correlation coefficient ranges from −1 to 1. A value of 1 implies that a linear equation describes the relationship between X and Y perfectly, with all data points lying on a line for which Y increases as X increases. A value of −1 implies that all data points lie on a line for which Y decreases as X increases. A value of 0 implies that there is no linear correlation between the variables. ~Wikipedia

r = 1: the scatter diagram is a perfect straight line sloping upwards

r = -1: the scatter diagram is a perfect straight line sloping downwards.

Let's calculate the correlation coefficient between acceleration and price. We can use the corr method of a DataFrame to generate a correlation matrix of our joined_data:

joined_data.to_df().corr()

| Unemployment % | Household Median Income | Bachelor's Degree or higher % | % families with income under poverty line | % families with public assistance income | median house value | % white | % black | % asian | % hispanic/latino | % foreign born | % single mother family | Social Disorder | Positive Amenities | Negative Amenities | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Unemployment % | 1.000000 | 0.700739 | 0.784148 | 0.775636 | 0.732080 | 0.805079 | 0.765777 | 0.838210 | 0.690257 | 0.886376 | 0.795158 | 0.881062 | 0.929291 | 0.848802 | 0.774060 |

| Household Median Income | 0.700739 | 1.000000 | 0.929715 | 0.383005 | 0.389389 | 0.961108 | 0.961430 | 0.385088 | 0.656285 | 0.712375 | 0.710773 | 0.572139 | 0.685744 | 0.807641 | 0.506965 |

| Bachelor's Degree or higher % | 0.784148 | 0.929715 | 1.000000 | 0.510205 | 0.397591 | 0.987622 | 0.971554 | 0.440496 | 0.790926 | 0.817890 | 0.852773 | 0.714922 | 0.773227 | 0.910293 | 0.640537 |

| % families with income under poverty line | 0.775636 | 0.383005 | 0.510205 | 1.000000 | 0.824744 | 0.474612 | 0.438053 | 0.807182 | 0.679585 | 0.785381 | 0.741619 | 0.803566 | 0.854489 | 0.684657 | 0.853013 |

| % families with public assistance income | 0.732080 | 0.389389 | 0.397591 | 0.824744 | 1.000000 | 0.451997 | 0.409923 | 0.849438 | 0.385200 | 0.702095 | 0.485612 | 0.696483 | 0.792024 | 0.507993 | 0.694109 |

| median house value | 0.805079 | 0.961108 | 0.987622 | 0.474612 | 0.451997 | 1.000000 | 0.989597 | 0.501397 | 0.713478 | 0.828056 | 0.819313 | 0.725739 | 0.781957 | 0.911037 | 0.615599 |

| % white | 0.765777 | 0.961430 | 0.971554 | 0.438053 | 0.409923 | 0.989597 | 1.000000 | 0.441412 | 0.675897 | 0.769063 | 0.747883 | 0.676095 | 0.748241 | 0.864371 | 0.598161 |

| % black | 0.838210 | 0.385088 | 0.440496 | 0.807182 | 0.849438 | 0.501397 | 0.441412 | 1.000000 | 0.376141 | 0.734989 | 0.490633 | 0.804184 | 0.847861 | 0.542204 | 0.742061 |

| % asian | 0.690257 | 0.656285 | 0.790926 | 0.679585 | 0.385200 | 0.713478 | 0.675897 | 0.376141 | 1.000000 | 0.685816 | 0.958804 | 0.691218 | 0.729291 | 0.873920 | 0.698931 |

| % hispanic/latino | 0.886376 | 0.712375 | 0.817890 | 0.785381 | 0.702095 | 0.828056 | 0.769063 | 0.734989 | 0.685816 | 1.000000 | 0.808608 | 0.819081 | 0.910144 | 0.810930 | 0.770506 |

| % foreign born | 0.795158 | 0.710773 | 0.852773 | 0.741619 | 0.485612 | 0.819313 | 0.747883 | 0.490633 | 0.958804 | 0.808608 | 1.000000 | 0.742693 | 0.810353 | 0.916947 | 0.728098 |

| % single mother family | 0.881062 | 0.572139 | 0.714922 | 0.803566 | 0.696483 | 0.725739 | 0.676095 | 0.804184 | 0.691218 | 0.819081 | 0.742693 | 1.000000 | 0.913289 | 0.832326 | 0.843604 |

| Social Disorder | 0.929291 | 0.685744 | 0.773227 | 0.854489 | 0.792024 | 0.781957 | 0.748241 | 0.847861 | 0.729291 | 0.910144 | 0.810353 | 0.913289 | 1.000000 | 0.831663 | 0.879846 |

| Positive Amenities | 0.848802 | 0.807641 | 0.910293 | 0.684657 | 0.507993 | 0.911037 | 0.864371 | 0.542204 | 0.873920 | 0.810930 | 0.916947 | 0.832326 | 0.831663 | 1.000000 | 0.743870 |

| Negative Amenities | 0.774060 | 0.506965 | 0.640537 | 0.853013 | 0.694109 | 0.615599 | 0.598161 | 0.742061 | 0.698931 | 0.770506 | 0.728098 | 0.843604 | 0.879846 | 0.743870 | 1.000000 |

You'll notice that the matrix is mirrored with a 1.000000 going down the diagonal. This matrix yields the correlation coefficient for each variable to every other variable in our data.

For example, if we look at the Social Disorder, we see that there is a 0.911115 correlation, implying that there is a strong positive relationship between our constructed social disorder variable and the unemployment rate (i.e., as one goes up the other goes up too).

QUESTION: What else do you notice about the correlation values above?

Type your answere here, replacing this text.

Part 5: Regression¶

We will now use a method called linear regression to make a graph that will show the best fit line that correlates to the data. The slope of the line will show whether it is positively correlated or negatively correlated. The code that we've created so far has helped us establish a relationship between our two variables. Once a relationship has been established, it's time to create a model of the data. To do this we'll find the equation of the regression line!

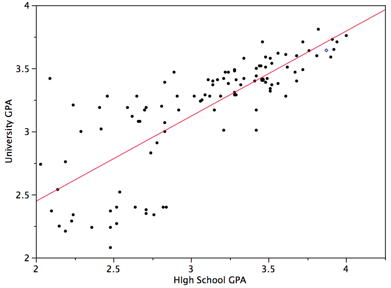

The regression line is the best fit line for our data. It’s like an average of where all the points line up. In linear regression, the regression line is a perfectly straight line! Below is a picture showing the best fit line.

As you can infer from the picture, once we find the slope and the y-intercept we can start predicting values! The equation for the above regression to predict university GPA based on high school GPA would look like this:

$\text{UNIGPA}_i= \alpha + \beta \cdot \text{HSGPA} + \epsilon_i$

The variable we want to predict (or model) is the left side y variable, the variable which we think has an influence on our left side variable is on the right side. The $\alpha$ term is the y-intercept and the $\epsilon_i$ describes the randomness.

We can set up a visualization to choose which variables we want as x and y and then plot the line of best fit:

def f(x_variable, y_variable):

if "median house value" in [x_variable, y_variable]:

drop_na = joined_data.to_df().dropna() # if not all census tracts have measure

x = drop_na[x_variable]

y = drop_na[y_variable]

else:

x = joined_data[x_variable]

y = joined_data[y_variable]

plt.scatter(x, y)

plt.xlabel(x_variable, fontsize=18)

plt.ylabel(y_variable, fontsize=18)

plt.plot(np.unique(x), np.poly1d(np.polyfit(x, y, 1))(np.unique(x)), color="r") #calculate line of best fit

plt.show()

slope, intercept, r_value, p_value, std_err = stats.linregress(x, y) #gets the r_value

print("R-squared: ", r_value**2)

display(widgets.interactive(f, x_variable=list(joined_data)[1:], y_variable=list(joined_data)[1:]))

*Note:* The R-squared tells us how much of the variation in the data can be explained by our model.

Why is this a better method than just sorting tables? First of all, we are now comparing all of the data in the graph to the variable, rather than comparing what our eyes glance quickly over. It shows a more complete picture than just saying "There are some similar results in the top half of the sorted data". Second of all, the graph gives a more intuitive sense to see if your variable does match the data. You can quickly see if the data points match up with the regression line. Lastly, the r-squared value will give you a way to quantify how good the variable is to explain the data.

One of the beautiful things about computer science and statistics is that you do not need to reinvent the wheel. You don't need to know how to calculate the R-squared value, or draw the regression line; someone has already implemented it! You simply need to tell the computer to calculate it. However, if you are interested in these mathematical models, take a data science or statistics course!

Peer Consulting Office Hours¶

Not quite understand everything covered in this notebook? Curious about concepts covered in this lab at a deeper level? Looking for more data enabled courses with modules like this? Come to Peer Consulting Office Hours at 1st Floor Moffitt! Find a Peer Consultant with the expertise you need and get your questions answered: Office Hours Schedule is linked here!

We Want Your Feedback!¶

Help us make your module experience better in future courses: *Please fill out our short feedback form!*

Fall 2018 Notebook developed by: Keeley Takimoto, Anna Nguyen, Taj Shaik, Keiko Kamei

Adapted from Spring 2018 and Fall 2017 materials by: Anna Nguyen, Sujude Dalieh, Michaela Palmer, Gavin Poe, Theodore Tran

Data Science Modules: http://data.berkeley.edu/education/modules