Abstract: The relationship between physical systems and intelligence has long fascinated researchers in computer science and physics. This talk explores fundamental connections between thermodynamic systems and intelligent decision-making through the lens of free energy principles.

We examine how concepts from statistical mechanics - particularly the relationship between total energy, free energy, and entropy - might provide novel insights into the nature of intelligence and learning. By drawing parallels between physical systems and information processing, we consider how measurement and observation can be viewed as processes that modify available energy. The discussion encompasses how model approximations and uncertainties might be understood through thermodynamic analogies, and explores the implications of treating intelligence as an energy-efficient state-change process.

While these connections remain speculative, they offer intriguing perspectives for discussing the fundamental nature of intelligence and learning systems. The talk aims to stimulate discussion about these potential relationships rather than present definitive conclusions.

::: {.cell .markdown}

import notutils as nu

nu.display_google_book(id='3yRVAAAAcAAJ', page='PP7')

Figure: Daniel Bernoulli’s Hydrodynamica published in 1738. It was one of the first works to use the idea of conservation of energy. It used Newton’s laws to predict the behaviour of gases.

Daniel Bernoulli described a kinetic theory of gases, but it wasn’t until 170 years later when these ideas were verified after Einstein had proposed a model of Brownian motion which was experimentally verified by Jean Baptiste Perrin.

import notutils as nu

nu.display_google_book(id='3yRVAAAAcAAJ', page='PA200')

Figure: Daniel Bernoulli’s chapter on the kinetic theory of gases, for a review on the context of this chapter see Mikhailov (n.d.). For 1738 this is extraordinary thinking. The notion of kinetic theory of gases wouldn’t become fully accepted in Physics until 1908 when a model of Einstein’s was verified by Jean Baptiste Perrin.

import numpy as np

p = np.random.randn(10000, 1)

xlim = [-4, 4]

x = np.linspace(xlim[0], xlim[1], 200)

y = 1/np.sqrt(2*np.pi)*np.exp(-0.5*x*x)

import matplotlib.pyplot as plt

import mlai.plot as plot

import mlai

fig, ax = plt.subplots(figsize=plot.big_wide_figsize)

ax.plot(x, y, 'r', linewidth=3)

ax.hist(p, 100, density=True)

ax.set_xlim(xlim)

mlai.write_figure('gaussian-histogram.svg', directory='./ml')

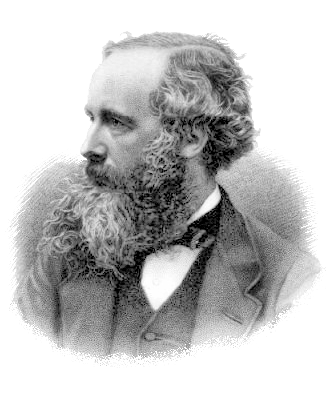

Another important figure for Cambridge was the first to derive the probability distribution that results from small balls banging together in this manner. In doing so, James Clerk Maxwell founded the field of statistical physics.

Figure: James Clerk Maxwell 1831-1879 Derived distribution of velocities of particles in an ideal gas (elastic fluid).

|

|

|

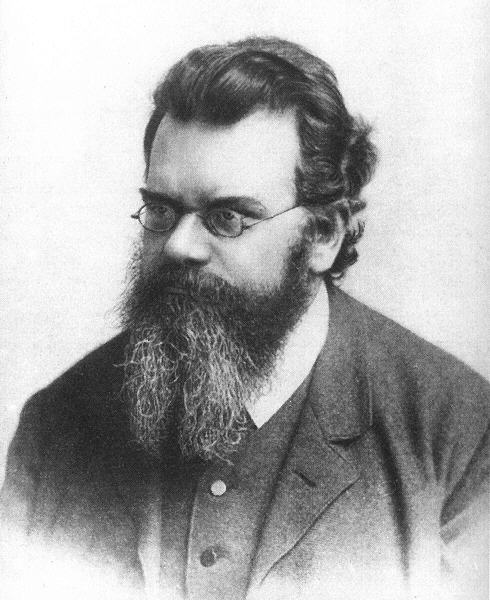

Figure: James Clerk Maxwell (1831-1879), Ludwig Boltzmann (1844-1906) Josiah Willard Gibbs (1839-1903)

Many of the ideas of early statistical physicists were rejected by a cadre of physicists who didn’t believe in the notion of a molecule. The stress of trying to have his ideas established caused Boltzmann to commit suicide in 1906, only two years before the same ideas became widely accepted.

import notutils as nu

nu.display_google_book(id='Vuk5AQAAMAAJ', page='PA373')

Figure: Boltzmann’s paper Boltzmann (n.d.) which introduced the relationship between entropy and probability. A translation with notes is available in Sharp and Matschinsky (2015).

The important point about the uncertainty being represented here is that it is not genuine stochasticity, it is a lack of knowledge about the system. The techniques proposed by Maxwell, Boltzmann and Gibbs allow us to exactly represent the state of the system through a set of parameters that represent the sufficient statistics of the physical system. We know these values as the volume, temperature, and pressure. The challenge for us, when approximating the physical world with the techniques we will use is that we will have to sit somewhere between the deterministic and purely stochastic worlds that these different scientists described.

One ongoing characteristic of people who study probability and uncertainty is the confidence with which they hold opinions about it. Another leader of the Cavendish laboratory expressed his support of the second law of thermodynamics (which can be proven through the work of Gibbs/Boltzmann) with an emphatic statement at the beginning of his book.

|

|

Figure: Eddington’s book on the Nature of the Physical World (Eddington, 1929)

The same Eddington is also famous for dismissing the ideas of a young Chandrasekhar who had come to Cambridge to study in the Cavendish lab. Chandrasekhar demonstrated the limit at which a star would collapse under its own weight to a singularity, but when he presented the work to Eddington, he was dismissive suggesting that there “must be some natural law that prevents this abomination from happening”.

|

|

Figure: Chandrasekhar (1910-1995) derived the limit at which a star collapses in on itself. Eddington’s confidence in the 2nd law may have been what drove him to dismiss Chandrasekhar’s ideas, humiliating a young scientist who would later receive a Nobel prize for the work.

Figure: Eddington makes his feelings about the primacy of the second law clear. This primacy is perhaps because the second law can be demonstrated mathematically, building on the work of Maxwell, Gibbs and Boltzmann. Eddington (1929)

Presumably he meant that the creation of a black hole seemed to transgress the second law of thermodynamics, although later Hawking was able to show that blackholes do evaporate, but the time scales at which this evaporation occurs is many orders of magnitude slower than other processes in the universe.

Maxwell’s Demon¶

[edit]

Maxwell’s demon is a thought experiment described by James Clerk Maxwell in his book, Theory of Heat (Maxwell, 1871) on page 308.

But if we conceive a being whose faculties are so sharpened that he can follow every molecule in its course, such a being, whose attributes are still as essentially finite as our own, would be able to do what is at present impossible to us. For we have seen that the molecules in a vessel full of air at uniform temperature are moving with velocities by no means uniform, though the mean velocity of any great number of them, arbitrarily selected, is almost exactly uniform. Now let us suppose that such a vessel is divided into two portions, A and B, by a division in which there is a small hole, and that a being, who can see the individual molecules, opens and closes this hole, so as to allow only the swifter molecules to pass from A to B, and the only the slower ones to pass from B to A. He will thus, without expenditure of work, raise the temperature of B and lower that of A, in contradiction to the second law of thermodynamics.

James Clerk Maxwell in Theory of Heat (Maxwell, 1871) page 308

He goes onto say:

This is only one of the instances in which conclusions which we have draw from our experience of bodies consisting of an immense number of molecules may be found not to be applicable to the more delicate observations and experiments which we may suppose made by one who can perceive and handle the individual molecules which we deal with only in large masses

import notutils as nu

nu.display_google_book(id='0p8AAAAAMAAJ', page='PA308')

Figure: Maxwell’s demon was designed to highlight the statistical nature of the second law of thermodynamics.

Entropy:

Figure: Maxwell’s Demon. The demon decides balls are either cold (blue) or hot (red) according to their velocity. Balls are allowed to pass the green membrane from right to left only if they are cold, and from left to right, only if they are hot.

Maxwell’s demon allows us to connect thermodynamics with information theory (see e.g. Hosoya et al. (2015);Hosoya et al. (2011);Bub (2001);Brillouin (1951);Szilard (1929)). The connection arises due to a fundamental connection between information erasure and energy consumption Landauer (1961).

Alemi and Fischer (2019)

Information Theory and Thermodynamics¶

[edit]

Information theory provides a mathematical framework for quantifying information. Many of information theory’s core concepts parallel those found in thermodynamics. The theory was developed by Claude Shannon who spoke extensively to MIT’s Norbert Wiener at while it was in development (Conway and Siegelman, 2005). Wiener’s own ideas about information were inspired by Willard Gibbs, one of the pioneers of the mathematical understanding of free energy and entropy. Deep connections between physical systems and information processing have connected information and energy from the start.

Entropy¶

Shannon’s entropy measures the uncertainty or unpredictability of information content. This mathematical formulation is inspired by thermodynamic entropy, which describes the dispersal of energy in physical systems. Both concepts quantify the number of possible states and their probabilities.

Figure: Maxwell’s demon thought experiment illustrates the relationship between information and thermodynamics.

In thermodynamics, free energy represents the energy available to do work. A system naturally evolves to minimize its free energy, finding equilibrium between total energy and entropy. Free energy principles are also pervasive in variational methods in machine learning. They emerge from Bayesian approaches to learning and have been heavily promoted by e.g. Karl Friston as a model for the brain.

The relationship between entropy and Free Energy can be explored through the Legendre transform. This is most easily reviewed if we restrict ourselves to distributions in the exponential family.

Exponential Family¶

The exponential family has the form ρ(Z)=h(Z)exp(θ⊤T(Z)+A(θ))

Available Energy¶

Work through Measurement¶

In machine learning and Bayesian inference, the Markov blanket is the set of variables that are conditionally independent of the variable of interest given the other variables. To introduce this idea into our information system, we first split the system into two parts, the variables, X, and the memory M.

The variables are the portion of the system that is stochastically evolving over time. The memory is a low entropy partition of the system that will give us knowledge about this evolution.

We can now write the joint entropy of the system in terms of the mutual information between the variables and the memory. S(Z)=S(X,M)=S(X|M)+S(M)=S(X)−I(X;M)+S(M).

If M is viewed as a measurement then the change in entropy of the system before and after measurement is given by S(X|M)−S(X) wehich is given by −I(X;M). This is implies that measurement increases the amount of available energy we obtain from the system (Parrondo et al., 2015).

The difference in available energy is given by ΔA=A(X)−A(Z|M)=I(X;M),

The 20 Questions Paradigm¶

In the game of 20 Questions player one (Alice) thinks of an object, player two (Bob) must identify it by asking at most 20 yes/no questions. The optimal strategy is to divide the possibility space in half with each question. The binary search approach ensures maximum information gain with each inquiry and can access 220 or about a million different objects.

Figure: The optimal strategy in the Entropy Game resembles a binary search, dividing the search space in half with each question.

Entropy Reduction and Decisions¶

From an information-theoretic perspective, decisions can be taken in a way that efficiently reduces entropy - our the uncertainty about the state of the world. Each observation or action an intelligent agent takes should maximize expected information gain, optimally reducing uncertainty given available resources.

The entropy before the question is S(X). The entropy after the question is S(X|M). The information gain is the difference between the two, I(X;M)=S(X)−S(X|M). Optimal decision making systems maximize this information gain per unit cost.

Thermodynamic Parallels¶

The entropy game connects decision-making to thermodynamics.

This perspective suggests a profound connection: intelligence might be understood as a special case of systems that efficiently extract, process, and utilize free energy from their environments, with thermodynamic principles setting fundamental constraints on what’s possible.

Measurement as a Thermodynamic Process: Information-Modified Second Law¶

The second law of thermodynamics was generalised to include the effect of measurement by Sagawa and Ueda (Sagawa and Ueda, 2008). They showed that the maximum extractable work from a system can be increased by kBTI(X;M) where kB is Boltzmann’s constant, T is temperature and I(X;M) is the information gained by making a measurement, M, I(X;M)=∑x,mρ(x,m)logρ(x,m)ρ(x)ρ(m),

The measurements can be seen as a thermodynamic process. In theory measurement, like computation is reversible. But in practice the process of measurement is likely to erode the free energy somewhat, but as long as the energy gained from information, kTI(X;M) is greater than that spent in measurement the pricess can be thermodynamically efficient.

The modified second law shows that the maximum additional extractable work is proportional to the information gained. So information acquisition creates extractable work potential. Thermodynamic consistency is maintained by properly accounting for information-entropy relationships.

Efficacy of Feedback Control¶

Sagawa and Ueda extended this relationship to provide a generalised Jarzynski equality for feedback processes (Sagawa and Ueda, 2010). The Jarzynski equality is an imporant result from nonequilibrium thermodynamics that relates the average work done across an ensemble to the free energy difference between initial and final states (Jarzynski, 1997), ⟨exp(−WkBT)⟩=exp(−ΔFkBT),

Sagawa and Ueda introduce an efficacy term that captures the effect of feedback on the system they note in the presence of feedback, ⟨exp(−WkBT)exp(ΔFkBT)⟩=γ,

Channel Coding Perspective on Memory¶

When viewing M as an information channel between past and future states, Shannon’s channel coding theorems apply (Shannon, 1948). The channel capacity C represents the maximum rate of reliable information transmission [ C = _{(M)} I(X_1;M) ] and for a memory of n bits we have [ C n, ] as the mutual information is upper bounded by the entropy of ρ(M) which is at most n bits.

This relationship seems to align with Ashby’s Law of Requisite Variety (pg 229 Ashby (1952)), which states that a control system must have at least as much ‘variety’ as the system it aims to control. In the context of memory systems, this means that to maintain temporal correlations effectively, the memory’s state space must be at least as large as the information content it needs to preserve. This provides a lower bound on the necessary memory capacity that complements the bound we get from Shannon for channel capacity.

This helps determine the required memory size for maintaining temporal correlations, optimal coding strategies, and fundamental limits on temporal correlation preservation.

Decomposition into Past and Future¶

Model Approximations and Thermodynamic Efficiency¶

Intelligent systems must balance measurement against energy efficiency and time requirements. A perfect model of the world would require infinite computational resources and speed, so approximations are necessary. This leads to uncertainties. Thermodynamics might be thought of as the physics of uncertainty: at equilibrium thermodynamic systems find thermodynamic states that minimize free energy, equivalent to maximising entropy.

Markov Blanket¶

To introduce some structure to the model assumption. We split X into X0 and X1. X0 is past and present of the system, X1 is future The conditional mutual information I(X0;X1|M) which is zero if X1 and X0 are independent conditioned on M.

At What Scales Does this Apply?¶

The equipartition theorem tells us that at equilibrium the average energy is kT/2 per degree of freedom. This means that for systems that operate at “human scale” the energy involved is many orders of magnitude larger than the amount of information we can store in memory. For a car engine producing 70 kW of power at 370 Kelvin, this implies 2×70,000370×kB=2×70,000370×1.380649×10−23=2.74×1025

Small-Scale Biochemical Systems and Information Processing¶

While macroscopic systems operate in regimes where traditional thermodynamics dominates, microscopic biological systems operate at scales where information and thermal fluctuations become critically important. Here we examine how the framework applies to molecular machines and processes that have evolved to operate efficiently at these scales.

Molecular machines like ATP synthase, kinesin motors, and the photosynthetic apparatus can be viewed as sophisticated information engines that convert energy while processing information about their environment. These systems have evolved to exploit thermal fluctuations rather than fight against them, using information processing to extract useful work.

ATP Synthase: Nature’s Rotary Engine¶

ATP synthase functions as a rotary molecular motor that synthesizes ATP from ADP and inorganic phosphate using a proton gradient. The system uses the proton gradient as both an energy source and an information source about the cell’s energetic state and exploits Brownian motion through a ratchet mechanism. It converts information about proton locations into mechanical rotation and ultimately chemical energy with approximately 3-4 protons required per ATP.

from IPython.lib.display import YouTubeVideo

YouTubeVideo('kXpzp4RDGJI')

Estimates suggest that one synapse firing may require 104 ATP molecules, so around 4×104 protons. If we take the human brain as containing around 1014 synapses, and if we suggest each synapse only fires about once every five seconds, we would require approximately 1018 protons per second to power the synapses in our brain. With each proton having six degrees of freedom. Under these rough calculations the memory capacity distributed across the ATP Synthase in our brain must be of order 6×1018 bits per second or 750 petabytes of information per second. Of course this memory capacity would be devolved across the billions of neurons within hundreds or thousands of mitochondria that each can contain thousands of ATP synthase molecules. By composition of extremely small systems we can see it’s possible to improve efficiencies in ways that seem very impractical for a car engine.

Quick note to clarify, here we’re referring to the information requirements to make our brain more energy efficient in its information processing rather than the information processing capabilities of the neurons themselves!

Jaynes’s Maximum Entropy Principle¶

[edit]

In his seminal 1957 paper (Jaynes, 1957), Ed Jaynes proposed a foundation for statistical mechanics based on information theory. Rather than relying on ergodic hypotheses or ensemble interpretations, Jaynes recast that the problem of assigning probabilities in statistical as a problem of inference with incomplete information.

A central problem in statistical mechanics is assigning initial probabilities when our knowledge is incomplete. For example, if we know only the average energy of a system, what probability distribution should we use? Jaynes argued that we should use the distribution that maximizes entropy subject to the constraints of our knowledge.

Jaynes illustrated the approachwith a simple example: Suppose a die has been tossed many times, with an average result of 4.5 rather than the expected 3.5 for a fair die. What probability assignment Pn (n=1,2,...,6) should we make for the next toss?

We need to satisfy two constraints

Many distributions could satisfy these constraints, but which one makes the fewest unwarranted assumptions? Jaynes argued that we should choose the distribution that is maximally noncommittal with respect to missing information - the one that maximizes the entropy, This principle leads to the exponential family of distributions, which in statistical mechanics gives us the canonical ensemble and other familiar distributions.

The General Maximum-Entropy Formalism¶

For a more general case, suppose a quantity x can take values (x1,x2,…,xn) and we know the average values of several functions fk(x). The problem is to find the probability assignment pi=p(xi) that satisfies and maximizes the entropy SI=−∑ni=1pilogpi.

Using Lagrange multipliers, the solution is the generalized canonical distribution, where Z(λ1,…,λm) is the partition function, The Lagrange multipliers λk are determined by the constraints, The maximum attainable entropy is

Jaynes’ World¶

[edit]

Jaynes’ World is a zero-player game that implements a version of the entropy game. The dynamical system is defined by a distribution, ρ(Z), over a state space Z. The state space is partitioned into observable variables X and memory variables M. The memory variables are considered to be in an information resevoir, a thermodynamic system that maintains information in an ordered state (see e.g. Barato and Seifert (2014)). The entropy of the whole system is bounded below by 0 and above by N. So the entropy forms a compact manifold with respect to its parameters.

Unlike the animal game, where decisions are made by reducing entropy at each step, our system evovles mathematically by maximising the instantaneous entropy production. Conceptually we can think of this as ascending the gradient of the entropy, S(Z).

In the animal game the questioner starts with maximum uncertainty and targets minimal uncertainty. Jaynes’ world starts with minimal uncertainty and aims for maximum uncertainty.

We can phrase this as a thought experiment. Imagine you are in the game, at a given turn. You want to see where the game came from, so you look back across turns. The direction the game came from is now the direction of steepest descent. Regardless of where the game actually started it looks like it started at a minimal entropy configuration that we call the origin. Similarly, wherever the game is actually stopped there will nevertheless appear to be an end point we call end that will be a configuration of maximal entropy, N.

This speculation allows us to impose the functional form of our proability distribution. As Jaynes has shown (Jaynes, 1957), the stationary points of a free-form optimisation (minimum or maximum) will place the distribution in the, ρ(Z) in the exponential family, ρ(Z)=h(Z)exp(θ⊤T(Z)−A(θ)),

This constraint to the exponential family is highly convenient as we will rely on it heavily for the dynamics of the game. In particular, by focussing on the natural parameters we find that we are optimising within an information geometry (Amari, 2016). In exponential family distributions, the entropy gradient is given by, ∇θS(Z)=g=∇2θA(θ(M))

System Evolution¶

We are now in a position to summarise the start state and the end state of our system, as well as to speculate on the nature of the transition between the two states.

Start State¶

The origin configuration is a low entropy state, with value near the lower bound of 0. The information is highly structured, by definition we place all variables in M, the information resevoir at this time. The uncertainty principle is present to handle the competeing needs of precision in parameters (giving us the near-singular form for θ(M), and capacity in the information channel that M provides (the capacity c(θ) is upper bounded by S(M).

End State¶

The end configuration is a high entropy state, near the upper bound. Both the minimal entropy and maximal entropy states are revealed by Ed Jaynes’ variational minimisation approach and are in the exponential family. In many cases a version of Zeno’s paradox will arise where the system asymtotes to the final state, taking smaller steps at each time. At this point the system is at equilibrium.

From Maximum to Minimal Entropy¶

[edit]

Jaynes formulated his principle in terms of maximizing entropy, we can also view certain problems as minimizing entropy under appropriate constraints. The duality becomes apparent when we consider the relationship between entropy and information.

The maximum entropy principle finds the distribution that is maximally noncommittal given certain constraints. Conversely, we can seek the distribution that minimizes entropy subject to different constraints - this represents the distribution with maximum structure or information.

Consider the uncertainty principle. When we seek states that minimize the product of position and momentum uncertainties, we are seeking minimal entropy states subject to the constraint of the uncertainty principle.

The mathematical formalism remains the same, but with different constraints and optimization direction, where gk are functions representing constraints different from simple averages.

The solution still takes the form of an exponential family, where μk are Lagrange multipliers for the constraints.

Minimal Entropy States in Quantum Systems¶

The pure states of quantum mechanics are those that minimize von Neumann entropy S=−Tr(ρlogρ) subject to the constraints of quantum mechanics.

For example, coherent states minimize the entropy subject to constraints on the expectation values of position and momentum operators. These states achieve the minimum uncertainty allowed by quantum mechanics.

import numpy as np

First we write some helper code to plot the histogram and compute its entropy.

import matplotlib.pyplot as plt

import mlai.plot as plot

def plot_histogram(ax, p, max_height=None):

heights = p

if max_height is None:

max_height = 1.25*heights.max()

# Safe entropy calculation that handles zeros

nonzero_p = p[p > 0] # Filter out zeros

S = - (nonzero_p*np.log2(nonzero_p)).sum()

# Define bin edges

bins = [1, 2, 3, 4, 5] # Bin edges

# Create the histogram

if ax is None:

fig, ax = plt.subplots(figsize=(6, 4)) # Adjust figure size

ax.hist(bins[:-1], bins=bins, weights=heights, align='left', rwidth=0.8, edgecolor='black') # Use weights for probabilities

# Customize the plot for better slide presentation

ax.set_xlabel("Bin")

ax.set_ylabel("Probability")

ax.set_title(f"Four Bin Histogram (Entropy {S:.3f})")

ax.set_xticks(bins[:-1]) # Show correct x ticks

ax.set_ylim(0,max_height) # Set y limit for visual appeal

We can compute the entropy of any given histogram.

# Define probabilities

p = np.zeros(4)

p[0] = 4/13

p[1] = 3/13

p[2] = 3.7/13

p[3] = 1 - p.sum()

# Safe entropy calculation

nonzero_p = p[p > 0] # Filter out zeros

entropy = - (nonzero_p*np.log2(nonzero_p)).sum()

print(f"The entropy of the histogram is {entropy:.3f}.")

import matplotlib.pyplot as plt

import mlai.plot as plot

import mlai

fig, ax = plt.subplots(figsize=plot.big_wide_figsize)

fig.tight_layout()

plot_histogram(ax, p)

ax.set_title(f"Four Bin Histogram (Entropy {entropy:.3f})")

mlai.write_figure(filename='four-bin-histogram.svg',

directory = './information-game')

Figure: The entropy of a four bin histogram.

We can play the entropy game by starting with a histogram with all the probability mass in the first bin and then ascending the gradient of the entropy function.

Two-Bin Histogram Example¶

[edit]

The simplest possible example of Jaynes’ World is a two-bin histogram with probabilities p and 1−p. This minimal system allows us to visualize the entire entropy landscape.

The natural parameter is the log odds, θ=logp1−p, and the update given by the entropy gradient is Δθsteepest=ηdSdθ=ηp(1−p)(log(1−p)−logp).

import numpy as np

# Python code for gradients

p_values = np.linspace(0.000001, 0.999999, 10000)

theta_values = np.log(p_values/(1-p_values))

entropy = -p_values * np.log(p_values) - (1-p_values) * np.log(1-p_values)

fisher_info = p_values * (1-p_values)

gradient = fisher_info * (np.log(1-p_values) - np.log(p_values))

import matplotlib.pyplot as plt

import mlai.plot as plot

import mlai

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=plot.big_wide_figsize)

ax1.plot(theta_values, entropy)

ax1.set_xlabel('$\\theta$')

ax1.set_ylabel('Entropy $S(p)$')

ax1.set_title('Entropy Landscape')

ax2.plot(theta_values, gradient)

ax2.set_xlabel('$\\theta$')

ax2.set_ylabel('$\\nabla_\\theta S(p)$')

ax2.set_title('Entropy Gradient vs. Position')

mlai.write_figure(filename='two-bin-histogram-entropy-gradients.svg',

directory = './information-game')

Figure: Entropy gradients of the two bin histogram agains position.

This example reveals the entropy extrema at p=0, p=0.5, and p=1. At minimal entropy (p≈0 or p≈1), the gradient approaches zero, creating natural information reservoirs. The dynamics slow dramatically near these points - these are the areas of critical slowing that create information reservoirs.

Gradient Ascent in Natural Parameter Space¶

We can visualize the entropy maximization process by performing gradient ascent in the natural parameter space θ. Starting from a low-entropy state, we follow the gradient of entropy with respect to θ to reach the maximum entropy state.

import numpy as np

# Helper functions for two-bin histogram

def theta_to_p(theta):

"""Convert natural parameter theta to probability p"""

return 1.0 / (1.0 + np.exp(-theta))

def p_to_theta(p):

"""Convert probability p to natural parameter theta"""

# Add small epsilon to avoid numerical issues

p = np.clip(p, 1e-10, 1-1e-10)

return np.log(p/(1-p))

def entropy(theta):

"""Compute entropy for given theta"""

p = theta_to_p(theta)

# Safe entropy calculation

return -p * np.log2(p) - (1-p) * np.log2(1-p)

def entropy_gradient(theta):

"""Compute gradient of entropy with respect to theta"""

p = theta_to_p(theta)

return p * (1-p) * (np.log2(1-p) - np.log2(p))

def plot_histogram(ax, theta, max_height=None):

"""Plot two-bin histogram for given theta"""

p = theta_to_p(theta)

heights = np.array([p, 1-p])

if max_height is None:

max_height = 1.25

# Compute entropy

S = entropy(theta)

# Create the histogram

bins = [1, 2, 3] # Bin edges

if ax is None:

fig, ax = plt.subplots(figsize=(6, 4))

ax.hist(bins[:-1], bins=bins, weights=heights, align='left', rwidth=0.8, edgecolor='black')

# Customize the plot

ax.set_xlabel("Bin")

ax.set_ylabel("Probability")

ax.set_title(f"Two-Bin Histogram (Entropy {S:.3f})")

ax.set_xticks(bins[:-1])

ax.set_ylim(0, max_height)

# Parameters for gradient ascent

theta_initial = -9.0 # Start with low entropy

learning_rate = 1

num_steps = 1500

# Initialize

theta_current = theta_initial

theta_history = [theta_current]

p_history = [theta_to_p(theta_current)]

entropy_history = [entropy(theta_current)]

# Perform gradient ascent in theta space

for step in range(num_steps):

# Compute gradient

grad = entropy_gradient(theta_current)

# Update theta

theta_current = theta_current + learning_rate * grad

# Store history

theta_history.append(theta_current)

p_history.append(theta_to_p(theta_current))

entropy_history.append(entropy(theta_current))

if step % 100 == 0:

print(f"Step {step+1}: θ = {theta_current:.4f}, p = {p_history[-1]:.4f}, Entropy = {entropy_history[-1]:.4f}")

import matplotlib.pyplot as plt

import mlai.plot as plot

import mlai

# Create a figure showing the evolution

fig, axes = plt.subplots(2, 3, figsize=(15, 8))

fig.tight_layout(pad=3.0)

# Select steps to display

steps_to_show = [0, 300, 600, 900, 1200, 1500]

# Plot histograms for selected steps

for i, step in enumerate(steps_to_show):

row, col = i // 3, i % 3

plot_histogram(axes[row, col], theta_history[step])

axes[row, col].set_title(f"Step {step}: θ = {theta_history[step]:.2f}, p = {p_history[step]:.3f}")

mlai.write_figure(filename='two-bin-histogram-evolution.svg',

directory = './information-game')

# Plot entropy evolution

plt.figure(figsize=(10, 6))

plt.plot(range(num_steps+1), entropy_history, 'o-')

plt.xlabel('Gradient Ascent Step')

plt.ylabel('Entropy')

plt.title('Entropy Evolution During Gradient Ascent')

plt.grid(True)

mlai.write_figure(filename='two-bin-entropy-evolution.svg',

directory = './information-game')

# Plot trajectory in theta space

plt.figure(figsize=(10, 6))

theta_range = np.linspace(-5, 5, 1000)

entropy_curve = [entropy(t) for t in theta_range]

plt.plot(theta_range, entropy_curve, 'b-', label='Entropy Landscape')

plt.plot(theta_history, entropy_history, 'ro-', label='Gradient Ascent Path')

plt.xlabel('Natural Parameter θ')

plt.ylabel('Entropy')

plt.title('Gradient Ascent Trajectory in Natural Parameter Space')

plt.axvline(x=0, color='k', linestyle='--', alpha=0.3)

plt.legend()

plt.grid(True)

mlai.write_figure(filename='two-bin-trajectory.svg',

directory = './information-game')

Figure: Evolution of the two-bin histogram during gradient ascent in natural parameter space.

Figure: Entropy evolution during gradient ascent for the two-bin histogram.

Figure: Gradient ascent trajectory in the natural parameter space for the two-bin histogram.

The gradient ascent visualization shows how the system evolves in the natural parameter space θ. Starting from a negative θ (corresponding to a low-entropy state with p<<0.5), the system follows the gradient of entropy with respect to θ until it reaches θ=0 (corresponding to p=0.5), which is the maximum entropy state.

Note that the maximum entropy occurs at θ=0, which corresponds to p=0.5. The gradient of entropy with respect to θ is zero at this point, making it a stable equilibrium for the gradient ascent process.

Uncertainty Principle¶

[edit]

One challenge is how to parameterise our exponential family. We’ve mentioned that the variables Z are partitioned into observable variables X and memory variables M. Given the minimal entropy initial state, the obvious initial choice is that at the origin all variables, Z, should be in the information reservoir, M. This implies that they are well determined and present a sensible choice for the source of our parameters.

We define a mapping, θ(M), that maps the information resevoir to a set of values that are equivalent to the natural parameters. If the entropy of these parameters is low, and the distribution ρ(θ) is sharply peaked then we can move from treating the memory mapping, θ(⋅), as a random processe to an assumption that it is a deterministic function. We can then follow gradients with respect to these θ values.

This allows us to rewrite the distribution over Z in a conditional form, ρ(X|M)=h(X)exp(θ(M)⊤T(X)−A(θ(M))).

Unfortunately this assumption implies that θ(⋅) is a delta function, and since our representation as a compact manifold (bounded below by 0 and above by N) it does not admit any such singularities.

Capacity ↔ Precision Paradox¶

This creates an apparent paradox, at minimal entropy states, the information reservoir must simultaneously maintain precision in the parameters θ(M) (for accurate system representation) but it must also provide sufficient capacity c(M) (for information storage).

The trade-off can be expressed as, Δθ(M)⋅Δc(M)≥k,

This trade-off between precision and capacity directly parallels Shannon’s insights about information transmission (Shannon, 1948), where he demonstrated that increasing the precision of a signal requires increasing bandwidth or reducing noise immunity—creating an inherent trade-off in any communication system. Our formulation extends this principle to the information reservoir’s parameter space.

In practice this means that the parameters θ(M) and capacity variables c(M) must form a Fourier-dual pair, c(M)=F[θ(M)],

The mathematical formulation of the uncertainty principle comes from Hirschman Jr (1957) and later refined by Beckner (1975) and Białynicki-Birula and Mycielski (1975). These works demonstrated that Shannon’s information-theoretic entropy provides a natural framework for expressing quantum uncertainty, establishing a direct bridge between quantum mechanics and information theory. Our capacity-precision trade-off follows this tradition, expressing the fundamental limits of information processing in our system.

Quantum vs Classical Information Reservoirs¶

The uncertainty principle means that the game can exhibit quantum-like information processing regimes during evolution. This inspires an information-theoretic perspective on the quantum-classical transition.

At minimal entropy states near the origin, the information reservoir has characteristics reminiscent of quantum systems.

Wave-like information encoding: The information reservoir near the origin necessarily encodes information in distributed, interference-capable patterns due to the uncertainty principle between parameters θ(M) and capacity variables c(M).

Non-local correlations: Parameters are highly correlated through the Fisher information matrix, creating structures where information is stored in relationships rather than individual variables.

Uncertainty-saturated regime: The uncertainty relationship Δθ(M)⋅Δc(M)≥k is nearly saturated (approaches equality), similar to Heisenberg’s uncertainty principle in quantum systems and the entropic uncertainty relations established by Białynicki-Birula and Mycielski (1975).

As the system evolves towards higher entropy states, a transition occurs where some variables exhibit classical behavior.

From wave-like to particle-like: Variables transitioning from M to X shift from storing information in interference patterns to storing it in definite values with statistical uncertainty.

Decoherence-like process: The uncertainty product Δθ(M)⋅Δc(M) for these variables grows significantly larger than the minimum value k, indicating a departure from quantum-like behavior.

Local information encoding: Information becomes increasingly encoded in local variables rather than distributed correlations.

The saddle points in our entropy landscape mark critical transitions between quantum-like and classical information processing regimes. Near these points

The critically slowed modes maintain quantum-like characteristics, functioning as coherent memory that preserves information through interference patterns.

The rapidly evolving modes exhibit classical characteristics, functioning as incoherent processors that manipulate information through statistical operations.

This natural separation creates a hybrid computational architecture where quantum-like memory interfaces with classical-like processing.

The quantum-classical transition can be quantified using the moment generating function MZ(t). In quantum-like regimes, the MGF exhibits oscillatory behavior with complex analytic structure, whereas in classical regimes, it grows monotonically with simple analytic structure. The transition between these behaviors identifies variables moving between quantum-like and classical information processing modes.

This perspective suggests that what we recognize as “quantum” versus “classical” behavior may fundamentally reflect different regimes of information processing - one optimized for coherent information storage (quantum-like) and the other for flexible information manipulation (classical-like). The emergence of both regimes from our entropy-maximizing model indicates that nature may exploit this computational architecture to optimize information processing across multiple scales.

This formulation of the uncertainty principle in terms of information capacity and parameter precision follows the tradition established by Shannon (1948) and expanded upon by Hirschman Jr (1957) and others who connected information entropy uncertainty to Heisenberg’s uncertainty.

Maximum Entropy and Density Matrices¶

[edit]

In Jaynes (1957) Jaynes showed how the maximum entropy formalism is applied, in later papers such as Jaynes (1963) he showed how his maximum entropy formalism could be applied to von Neumann entropy of a density matrix.

As Jaynes noted in his 1962 Brandeis lectures: “Assignment of initial probabilities must, in order to be useful, agree with the initial information we have (i.e., the results of measurements of certain parameters). For example, we might know that at time t=0, a nuclear spin system having total (measured) magnetic moment M(0), is placed in a magnetic field H, and the problem is to predict the subsequent variation M(t)… What initial density matrix for the spin system ρ(0), should we use?”

Jaynes recognized that we should choose the density matrix that maximizes the von Neumann entropy, subject to constraints from our measurements, where Mop is the operator corresponding to total magnetic moment.

The solution is the quantum version of the maximum entropy distribution, where Ai are the operators corresponding to measured observables, λi are Lagrange multipliers, and Z=Tr[exp(−λ1A1−⋯−λmAm)] is the partition function.

This unifies classical entropies and density matrix entropies under the same information-theoretic principle. It clarifies that quantum states with minimum entropy (pure states) represent maximum information, while mixed states represent incomplete information.

Jaynes further noted that “strictly speaking, all this should be restated in terms of quantum theory using the density matrix formalism. This will introduce the N! permutation factor, a natural zero for entropy, alteration of numerical values if discreteness of energy levels becomes comparable to kBT, etc.”

Quantum States and Exponential Families¶

[edit]

The minimal entropy quantum states provides a connection between density matrices and exponential family distributions. This connection enables us to use many of the classical techniques from information geometry and apply them to the game in the case where the uncertainty principle is present.

The minimal entropy density matrix belongs to an exponential family, just like many classical distributions,

Quantum Minimal Entropy State¶

ρ=exp(−R⊤⋅G⋅R−Z)- Both have an exponential form

- Both involve sufficient statistics (in the quantum case, these are quadratic forms of operators)

- Both have natural parameters (G in the quantum case)

- Both include a normalization term

The matrix G in the minimal entropy state is directly related to the ‘quantum Fisher information matrix’, G=QFIM/4

This creates a link between

- Minimal entropy (maximum order)

- Uncertainty (fundamental quantum limitations)

- Information (ability to estimate parameters precisely)

The relationship implies, V⋅QFIM≥ℏ24

These minimal entropy states may have physical relationships to interpretations squeezed states in quantum optics. They are the states that achieve the ultimate precision allowed by quantum mechanics.

Minimal Entropy States¶

[edit]

In Jaynes’ World, we begin at a minimal entropy configuration - the “origin” state. Understanding the properties of these minimal entropy states is crucial for characterizing how the system evolves. These states are constrained by the uncertainty principle we previously identified: Δθ(M)⋅Δc(M)≥k.

This constraint is reminiscient of the Heisenberg uncertainty principle in quantum mechanics, where Δx⋅Δp≥ℏ/2. This isn’t a coincidence - both represent limitations on precision arising from the mathematical structure of information. The total entropy of the system is constrained to be between 0 and N, forming a compact manifold with respect to its parameters. This upper bound N ensures that as the system evolves from minimal to maximal entropy, it remains within a well-defined entropy space.

Structure of Minimal Entropy States¶

The minimal entropy configuration under the uncertainty constraint takes a specific mathematical form. It is a pure state (in the sense of having minimal possible entropy) that exactly saturates the uncertainty bound. For a system with multiple degrees of freedom, the distribution takes a Gaussian form, ρ(Z)=1Zexp(−RT⋅G⋅R),

This form is an exponential family distribution, in line with Jaynes’ principle that entropy-optimized distributions belong to the exponential family. The matrix G determines how uncertainty is distributed among different variables and their correlations.

Fisher Information and Minimal Uncertainty¶

// … existing code …

Gradient Ascent and Uncertainty Principles¶

[edit]

In our exploration of information dynamics, we now turn to the relationship between gradient ascent on entropy and uncertainty principles. This section demonstrates how systems naturally evolve from quantum-like states (with minimal uncertainty) toward classical-like states (with excess uncertainty) through entropy maximization.

For simplicity, we’ll focus on multivariate Gaussian distributions, where the uncertainty relationships are particularly elegant. In this setting, the precision matrix Λ (inverse of the covariance matrix) fully characterizes the distribution. The entropy of a multivariate Gaussian is directly related to the determinant of the covariance matrix, S=12logdet(V)+constant,

For conjugate variables like position and momentum, the Heisenberg uncertainty principle imposes constraints on the minimum product of their uncertainties. In our information-theoretic framework, this appears as a constraint on the determinant of certain submatrices of the covariance matrix.

import numpy as np

from scipy.linalg import eigh

import matplotlib.pyplot as plt

from matplotlib.patches import Ellipse

The code below implements gradient ascent on the entropy of a multivariate Gaussian system while respecting uncertainty constraints. We’ll track how the system evolves from minimal uncertainty states (quantum-like) to states with excess uncertainty (classical-like).

First, we define key functions for computing entropy and its gradient.

# Constants

hbar = 1.0 # Normalized Planck's constant

min_uncertainty_product = hbar/2

# Compute entropy of a multivariate Gaussian with precision matrix Lambda

def compute_entropy(Lambda):

"""

Compute entropy of multivariate Gaussian with precision matrix Lambda.

Parameters:

-----------

Lambda: array

Precision matrix

Returns:

--------

entropy: float

Entropy value

"""

# Covariance matrix is inverse of precision matrix

V = np.linalg.inv(Lambda)

# Entropy formula for multivariate Gaussian

n = Lambda.shape[0]

entropy = 0.5 * np.log(np.linalg.det(V)) + 0.5 * n * (1 + np.log(2*np.pi))

return entropy

# Compute gradient of entropy with respect to precision matrix

def compute_entropy_gradient(Lambda):

"""

Compute gradient of entropy with respect to precision matrix.

Parameters:

-----------

Lambda: array

Precision matrix

Returns:

--------

gradient: array

Gradient of entropy

"""

# Gradient is -0.5 * inverse of Lambda

V = np.linalg.inv(Lambda)

gradient = -0.5 * V

return gradient

The compute_entropy function calculates the entropy of a multivariate

Gaussian distribution from its precision matrix. The

compute_entropy_gradient function computes the gradient of entropy

with respect to the precision matrix, which is essential for our

gradient ascent procedure.

Next, we implement functions to handle the constraints imposed by the uncertainty principle:

# Project gradient to respect uncertainty constraints

def project_gradient(eigenvalues, gradient):

"""

Project gradient to respect minimum uncertainty constraints.

Parameters:

-----------

eigenvalues: array

Eigenvalues of precision matrix

gradient: array

Gradient vector

Returns:

--------

projected_gradient: array

Gradient projected to respect constraints

"""

n_pairs = len(eigenvalues) // 2

projected_gradient = gradient.copy()

# For each position-momentum pair

for i in range(n_pairs):

idx1, idx2 = 2*i, 2*i+1

# Check if we're at the uncertainty boundary

product = 1.0 / (eigenvalues[idx1] * eigenvalues[idx2])

if product <= min_uncertainty_product * 1.01:

# We're at or near the boundary

# Project gradient to maintain the product

avg_grad = 0.5 * (gradient[idx1]/eigenvalues[idx1] + gradient[idx2]/eigenvalues[idx2])

projected_gradient[idx1] = avg_grad * eigenvalues[idx1]

projected_gradient[idx2] = avg_grad * eigenvalues[idx2]

return projected_gradient

# Initialize a multidimensional state with position-momentum pairs

def initialize_multidimensional_state(n_pairs, squeeze_factors=None, with_cross_connections=False):

"""

Initialize a precision matrix for multiple position-momentum pairs.

Parameters:

-----------

n_pairs: int

Number of position-momentum pairs

squeeze_factors: list or None

Factors determining the position-momentum squeezing

with_cross_connections: bool

Whether to initialize with cross-connections between pairs

Returns:

--------

Lambda: array

Precision matrix

"""

if squeeze_factors is None:

squeeze_factors = [0.1 + 0.05*i for i in range(n_pairs)]

# Total dimension (position + momentum)

dim = 2 * n_pairs

# Initialize with diagonal precision matrix

eigenvalues = np.zeros(dim)

# Set eigenvalues based on squeeze factors

for i in range(n_pairs):

squeeze = squeeze_factors[i]

eigenvalues[2*i] = 1.0 / (squeeze * min_uncertainty_product)

eigenvalues[2*i+1] = 1.0 / (min_uncertainty_product / squeeze)

# Initialize with identity eigenvectors

eigenvectors = np.eye(dim)

# If requested, add cross-connections by mixing eigenvectors

if with_cross_connections:

# Create a random orthogonal matrix for mixing

Q, _ = np.linalg.qr(np.random.randn(dim, dim))

# Apply moderate mixing - not fully random to preserve some structure

mixing_strength = 0.3

eigenvectors = (1 - mixing_strength) * eigenvectors + mixing_strength * Q

# Re-orthogonalize

eigenvectors, _ = np.linalg.qr(eigenvectors)

# Construct precision matrix from eigendecomposition

Lambda = eigenvectors @ np.diag(eigenvalues) @ eigenvectors.T

return Lambda

The project_gradient function ensures that our gradient ascent

respects the uncertainty principle by projecting the gradient to

maintain minimum uncertainty products when necessary. The

initialize_multidimensional_state function creates a starting state

with multiple position-momentum pairs, each initialized to the minimum

uncertainty allowed by the uncertainty principle, but with different

“squeeze factors” that determine the shape of the uncertainty ellipse.

# Add gradient check function

def check_entropy_gradient(Lambda, epsilon=1e-6):

"""

Check the analytical gradient of entropy against numerical gradient.

Parameters:

-----------

Lambda: array

Precision matrix

epsilon: float

Small perturbation for numerical gradient

Returns:

--------

analytical_grad: array

Analytical gradient with respect to eigenvalues

numerical_grad: array

Numerical gradient with respect to eigenvalues

"""

# Get eigendecomposition

eigenvalues, eigenvectors = eigh(Lambda)

# Compute analytical gradient

analytical_grad = entropy_gradient(eigenvalues)

# Compute numerical gradient

numerical_grad = np.zeros_like(eigenvalues)

for i in range(len(eigenvalues)):

# Perturb eigenvalue up

eigenvalues_plus = eigenvalues.copy()

eigenvalues_plus[i] += epsilon

Lambda_plus = eigenvectors @ np.diag(eigenvalues_plus) @ eigenvectors.T

entropy_plus = compute_entropy(Lambda_plus)

# Perturb eigenvalue down

eigenvalues_minus = eigenvalues.copy()

eigenvalues_minus[i] -= epsilon

Lambda_minus = eigenvectors @ np.diag(eigenvalues_minus) @ eigenvectors.T

entropy_minus = compute_entropy(Lambda_minus)

# Compute numerical gradient

numerical_grad[i] = (entropy_plus - entropy_minus) / (2 * epsilon)

# Compare

print("Analytical gradient:", analytical_grad)

print("Numerical gradient:", numerical_grad)

print("Difference:", np.abs(analytical_grad - numerical_grad))

return analytical_grad, numerical_grad

Now we implement the main gradient ascent procedure.

# Perform gradient ascent on entropy

def gradient_ascent_entropy(Lambda_init, n_steps=100, learning_rate=0.01):

"""

Perform gradient ascent on entropy while respecting uncertainty constraints.

Parameters:

-----------

Lambda_init: array

Initial precision matrix

n_steps: int

Number of gradient steps

learning_rate: float

Learning rate for gradient ascent

Returns:

--------

Lambda_history: list

History of precision matrices

entropy_history: list

History of entropy values

"""

Lambda = Lambda_init.copy()

Lambda_history = [Lambda.copy()]

entropy_history = [compute_entropy(Lambda)]

for step in range(n_steps):

# Compute gradient of entropy

grad_matrix = compute_entropy_gradient(Lambda)

# Diagonalize Lambda to work with eigenvalues

eigenvalues, eigenvectors = eigh(Lambda)

# Transform gradient to eigenvalue space

grad = np.diag(eigenvectors.T @ grad_matrix @ eigenvectors)

# Project gradient to respect constraints

proj_grad = project_gradient(eigenvalues, grad)

# Update eigenvalues

eigenvalues += learning_rate * proj_grad

# Ensure eigenvalues remain positive

eigenvalues = np.maximum(eigenvalues, 1e-10)

# Reconstruct Lambda from updated eigenvalues

Lambda = eigenvectors @ np.diag(eigenvalues) @ eigenvectors.T

# Store history

Lambda_history.append(Lambda.copy())

entropy_history.append(compute_entropy(Lambda))

return Lambda_history, entropy_history

The gradient_ascent_entropy function implements the core optimization

procedure. It performs gradient ascent on the entropy while respecting

the uncertainty constraints. The algorithm works in the eigenvalue space

of the precision matrix, which makes it easier to enforce constraints

and ensure the matrix remains positive definite.

To analyze the results, we implement functions to track uncertainty metrics and detect interesting dynamics:

# Track uncertainty products and regime classification

def track_uncertainty_metrics(Lambda_history):

"""

Track uncertainty products and classify regimes for each conjugate pair.

Parameters:

-----------

Lambda_history: list

History of precision matrices

Returns:

--------

metrics: dict

Dictionary containing uncertainty metrics over time

"""

n_steps = len(Lambda_history)

n_pairs = Lambda_history[0].shape[0] // 2

# Initialize tracking arrays

uncertainty_products = np.zeros((n_steps, n_pairs))

regimes = np.zeros((n_steps, n_pairs), dtype=object)

for step, Lambda in enumerate(Lambda_history):

# Get covariance matrix

V = np.linalg.inv(Lambda)

# Calculate Fisher information matrix

G = Lambda / 2

# For each conjugate pair

for i in range(n_pairs):

# Extract 2x2 submatrix for this pair

idx1, idx2 = 2*i, 2*i+1

V_sub = V[np.ix_([idx1, idx2], [idx1, idx2])]

# Compute uncertainty product (determinant of submatrix)

uncertainty_product = np.sqrt(np.linalg.det(V_sub))

uncertainty_products[step, i] = uncertainty_product

# Classify regime

if abs(uncertainty_product - min_uncertainty_product) < 0.1*min_uncertainty_product:

regimes[step, i] = "Quantum-like"

else:

regimes[step, i] = "Classical-like"

return {

'uncertainty_products': uncertainty_products,

'regimes': regimes

}

The track_uncertainty_metrics function analyzes the evolution of

uncertainty products for each position-momentum pair and classifies them

as either “quantum-like” (near minimum uncertainty) or “classical-like”

(with excess uncertainty). This classification helps us understand how

the system transitions between these regimes during entropy

maximization.

We also implement a function to detect saddle points in the gradient flow, which are critical for understanding the system’s dynamics:

# Detect saddle points in the gradient flow

def detect_saddle_points(Lambda_history):

"""

Detect saddle-like behavior in the gradient flow.

Parameters:

-----------

Lambda_history: list

History of precision matrices

Returns:

--------

saddle_metrics: dict

Metrics related to saddle point behavior

"""

n_steps = len(Lambda_history)

n_pairs = Lambda_history[0].shape[0] // 2

# Track eigenvalues and their gradients

eigenvalues_history = np.zeros((n_steps, 2*n_pairs))

gradient_ratios = np.zeros((n_steps, n_pairs))

for step, Lambda in enumerate(Lambda_history):

# Get eigenvalues

eigenvalues, _ = eigh(Lambda)

eigenvalues_history[step] = eigenvalues

# For each pair, compute ratio of gradients

if step > 0:

for i in range(n_pairs):

idx1, idx2 = 2*i, 2*i+1

# Change in eigenvalues

delta1 = abs(eigenvalues_history[step, idx1] - eigenvalues_history[step-1, idx1])

delta2 = abs(eigenvalues_history[step, idx2] - eigenvalues_history[step-1, idx2])

# Ratio of max to min (high ratio indicates saddle-like behavior)

max_delta = max(delta1, delta2)

min_delta = max(1e-10, min(delta1, delta2)) # Avoid division by zero

gradient_ratios[step, i] = max_delta / min_delta

# Identify candidate saddle points (where some gradients are much larger than others)

saddle_candidates = []

for step in range(1, n_steps):

if np.any(gradient_ratios[step] > 10): # Threshold for saddle-like behavior

saddle_candidates.append(step)

return {

'eigenvalues_history': eigenvalues_history,

'gradient_ratios': gradient_ratios,

'saddle_candidates': saddle_candidates

}

The detect_saddle_points function identifies points in the gradient

flow where some eigenvalues change much faster than others, indicating

saddle-like behavior. These saddle points are important because they

represent critical transitions in the system’s evolution.

Finally, we implement visualization functions to help us understand the system’s behavior:

# Visualize uncertainty ellipses for multiple pairs

def plot_multidimensional_uncertainty(Lambda_history, step_indices, pairs_to_plot=None):

"""

Plot the evolution of uncertainty ellipses for multiple position-momentum pairs.

Parameters:

-----------

Lambda_history: list

History of precision matrices

step_indices: list

Indices of steps to visualize

pairs_to_plot: list, optional

Indices of position-momentum pairs to plot

"""

n_pairs = Lambda_history[0].shape[0] // 2

if pairs_to_plot is None:

pairs_to_plot = range(min(3, n_pairs)) # Plot up to 3 pairs by default

fig, axes = plt.subplots(len(pairs_to_plot), len(step_indices),

figsize=(4*len(step_indices), 3*len(pairs_to_plot)))

# Handle case of single pair or single step

if len(pairs_to_plot) == 1:

axes = axes.reshape(1, -1)

if len(step_indices) == 1:

axes = axes.reshape(-1, 1)

for row, pair_idx in enumerate(pairs_to_plot):

for col, step in enumerate(step_indices):

ax = axes[row, col]

Lambda = Lambda_history[step]

covariance = np.linalg.inv(Lambda)

# Extract 2x2 submatrix for this pair

idx1, idx2 = 2*pair_idx, 2*pair_idx+1

cov_sub = covariance[np.ix_([idx1, idx2], [idx1, idx2])]

# Get eigenvalues and eigenvectors of submatrix

values, vectors = eigh(cov_sub)

# Calculate ellipse parameters

angle = np.degrees(np.arctan2(vectors[1, 0], vectors[0, 0]))

width, height = 2 * np.sqrt(values)

# Create ellipse

ellipse = Ellipse((0, 0), width=width, height=height, angle=angle,

edgecolor='blue', facecolor='lightblue', alpha=0.5)

# Add to plot

ax.add_patch(ellipse)

ax.set_xlim(-3, 3)

ax.set_ylim(-3, 3)

ax.set_aspect('equal')

ax.grid(True)

# Add minimum uncertainty circle

min_circle = plt.Circle((0, 0), min_uncertainty_product,

fill=False, color='red', linestyle='--')

ax.add_patch(min_circle)

# Compute uncertainty product

uncertainty_product = np.sqrt(np.linalg.det(cov_sub))

# Determine regime

if abs(uncertainty_product - min_uncertainty_product) < 0.1*min_uncertainty_product:

regime = "Quantum-like"

color = 'red'

else:

regime = "Classical-like"

color = 'blue'

# Add labels

if row == 0:

ax.set_title(f"Step {step}")

if col == 0:

ax.set_ylabel(f"Pair {pair_idx+1}")

# Add uncertainty product text

ax.text(0.05, 0.95, f"ΔxΔp = {uncertainty_product:.2f}",

transform=ax.transAxes, fontsize=10, verticalalignment='top')

# Add regime text

ax.text(0.05, 0.85, regime, transform=ax.transAxes,

fontsize=10, verticalalignment='top', color=color)

ax.set_xlabel("Position")

ax.set_ylabel("Momentum")

plt.tight_layout()

return fig

The plot_multidimensional_uncertainty function visualizes the

uncertainty ellipses for multiple position-momentum pairs at different

steps of the gradient ascent process. These visualizations help us

understand how the system transitions from quantum-like to

classical-like regimes.

This implementation builds on the InformationReservoir class we saw

earlier, but generalizes to multiple position-momentum pairs and focuses

specifically on the uncertainty relationships. The key connection is

that both implementations track how systems naturally evolve from

minimal entropy states (with quantum-like uncertainty relations) toward

maximum entropy states (with classical-like uncertainty relations).

As the system evolves through gradient ascent, we observe transitions.

Uncertainty desaturation: The system begins with a minimal entropy state that exactly saturates the uncertainty bound (Δx⋅Δp=ℏ/2). As entropy increases, this bound becomes less tightly saturated.

Shape transformation: The initial highly squeezed uncertainty ellipse (with small position uncertainty and large momentum uncertainty) gradually becomes more circular, representing a more balanced distribution of uncertainty.

Quantum-to-classical transition: The system transitions from a quantum-like regime (where uncertainty is at the minimum allowed by quantum mechanics) to a more classical-like regime (where statistical uncertainty dominates over quantum uncertainty).

This evolution reveals how information naturally flows from highly ordered configurations toward maximum entropy states, while still respecting the fundamental constraints imposed by the uncertainty principle.

In systems with multiple position-momentum pairs, the gradient ascent process encounters saddle points which trigger a natural slowdown. The system naturally slows down near saddle points, with some eigenvalue pairs evolving quickly while others hardly change. These saddle points represent partially equilibrated states where some degrees of freedom have reached maximum entropy while others remain ordered. At these critical points, some variables maintain quantum-like characteristics (uncertainty saturation) while others exhibit classical-like behavior (excess uncertainty).

This natural separation creates a hybrid system where quantum-like memory interfaces with classical-like processing - emerging naturally from the geometry of the entropy landscape under uncertainty constraints.

import numpy as np

from scipy.linalg import eigh

# Constants

hbar = 1.0 # Normalized Planck's constant

min_uncertainty_product = hbar/2

# Verify gradient calculation

print("Testing gradient calculation:")

test_Lambda = np.array([[2.0, 0.5], [0.5, 1.0]]) # Example precision matrix

analytical_grad, numerical_grad = check_entropy_gradient(test_Lambda)

# Verify if we're ascending or descending

entropy_before = compute_entropy(test_Lambda)

eigenvalues, eigenvectors = eigh(test_Lambda)

step_size = 0.01

eigenvalues_after = eigenvalues + step_size * analytical_grad

test_Lambda_after = eigenvectors @ np.diag(eigenvalues_after) @ eigenvectors.T

entropy_after = compute_entropy(test_Lambda_after)

print(f"Entropy before step: {entropy_before}")

print(f"Entropy after step: {entropy_after}")

print(f"Change in entropy: {entropy_after - entropy_before}")

if entropy_after > entropy_before:

print("We are ascending the entropy gradient")

else:

print("We are descending the entropy gradient")

test_grad = compute_entropy_gradient(test_Lambda)

print(f"Precision matrix:\n{test_Lambda}")

print(f"Entropy gradient:\n{test_grad}")

print(f"Entropy: {compute_entropy(test_Lambda):.4f}")

# Initialize system with 2 position-momentum pairs

n_pairs = 2

Lambda_init = initialize_multidimensional_state(n_pairs, squeeze_factors=[0.1, 0.5])

# Run gradient ascent

n_steps = 100

Lambda_history, entropy_history = gradient_ascent_entropy(Lambda_init, n_steps, learning_rate=0.01)

# Track metrics

uncertainty_metrics = track_uncertainty_metrics(Lambda_history)

saddle_metrics = detect_saddle_points(Lambda_history)

# Print results

print("\nFinal entropy:", entropy_history[-1])

print("Initial uncertainty products:", uncertainty_metrics['uncertainty_products'][0])

print("Final uncertainty products:", uncertainty_metrics['uncertainty_products'][-1])

print("Saddle point candidates at steps:", saddle_metrics['saddle_candidates'])

# Plot entropy evolution

plt.figure(figsize=plot.big_wide_figsize)

plt.plot(entropy_history)

plt.xlabel('Gradient Ascent Step')

plt.ylabel('Entropy')

plt.title('Entropy Evolution During Gradient Ascent')

plt.grid(True)

mlai.write_figure(filename='entropy-evolution-during-gradient-ascent.svg',

directory='./information-game')

# Plot uncertainty products evolution

plt.figure(figsize=plot.big_wide_figsize)

for i in range(n_pairs):

plt.plot(uncertainty_metrics['uncertainty_products'][:, i],

label=f'Pair {i+1}')

plt.axhline(y=min_uncertainty_product, color='k', linestyle='--',

label='Minimum uncertainty')

plt.xlabel('Gradient Ascent Step')

plt.ylabel('Uncertainty Product (ΔxΔp)')

plt.title('Evolution of Uncertainty Products')

plt.legend()

plt.grid(True)

mlai.write_figure(filename='uncertainty-products-evolution.svg',

directory='./information-game')

# Plot uncertainty ellipses at key steps

step_indices = [0, 20, 50, 99] # Initial, early, middle, final

plot_multidimensional_uncertainty(Lambda_history, step_indices)

# Plot eigenvalues evolution

plt.subplots(figsize=plot.big_wide_figsize)

for i in range(2*n_pairs):

plt.semilogy(saddle_metrics['eigenvalues_history'][:, i],

label=f'$\\lambda_{i+1}$')

plt.xlabel('Gradient Ascent Step')

plt.ylabel('Eigenvalue (log scale)')

plt.title('Evolution of Precision Matrix Eigenvalues')

plt.legend()

plt.grid(True)

plt.tight_layout()

mlai.write_figure(filename='eigenvalue-evolution.svg',

directory='./information-game')

Figure: Eigenvalue evolution during gradient ascent.

Figure: Uncertainty products evolution during gradient ascent.

Figure: Entropy evolution during gradient ascent.

Figure: .

Visualising the Parameter-Capacity Uncertainty Principle¶

[edit]

The uncertainty principle between parameters θ and capacity variables c is a fundamental feature of information reservoirs. We can visualize this uncertainty relation using phase space plots.

We can demonstrate how the uncertainty principle manifests in different regimes:

Quantum-like regime: Near minimal entropy, the uncertainty product Δθ⋅Δc approaches the lower bound k, creating wave-like interference patterns in probability space.

Transitional regime: As entropy increases, uncertainty relations begin to decouple, with Δθ⋅Δc>k.

Classical regime: At high entropy, parameter uncertainty dominates, creating diffusion-like dynamics with minimal influence from uncertainty relations.

The visualization shows probability distributions for these three regimes in both parameter space and capacity space.

import numpy as np

import matplotlib.pyplot as plt

import mlai.plot as plot

import mlai

from matplotlib.patches import Ellipse

# Visualization of uncertainty ellipses

fig, ax = plt.subplots(figsize=plot.big_figsize)

# Parameters for uncertainty ellipses

k = 1 # Uncertainty constant

centers = [(0, 0), (2, 2), (4, 4)]

widths = [0.25, 0.5, 2]

heights = [4, 2.5, 2]

#heights = [k/w for w in widths]

colors = ['blue', 'green', 'red']

labels = ['Quantum-like', 'Transitional', 'Classical']

# Plot uncertainty ellipses

for center, width, height, color, label in zip(centers, widths, heights, colors, labels):

ellipse = Ellipse(center, width, height,

edgecolor=color, facecolor='none',

linewidth=2, label=label)

ax.add_patch(ellipse)

# Add text label

ax.text(center[0], center[1] + height/2 + 0.2,

label, ha='center', color=color)

# Add area label (uncertainty product)

area = width * height

ax.text(center[0], center[1] - height/2 - 0.3,

f'Area = {width:.2f} $\\times$ {height: .2f} $\\pi$', ha='center')

# Set axis labels and limits

ax.set_xlabel('Parameter $\\theta$')

ax.set_ylabel('Capacity $C$')

ax.set_xlim(-3, 7)

ax.set_ylim(-3, 7)

ax.set_aspect('equal')

ax.grid(True, linestyle='--', alpha=0.7)

ax.set_title('Parameter-Capacity Uncertainty Relation')

# Add diagonal line representing constant uncertainty product

x = np.linspace(0.25, 6, 100)

y = k/x

ax.plot(x, y, 'k--', alpha=0.5, label='Minimum uncertainty: $\\Delta \\theta \\Delta C = k$')

ax.legend(loc='upper right')

mlai.write_figure(filename='uncertainty-ellipses.svg',

directory = './information-game')

Figure: Visualisaiton of the uncertainty trade-off between parameter precision and capacity.

This visualization helps explain why information reservoirs with quantum-like properties naturally emerge at minimal entropy. The uncertainty principle is not imposed but arises naturally from the constraints of Shannon information theory applied to physical systems operating at minimal entropy.

Scaling to Large Systems: Emergent Statistical Behavior¶

[edit]

We now extend our analysis to much larger systems with thousands of position-momentum pairs. This allows us to observe emergent statistical behaviors and phase transitions that aren’t apparent in smaller systems.

Large-scale systems reveal how microscopic uncertainty constraints lead to macroscopic statistical patterns. By analyzing thousands of position-momentum pairs simultaneously, we can identify emergent behaviors and natural clustering of dynamical patterns.

# Optimized implementation for very large systems

def large_scale_gradient_ascent(n_pairs, steps=100, learning_rate=1, sample_interval=5):

"""

Memory-efficient implementation of gradient ascent for very large systems.

Parameters:

-----------

n_pairs: int

Number of position-momentum pairs

steps: int

Number of gradient steps to take

learning_rate: float

Step size for gradient ascent

sample_interval: int

Store state every sample_interval steps to save memory

Returns:

--------

sampled_states: list

Sparse history of states at sampled intervals

entropy_history: list

Complete history of entropy values

uncertainty_metrics: dict

Metrics tracking uncertainty products over time

"""

# Initialize with diagonal precision matrix (no need to store full matrix)

dim = 2 * n_pairs

eigenvalues = np.zeros(dim)

# Initialize with minimal entropy state

for i in range(n_pairs):

squeeze = 0.1 * (1 + (i % 10)) # Cycle through 10 different squeeze factors

eigenvalues[2*i] = 1.0 / (squeeze * min_uncertainty_product)

eigenvalues[2*i+1] = 1.0 / (min_uncertainty_product / squeeze)

# Storage for results (sparse to save memory)

sampled_states = []

entropy_history = []

uncertainty_products = np.zeros((steps+1, n_pairs))

# Initial entropy and uncertainty

entropy = 0.5 * (dim * (1 + np.log(2*np.pi)) - np.sum(np.log(eigenvalues)))

entropy_history.append(entropy)

# Track initial uncertainty products

for i in range(n_pairs):

uncertainty_products[0, i] = 1.0 / np.sqrt(eigenvalues[2*i] * eigenvalues[2*i+1])

# Store initial state

sampled_states.append(eigenvalues.copy())

# Gradient ascent loop

for step in range(steps):

# Compute gradient with respect to eigenvalues (diagonal precision)

grad = -1.0 / (2.0 * eigenvalues)

# Project gradient to respect constraints

for i in range(n_pairs):

idx1, idx2 = 2*i, 2*i+1

# Current uncertainty product (in eigenvalue space, this is inverse)

current_product = eigenvalues[idx1] * eigenvalues[idx2]

# If we're already at minimum uncertainty, project gradient

if abs(current_product - 1/min_uncertainty_product**2) < 1e-6:

# Tangent direction preserves the product

tangent = np.array([-eigenvalues[idx2], -eigenvalues[idx1]])

tangent = tangent / np.linalg.norm(tangent)

# Project the gradient onto this tangent

pair_gradient = np.array([grad[idx1], grad[idx2]])

projection = np.dot(pair_gradient, tangent) * tangent

grad[idx1] = projection[0]

grad[idx2] = projection[1]

# Update eigenvalues

eigenvalues += learning_rate * grad

# Ensure eigenvalues remain positive

eigenvalues = np.maximum(eigenvalues, 1e-10)

# Compute entropy

entropy = 0.5 * (dim * (1 + np.log(2*np.pi)) - np.sum(np.log(eigenvalues)))

entropy_history.append(entropy)

# Track uncertainty products

for i in range(n_pairs):

uncertainty_products[step+1, i] = 1.0 / np.sqrt(eigenvalues[2*i] * eigenvalues[2*i+1])

# Store state at sampled intervals

if step % sample_interval == 0 or step == steps-1:

sampled_states.append(eigenvalues.copy())

# Compute regime classifications

regimes = np.zeros((steps+1, n_pairs), dtype=object)

for step in range(steps+1):

for i in range(n_pairs):

if abs(uncertainty_products[step, i] - min_uncertainty_product) < 0.1*min_uncertainty_product:

regimes[step, i] = "Quantum-like"

else:

regimes[step, i] = "Classical-like"

uncertainty_metrics = {

'uncertainty_products': uncertainty_products,

'regimes': regimes

}

return sampled_states, entropy_history, uncertainty_metrics

# Add gradient check function for large systems

def check_large_system_gradient(n_pairs=10, epsilon=1e-6):

"""

Check the analytical gradient against numerical gradient for a large system.

Parameters:

-----------

n_pairs: int

Number of position-momentum pairs to test

epsilon: float

Small perturbation for numerical gradient

Returns:

--------

max_diff: float

Maximum difference between analytical and numerical gradients

"""

# Initialize a small test system

dim = 2 * n_pairs

eigenvalues = np.zeros(dim)

# Initialize with minimal entropy state

for i in range(n_pairs):

squeeze = 0.1 * (1 + (i % 10))

eigenvalues[2*i] = 1.0 / (squeeze * min_uncertainty_product)

eigenvalues[2*i+1] = 1.0 / (min_uncertainty_product / squeeze)

# Compute analytical gradient

analytical_grad = -1.0 / (2.0 * eigenvalues)

# Compute numerical gradient

numerical_grad = np.zeros_like(eigenvalues)

# Function to compute entropy from eigenvalues

def compute_entropy_from_eigenvalues(evals):

return 0.5 * (dim * (1 + np.log(2*np.pi)) - np.sum(np.log(evals)))

# Initial entropy

base_entropy = compute_entropy_from_eigenvalues(eigenvalues)

# Compute numerical gradient

for i in range(dim):

# Perturb eigenvalue up

eigenvalues_plus = eigenvalues.copy()

eigenvalues_plus[i] += epsilon

entropy_plus = compute_entropy_from_eigenvalues(eigenvalues_plus)

# Perturb eigenvalue down

eigenvalues_minus = eigenvalues.copy()

eigenvalues_minus[i] -= epsilon

entropy_minus = compute_entropy_from_eigenvalues(eigenvalues_minus)

# Compute numerical gradient

numerical_grad[i] = (entropy_plus - entropy_minus) / (2 * epsilon)

# Compare

diff = np.abs(analytical_grad - numerical_grad)

max_diff = np.max(diff)

avg_diff = np.mean(diff)

print(f"Gradient check for {n_pairs} position-momentum pairs:")