Example of Using a Raw Image Dataset in the Feature Store

Image datasets for training machine learning models are often stored in binary container formats such as TFRecords or Parquet. However, it can sometimes be useful to store a large image dataset as a folder with one file per image, for example as .jpg or .png files.

This notebook demonstrates how to create a training dataset of .jpg files in the Hopsworks Feature Store.

from hops import featurestore

from hops import hdfs

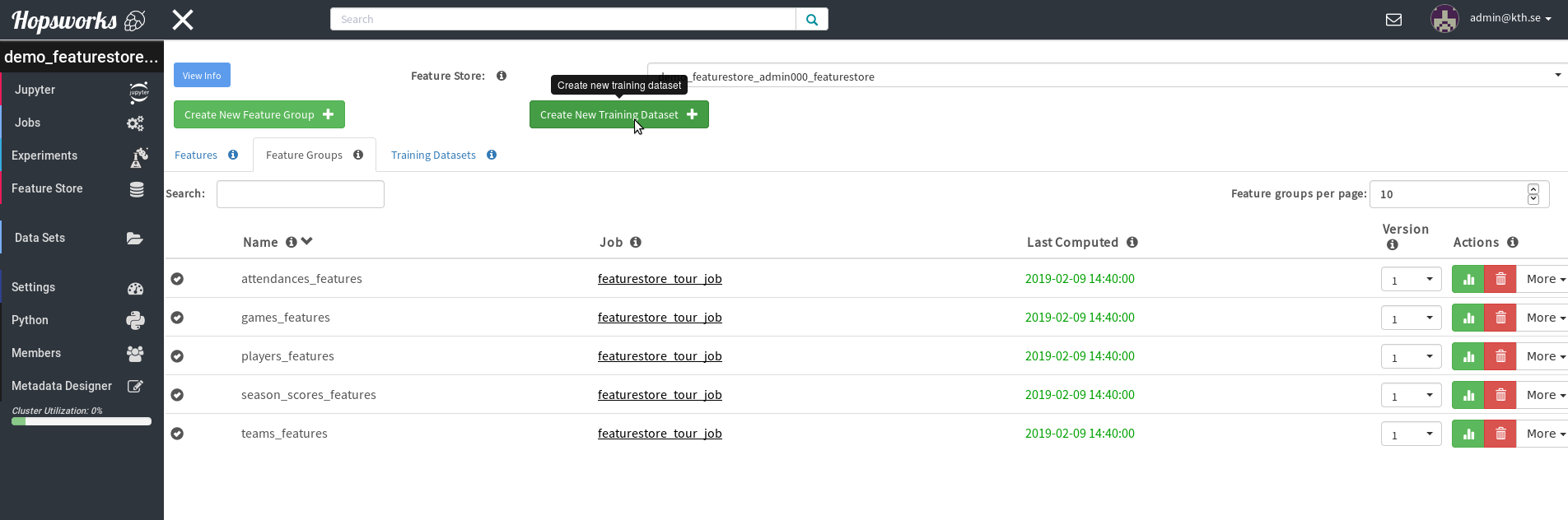

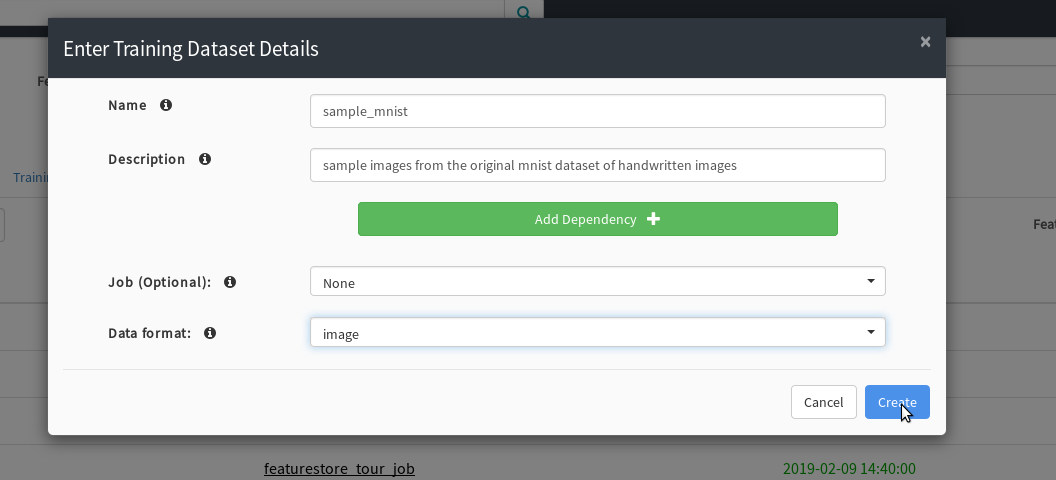

Step 1: Create a Placeholder Training Dataset from the Featurestore Registry

First, create the training dataset from the Feature Store registry UI in Hopsworks. This creates the metadata of the training dataset and a folder in HDFS in which to store the dataset.

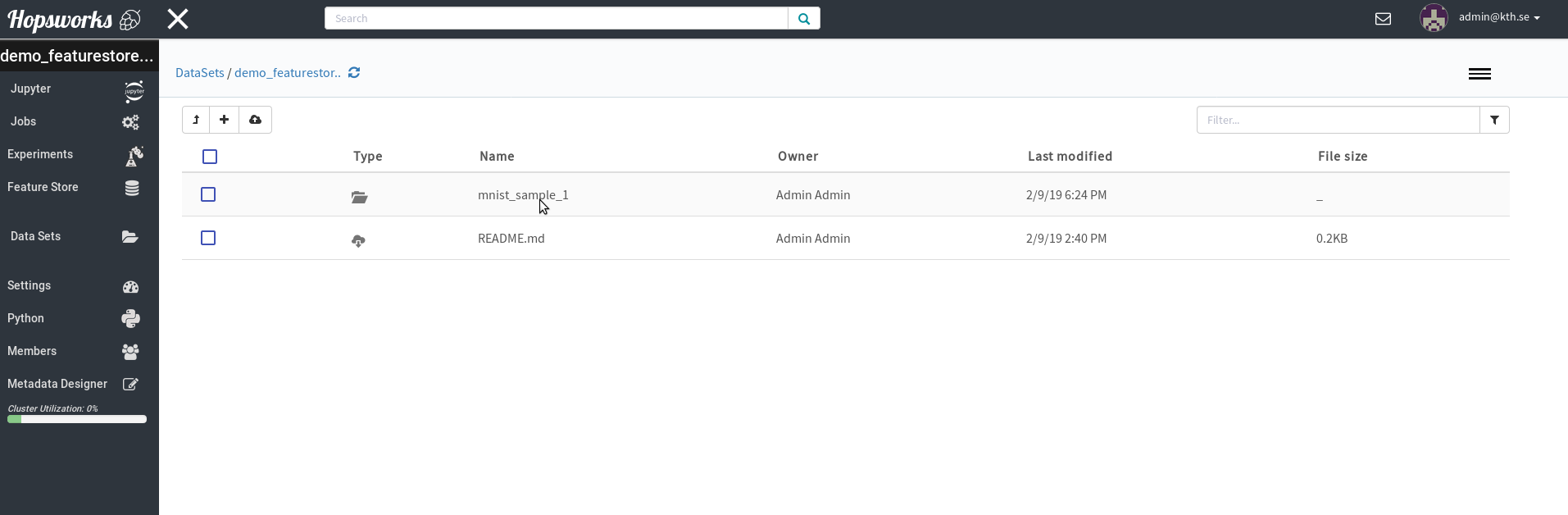

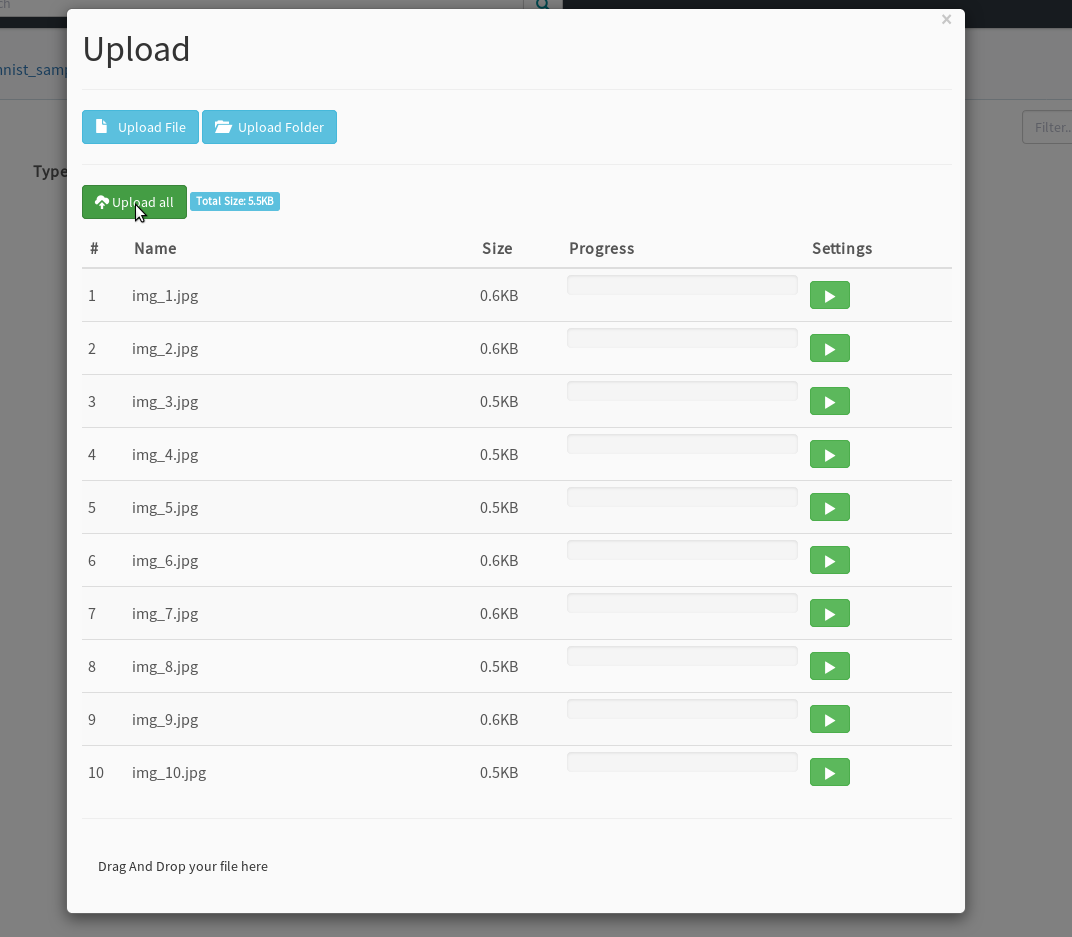

Step 2: Uploading the Images

Formats such as .jpg and .png are not designed to be written with big data tools, so we recommend uploading the raw images directly through the Dataset-Service in Hopsworks.

The images belong in a folder called <datasetname>_<version> inside the dataset that contains your training datasets (<projectname>_Training_Datasets). You can get the path directly from the API:

featurestore.get_training_dataset_path("sample_mnist")

'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1'
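To make the naming convention concrete, the sketch below rebuilds the path shown above from its parts. The helper `training_dataset_dir` and its parameters are hypothetical and are not part of the `hops` library; in practice `featurestore.get_training_dataset_path` remains the way to obtain the path.

```python
def training_dataset_dir(project_root, project_name, dataset_name, version=1):
    """Join the parts of a training dataset folder path.

    Hypothetical helper for illustration only. It follows the layout
    <project_root>/<projectname>_Training_Datasets/<datasetname>_<version>.
    """
    container = f"{project_name}_Training_Datasets"
    folder = f"{dataset_name}_{version}"
    return "/".join([project_root.rstrip("/"), container, folder])


# Values taken from the example output above.
root = "hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000"
path = training_dataset_dir(root, "demo_featurestore_admin000", "sample_mnist", version=1)
print(path)
```

The version suffix means that a second version of the same dataset would be stored in a sibling folder, assuming the same convention applies to later versions.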

Step 3: Read the Training Dataset into a Spark Dataframe or a TensorFlow Dataset

Both Spark and TensorFlow can read .jpg and .png images directly from HDFS.

Reading an Image Dataset with Spark

images_df = featurestore.get_training_dataset("sample_mnist")

images_df.printSchema()

root
 |-- image: struct (nullable = true)
 |    |-- origin: string (nullable = true)
 |    |-- height: integer (nullable = true)
 |    |-- width: integer (nullable = true)
 |    |-- nChannels: integer (nullable = true)
 |    |-- mode: integer (nullable = true)
 |    |-- data: binary (nullable = true)

images_df.show(5)

+--------------------+
|               image|
+--------------------+
|[hdfs://10.0.2.15...|
|[hdfs://10.0.2.15...|
|[hdfs://10.0.2.15...|
|[hdfs://10.0.2.15...|
|[hdfs://10.0.2.15...|
+--------------------+
only showing top 5 rows
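The `data` field in the schema above holds the raw pixel bytes, and `height`, `width`, and `nChannels` describe how to interpret them. The following is a minimal sketch of turning those fields into a NumPy array. It assumes 8-bit unsigned pixels stored row by row, which the `mode` field would need to confirm for a real dataset. The function name `image_struct_to_array` is hypothetical, and the input here is synthetic rather than read from Spark.

```python
import numpy as np


def image_struct_to_array(height, width, n_channels, data):
    """Reshape raw image bytes into a (height, width, n_channels) array.

    Assumption: one unsigned byte per channel value, stored row by row.
    """
    expected = height * width * n_channels
    if len(data) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(data)}")
    return np.frombuffer(data, dtype=np.uint8).reshape(height, width, n_channels)


# Synthetic stand-in for the fields of one image struct (2x3 pixels, 1 channel).
fake_data = bytes(range(6))
pixels = image_struct_to_array(height=2, width=3, n_channels=1, data=fake_data)
print(pixels.shape)
```

With a real DataFrame, the arguments would presumably come from a collected row, for example `row.image.height` and `row.image.data` after `row = images_df.first()`.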

Reading an Image Dataset with TensorFlow

import tensorflow as tf

import numpy as np

def build_tf_graph(images_dir):
    """
    A simple computational graph for reading in a jpg into a tensor
    """
    img_filenames = tf.gfile.Glob(images_dir + "/*.jpg")
    num_images = len(img_filenames)
    img_queue = tf.train.string_input_producer(img_filenames)
    img_reader = tf.WholeFileReader()
    # Operation for reading a single file from the queue
    file_name_op, file_contents_op = img_reader.read(img_queue)
    # Operation for decoding JPEG to tensor
    img_to_tensor_op = tf.image.decode_jpeg(file_contents_op)
    return img_to_tensor_op, file_name_op, num_images

def run_graph_for_reading_images(sess, images_dir, num_images, img_op, file_name_op):
    """
    Run the tf-graph for reading all images into tensors
    """
    image_tensors = []
    image_filenames_read = []
    for i in range(num_images):
        # These two ops must be run in the same call to sess.run(); otherwise
        # the tensors and file names go out of sync, which breaks any labels
        # derived from the file names (for example for a validation set).
        img_tensor, file_name_str = sess.run([img_op, file_name_op])
        image_tensors.append(img_tensor)
        image_filenames_read.append(file_name_str)
    return np.array(image_tensors), np.array(image_filenames_read)

def init_graph():
    """
    Initialize the graph and variables for Tensorflow engine
    """
    # get operation for initializing the global variables in the graph
    init_g = tf.global_variables_initializer()
    # create a session for encapsulating the environment where
    # operations can be run and tensors can be evaluated
    sess = tf.Session()
    # run the initialization operation
    sess.run(init_g)
    return sess

def setup_tf():
    """
    Setup TF session
    """
    # Initialize TF
    sess = init_graph()
    # Get a coordinator so that the reader threads can be managed
    coord = tf.train.Coordinator()
    # Start all queue runners in the graph and return the list of threads
    threads = tf.train.start_queue_runners(coord=coord, sess=sess)
    return sess, coord, threads

images_dir = featurestore.get_training_dataset_path("sample_mnist")

img_to_tensor_op, file_name_op, num_images = build_tf_graph(images_dir)

sess, coord, threads = setup_tf()

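image_tensors, image_filenames_read = run_graph_for_reading_images(
    sess,
    images_dir,
    num_images,
    img_to_tensor_op,
    file_name_op
)

# Stop the queue runner threads and release the session once all images are read
coord.request_stop()
coord.join(threads)
sess.close()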

image_filenames_read

array([b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_4.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_1.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_3.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_10.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_2.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_6.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_7.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_9.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_8.jpg',

b'hdfs://10.0.2.15:8020/Projects/demo_featurestore_admin000/demo_featurestore_admin000_Training_Datasets/sample_mnist_1/img_5.jpg'],

dtype='|S128')
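The file names above are not in numeric order, which is expected because `tf.train.string_input_producer` shuffles its input by default. If a stable order is needed, for example to line the images up with a separate list of labels, one option is to reorder both arrays by the index embedded in each file name. The sketch below assumes the `img_<n>.jpg` naming pattern seen in the output; the helper `sort_by_image_index` is hypothetical, and the sample values are placeholders rather than real tensors.

```python
import re


def sort_by_image_index(filenames, items):
    """Reorder filenames and items by the integer in each 'img_<n>.jpg' name."""
    def index_of(name):
        # File names read through the TensorFlow queue are byte strings.
        text = name.decode("utf-8") if isinstance(name, bytes) else name
        match = re.search(r"img_(\d+)\.jpg$", text)
        if match is None:
            raise ValueError(f"unexpected file name: {text}")
        return int(match.group(1))

    order = sorted(range(len(filenames)), key=lambda i: index_of(filenames[i]))
    return [filenames[i] for i in order], [items[i] for i in order]


# Placeholder data standing in for image_filenames_read and image_tensors.
names = [b"/sample_mnist_1/img_4.jpg", b"/sample_mnist_1/img_1.jpg", b"/sample_mnist_1/img_10.jpg"]
tensors = ["tensor_4", "tensor_1", "tensor_10"]
sorted_names, sorted_tensors = sort_by_image_index(names, tensors)
print(sorted_tensors)  # ['tensor_1', 'tensor_4', 'tensor_10']
```

Sorting on the parsed integer rather than on the raw string keeps `img_10.jpg` after `img_9.jpg`, which a plain lexicographic sort would not.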

image_tensors[0].shape

(28, 28, 1)