Consuming Messages from Kafka Tour Producer Using PySpark

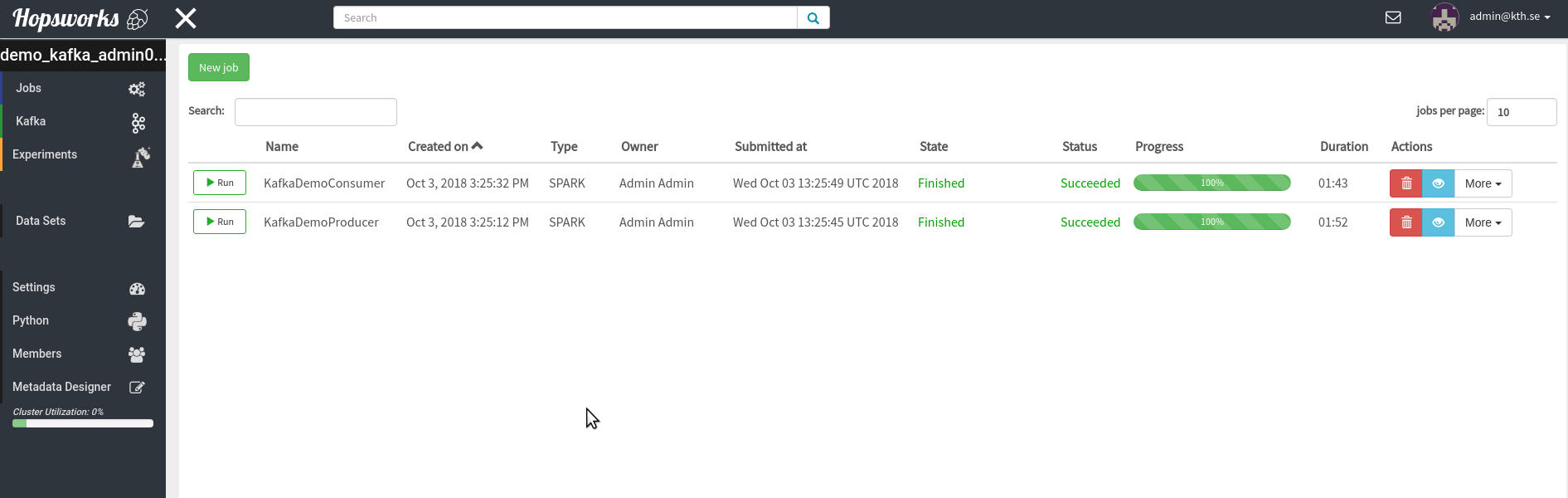

To run this notebook, you should have completed the Kafka tour and created the Producer and Consumer jobs; that is, your Jobs UI should look like this:

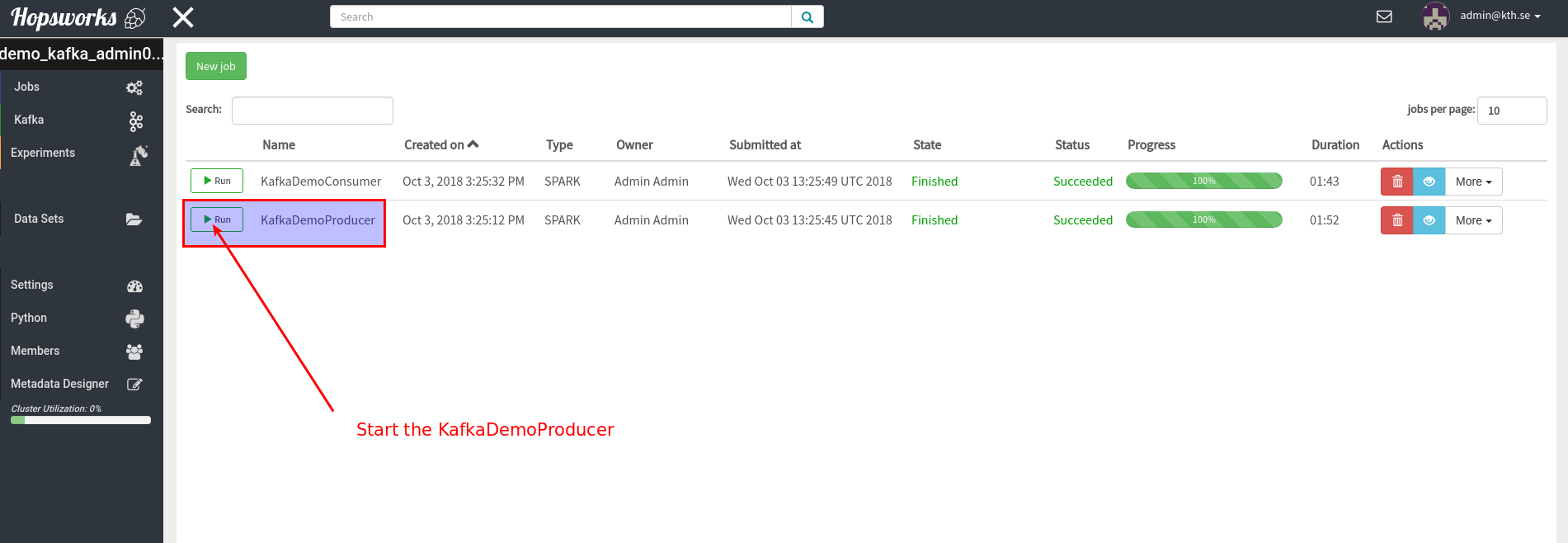

In this notebook we consume messages from Kafka that were produced by the producer job created in the Kafka tour. Before running it, go to the Jobs UI in Hopsworks and start the Kafka producer job.

Imports

We use utility functions from the hops library to simplify the Kafka configuration.

Dependencies:

- hops-util-py (imported as `hops`)
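Before running the import cell, a quick check like the one below can tell you which modules are missing from the kernel's environment. This is a minimal stdlib-only sketch; the module list is an assumption taken from the imports in this notebook, so adjust it to match your setup.

```python
import importlib.util

# Top-level module names used by the import cell below (assumed list).
REQUIRED_MODULES = ["hops", "confluent_kafka", "numpy", "pyspark"]


def missing_modules(names):
    """Return the names whose top-level module cannot be found on sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are available.")
```

`find_spec` only locates the module without importing it, so the check is cheap and has no side effects.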

from hops import kafka

from hops import tls

from hops import hdfs

from confluent_kafka import Producer, Consumer

import numpy as np

from pyspark.sql.types import StructType, StructField, FloatType, TimestampType

Constants

Update TOPIC_NAME to the name of the Kafka topic you created in the Kafka tour (e.g., "DemoKafkaTopic_3").

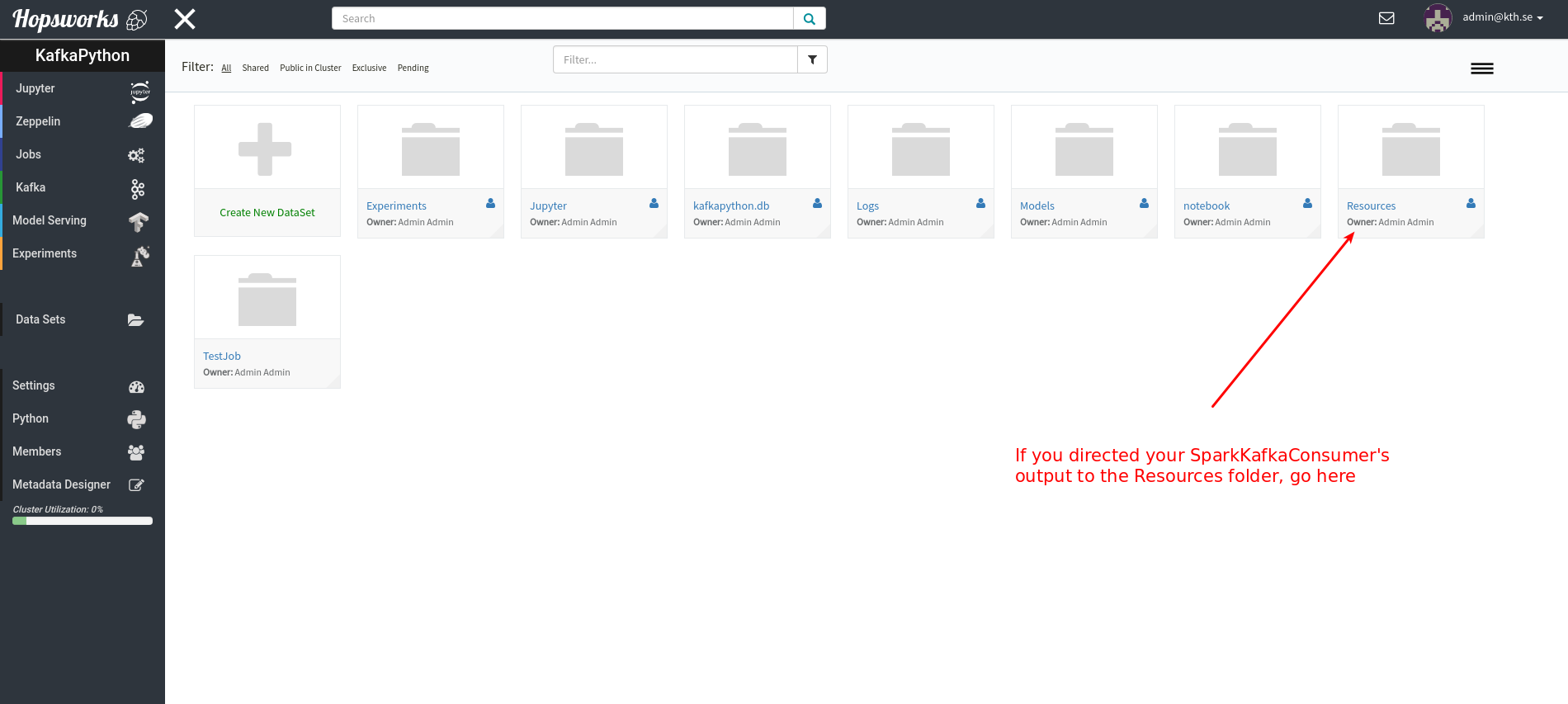

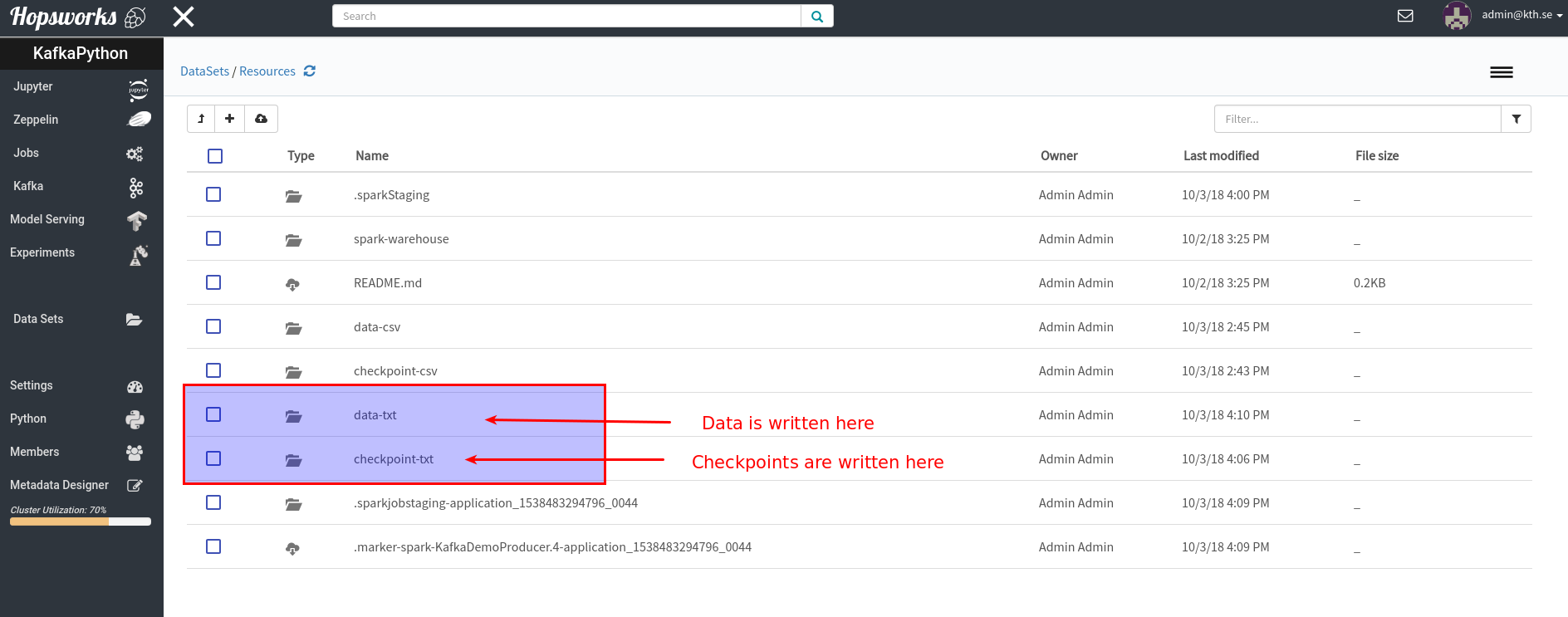

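Update OUTPUT_PATH to the HopsFS directory where the output data should be written, and CHECKPOINT_PATH to the directory where Spark should store the streaming checkpoint.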

TOPIC_NAME = "test2"

OUTPUT_PATH = "/Projects/" + hdfs.project_name() + "/Resources/data-txt"

CHECKPOINT_PATH = "/Projects/" + hdfs.project_name() + "/Resources/checkpoint-txt"

Consume the Kafka Topic using Spark and Write to a Sink

The snippet below creates a streaming DataFrame with Kafka as the data source. Spark evaluates lazily, so no data is read from Kafka until we define an output sink and start the query, which we do later in this notebook.

# Lazy: nothing is read from Kafka until a sink query is started below.
# `spark` is the SparkSession provided by the PySpark kernel.
df = spark \
    .readStream \
    .format("kafka") \
    .option("kafka.bootstrap.servers", kafka.get_broker_endpoints()) \
    .option("kafka.security.protocol", kafka.get_security_protocol()) \
    .option("kafka.ssl.truststore.location", tls.get_trust_store()) \
    .option("kafka.ssl.truststore.password", tls.get_trust_store_pwd()) \
    .option("kafka.ssl.keystore.location", tls.get_key_store()) \
    .option("kafka.ssl.keystore.password", tls.get_key_store_pwd()) \
    .option("kafka.ssl.key.password", tls.get_key_store_pwd()) \
    .option("subscribe", TOPIC_NAME) \
    .load()
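Spark forwards every source option prefixed with `kafka.` to the underlying Kafka consumer, which is why the SSL settings above all carry that prefix. If you prefer to keep the connection settings in one place, a sketch like the following builds them as a dictionary. The helper name `kafka_ssl_options` and the placeholder values are hypothetical; in the notebook the real values come from the `kafka` and `tls` helpers used above.

```python
def kafka_ssl_options(bootstrap_servers, security_protocol,
                      truststore_path, truststore_password,
                      keystore_path, keystore_password, key_password):
    """Hypothetical helper: collect the Kafka source options used above.

    Each key keeps the "kafka." prefix so that Spark passes it through
    to the Kafka consumer configuration.
    """
    return {
        "kafka.bootstrap.servers": bootstrap_servers,
        "kafka.security.protocol": security_protocol,
        "kafka.ssl.truststore.location": truststore_path,
        "kafka.ssl.truststore.password": truststore_password,
        "kafka.ssl.keystore.location": keystore_path,
        "kafka.ssl.keystore.password": keystore_password,
        "kafka.ssl.key.password": key_password,
    }


# Placeholder values for illustration only; in the notebook use
# kafka.get_broker_endpoints(), tls.get_trust_store(), and so on.
opts = kafka_ssl_options(
    "broker1:9091", "SSL",
    "/path/to/truststore.jks", "changeit",
    "/path/to/keystore.jks", "changeit", "changeit",
)
for key in sorted(opts):
    print(key)
```

With the real values, the dictionary can be applied in one call, for example `spark.readStream.format("kafka").options(**opts).option("subscribe", TOPIC_NAME).load()`.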

With Kafka as the data source, Spark gives the streaming DataFrame a fixed schema, printed below:

df.printSchema()

root
 |-- key: binary (nullable = true)
 |-- value: binary (nullable = true)
 |-- topic: string (nullable = true)
 |-- partition: integer (nullable = true)
 |-- offset: long (nullable = true)
 |-- timestamp: timestamp (nullable = true)
 |-- timestampType: integer (nullable = true)

Because this is Spark Structured Streaming, we can express queries on the stream with the same DataFrame operations we would use in a batch job.

Below, we select only the message values from the input stream, casting them from binary to strings:

messages = df.selectExpr("CAST(value AS STRING)")
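The `key` and `value` columns arrive as raw bytes, and `CAST(value AS STRING)` interprets those bytes as UTF-8 text. The plain-Python sketch below mimics that step on a few made-up records shaped like the schema printed above (only some columns are included). Decoding with `errors="replace"` is a choice of this sketch, not a claim about how Spark handles invalid byte sequences.

```python
# Made-up records with a subset of the Kafka source columns.
records = [
    {"key": None, "value": b"hello", "topic": "test2", "partition": 0, "offset": 0},
    {"key": b"k1", "value": "caf\u00e9".encode("utf-8"), "topic": "test2", "partition": 0, "offset": 1},
    {"key": None, "value": None, "topic": "test2", "partition": 0, "offset": 2},
]


def values_as_strings(rows):
    """Mimic CAST(value AS STRING): decode the binary value as UTF-8, keep nulls as None."""
    return [
        None if row["value"] is None else row["value"].decode("utf-8", errors="replace")
        for row in rows
    ]


print(values_as_strings(records))
```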

Next, we define the output query, with text files in HopsFS as the sink of the streaming job.

With checkpointing and write-ahead logs, Spark can provide end-to-end exactly-once guarantees for this pipeline, because Kafka is a replayable source and the file sink is idempotent.

# start() launches the streaming query in the background and returns a StreamingQuery handle.
query = messages \
    .writeStream \
    .format("text") \
    .option("path", OUTPUT_PATH) \
    .option("checkpointLocation", CHECKPOINT_PATH) \
    .start()
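Why a replayable source plus a checkpoint avoids duplicate output can be illustrated with a toy model. The sketch below is not Spark's implementation (Spark keeps offset and commit logs in the checkpoint directory and a `_spark_metadata` log for the file sink); all names here are hypothetical. It only shows the principle: each micro-batch writes to a file keyed by its batch id, and the checkpoint advances only after the write, so a batch replayed after a crash overwrites its earlier output instead of duplicating it.

```python
import json
import os
import tempfile


def run_micro_batch(log, out_dir, ckpt_path, batch_size, crash_before_commit=False):
    """Process one micro-batch from the replayable `log`; return the number of records written."""
    # Read the last committed state, or start from the beginning of the log.
    state = {"batch_id": 0, "next_offset": 0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)
    start = state["next_offset"]
    end = min(start + batch_size, len(log))
    if start == end:
        return 0  # no new records in the source
    # Deterministic file name per batch id: a replay overwrites, never appends.
    out_file = os.path.join(out_dir, "batch-%d.txt" % state["batch_id"])
    with open(out_file, "w") as f:
        f.write("\n".join(log[start:end]) + "\n")
    if crash_before_commit:
        raise RuntimeError("simulated crash after the sink write, before the commit")
    # Commit: write the new state to a temp file, then atomically rename it.
    tmp_path = ckpt_path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"batch_id": state["batch_id"] + 1, "next_offset": end}, f)
    os.replace(tmp_path, ckpt_path)
    return end - start


def read_output(out_dir):
    """Concatenate the lines of all batch files in batch-file name order."""
    lines = []
    for name in sorted(os.listdir(out_dir)):
        with open(os.path.join(out_dir, name)) as f:
            lines.extend(f.read().splitlines())
    return lines


log = ["msg-%d" % i for i in range(5)]  # stands in for a Kafka partition
with tempfile.TemporaryDirectory() as tmp:
    out_dir = os.path.join(tmp, "out")
    os.mkdir(out_dir)
    ckpt = os.path.join(tmp, "checkpoint.json")
    run_micro_batch(log, out_dir, ckpt, batch_size=2)  # batch 0 committed
    try:
        run_micro_batch(log, out_dir, ckpt, batch_size=2, crash_before_commit=True)
    except RuntimeError:
        pass  # batch 1 was written but not committed
    while run_micro_batch(log, out_dir, ckpt, batch_size=2):  # restart replays batch 1
        pass
    result = read_output(out_dir)
print(result)
```

After the simulated crash, batch 1 is reprocessed from the same offsets and rewrites the same file, so every message appears exactly once in the output.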

Run the streaming job. A streaming query is meant to run indefinitely, so in principle it never finishes.

The call below blocks and does not return. To kill the job, restart the PySpark kernel.

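# Blocks until the query stops or fails; passing a timeout in seconds,
# e.g. query.awaitTermination(60), makes it return after at most that long.
query.awaitTermination()

# Stops the streaming query (reached only once awaitTermination returns).
query.stop()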

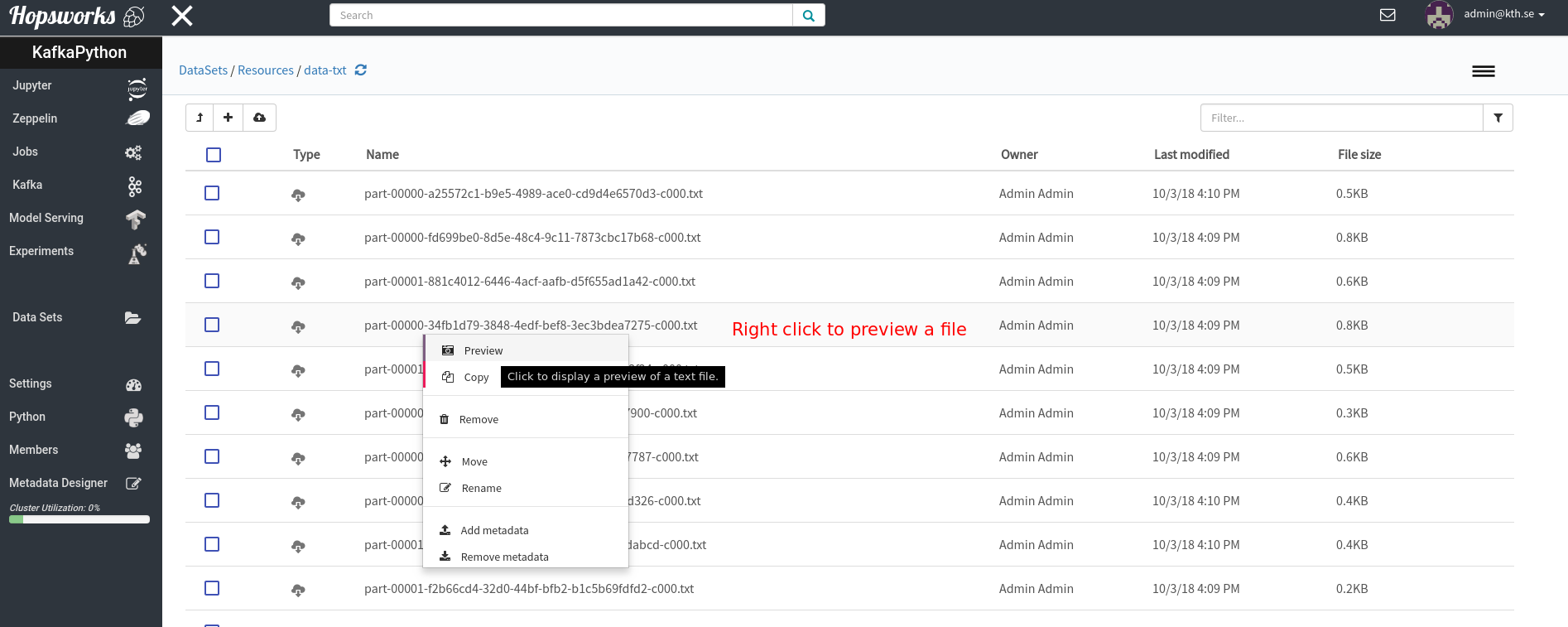

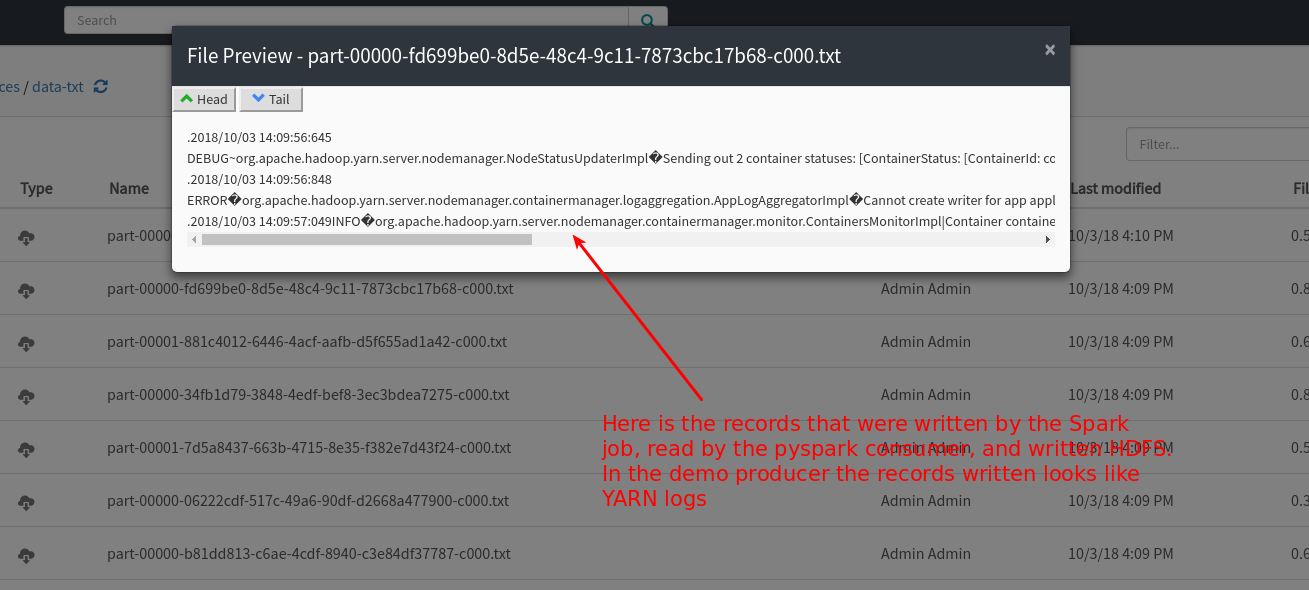

While the job is running, you can preview the output files in the HDFS file browser of the Hopsworks UI.
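If the output directory is also readable as a local path (for example through a mount, which is an assumption about your environment), a small helper can preview the data without the file browser. This sketch assumes the text sink's data files are named `part-*` (typically something like `part-00000-<uuid>-c000.txt`) and skips bookkeeping entries such as the `_spark_metadata` directory; the helper name is hypothetical.

```python
import glob
import os
import tempfile


def preview_text_sink(output_dir, max_lines=10):
    """Return up to max_lines lines from the part-* files in output_dir."""
    lines = []
    for path in sorted(glob.glob(os.path.join(output_dir, "part-*"))):
        if not os.path.isfile(path):
            continue
        with open(path, encoding="utf-8") as f:
            for line in f:
                lines.append(line.rstrip("\n"))
                if len(lines) >= max_lines:
                    return lines
    return lines


# Usage on a throwaway directory that imitates the sink's layout.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "part-00000-a-c000.txt"), "w", encoding="utf-8") as f:
        f.write("m1\nm2\n")
    with open(os.path.join(tmp, "part-00001-b-c000.txt"), "w", encoding="utf-8") as f:
        f.write("m3\n")
    os.mkdir(os.path.join(tmp, "_spark_metadata"))
    all_lines = preview_text_sink(tmp)
    first_two = preview_text_sink(tmp, max_lines=2)
print(all_lines)
```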