
1. Introduction

In the two prior notebooks, we deployed our machine learning model as a web service on [Azure Container Instances](https://github.com/Microsoft/ComputerVisionBestPractices/blob/master/classification/notebooks/21_deployment_on_azure_container_instances.ipynb) (ACI) and on [Azure Kubernetes Service](https://github.com/Microsoft/ComputerVision/blob/master/classification/notebooks/22_deployment_on_azure_kubernetes_service.ipynb) (AKS). In this notebook, we will learn how to test our service:

- Using the run API
- Via a raw HTTP request.

Note: We are assuming that notebooks "20", "21" and "22" were previously run, and that we consequently already have a workspace, and a web service that serves our machine learning model on ACI and/or AKS. If that is not the case, we can refer to these three notebooks.

```python
# For automatic reloading of modified libraries
%reload_ext autoreload
%autoreload 2

# Regular python libraries
import inspect
import json
import os
import requests
import sys
from azureml.core.authentication import AzureCliAuthentication
from azureml.core.authentication import InteractiveLoginAuthentication
from azureml.core.authentication import AuthenticationException

# fast.ai
from fastai.vision import open_image

# Azure
import azureml.core
from azureml.core import Workspace

# Computer Vision repository
sys.path.extend([".", "../.."])
from utils_cv.common.data import data_path
from utils_cv.common.image import im2base64, ims2strlist
from utils_cv.common.azureml import get_or_create_workspace

# Check core SDK version number
print(f"Azure ML SDK Version: {azureml.core.VERSION}")
```

Azure ML SDK Version: 1.0.48

To create or access an Azure ML Workspace, you will need the following information. If you are coming from a previous notebook, you can retrieve the existing workspace; if you are just starting with this notebook, you can create a new one.

- subscription ID: the ID of the Azure subscription we are using

- resource group: the name of the resource group in which our workspace resides

- workspace region: the geographical area in which our workspace resides (e.g. "eastus2"; other region names follow the same lowercase format, with no spaces)

- workspace name: the name of the workspace we want to create or retrieve.

```python
subscription_id = "YOUR_SUBSCRIPTION_ID"
resource_group = "YOUR_RESOURCE_GROUP_NAME"
workspace_name = "YOUR_WORKSPACE_NAME"
workspace_region = "YOUR_WORKSPACE_REGION"  # Possible values: eastus, eastus2, etc.

# A util method that creates a workspace or retrieves one if it exists,
# and also takes care of Azure authentication
from utils_cv.common.azureml import get_or_create_workspace

ws = get_or_create_workspace(
    subscription_id,
    resource_group,
    workspace_name,
    workspace_region)

# Print the workspace attributes
print('Workspace name: ' + ws.name,
      'Workspace region: ' + ws.location,
      'Subscription id: ' + ws.subscription_id,
      'Resource group: ' + ws.resource_group, sep='\n')
```

WARNING - Warning: Falling back to use azure cli login credentials. If you run your code in unattended mode, i.e., where you can't give a user input, then we recommend to use ServicePrincipalAuthentication or MsiAuthentication. Please refer to aka.ms/aml-notebook-auth for different authentication mechanisms in azureml-sdk.

Workspace name: amlnotebookws

Workspace region: eastus

Resource group: amlnotebookrg

```python
ws.webservices
```

{'aks-cpu-image-classif-web-svc': AksWebservice(workspace=Workspace.create(name='amlnotebookws', subscription_id='2ad17db4-e26d-4c9e-999e-adae9182530c', resource_group='amlnotebookrg'), name=aks-cpu-image-classif-web-svc, image_id=image-classif-resnet18-f48:2, compute_type=AKS, state=None, scoring_uri=http://13.82.180.139:80/api/v1/service/aks-cpu-image-classif-web-svc/score, tags={}, properties={'azureml.git.repository_uri': 'git@github.com:microsoft/ComputerVision.git', 'mlflow.source.git.repoURL': 'git@github.com:microsoft/ComputerVision.git', 'azureml.git.branch': 'rijai/amltesting', 'mlflow.source.git.branch': 'rijai/amltesting', 'azureml.git.commit': '0bf9dd30d64b5aed17a3e97055215e4d24b3840a', 'mlflow.source.git.commit': '0bf9dd30d64b5aed17a3e97055215e4d24b3840a', 'azureml.git.dirty': 'True'}),

'im-classif-websvc': AciWebservice(workspace=Workspace.create(name='amlnotebookws', subscription_id='2ad17db4-e26d-4c9e-999e-adae9182530c', resource_group='amlnotebookrg'), name=im-classif-websvc, image_id=image-classif-resnet18-f48:2, compute_type=ACI, state=None, scoring_uri=http://c7bf18e3-cef1-4179-a524-59f862ffa1d9.eastus.azurecontainer.io/score, tags={'webservice': 'image classification model (fastai 1.0.48)'}, properties={'azureml.git.repository_uri': 'git@github.com:microsoft/ComputerVision.git', 'mlflow.source.git.repoURL': 'git@github.com:microsoft/ComputerVision.git', 'azureml.git.branch': 'rijai/amltesting', 'mlflow.source.git.branch': 'rijai/amltesting', 'azureml.git.commit': '0bf9dd30d64b5aed17a3e97055215e4d24b3840a', 'mlflow.source.git.commit': '0bf9dd30d64b5aed17a3e97055215e4d24b3840a', 'azureml.git.dirty': 'True'})}

This command returns a dictionary whose keys are the names we assigned to our web services.

Let's now retrieve the web services of interest.

```python
# Retrieve the web services
aci_service = ws.webservices['im-classif-websvc']
aks_service = ws.webservices['aks-cpu-image-classif-web-svc']
```

3. Testing of the web services

Let's now test our web service. For this, we first need to retrieve test images and pre-process them into the format our service expects. A service typically expects its input data in a JSON-serializable format. Here, we use our own ims2strlist() function to convert our .jpg images into base64-encoded strings.

```python
# Check the source code of the conversion functions
im2base64_source = inspect.getsource(im2base64)
im2strlist_source = inspect.getsource(ims2strlist)
print(im2base64_source)
print(im2strlist_source)
```

```python
def im2base64(im_path: Union[Path, str]) -> bytes:
    """Get image bytes.

    Args:
        im_path (string): Path to the image

    Returns:
        im_bytes
    """

    with open(im_path, "rb") as image:
        # Extract image bytes
        im_content = image.read()
        # Convert bytes into a string
        im_bytes = b64encode(im_content)

    return im_bytes


def ims2strlist(im_path_list: list) -> list:
    """Get byte-str list of the images in the given path.

    Args:
        im_path_list (list of strings): List of image paths

    Returns:
        im_string_list: List containing based64-encoded images
            decoded into strings
    """

    im_string_list = []
    for im_path in im_path_list:
        im_string_list.append(im2base64(im_path).decode("utf-8"))
    return im_string_list
```
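Stripped of the notebook plumbing, the two helpers above amount to base64-encoding each file's raw bytes and decoding the result into a UTF-8 string, so that it can be embedded in a JSON document. The sketch below reproduces that idea with the standard library only and adds the inverse step. The decoding side is an assumption about what a scoring script would do, since the scoring script itself is not shown in this notebook, and the names encode_image_file, encode_image_files and decode_image_string are hypothetical.

```python
import base64
from typing import List


def encode_image_file(path: str) -> str:
    """Read a file's raw bytes and return them as a base64 text string."""
    with open(path, "rb") as f:
        return base64.b64encode(f.read()).decode("utf-8")


def encode_image_files(paths: List[str]) -> List[str]:
    """Encode several files, keeping the order of the input paths."""
    return [encode_image_file(path) for path in paths]


def decode_image_string(im_string: str) -> bytes:
    """Inverse step (assumed to happen on the server): recover the file bytes."""
    return base64.b64decode(im_string)
```

For example, encode_image_files(local_im_paths) should yield the same list as ims2strlist(local_im_paths), and applying decode_image_string to any element should give back the original JPEG bytes.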

```python
# Extract test images paths
im_url_root = "https://cvbp.blob.core.windows.net/public/images/"
im_filenames = ["cvbp_milk_bottle.jpg", "cvbp_water_bottle.jpg"]

for im_filename in im_filenames:
    # Retrieve test images from our storage blob
    # (plain string concatenation: os.path.join would insert "\" on Windows)
    r = requests.get(im_url_root + im_filename)
    r.raise_for_status()

    # Copy test images to local data/ folder
    with open(os.path.join(data_path(), im_filename), 'wb') as f:
        f.write(r.content)

# Extract local path to test images
local_im_paths = [os.path.join(data_path(), im_filename) for im_filename in im_filenames]

# Convert images to json object
im_string_list = ims2strlist(local_im_paths)
service_input = json.dumps({"data": im_string_list})
```
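Base64 maps every 3 input bytes to 4 output characters and pads the last group, so an image of n bytes becomes a string of 4 * ceil(n / 3) characters, roughly a third larger than the file itself. The sketch below, with hypothetical helper names, checks that formula on placeholder bytes and builds the same {"data": [...]} envelope as service_input. It can help estimate request sizes before sending larger batches to the service.

```python
import base64
import json
from typing import List


def base64_length(n_bytes: int) -> int:
    """Length of the padded base64 encoding of n_bytes bytes: 4 * ceil(n / 3)."""
    return 4 * ((n_bytes + 2) // 3)


def build_payload(im_strings: List[str]) -> str:
    """Wrap base64 image strings in the {"data": [...]} envelope used in this notebook."""
    return json.dumps({"data": im_strings})


# 100 kB of placeholder bytes standing in for a JPEG file
raw = bytes(100_000)
encoded = base64.b64encode(raw).decode("utf-8")
assert len(encoded) == base64_length(len(raw))

payload = build_payload([encoded, encoded])
print(f"raw: {len(raw)} bytes, encoded: {len(encoded)} chars, payload: {len(payload)} chars")
```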

3.A Using the run API

In a real-life scenario, we would likely have only one of these two services running. In this section, we show how to test that the web service running on ACI works as expected. The commands are exactly the same for our service running on AKS: we would just need to replace the aci_service object with the aks_service one.

```python
# Select the web service to test
service = aci_service
# service = aks_service

# Predict using the deployed model
result = service.run(service_input)

# Plot the results, titled "<actual label>/<predicted label> - <probability>%"
actual_labels = ['milk_bottle', 'water_bottle']
for k, prediction in enumerate(result):
    probability = round(100. * float(prediction['probability']), 2)
    title = f"{actual_labels[k]}/{prediction['label']} - {probability}%"
    open_image(local_im_paths[k]).show(title=title)
```

3.B Via a raw HTTP request

```python
# ---------
# On ACI
# ---------

# Extract service URL
service_uri = aci_service.scoring_uri
print(f"POST requests to url: {service_uri}")

# Prepare the data
payload = {"data": im_string_list}

# Send the service request
resp = requests.post(service_uri, json=payload)

# Alternative way of sending the test data
# headers = {'Content-Type': 'application/json'}
# resp = requests.post(service_uri, service_input, headers=headers)

print(f"Prediction: {resp.text}")
```

POST requests to url: http://c7bf18e3-cef1-4179-a524-59f862ffa1d9.eastus.azurecontainer.io/score

Prediction: [{"label": "water_bottle", "probability": "0.8001841306686401"}, {"label": "water_bottle", "probability": "0.68577641248703"}]
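With a raw HTTP request, the response body is plain JSON text and, as the output above shows, each probability comes back as a string. A minimal sketch for turning resp.text into typed values and comparing the predictions with the expected labels could look like this (parse_predictions and accuracy are hypothetical helper names). In practice, it would be worth calling resp.raise_for_status() before parsing; resp.json() can also replace the json.loads step.

```python
import json
from typing import List, Tuple


def parse_predictions(resp_text: str) -> List[Tuple[str, float]]:
    """Convert the service's JSON text into (label, probability) pairs.

    The service returns probabilities as strings, hence the float cast.
    """
    return [(p["label"], float(p["probability"])) for p in json.loads(resp_text)]


def accuracy(predicted: List[str], actual: List[str]) -> float:
    """Fraction of images whose predicted label matches the expected label."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("expected two non-empty lists of the same length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Response text copied from the ACI output above
resp_text = ('[{"label": "water_bottle", "probability": "0.8001841306686401"}, '
             '{"label": "water_bottle", "probability": "0.68577641248703"}]')
predictions = parse_predictions(resp_text)
for label, probability in predictions:
    print(f"{label} - {round(100. * probability, 2)}%")
print(accuracy([label for label, _ in predictions], ["milk_bottle", "water_bottle"]))
```

On our two test images this reports an accuracy of 0.5, since the milk bottle is classified as a water bottle.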

```python
# ---------
# On AKS
# ---------

# Service URL
service_uri = aks_service.scoring_uri
print(f"POST requests to url: {service_uri}")

# Prepare the data
payload = {"data": im_string_list}

# - - - - Specific to AKS - - - -
# Authentication keys
primary, secondary = aks_service.get_keys()
print(f"Keys to use when calling the service from an external app: {[primary, secondary]}")

# Build the request's parameters
key = primary
# Set the content type
headers = {'Content-Type': 'application/json'}
# Set the authorization header
headers['Authorization'] = f'Bearer {key}'
# - - - - - - - - - - - - - - - -

# Send the service request
resp = requests.post(service_uri, json=payload, headers=headers)

# Alternative way of sending the test data
# resp = requests.post(service_uri, service_input, headers=headers)

print(f"Predictions: {resp.text}")
```

POST requests to url: http://13.82.180.139:80/api/v1/service/aks-cpu-image-classif-web-svc/score

Keys to use when calling the service from an external app: ['YeUcrNbnzUmN3kKrJipnGzNexQbp7nIz', 'ChjsMumEcKMdsM6R9Ov4XidvBKIJsEkb']

Predictions: [{"label": "water_bottle", "probability": "0.8001841306686401"}, {"label": "water_bottle", "probability": "0.68577641248703"}]
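The only difference between the two raw requests is the Authorization header: the AKS service expects a Bearer key, while the ACI service, as called above, accepts the request without one. A minimal sketch covering both cases is shown below. It uses the standard library's urllib.request instead of requests so that it has no third-party dependency, and the helper names build_headers, build_request and score are hypothetical.

```python
import json
import urllib.request
from typing import Dict, List, Optional


def build_headers(key: Optional[str] = None) -> Dict[str, str]:
    """JSON content type, plus a Bearer token when a service key is given."""
    headers = {"Content-Type": "application/json"}
    if key:
        headers["Authorization"] = f"Bearer {key}"
    return headers


def build_request(uri: str, im_strings: List[str],
                  key: Optional[str] = None) -> urllib.request.Request:
    """Prepare a POST request carrying the {"data": [...]} payload."""
    body = json.dumps({"data": im_strings}).encode("utf-8")
    return urllib.request.Request(uri, data=body, headers=build_headers(key), method="POST")


def score(uri: str, im_strings: List[str], key: Optional[str] = None,
          timeout: float = 60.0) -> list:
    """Send the request and decode the JSON predictions.

    urlopen raises urllib.error.HTTPError on 4xx/5xx responses,
    for instance when an AKS key is missing or invalid.
    """
    with urllib.request.urlopen(build_request(uri, im_strings, key), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, score(aci_service.scoring_uri, im_string_list) for ACI and score(aks_service.scoring_uri, im_string_list, key=primary) for AKS should return the same list of label/probability dictionaries as the cells above.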

4. Service telemetry in Application Insights

Let's now assume that we have users, and that they start sending requests to our web service. As they do so, we want to ensure that our service is up, healthy, returning responses in a timely fashion, and that traffic is reasonable for the resources we allocated to it. For this, we can use Application Insights. This service captures our web service's logs, parses them and provides us with tables and visual representations of what is happening.

In the [Azure portal](https://portal.azure.com):

- Let's navigate to "Resource groups"

- Select our subscription and resource group that contain our workspace

- Select the Application Insights resource associated with our workspace

- If there are several, we can go back to our workspace in the portal and click on "Overview": this shows the resources associated with our workspace, in particular its Application Insights instance, in the upper right of the screen

- Click on the App Insights resource

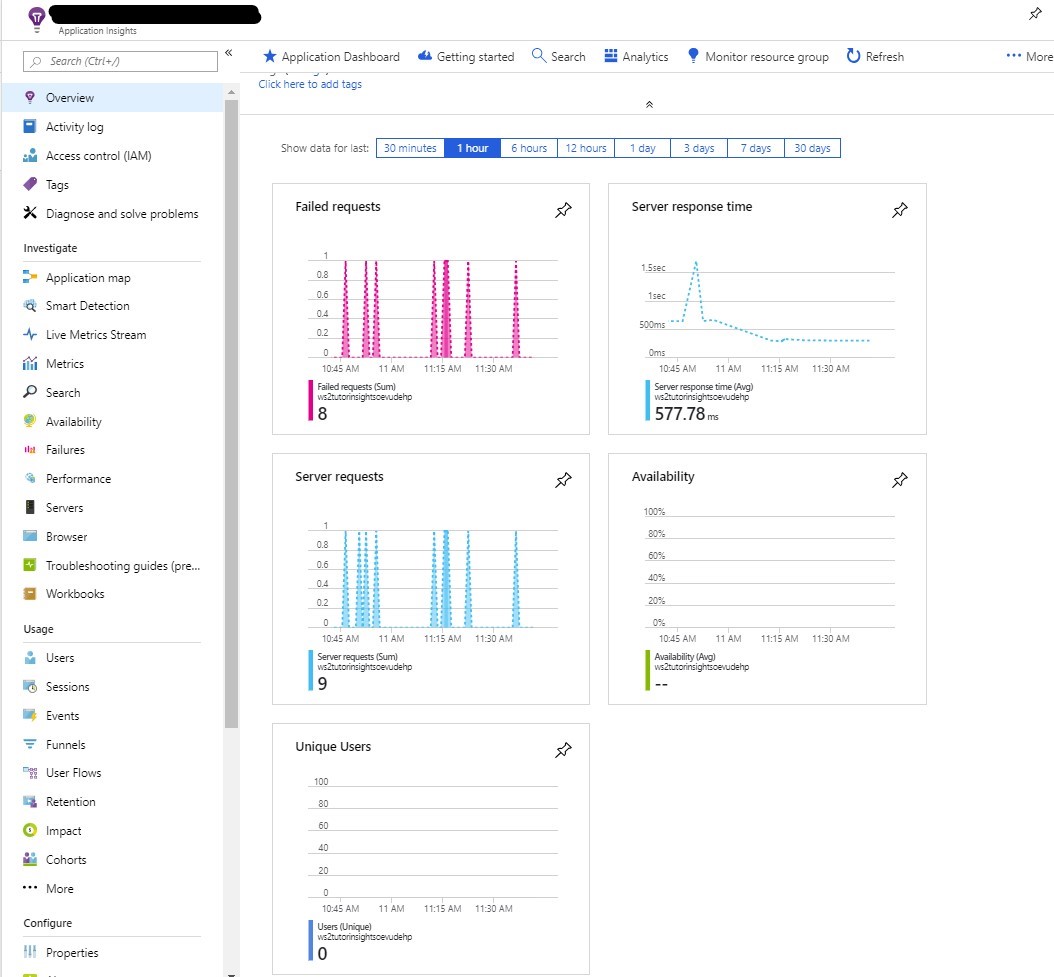

- There, we can see a high-level dashboard with information on successful and failed requests, server response time and availability (cf. Figure 1)

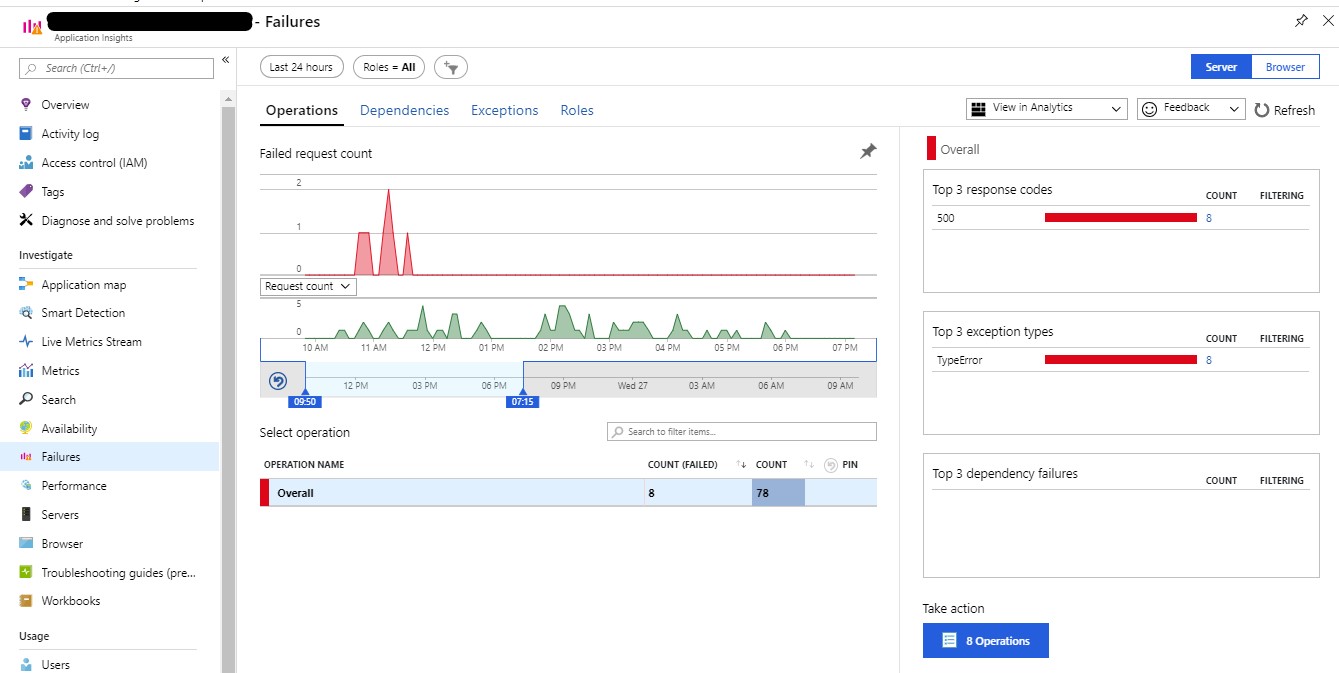

- Click on the "Server requests" graph

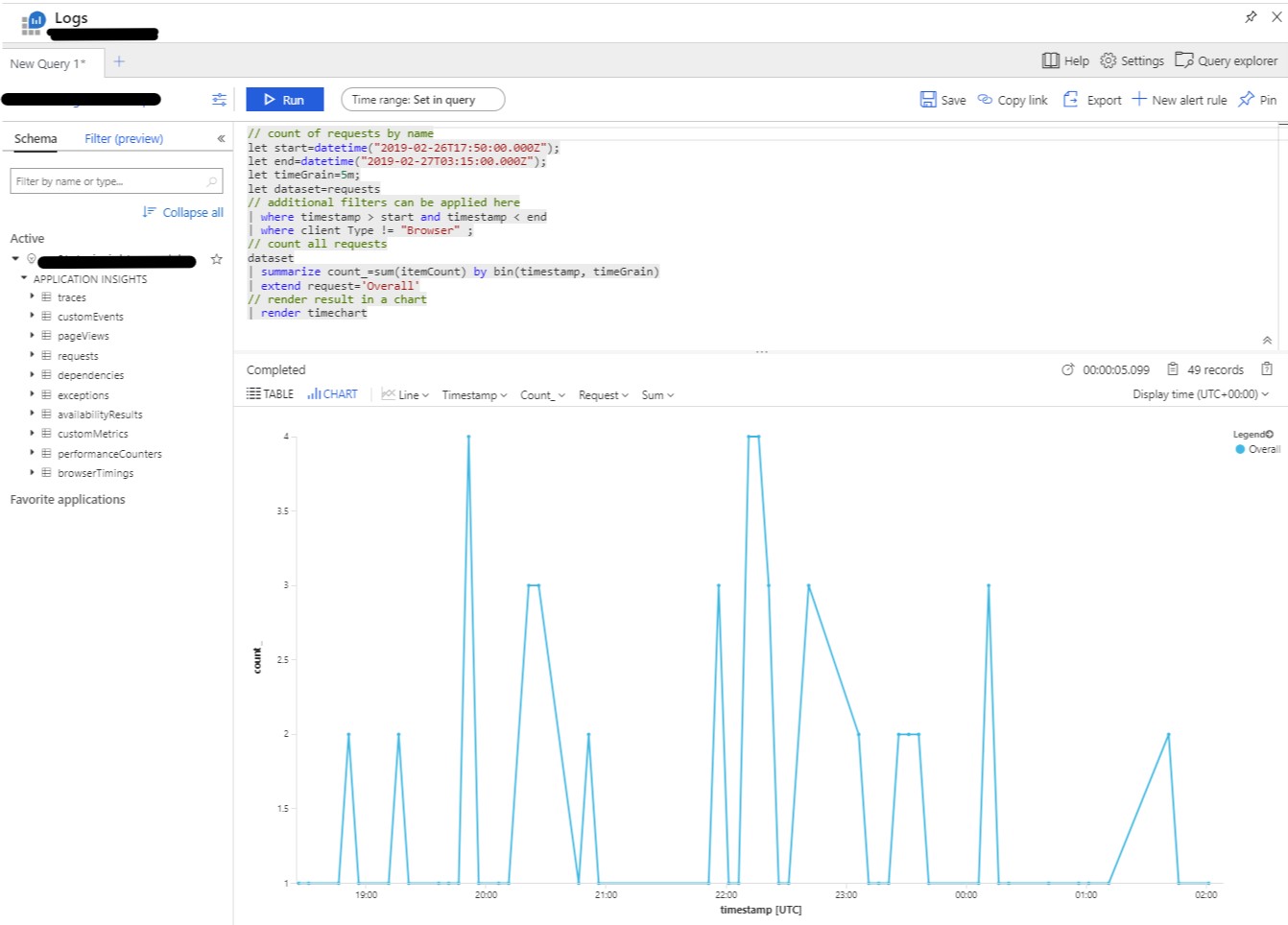

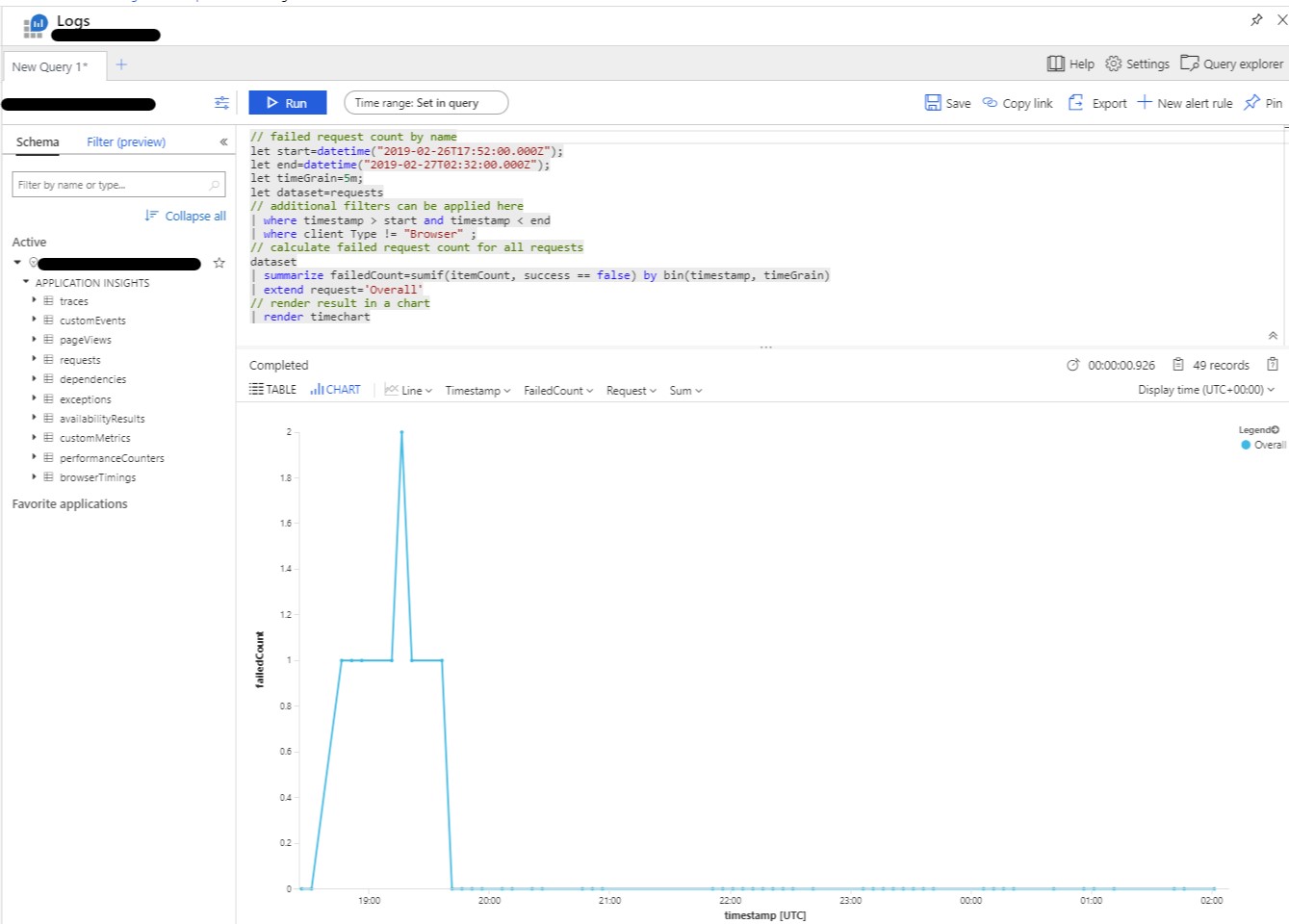

- In the "View in Analytics" drop-down, select "Request count" in the "Analytics" section

  - This displays the specific query run against the service logs to extract the number of executed requests (successful or not; cf. Figure 2).

- Still on the "Logs" page, click on the eye icon next to "requests" in the "Schema" (left) pane, and on "Table" on the right:

  - This shows the list of calls to the service, with their success statuses, durations, and other metrics. This table is especially useful to investigate problematic requests (cf. Figure 3).

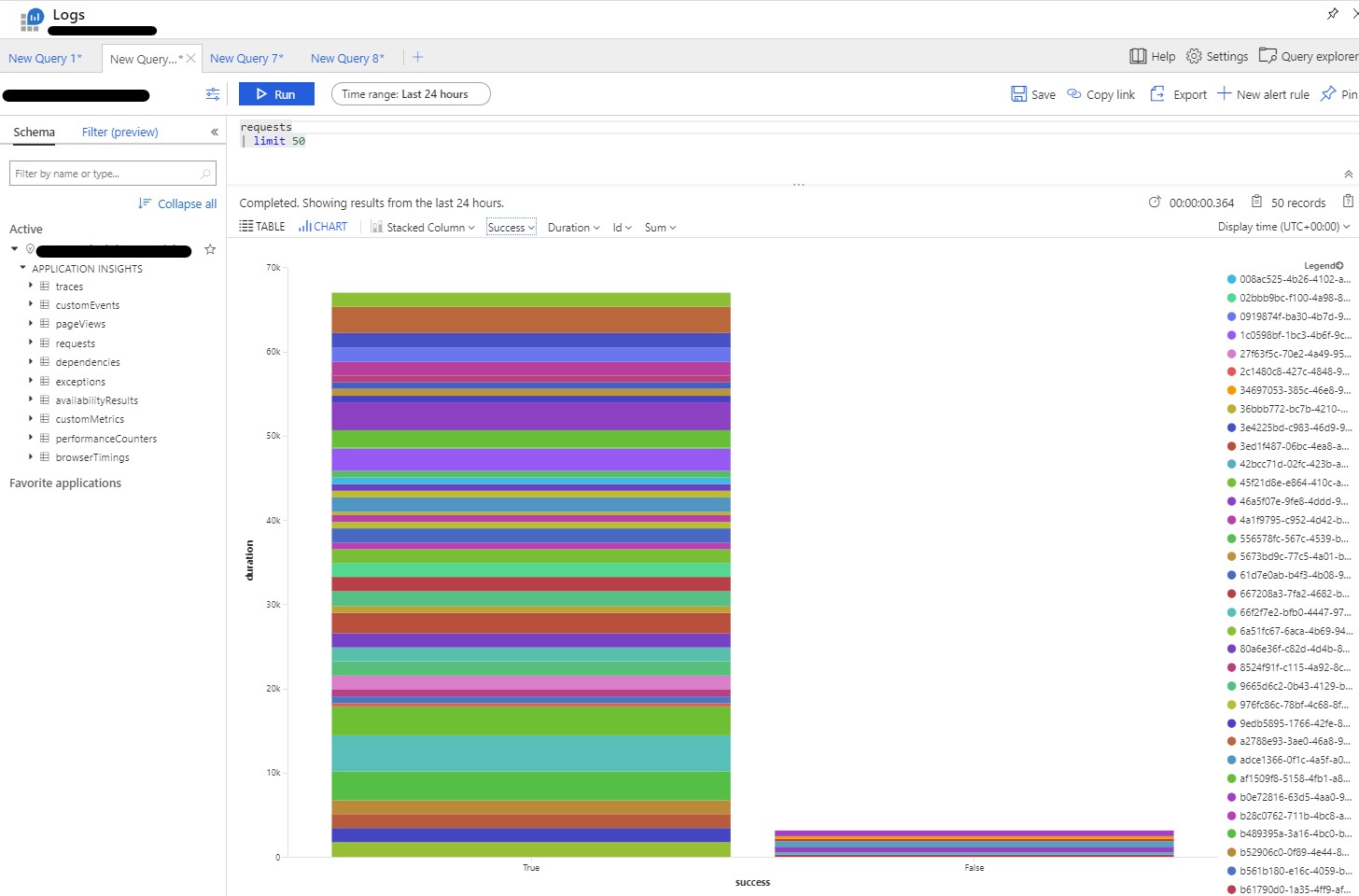

- Results can also be visualized as a graph by clicking on the "Chart" tab. Metrics are plotted by default, but we can change them by clicking on one of the field name drop-downs (cf. Figures 4 to 6).

- Navigate between the queries we ran using the different "New Query X" tabs.

Figure 1: Web service performance metrics

Figure 2: Insights into failed requests

Figure 3: Example log of a failed request

Figure 4: Total request count over time

Figure 5: Failed request count over time

Figure 6: Request success distribution
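The charts in Figures 4 to 6 are aggregations over the requests table. To make them concrete, the sketch below computes the same kinds of summaries (total count, failed count, mean duration and success distribution) over a few hypothetical in-memory records. The RequestRecord fields are assumptions loosely modeled on that table; in the portal, these numbers come from Analytics queries rather than from Python.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestRecord:
    """Hypothetical, simplified stand-in for one row of the requests table."""
    success: bool
    duration_ms: float
    result_code: str


def summarize(records: List[RequestRecord]) -> Dict[str, object]:
    """Request count, failures, mean duration and success distribution."""
    total = len(records)
    failed = sum(1 for r in records if not r.success)
    mean_duration = sum(r.duration_ms for r in records) / total if total else 0.0
    return {
        "request_count": total,
        "failed_count": failed,
        "mean_duration_ms": mean_duration,
        "success_distribution": Counter(r.success for r in records),
        "result_codes": Counter(r.result_code for r in records),
    }


# Hypothetical telemetry: two successful calls and one rejected call
records = [
    RequestRecord(True, 120.0, "200"),
    RequestRecord(True, 95.0, "200"),
    RequestRecord(False, 30.0, "401"),
]
print(summarize(records))
```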

5. Clean up

In a real-life scenario, one of our web services would likely need to be up and running at all times. However, in this demonstration, and now that we have verified that they work, we can delete them as well as all the resources we used.

Overall, with a workspace, a web service running on ACI and another one running on a CPU-based AKS cluster, we incurred a cost of about $15 a day (as of May 2019). About 70% was spent on virtual machines, 13% on the container registry (ACR), 12% on the container instances (ACI), and 5% on storage.
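As a quick worked example, the percentages above translate into the approximate daily amounts computed below. The helper name is hypothetical, and the 30-day projection is only illustrative, since Azure prices and our usage change over time.

```python
from typing import Dict

# Figures quoted above (approximate, as of May 2019)
DAILY_COST_USD = 15.0
SHARES = {
    "virtual machines": 0.70,
    "container registry (ACR)": 0.13,
    "container instances (ACI)": 0.12,
    "storage": 0.05,
}


def cost_breakdown(daily_cost: float, shares: Dict[str, float]) -> Dict[str, float]:
    """Split a daily cost according to fractional shares that sum to 1."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return {name: daily_cost * share for name, share in shares.items()}


for name, cost in cost_breakdown(DAILY_COST_USD, SHARES).items():
    # Illustrative 30-day projection at a constant daily rate
    print(f"{name}: ${cost:.2f}/day, about ${30 * cost:.2f} over 30 days")
```

This gives roughly $10.50 per day for virtual machines, $1.95 for ACR, $1.80 for ACI and $0.75 for storage, which adds up to the $15 total.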

To get a better sense of pricing, we can refer to [this calculator](https://azure.microsoft.com/en-us/pricing/calculator/?service=virtual-machines). We can also navigate to the [Cost Management + Billing pane](https://ms.portal.azure.com/#blade/Microsoft_Azure_Billing/ModernBillingMenuBlade/BillingAccounts) on the portal, click on our subscription ID, and click on the Cost Analysis tab to check our credit usage.

Note: In the next notebooks, we will continue to use the AKS web service. This is why we are only deleting the service deployed on ACI.

5.A Application Insights deactivation and web service termination

When deleting resources, we need to start with the ones we created last. So, we first deactivate the telemetry, and then delete the services and compute targets.
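This "last created, first deleted" rule is a stack. The hypothetical helper sketched below records a cleanup callback each time a resource is created and replays the callbacks newest-first; it is a generic pattern, not part of the Azure ML SDK.

```python
from typing import Callable, List, Tuple


class Teardown:
    """Register cleanup callbacks as resources are created; run them newest-first."""

    def __init__(self) -> None:
        self._steps: List[Tuple[str, Callable[[], None]]] = []

    def register(self, name: str, cleanup: Callable[[], None]) -> None:
        self._steps.append((name, cleanup))

    def run(self) -> List[str]:
        """Execute all cleanups in reverse registration order; return their names."""
        done = []
        while self._steps:
            name, cleanup = self._steps.pop()  # most recently created resource first
            cleanup()
            done.append(name)
        return done


if __name__ == "__main__":
    log = []
    teardown = Teardown()
    teardown.register("compute target", lambda: log.append("delete compute target"))
    teardown.register("web service", lambda: log.append("delete web service"))
    teardown.register("telemetry", lambda: log.append("disable telemetry"))
    print(teardown.run())  # names, in the order the cleanups ran
    print(log)
```

With the objects of this series of notebooks, the callbacks could be aks_target.delete, aks_service.delete and lambda: aks_service.update(enable_app_insights=False), registered in the order in which the resources were created.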

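```python
# Telemetry deactivation
# aks_service.update(enable_app_insights=False)

# Services termination
aci_service.delete()
# aks_service.delete()

# Compute target deletion
# (aks_target is not defined in this notebook: it would first need to be
# retrieved, e.g. from ws.compute_targets, using the name given in notebook 22)
# aks_target.delete()
```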

5.B Docker image deletion

Now that the services no longer exist, we can delete the Docker image that we created in [21_deployment_on_azure_container_instances.ipynb](https://github.com/Microsoft/ComputerVisionBestPractices/blob/master/classification/notebooks/21_deployment_on_azure_container_instances.ipynb), and which contains our image classifier model.

```python
print("Docker images:")
for docker_im in ws.images:
    im = ws.images[docker_im]
    print(f" --> Name: {im.name}\n"
          f" --> ID: {im.id}\n"
          f" --> Tags: {im.tags}\n"
          f" --> Creation time: {im.created_time}")
```

Docker images:

--> Name: image-classif-resnet18-f48

--> ID: image-classif-resnet18-f48:2

--> Tags: {'training set': 'ImageNet', 'architecture': 'CNN ResNet18', 'type': 'Pretrained'}

--> Creation time: 2019-07-18 17:51:26.927240+00:00

```python
docker_image = ws.images["image-classif-resnet18-f48"]
# docker_image.delete()
```