%matplotlib inline

import warnings

warnings.filterwarnings("ignore")

import pandas as pd

import plotly.express as px

import re

from datetime import datetime, date, timedelta

import sys

sys.path.append("..")

from data.utils import get_days_index

all_days = get_days_index(250)

import sqlite3

conn = sqlite3.connect("../data/dbs/Ex2.db")

c = conn.cursor()

1. Introduction¶

In the last exercise, we examined some isolated data downtime incidents in the EXOPLANETS table. While the techniques from that exercise are helpful, in practice data downtime involves data infrastructure with more than one table. So, in this exercise, we'll look at a scenario where multiple tables interact.

EXOPLANETS¶

Let's once again consider our EXOPLANETS table, but at a later date than before. Now, our table has additional entries, and additional fields. We now record the planets' orbital eccentricity and the contents of their atmosphere.

c.execute("PRAGMA table_info(EXOPLANETS);")

c.fetchall()

Let's take a look at our 10 most recent additions to the table:

pd.read_sql_query("SELECT * FROM EXOPLANETS ORDER BY DATE_ADDED DESC LIMIT 10", conn)

A database entry in EXOPLANETS contains the following info:

_id: A UUID corresponding to the planet.distance: Distance from Earth, in lightyears.g: Surface gravity as a multiple of $g$, the gravitational force constant.orbital_period: Length of a single orbital cycle in days.avg_temp: Average surface temperature in degrees Kelvin.date_added: The date our system discovered the planet and added it automatically to our databases.eccentricity: The orbital eccentricity of the planet about its host star.atmosphere: The dominant chemical makeup of the planet's atmosphere.

Note that like distance, g, orbital_period, and avg_temp, both eccentricity and atmosphere may be NULL for a given planet as a result of missing or erroneous data. For example, rogue planets have undefined orbital eccentricity, and many planets don't have atmospheres at all.

Note also that data is not backfilled, meaning data entries from the beginning of the table will not have eccentricity and atmosphere information. Check out all of these None values at the beginning of the table:

pd.read_sql_query(

"""SELECT

date_added,

eccentricity,

atmosphere

FROM EXOPLANETS

ORDER BY DATE_ADDED ASC

LIMIT 10""",

conn

)

EXOPLANETS_SCHEMA¶

Thankfully, we have been recording historical table_info on the EXOPLANETS table and collecting the results in a table called EXOPLANETS_SCHEMA, updated daily.

c.execute("PRAGMA table_info(EXOPLANETS_SCHEMA);")

c.fetchall()

Querying the very beginning and end of EXOPLANETS_SCHEMA's data reflects that EXOPLANETS's metadata has changed since January 2020:

exoplanets_schema_df = pd.read_sql_query("SELECT * FROM EXOPLANETS_SCHEMA", conn)

print("Was: " + exoplanets_schema_df.iloc[0]["schema"])

print("Is now: " + exoplanets_schema_df.iloc[-1]["schema"])

2. Exercise: Understanding Schema Change¶

When exactly did EXOPLANETS start recording new data? The metadata in EXOPLANETS_SCHEMA should tell us. See if you can write a SQL query that returns the date(s) the schema changed.

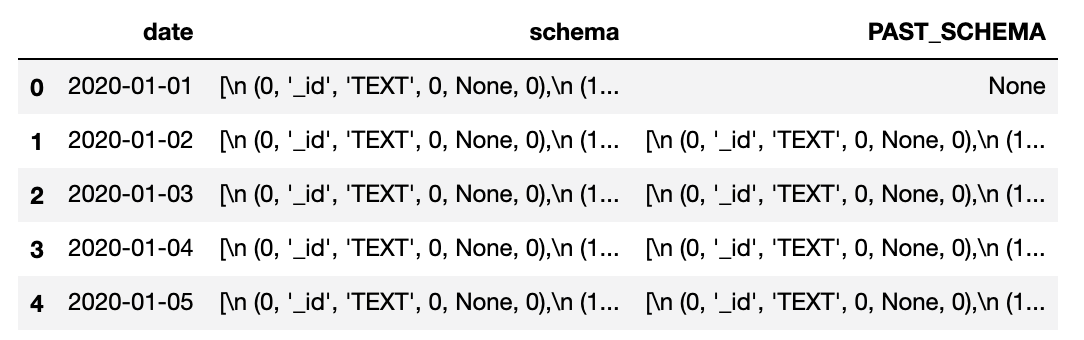

To start, here's a query that returns each date and schema in the table:

SQL = """

SELECT

DATE,

SCHEMA

FROM

EXOPLANETS_SCHEMA

"""

pd.read_sql_query(SQL, conn).head(5)

Using this query, and the trick with LAG() we utilized in the last notebook, try to get a table comparing a date's schema to the schema from the day prior.

# YOUR CODE HERE

SQL = """

"""

# END YOUR CODE

pd.read_sql_query(SQL, conn).head(5)

You should see something like this:

Next, let's simply add a WITH statement like we did before, and return all of the rows where SCHEMA and PAST_SCHEMA are different!

# YOUR CODE HERE

SQL = """

"""

# END YOUR CODE

pd.read_sql_query(SQL, conn).head(5)

A correct implementation should show a single date, 2020-07-19. If you got that, nice work! This date will come in handy later, so keep it in mind.

3. An additional table¶

Now, we want to involve another table in our DB. HABITABLES records information about the habitability of exoplanets we've discovered. This table takes data from EXOPLANETS and other upstream tables and transforms it to produce a habitability index: a real number between 0 and 1 indicating how likely the planet is to harbor life.

c.execute("PRAGMA TABLE_INFO(HABITABLES);")

c.fetchall()

pd.read_sql_query("SELECT * FROM HABITABLES LIMIT 10", conn)

4. Exercise: Visualizing Distribution Errors¶

Like in exercise 1, I'll write a quick query assessing a distributional feature of the HABITABILITY table -- how habitable is the average planet we detect, as a function of the day it was detected?

SQL = """

SELECT

DATE_ADDED,

AVG(HABITABILITY) AS AVG_HABITABILITY

FROM

HABITABLES

GROUP BY

DATE_ADDED

"""

avg_habitability = pd.read_sql_query(SQL, conn)

avg_habitability = avg_habitability \

.rename(columns={clmn: clmn.lower() for clmn in avg_habitability.columns})

avg_habitability = avg_habitability.set_index("date_added")

avg_habitability = avg_habitability.reindex(all_days)

fig = px.bar(x=all_days, y=avg_habitability["avg_habitability"])

fig.update_xaxes(title="Date")

fig.update_yaxes(title="AVG(habitability)")

fig.add_vline(x="2020-07-19", line_color='red')

fig.show()

I plotted the date of the schema change, 2020-07-19, in red as a visual aid. Clearly, unless our instruments are malfunctioning, something is wrong! The planets we're adding to the table after the schema change seem much less habitable on average. Using a SQL query below, see if you can figure out what exactly happened.

Hint: When averages change, it's natural to look for occurrences of unusual values. When is habitability NULL, 0, or outside of the range $[0, 1]$? What about other fields in the table that might be related?

Let's try looking at the zero rate to see if anything is unusual.

As a hint, it's easy to find special conditions, like when a metric is equal to 0, using a SQL CASE statement. You'll probably want to aggregate all of these CASEs, using SUM(), and be sure to CAST(... AS FLOAT) if you're dividing anything. Try it out!

# YOUR CODE HERE

SQL = """

"""

# END YOUR CODE

zero_rate = pd.read_sql_query(SQL, conn)

zero_rate = zero_rate \

.rename(columns={clmn: clmn.lower() for clmn in zero_rate.columns})

zero_rate = zero_rate.set_index("date_added")

zero_rate = zero_rate.reindex(all_days)

fig = px.bar(x=all_days, y=zero_rate["zero_rate"])

fig.add_vline(x="2020-07-19", line_color='red')

fig.update_xaxes(title="Date")

fig.update_yaxes(title="Habitability Zero Rate")

fig.show()

With a small amount of digging, we can uncover something important -- the habitability index is never exactly 0 before the schema change, but afterwards we see the rate of 0s jump up to ~50%. This has the detected effect of dipping the average value of the field.

Note that in practice, you should look for both -- measuring a field's rate of zero values, as well as its average value, can both help with identifying data downtime issues. As we saw last exercise, the rate of null values can also be helpful.

Great work!¶

In the next exercise, we'll look at queries that span multiple tables, another step towards building intelligent data downtime systems.