Various text formats (Nestle1904LFT)¶

Table of content ¶

1 - Introduction ¶

Back to TOC¶

This Jupyter Notebook is designed to demonstrate the predefined text formats available in this Text-Fabric dataset, specifically focusing on displaying the Greek surface text of the New Testament.

2 - Load Text-Fabric app and data ¶

Back to TOC¶

%load_ext autoreload

%autoreload 2

# Loading the Text-Fabric code

# Note: it is assumed Text-Fabric is installed in your environment

from tf.fabric import Fabric

from tf.app import use

# load the N1904 app and data

N1904 = use ("tonyjurg/Nestle1904LFT", version="0.7", hoist=globals())

Locating corpus resources ...

The requested app is not available offline ~/text-fabric-data/github/tonyjurg/Nestle1904LFT/app not found rate limit is 5000 requests per hour, with 4996 left for this hour connecting to online GitHub repo tonyjurg/Nestle1904LFT ... connected app/README.md...downloaded app/config.yaml...downloaded app/static...directory app/static/css...directory app/static/css/display_custom.css...downloaded app/static/logo.png...downloaded OK

Data: tonyjurg - Nestle1904LFT 0.7, Character table, Feature docs

Node types

| Name | # of nodes | # slots / node | % coverage |

|---|---|---|---|

| book | 27 | 5102.93 | 100 |

| chapter | 260 | 529.92 | 100 |

| verse | 7943 | 17.35 | 100 |

| sentence | 8011 | 17.20 | 100 |

| wg | 105430 | 6.85 | 524 |

| word | 137779 | 1.00 | 100 |

Features:

Nestle 1904 (Low Fat Tree)

specified

- apiVersion:

3 - appName:

tonyjurg/Nestle1904LFT appPath:

C:/Users/tonyj/text-fabric-data/github/tonyjurg/Nestle1904LFT/app- commit:

e68bd68c7c4c862c1464d995d51e27db7691254f - css:

'' dataDisplay:

excludedFeatures:

orig_orderversebookchapter

noneValues:

noneunknown- no value

NA''

- showVerseInTuple:

0 - textFormat:

text-orig-full

docs:

- docBase:

https://github.com/tonyjurg/Nestle1904LFT/blob/main/docs/ - docPage:

about - docRoot:

https://github.com/tonyjurg/Nestle1904LFT featureBase:

https://github.com/tonyjurg/Nestle1904LFT/blob/main/docs/features/<feature>.md

- docBase:

- interfaceDefaults: {fmt:

layout-orig-full} - isCompatible:

True - local: no value

localDir:

C:/Users/tonyj/text-fabric-data/github/tonyjurg/Nestle1904LFT/_tempprovenanceSpec:

- corpus:

Nestle 1904 (Low Fat Tree) - doi:

10.5281/zenodo.10182594 - org:

tonyjurg - relative:

/tf - repo:

Nestle1904LFT - repro:

Nestle1904LFT - version:

0.7 - webBase:

https://learner.bible/text/show_text/nestle1904/ - webHint:

Show this on the Bible Online Learner website - webLang:

en webUrl:

https://learner.bible/text/show_text/nestle1904/<1>/<2>/<3>- webUrlLex:

{webBase}/word?version={version}&id=<lid>

- corpus:

- release:

v0.6 typeDisplay:

book:

- condense:

True - hidden:

True - label:

{book} - style:

''

- condense:

chapter:

- condense:

True - hidden:

True - label:

{chapter} - style:

''

- condense:

sentence:

- hidden:

0 - label:

#{sentence} (start: {book} {chapter}:{headverse}) - style:

''

- hidden:

verse:

- condense:

True - excludedFeatures:

chapter verse - label:

{book} {chapter}:{verse} - style:

''

- condense:

wg:

- hidden:

0 label:

#{wgnum}: {wgtype} {wgclass} {clausetype} {wgrole} {wgrule} {junction}- style:

''

- hidden:

word:

- base:

True - features:

lemma - featuresBare:

gloss - surpress:

chapter verse

- base:

- writing:

grc

# The following will push the Text-Fabric stylesheet to this notebook (to facilitate proper display with notebook viewer)

N1904.dh(N1904.getCss())

# Set default view in a way to limit noise as much as possible.

N1904.displaySetup(condensed=True, multiFeatures=False, queryFeatures=False)

3 - Performing the queries ¶

Back to TOC¶

3.1 - Display the formatting options available for this corpus¶

Back to TOC¶

The output of the following command provides details on available formats to present the text of the corpus.

N1904.showFormats()

| format | level | template |

|---|---|---|

text-critical |

word | {unicode} |

text-normalized |

word | {normalized}{after} |

text-orig-full |

word | {word}{after} |

text-transliterated |

word | {wordtranslit}{after} |

text-unaccented |

word | {wordunacc}{after} |

Note 1: This data originates from the file otext.tf:

@config

...

@fmt:text-orig-full={word}{after}

...

Note 2: The names of the available formats can also be obtaind by using the following call. However, this will not display the features that are included into the format. The function will return a list of ordered tuples that can easily be postprocessed:

T.formats

{'text-critical': 'word',

'text-normalized': 'word',

'text-orig-full': 'word',

'text-transliterated': 'word',

'text-unaccented': 'word'}

3.2 - Showcasing the various formats¶

Back to TOC¶

The following will show the differences between the displayed text for the various formats. The verse to be printed is from Mark 1:1. The assiated verse node is 139200.

for formats in T.formats:

print(f'fmt={formats}\t: {T.text(139200,formats)}')

fmt=text-critical : Ἀρχὴ τοῦ εὐαγγελίου Ἰησοῦ Χριστοῦ (Υἱοῦ Θεοῦ). fmt=text-normalized : Ἀρχή τοῦ εὐαγγελίου Ἰησοῦ Χριστοῦ Υἱοῦ Θεοῦ. fmt=text-orig-full : Ἀρχὴ τοῦ εὐαγγελίου Ἰησοῦ Χριστοῦ Υἱοῦ Θεοῦ. fmt=text-transliterated : Arkhe tou euaggeliou Iesou Khristou Uiou Theou. fmt=text-unaccented : Αρχη του ευαγγελιου Ιησου Χριστου Υιου Θεου.

3.3 - Normalized text¶

Back to TOC¶

The normalized Greek text refers to a standardized and consistent representation of Greek characters and linguistic elements in a text. Using normalized text ensures a consistent presentation, which, in turn, allows for easier postprocessing. The relevance of normalized text becomes evident through the following demonstration.

In the upcoming code segment, a list will be created to display the top 10 differences in values between the "word" feature and the "normalized" feature on the same word node.

# Library to format table

from tabulate import tabulate

# get a node list for all word nodes

WordQuery = '''

word

'''

# The option 'silent=True' has been added in the next line to prevent printing the number of nodes found

WordResult = N1904.search(WordQuery,silent=True)

# Gather the results where feature normalized is different from feature word

ResultDict = {}

NumberOfChanges=0

for tuple in WordResult:

word=F.word.v(tuple[0])

normalized=F.normalized.v(tuple[0])

if word!=normalized:

Change=f"{word} -> {normalized}"

NumberOfChanges+=1

if Change in ResultDict:

# If it exists, add the count to the existing value

ResultDict[Change]+=1

else:

# If it doesn't exist, initialize the count as the value

ResultDict[Change]=1

print(f"{NumberOfChanges} differences found between feature word and feature normalized.")

# Convert the dictionary into a list of key-value pairs and sort it according to frequency

UnsortedTableData = [[key, value] for key, value in ResultDict.items()]

TableData= sorted(UnsortedTableData, key=lambda row: row[1], reverse=True)

# In this example the table will be truncated

max_rows = 10 # Set your desired number of rows here

TruncatedTable = TableData[:max_rows]

# Produce the table

headers = ["word -> normalized","frequency"]

print(tabulate(TruncatedTable, headers=headers, tablefmt='fancy_grid'))

# Add a warning using markdown (API call A.dm) allowing it to be printed in bold type

N1904.dm("**Warning: table truncated!**")

37182 differences found between feature word and feature normalized. ╒══════════════════════╤═════════════╕ │ word -> normalized │ frequency │ ╞══════════════════════╪═════════════╡ │ καὶ -> καί │ 8545 │ ├──────────────────────┼─────────────┤ │ δὲ -> δέ │ 2620 │ ├──────────────────────┼─────────────┤ │ τὸ -> τό │ 1658 │ ├──────────────────────┼─────────────┤ │ τὸν -> τόν │ 1556 │ ├──────────────────────┼─────────────┤ │ τὴν -> τήν │ 1518 │ ├──────────────────────┼─────────────┤ │ γὰρ -> γάρ │ 921 │ ├──────────────────────┼─────────────┤ │ μὴ -> μή │ 902 │ ├──────────────────────┼─────────────┤ │ τὰ -> τά │ 817 │ ├──────────────────────┼─────────────┤ │ τοὺς -> τούς │ 722 │ ├──────────────────────┼─────────────┤ │ πρὸς -> πρός │ 670 │ ╘══════════════════════╧═════════════╛

Warning: table truncated!

Now, it would be interesting to check whether καί and δέ already exist (with these accents) in the feature "word."

# get a node list for all word nodes with feature word=καί

KaiQuery = '''

word word=καί

'''

KaiResult = N1904.search(KaiQuery)

# get a node list for all word nodes with feature word=δέ

DeQuery = '''

word word=δέ

'''

DeResult = N1904.search(DeQuery)

0.09s 31 results 0.08s 144 results

This demonstrates the presence of variant accents for καί and δέ in the feature word. Consequently, constructing queries based on a single accent variant would result in the omission of certain results.

3.4 - Unaccented text¶

Back to TOC¶

A similar case can be made regarding postprocessing with respect to the unaccented text; however, accents do play a significant role in understanding some Greek words (homographs). It is important to realize that the accents were not part of the original text, which was in unaccented capital letters (uncials) without spaces between words.

# get a node list for all word nodes containing some variants in accents

KosmosQuery = '''

word word~λ[όο]γ[όο]ς

'''

PneumaQuery = '''

word word~πν[εέ][ῦυ]μα

'''

KuriosQuery = '''

word word~κ[ύυ]ρ[ίι]ος

'''

HemeraQuery = '''

word word~ἡμ[έε]ρα

'''

Result = N1904.search(KuriosQuery)

for tuple in Result:

word=F.word.v(tuple[0])

print(word)

0.10s 30 results κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος κύριος

3.5 - Transliterated text¶

Back to TOC¶

Using transliterated text can be convenient when creating queries, as it allows you to use your normal keyboard without the need to include Greek characters. See the following example:

LatinQuery = '''

word wordtranslit=logos

'''

Result = N1904.search(LatinQuery)

for tuple in Result:

word=F.word.v(tuple[0])

print(word)

break

0.09s 63 results λόγος

3.6 - Text with text critical markers¶

Back to TOC¶

A limited number of critical markers are included in the dataset, stored in the features "markbefore" and "markafter." To get an impression of their quantity:

F.markafter.freqList()

(('', 137728), ('—', 31), (')', 11), (']]', 7), ('(', 1), (']', 1))

F.markbefore.freqList()

(('', 137745), ('—', 16), ('(', 10), ('[[', 7), ('[', 1))

A quick investigation was conducted to check the dataset's consistency. Note that an automated check for '—' is not implemented below, as it is difficult to determine whether this marker indicates a start or an end.

# get a node list for all word nodes

WordQuery = '''

word

'''

BracketList=("(" , ")" , "[" , "]" , "[[" , "]]")

# The option 'silent=True' has been added in the next line to prevent printing the number of nodes found

WordResult = N1904.search(WordQuery,silent=True)

SingleRound=SingleSquare=DoubleSquare=False

for tuple in WordResult:

node=tuple[0]

MarkAfter=F.markafter.v(node)

MarkBefore=F.markbefore.v(node)

Mark=MarkAfter+MarkBefore

location="{} {}:{}".format(F.book.v(node),F.chapter.v(node),F.verse.v(node))

if (Mark in BracketList):

if Mark=="(":

if SingleRound==True: print ("Sequence problem?")

SingleRound=True

print (f"{location}: set single round")

if Mark==")":

if SingleRound==False: print ("Sequence problem?")

SingleRound=False

print (f"{location}: unset single round\n")

if Mark=="[":

if SingleSquare==True: print ("Sequence problem?")

SingleSquare=True

print (f"{location}: set single square")

if Mark=="]":

if SingleSquare==False: print ("Sequence problem?")

SingleSquare=False

print (f"{location}: unset single square\n")

if Mark=="[[":

if DoubleSquare==True: print ("Sequence problem?")

DoubleSquare=True

print (f"{location}: set double square")

if Mark=="]]":

if DoubleSquare==False: print ("Sequence problem?")

DoubleSquare=False

print (f"{location}: unset double square\n")

Mark 1:1: set single round Mark 1:1: unset single round Mark 16:9: set double square Mark 16:20: unset double square Mark 16:99: set double square Mark 16:99: unset double square Luke 24:12: set double square Luke 24:12: unset double square Luke 24:36: set double square Luke 24:36: unset double square Luke 24:40: set double square Luke 24:40: unset double square Luke 24:51: set double square Luke 24:51: unset double square Luke 24:52: set double square Luke 24:52: unset double square John 1:38: set single round John 1:38: unset single round John 1:41: set single round John 1:41: unset single round John 1:42: set single round John 1:42: unset single round John 5:3: set single round John 5:4: unset single round John 7:53: set single round John 8:11: unset single round John 9:7: set single round John 9:7: unset single round John 20:16: set single round John 20:16: unset single round Romans 4:16: set single round Romans 4:17: unset single round Ephesians 1:1: set single square Ephesians 1:1: unset single square Colossians 4:10: set single round Colossians 4:10: unset single round I_Timothy 3:5: set single round I_Timothy 3:5: unset single round

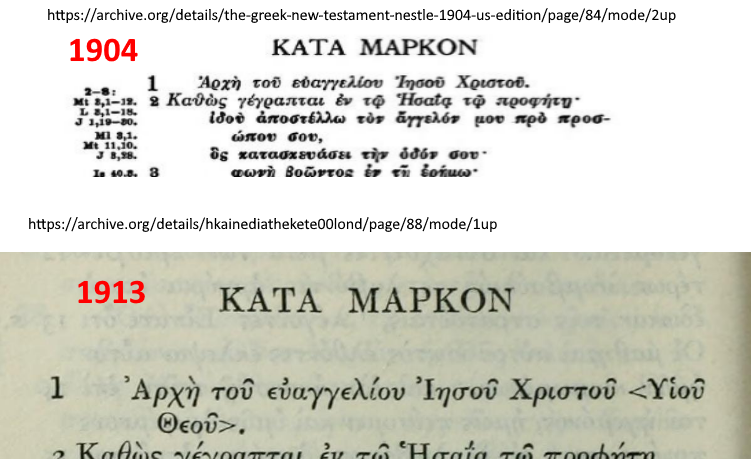

3.7 - Nestle version 1904 and version 1913 (Mark 1:1)¶

Back to TOC¶

The dataset seems to be (also) compiled based upon the Nestle version or 1913, as explained on [https://sites.google.com/site/nestle1904/faq]:

What are your sources? For the text, I used the scanned books available at the Internet Archive (The first edition of 1904, and a reprinting from 1913 – the latter one has a better quality).

Print Mark 1:1 from Text-Fabric data:

T.text(139200,fmt='text-critical')

'Ἀρχὴ τοῦ εὐαγγελίου Ἰησοῦ Χριστοῦ (Υἱοῦ Θεοῦ). '

The result can be verified by examining the scans of the following printed versions:

- Nestle version 1904: @ archive.org

- Nestle version 1913: @ archive.org

Or, in an image, placed side by side: