
Monitoring Text Embeddings with the 20 Newsgroups Dataset

In this example, we will show how to use whylogs and WhyLabs to monitor text data. We will use the 20 Newsgroups dataset to train a classifier and monitor different aspects of the pipeline: we will monitor high dimensional embeddings, the list of tokens, and also the performance of the classifier itself. We will also inject an anomaly to see how we can detect it with WhyLabs. We will translate an increasingly large portion of documents from English to Spanish and see how the model performance degrades and how the embeddings change.

To monitor the embeddings, we will first calculate a number of meaningful reference points. In this case, that means embeddings that represent each document topic. To do so, we will use the labeled data in our training dataset to calculate the centroids in PCA space for each topic. We will then compare each data point of a given batch to this set of reference embeddings. That way, we can calculate distribution metrics based on each document's distance to each reference point.
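As a rough illustration of the comparison step described above, the sketch below computes the cosine distance from every document in a batch to every reference point and then summarizes each column. It is a minimal NumPy sketch, not the whylogs implementation: the function name `cosine_distances` and the toy arrays are hypothetical, and the mean is only one of many possible distribution summaries.

```python
import numpy as np

def cosine_distances(batch, references):
    """Return an (n_docs, n_refs) matrix of cosine distances (1 - cosine similarity)."""
    batch = np.asarray(batch, dtype=float)
    references = np.asarray(references, dtype=float)
    # Normalize rows to unit length; all-zero rows are left as zeros.
    batch_norm = np.linalg.norm(batch, axis=1, keepdims=True)
    ref_norm = np.linalg.norm(references, axis=1, keepdims=True)
    batch_unit = batch / np.where(batch_norm == 0, 1.0, batch_norm)
    ref_unit = references / np.where(ref_norm == 0, 1.0, ref_norm)
    return 1.0 - batch_unit @ ref_unit.T

# Hypothetical toy data: two reference points and three documents in 3 dimensions.
toy_references = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
toy_batch = np.array([[2.0, 0.0, 0.0], [0.0, 3.0, 0.0], [1.0, 1.0, 0.0]])

distances = cosine_distances(toy_batch, toy_references)
# One summary value per reference point (here, the mean distance of the batch).
mean_distance_per_reference = distances.mean(axis=0)
print(distances.round(3))
print(mean_distance_per_reference.round(3))
```

Each column of `distances` corresponds to one reference point, so tracking how a column's distribution moves from batch to batch is one way to notice that documents are drifting away from (or towards) a topic.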

We can also tokenize each document and monitor the list of tokens. This is useful to detect changes in the vocabulary of the dataset, number of tokens per document, and other statistics.
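To make the token statistics concrete, here is a small dependency-free sketch of the kind of values one could derive from tokenized documents: tokens per document, vocabulary size, and the most frequent terms. The helper `token_statistics` and the toy documents are hypothetical and are not part of whylogs.

```python
from collections import Counter

def token_statistics(tokenized_docs, top_k=3):
    """Summarize a batch of tokenized documents."""
    doc_lengths = [len(tokens) for tokens in tokenized_docs]
    term_counts = Counter(token for tokens in tokenized_docs for token in tokens)
    return {
        "doc_lengths": doc_lengths,              # number of tokens per document
        "vocabulary_size": len(term_counts),     # number of distinct tokens
        "frequent_terms": term_counts.most_common(top_k),
    }

# Hypothetical toy batch of two tokenized documents.
toy_docs = [["selling", "football", "game"], ["football", "season", "football"]]
stats = token_statistics(toy_docs)
print(stats)
```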

Finally, we will monitor the performance of the classifier. We will log both predictions and labels for each batch, so we can calculate metrics such as accuracy, precision, recall, and F1 score.
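For reference, the metrics mentioned above can be derived from nothing more than the pairs of labels and predictions. The sketch below is a plain-Python illustration with hypothetical names (`classification_summary`, the toy lists); it is not how WhyLabs computes its performance metrics.

```python
def classification_summary(targets, predictions):
    """Return overall accuracy and per-label precision, recall, and F1."""
    pairs = list(zip(targets, predictions))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    per_label = {}
    for label in sorted(set(targets) | set(predictions)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_label[label] = {"precision": precision, "recall": recall, "f1": f1}
    return accuracy, per_label

# Hypothetical toy batch: one "med" document is misclassified as "guns".
toy_targets = ["med", "med", "guns", "guns"]
toy_predictions = ["med", "guns", "guns", "guns"]
accuracy, per_label = classification_summary(toy_targets, toy_predictions)
print(accuracy)
print(per_label)
```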

What we'll cover in this tutorial

We will divide this example into two stages: Pre-deployment Stage and Production Stage.

In the Pre-deployment Stage we will:

- train a classifier

- calculate the centroids for each topic cluster

In the Production Stage we will:

- load daily batches of data

- vectorize the data

- predict the topic for each document

- log:

  - embeddings distance to the centroids

  - tokens list for each document

  - predictions and targets

In the Production Stage, we will introduce documents in another language (Spanish) to see how the model behaves, and how we can monitor this with WhyLabs.

Installing Dependencies

# Note: you may need to restart the kernel to use updated packages.

%pip install whylogs scikit-learn==1.0.2

✔️ Setting the Environment Variables

import getpass

import os

# set your org-id here - should be something like "org-xxxx"

print("Enter your WhyLabs Org ID")

os.environ["WHYLABS_DEFAULT_ORG_ID"] = input()

# set your dataset_id (or model_id) here - should be something like "model-xxxx"

print("Enter your WhyLabs Dataset ID")

os.environ["WHYLABS_DEFAULT_DATASET_ID"] = input()

# set your API key here

print("Enter your WhyLabs API key")

os.environ["WHYLABS_API_KEY"] = getpass.getpass()

print("Using API Key ID: ", os.environ["WHYLABS_API_KEY"][0:10])

Pre-deployment

Training the model

from sklearn.datasets import fetch_20newsgroups

from sklearn.feature_extraction.text import TfidfTransformer

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.pipeline import Pipeline

import numpy as np

import pandas as pd

from whylogs.experimental.preprocess.embeddings.selectors import PCACentroidsSelector

from sklearn.naive_bayes import MultinomialNB

We will extract TF-IDF vectors to train our classifier. We will later use the same transform pipeline to generate embeddings in the production stage.

categories = [
    "alt.atheism",
    "soc.religion.christian",
    "comp.graphics",
    "rec.sport.baseball",
    "talk.politics.guns",
    "misc.forsale",
    "sci.med",
]

twenty_train = fetch_20newsgroups(
    subset="train", remove=("headers", "footers", "quotes"), categories=categories, shuffle=True, random_state=42
)

vectorizer = Pipeline(
    [
        ("vect", CountVectorizer()),
        ("tfidf", TfidfTransformer()),
    ]
)

vectors_train = vectorizer.fit_transform(twenty_train.data)

vectors_train = vectors_train.toarray()

clf = MultinomialNB(alpha=0.01)

clf.fit(vectors_train, twenty_train.target)

MultinomialNB(alpha=0.01)
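For intuition about what the count-then-TF-IDF pipeline above produces, the following dependency-free sketch weights raw term counts by a smoothed inverse document frequency, `ln((1 + n) / (1 + df)) + 1`, and then L2-normalizes each row. That formula is the one scikit-learn documents for its default settings, but the helper `tfidf_vectors` and the toy corpus are hypothetical, and the sketch ignores the tokenization and lowercasing that `CountVectorizer` performs.

```python
import math
from collections import Counter

def tfidf_vectors(tokenized_docs):
    """Return (vocabulary, rows) with L2-normalized TF-IDF weights per document."""
    vocabulary = sorted({token for doc in tokenized_docs for token in doc})
    n_docs = len(tokenized_docs)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(token for doc in tokenized_docs for token in set(doc))
    idf = {t: math.log((1 + n_docs) / (1 + doc_freq[t])) + 1 for t in vocabulary}
    rows = []
    for doc in tokenized_docs:
        counts = Counter(doc)
        row = [counts[t] * idf[t] for t in vocabulary]
        norm = math.sqrt(sum(v * v for v in row)) or 1.0
        rows.append([v / norm for v in row])
    return vocabulary, rows

# Hypothetical toy corpus: "bike" appears in both documents, so it gets a lower weight.
toy_corpus = [["cheap", "bike", "sale"], ["bike", "race"]]
vocab, tfidf = tfidf_vectors(toy_corpus)
print(vocab)
print([[round(v, 3) for v in row] for row in tfidf])
```

Terms that occur in many documents receive a smaller weight than terms that are specific to a few, which is why these vectors can help separate topics.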

Calculating Reference Embeddings

If we have labels for our data, selecting the centroids of clusters for each label makes sense. We provide a helper class, PCACentroidsSelector, that finds the centroids in PCA space before converting back to the original dimensional space. The number of components should be high enough to capture enough information about the clusters, but not so high that it becomes computationally expensive. In this example, let's use 20 components.

Let's utilize the labels available in the dataset for determining our references.

references, labels = PCACentroidsSelector(n_components=20).calculate_references(vectors_train, twenty_train.target)

ref_labels = [twenty_train.target_names[x].split(".")[-1] for x in labels]

print(ref_labels)

['atheism', 'graphics', 'forsale', 'baseball', 'med', 'christian', 'guns']
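The idea behind this step (project to PCA space, average per label, map back to the original space) can be sketched with plain NumPy. This is only an illustration of the description above under the assumption of an SVD-based PCA; it is not the code of `PCACentroidsSelector`, and `pca_centroids` and the toy data are hypothetical.

```python
import numpy as np

def pca_centroids(X, y, n_components):
    """Per-label centroids computed in PCA space, mapped back to the input space."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mean = X.mean(axis=0)
    # Rows of vt are the principal axes of the centered data.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    components = vt[:n_components]
    projected = (X - mean) @ components.T
    labels = np.unique(y)
    centroids_pca = np.stack([projected[y == label].mean(axis=0) for label in labels])
    # Inverse transform: back from PCA coordinates to the original dimensions.
    centroids = centroids_pca @ components + mean
    return centroids, labels

# Hypothetical toy data: two well-separated clusters in 5 dimensions.
rng = np.random.default_rng(0)
toy_X = np.vstack([rng.normal(0.0, 0.1, size=(20, 5)), rng.normal(3.0, 0.1, size=(20, 5))])
toy_y = np.array([0] * 20 + [1] * 20)
toy_refs, toy_labels = pca_centroids(toy_X, toy_y, n_components=2)
print(toy_refs.round(2))
```

Because the returned centroids live in the original feature space, they can be compared directly against the TF-IDF vectors of new documents.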

Production Stage

Configuring Schema for Embeddings+Tokens+Performance logging

By default, whylogs will calculate standard metrics. For this example, we'll be using specialized metrics such as EmbeddingMetric and BagOfWordsMetric, so we need to create a custom schema. Let's do that:

import whylogs as why

from whylogs.core.resolvers import MetricSpec, ResolverSpec

from whylogs.core.schema import DeclarativeSchema

from whylogs.experimental.extras.embedding_metric import (
    DistanceFunction,
    EmbeddingConfig,
    EmbeddingMetric,
)

from whylogs.experimental.extras.nlp_metric import BagOfWordsMetric

from whylogs.core.resolvers import STANDARD_RESOLVER

config = EmbeddingConfig(
    references=references,
    labels=ref_labels,
    distance_fn=DistanceFunction.cosine,
)

embeddings_resolver = ResolverSpec(column_name="news_centroids", metrics=[MetricSpec(EmbeddingMetric, config)])

tokens_resolver = ResolverSpec(column_name="document_tokens", metrics=[MetricSpec(BagOfWordsMetric)])

embedding_schema = DeclarativeSchema(STANDARD_RESOLVER+[embeddings_resolver])

token_schema = DeclarativeSchema(STANDARD_RESOLVER+[tokens_resolver])

Loading daily batches

To speed things up, let's download the production data from a public S3 bucket. That way, we won't have to translate or tokenize the documents ourselves.

The DataFrame below contains 5306 documents: 2653 in English and 2653 in Spanish. The Spanish documents were obtained by simply translating the English ones. Documents that have the same doc_id refer to the same document in different languages.

The tokenization was done using the nltk library.

download_url = "https://whylabs-public.s3.us-west-2.amazonaws.com/whylogs_examples/Newsgroups/production_en_es.parquet"

prod_df = pd.read_parquet(download_url)

prod_df.head()

| | doc | target | predicted | tokens | language | batch_id | doc_id |
|---|---|---|---|---|---|---|---|
| 0 | Hello\n\n Just one quick question\n ... | 4 | 4 | [Hello, Just, one, quick, question, My, father... | en | 0 | 0.0 |
| 1 | OFFICIAL UNITED NATIONS SOUVENIR FOLDERS\n\nEa... | 2 | 2 | [OFFICIAL, UNITED, NATIONS, SOUVENIR, FOLDERS,... | en | 0 | 1.0 |
| 2 | I am selling Joe Montana SportsTalk Football 9... | 2 | 2 | [I, selling, Joe, Montana, SportsTalk, Footbal... | en | 0 | 2.0 |
| 3 | \n\nNonsteroid Proventil is a brand of albute... | 4 | 4 | [Nonsteroid, Proventil, brand, albuterol, bron... | en | 0 | 3.0 |
| 4 | Two URGENT requests\n\n1 I need the latest upd... | 6 | 6 | [Two, URGENT, requests, 1, I, need, latest, up... | en | 0 | 4.0 |

Language Perturbation - Spanish Documents

Now, we just need to define the ratio of translated documents. We will log 7 batches of data, each representing a day. The first 4 days will be unaltered, so we have a normal behavior to compare to. The last 3 days will have an increasing number of Spanish documents - 33%, 66%, and 100%.

Let's just define a function that will get the data for a given batch and desired ratio of Spanish documents:

language_perturbation_ratio = [0,0,0,0,0.33,0.66,1]

def get_docs_by_language_ratio(batch_df, ratio):
    # number of distinct documents in the batch (each one exists in both languages)
    n_docs = len(batch_df[batch_df["language"] == "en"])
    n_es_docs = int(n_docs * ratio)
    n_en_docs = n_docs - n_es_docs
    en_df = batch_df[batch_df["language"] == "en"].sample(n_en_docs)
    # filter out docs with doc_id in en_df, so no document appears in both languages
    es_df = batch_df[~batch_df['doc_id'].isin(en_df["doc_id"])]
    es_df = es_df[es_df["language"] == "es"].sample(n_es_docs)
    docs = pd.concat([en_df, es_df])
    return docs
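To check the intent of the sampling function above without pandas, the same selection logic can be mirrored on a plain list of document ids. The helper `mix_doc_ids`, its `seed` parameter, and the toy ids are hypothetical; the point is only that the English and Spanish picks never share a doc_id and together cover the whole batch.

```python
import random

def mix_doc_ids(doc_ids, ratio, seed=0):
    """Pick which doc_ids stay in English and which are swapped for Spanish."""
    rng = random.Random(seed)
    n_docs = len(doc_ids)
    n_es = int(n_docs * ratio)
    n_en = n_docs - n_es
    en_ids = set(rng.sample(doc_ids, n_en))
    # Spanish documents are drawn only from ids not already used in English.
    remaining = [doc_id for doc_id in doc_ids if doc_id not in en_ids]
    es_ids = set(rng.sample(remaining, n_es))
    return en_ids, es_ids

# Hypothetical toy batch of 10 documents, half of them translated.
toy_ids = list(range(10))
en_ids, es_ids = mix_doc_ids(toy_ids, ratio=0.5)
print(sorted(en_ids), sorted(es_ids))
```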

Log and Upload to WhyLabs

We now have everything we need to log our data and send it to WhyLabs. We will vectorize the data to log the embedding distances, and also get the tokens list for each document to log the tokens distribution. We will also predict each document's topic with our trained classifier and log the predictions and targets, so we have access to performance metrics in our dashboard.

from datetime import datetime,timedelta, timezone

import whylogs as why

from whylogs.api.writer.whylabs import WhyLabsWriter

import random

writer = WhyLabsWriter()

for day, batch_df in prod_df.groupby("batch_id"):
    batch_df['tokens'] = batch_df['tokens'].apply(lambda x: x.tolist())
    dataset_timestamp = datetime.now() - timedelta(days=6-day)
    dataset_timestamp = dataset_timestamp.replace(hour=0, minute=0, second=0, microsecond=0, tzinfo = timezone.utc)
    print(f"day {day}: {dataset_timestamp}")

    ratio = language_perturbation_ratio[day]
    print(f"{ratio*100}% of documents with language perturbation")
    mixed_df = get_docs_by_language_ratio(batch_df, ratio)
    mixed_df = mixed_df.dropna()

    sample_ratio = random.uniform(0.8, 1) # just to have some variability in the total number of daily docs
    mixed_df = mixed_df.sample(frac=sample_ratio).reset_index(drop=True)

    vectors = vectorizer.transform(mixed_df['doc']).toarray()
    predicted = clf.predict(vectors)
    print("mean accuracy: ", np.mean(predicted == mixed_df['target']))

    print("Profiling and logging Embeddings and Tokens...")
    embeddings_profile = why.log(row={"news_centroids": vectors},
                                 schema=embedding_schema)
    embeddings_profile.set_dataset_timestamp(dataset_timestamp)
    writer.write(file=embeddings_profile.view())

    tokens_df = pd.DataFrame({"document_tokens":mixed_df["tokens"]})
    tokens_profile = why.log(tokens_df, schema=token_schema)
    tokens_profile.set_dataset_timestamp(dataset_timestamp)
    writer.write(file=tokens_profile.view())

    newsgroups_df = pd.DataFrame({"output_target": mixed_df["target"],
                                  "output_prediction": predicted})
    # to map indices to label names
    newsgroups_df["output_target"] = newsgroups_df["output_target"].apply(lambda x: ref_labels[x])
    newsgroups_df["output_prediction"] = newsgroups_df["output_prediction"].apply(lambda x: ref_labels[x])

    print("Profiling and logging classification metrics...")
    classification_results = why.log_classification_metrics(
        newsgroups_df,
        target_column="output_target",
        prediction_column="output_prediction",
        log_full_data=True
    )
    classification_results.set_dataset_timestamp(dataset_timestamp)
    writer.write(file=classification_results.view())

day 0: 2023-03-28 00:00:00+00:00
0% of documents with language perturbation
mean accuracy: 0.8537313432835821
Profiling and logging Embeddings and Tokens...
Profiling and logging classification metrics...

day 1: 2023-03-29 00:00:00+00:00
0% of documents with language perturbation
mean accuracy: 0.8333333333333334
Profiling and logging Embeddings and Tokens...
Profiling and logging classification metrics...

day 2: 2023-03-30 00:00:00+00:00
0% of documents with language perturbation
mean accuracy: 0.8475609756097561
Profiling and logging Embeddings and Tokens...
Profiling and logging classification metrics...

day 3: 2023-03-31 00:00:00+00:00
0% of documents with language perturbation
mean accuracy: 0.811377245508982
Profiling and logging Embeddings and Tokens...
Profiling and logging classification metrics...

day 4: 2023-04-01 00:00:00+00:00
33.0% of documents with language perturbation
mean accuracy: 0.648876404494382
Profiling and logging Embeddings and Tokens...
Profiling and logging classification metrics...

day 5: 2023-04-02 00:00:00+00:00
66.0% of documents with language perturbation
mean accuracy: 0.49244712990936557
Profiling and logging Embeddings and Tokens...
Profiling and logging classification metrics...

day 6: 2023-04-03 00:00:00+00:00
100% of documents with language perturbation
mean accuracy: 0.286144578313253
Profiling and logging Embeddings and Tokens...
Profiling and logging classification metrics...
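The daily timestamps printed above follow a simple rule: the last batch is stamped at midnight UTC of the current day, and each earlier batch one day before the next. A small standard-library sketch of that backfill rule is shown below; `batch_timestamps` and its `now` parameter are hypothetical names, and unlike the loop above it takes the current time in UTC rather than local time.

```python
from datetime import datetime, timedelta, timezone

def batch_timestamps(n_days, now=None):
    """Return one midnight-UTC timestamp per day, ending on the day of `now`."""
    now = now or datetime.now(timezone.utc)
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    return [midnight - timedelta(days=(n_days - 1) - day) for day in range(n_days)]

# Fixed reference time so the result is reproducible.
fixed_now = datetime(2023, 4, 3, 15, 30, tzinfo=timezone.utc)
timestamps = batch_timestamps(7, now=fixed_now)
for day, ts in enumerate(timestamps):
    print(f"day {day}: {ts}")
```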

WhyLabs Dashboard

You should now have access to a number of different features in your dashboard that represent the different aspects of the pipeline we monitored:

- news_centroids: Relative distance of each document to the centroids of each reference topic cluster, and frequent items for the closest centroid for each document

- document_tokens: Distribution of tokens (term length, document length and frequent items) in each document

- output_prediction and output_target: The output (predictions and targets) of the classifier that will also be used to compute metrics on the Performance tab

We won't cover everything here for the sake of brevity, but let's take a look at some of the charts.

With the monitored information, we should be able to correlate the anomalies and reach a conclusion about what happened.

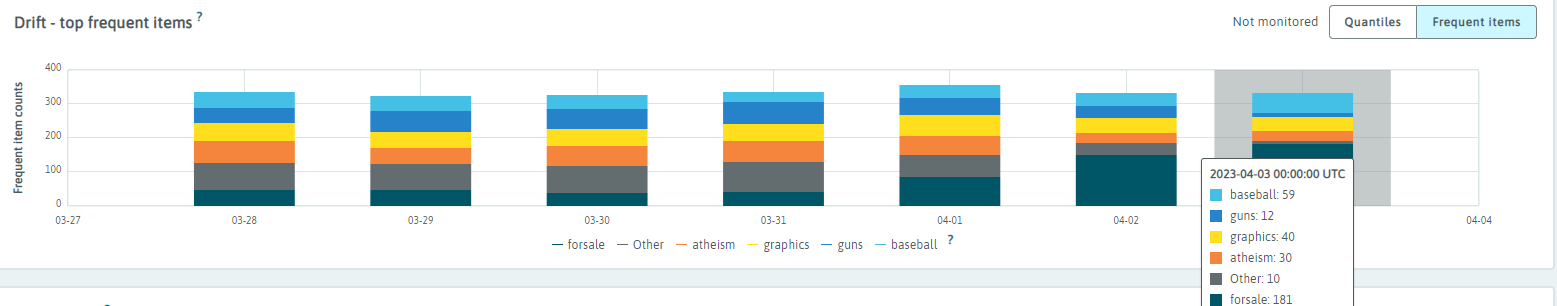

news_centroids.closest

In the chart below, we can see the distribution of the closest centroid for each document. For the first 4 days, the distributions are similar to one another. The language perturbations injected in the last 3 days seem to skew the distribution towards the forsale topic.

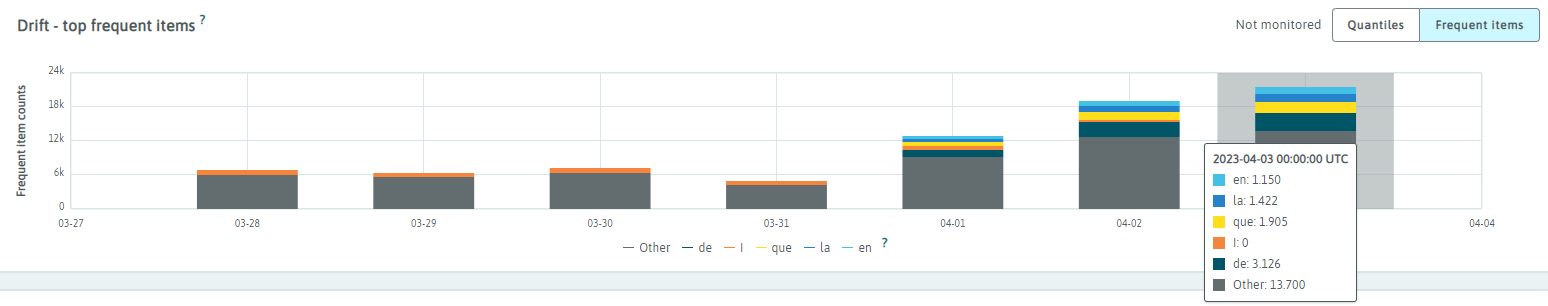
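The "closest centroid" distribution in this chart boils down to picking, for each document, the reference with the smallest distance and counting the winners. A minimal sketch follows, assuming a precomputed distance matrix; the helper `closest_reference_counts` and the toy distances are hypothetical and only illustrate the idea.

```python
from collections import Counter

def closest_reference_counts(distances, labels):
    """Count how often each reference label is the nearest one."""
    counts = Counter()
    for row in distances:
        closest_index = min(range(len(row)), key=lambda i: row[i])
        counts[labels[closest_index]] += 1
    return counts

# Hypothetical distances of four documents to three reference topics.
toy_labels = ["atheism", "forsale", "med"]
toy_distances = [
    [0.2, 0.9, 0.8],
    [0.7, 0.1, 0.9],
    [0.6, 0.3, 0.5],
    [0.9, 0.4, 0.2],
]
counts = closest_reference_counts(toy_distances, toy_labels)
print(counts)
```

Comparing these counts across days is one way to spot a batch in which a single topic suddenly attracts a much larger share of documents.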

document_tokens.frequent_terms

Since our tokenization process removes English stopwords but not Spanish ones, most of the frequent terms in the selected period are Spanish stopwords, and those stopwords don't appear in the first 4 days.

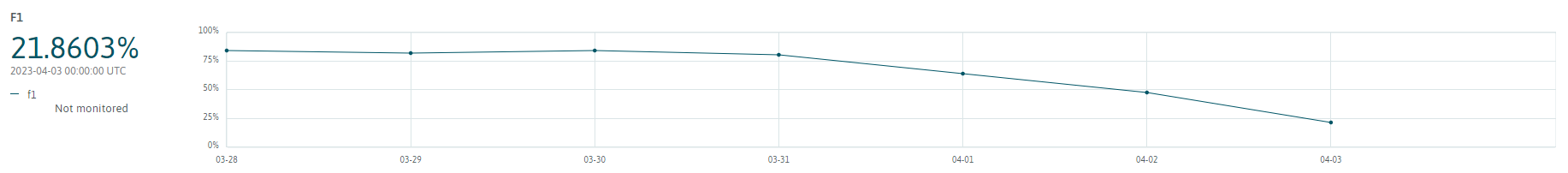
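The effect described here is easy to reproduce on a toy scale: when only English stopwords are filtered, Spanish function words survive and dominate the frequent terms. The stopword set and documents below are hypothetical and far smaller than the nltk list used to build the dataset.

```python
from collections import Counter

# Hypothetical, tiny English stopword list (the real nltk list is much longer).
ENGLISH_STOPWORDS = {"the", "of", "and", "a", "to", "in"}

def frequent_terms(tokenized_docs, stopwords, top_k=3):
    """Most common tokens after dropping the given stopwords."""
    counts = Counter(
        token for doc in tokenized_docs for token in doc if token.lower() not in stopwords
    )
    return counts.most_common(top_k)

english_docs = [["the", "price", "of", "the", "bike"], ["a", "bike", "in", "the", "shop"]]
spanish_docs = [["el", "precio", "de", "la", "bicicleta"], ["la", "bicicleta", "de", "la", "tienda"]]

print(frequent_terms(english_docs, ENGLISH_STOPWORDS))
print(frequent_terms(spanish_docs, ENGLISH_STOPWORDS))
```

In the English batch the top term is a content word, while in the Spanish batch it is an article, which mirrors the shift visible in the chart.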

Performance.F1

In the Performance tab, there is plenty of information telling us that our performance is degrading. For example, the F1 chart below shows that the model is getting increasingly worse starting from the 5th day.

References

This tutorial covers different aspects of whylogs and WhyLabs. Here are some blog posts and examples that relate to this tutorial: