Table of Contents¶

Introduction to Julia¶

Types of computer languages¶

Compiled languages: C/C++, Fortran, ...

- Directly compiled to machine code that is executed by CPU

- Pros: fast, memory efficient

- Cons: longer development time, hard to debug

Interpreted language: R, Matlab, Python, SAS IML, JavaScript, ...

- Interpreted by interpreter

- Pros: fast prototyping

- Cons: excruciatingly slow for loops

Mixed (dynamic) languages: Matlab (JIT), R (

compilerpackage), Julia, Cython, JAVA, ...- Pros and cons: between the compiled and interpreted languages

Script languages: Linux shell scripts, Perl, ...

- Extremely useful for some data preprocessing and manipulation

Database languages: SQL, Hive (Hadoop).

- Data analysis never happens if we do not know how to retrieve data from databases

Messages¶

To be versatile in the big data era, master at least one language in each category.

To improve efficiency of interpreted languages such as R or Matlab, conventional wisdom is to avoid loops as much as possible. Aka, vectorize code

The only loop you are allowed to have is that for an iterative algorithm.

- When looping is unavoidable, need to code in C, C++, or Fortran.

Success stories: the popular glmnet package in R is coded in Fortran; tidyverse packages use a lot RCpp/C++.

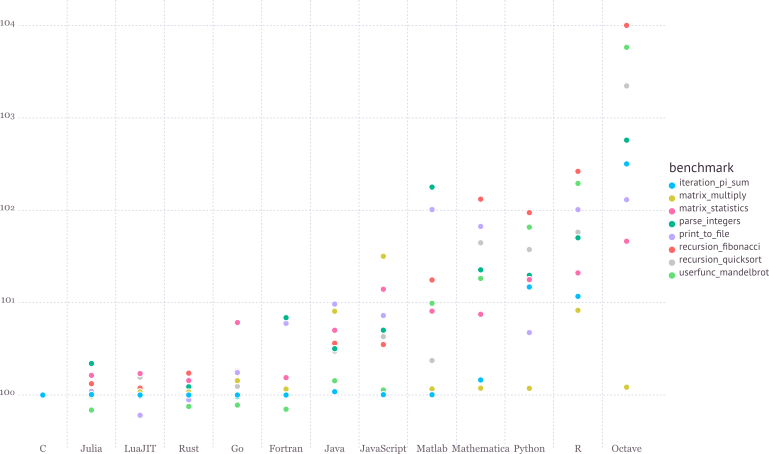

- Modern languages such as Julia tries to solve the two language problem. That is to achieve efficiency without vectorizing code.

What's Julia?¶

Julia is a high-level, high-performance dynamic programming language for technical computing, with syntax that is familiar to users of other technical computing environments

History:

- Project started in 2009. First public release in 2012

- Creators: Jeff Bezanson, Alan Edelman, Stefan Karpinski, Viral Shah

- First major release v1.0 was released on Aug 8, 2018

- Current stable release v1.1.0

Aim to solve the notorious two language problem: Prototype code goes into high-level languages like R/Python, production code goes into low-level language like C/C++.

Julia aims to:

Walks like Python. Runs like C.

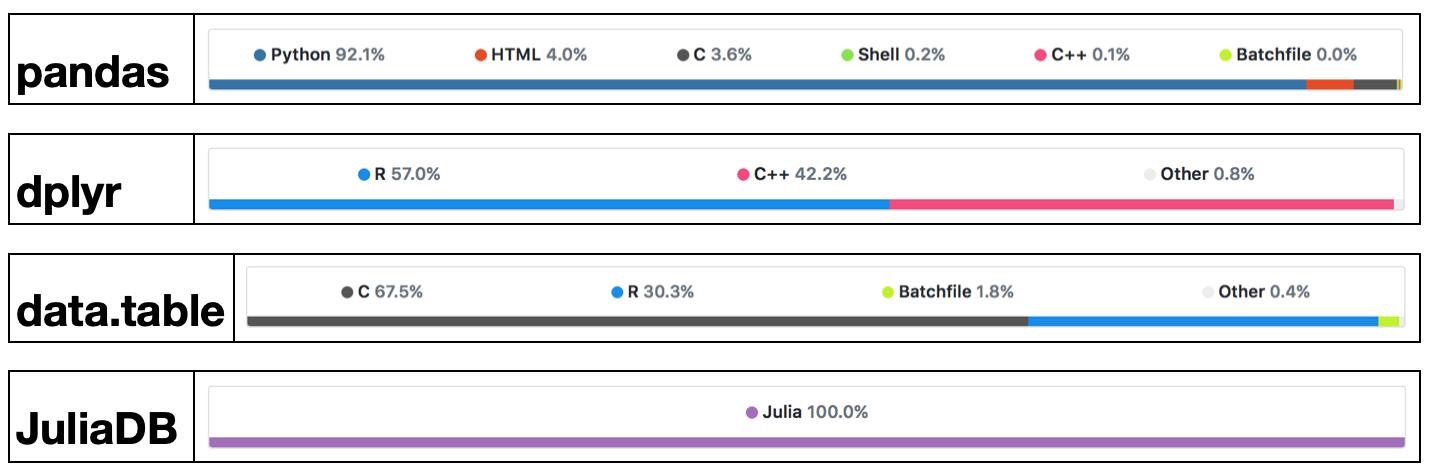

See https://julialang.org/benchmarks/ for the details of benchmark.

Write high-level, abstract code that closely resembles mathematical formulas

- yet produces fast, low-level machine code that has traditionally only been generated by static languages.

Julia is more than just "Fast R" or "Fast Matlab"

- Performance comes from features that work well together.

- You can't just take the magic dust that makes Julia fast and sprinkle it on [language of choice]

R is great, but...¶

It's not meant for high performance computing

- http://adv-r.had.co.nz/Performance.html

- Section on performance starts with "Why is R slow?"

- http://adv-r.had.co.nz/Performance.html

Deficiencies in the core language

- Many fixed with packages (

devtools,roxygen2,Matrix) - Others harder to fix (R uses an old version of BLAS)

- Some impossible to fix (clunky syntax, poor design choices)

- Many fixed with packages (

Only 6 active developers left (out of 20 R-Core members)

- JuliaLang organization has 75 members, with 567 total contributors (as of 3/3/17)

Doug Bates (member of R-Core,

Matrixandlme4)- Getting Doug on board was a big win for statistics with Julia, as he brought a lot of knowledge about the history of R development and design choices

- https://github.com/dmbates/MixedModels.jl

As some of you may know, I have had a (rather late) mid-life crisis and run off with another language called Julia.

-- Doug Bates (on the

knitrGoogle Group)

Gibbs sampler example by Doug Bates¶

An example from Dr. Doug Bates's slides Julia for R Programmers.

The task is to create a Gibbs sampler for the density

using the conditional distributions $$ \begin{eqnarray*} X | Y &\sim& \Gamma \left( 3, \frac{1}{y^2 + 4} \right) \\ Y | X &\sim& N \left(\frac{1}{1+x}, \frac{1}{2(1+x)} \right). \end{eqnarray*} $$

- R solution. The

RCall.jlpackage allows us to execute R code without leaving theJuliaenvironment. We first define an R functionRgibbs().

using RCall

R"""

library(Matrix)

Rgibbs <- function(N, thin) {

mat <- matrix(0, nrow=N, ncol=2)

x <- y <- 0

for (i in 1:N) {

for (j in 1:thin) {

x <- rgamma(1, 3, y * y + 4) # 3rd arg is rate

y <- rnorm(1, 1 / (x + 1), 1 / sqrt(2 * (x + 1)))

}

mat[i,] <- c(x, y)

}

mat

}

"""

RObject{ClosSxp}

function (N, thin)

{

mat <- matrix(0, nrow = N, ncol = 2)

x <- y <- 0

for (i in 1:N) {

for (j in 1:thin) {

x <- rgamma(1, 3, y * y + 4)

y <- rnorm(1, 1/(x + 1), 1/sqrt(2 * (x + 1)))

}

mat[i, ] <- c(x, y)

}

mat

}

To generate a sample of size 10,000 with a thinning of 500. How long does it take?

R"""

system.time(Rgibbs(10000, 500))

"""

RObject{RealSxp}

user system elapsed

21.038 2.197 23.265

- This is a Julia function for the simple Gibbs sampler:

using Distributions

function jgibbs(N, thin)

mat = zeros(N, 2)

x = y = 0.0

for i in 1:N

for j in 1:thin

x = rand(Gamma(3, 1 / (y * y + 4)))

y = rand(Normal(1 / (x + 1), 1 / sqrt(2(x + 1))))

end

mat[i, 1] = x

mat[i, 2] = y

end

mat

end

jgibbs (generic function with 1 method)

Generate the same number of samples. How long does it take?

jgibbs(100, 5); # warm-up

@elapsed jgibbs(10000, 500)

0.317547402

We see 40-80 fold speed up of Julia over R on this example, with similar coding effort!

Learning resources¶

YouTube: Intro to Julia (2h28m), by Jane Herriman.

Cheat sheet: The Fast Track to Julia.

Browse the Julia documentation.

For Matlab users, read Noteworthy Differences From Matlab.

For R users, read Noteworthy Differences From R.

For Python users, read Noteworthy Differences From Python.

The Learning page on Julia's website has pointers to many other learning resources.

Julia REPL (Read-Evaluation-Print-Loop)¶

The Julia REPL, or Julia shell, has at least five modes.

Default mode is the Julian prompt

julia>. Type backspace in other modes to enter default mode.Help mode

help?>. Type?to enter help mode.?search_termdoes a fuzzy search forsearch_term.Shell mode

shell>. Type;to enter shell mode.Package mode

v(1.1) Pkg>. Type]to enter package mode for managing Julia packages (install, uninstall, update, ...).Search mode

(reverse-i-search). Pressctrl+Rto enter search model.With

RCall.jlpackage installed, we can enter the R mode by typing$(shift+4) at Julia REPL.

Some survival commands in Julia REPL:

quit()orCtrl+D: exit Julia.Ctrl+C: interrupt execution.Ctrl+L: clear screen.Append

;(semi-colon) to suppress displaying output from a command. Same as Matlab.include("filename.jl")to source a Julia code file.

Seek help¶

Online help from REPL:

?function_name.Google (of course).

Julia documentation: https://docs.julialang.org/en/.

Look up source code:

@edit fun(x).Friends.

Which IDE?¶

Julia homepage lists many choices: Juno, VS Code, Vim, ...

Unfortunately at the moment there are no mature RStudio- or Matlab-like IDE for Julia yet.

For dynamic document, e.g., homework, I recommend Jupyter Notebook or JupyterLab. JupyterLab is supposed to replace Jupyter Notebook after it reaches v1.0.

For extensive Julia coding, myself has good experience with the editor VS Code with extensions

JuliaandVS Code Jupyter Notebook Previewerinstalled.

Julia package system¶

- Each Julia package is a Git repository. Each Julia package name ends with

.jl. E.g.,Distributions.jlpackage lives at https://github.com/JuliaStats/Distributions.jl.

Google search with PackageName.jl usually leads to the package on github.com.

The package ecosystem is rapidly maturing; a complete list of registered packages (which are required to have a certain level of testing and documentation) is at http://pkg.julialang.org/.

For example, the package called

Distributions.jlis added with

# in Pkg mode

(v1.1) pkg> add Distributions

and "removed" (although not completely deleted) with

# in Pkg mode

(v1.1) pkg> rm Distributions

The package manager provides a dependency solver that determines which packages are actually required to be installed.

Non-registered packages are added by cloning the relevant Git repository. E.g.,

# in Pkg mode

(v1.1) pkg> add https://github.com/OpenMendel/SnpArrays.jl

- A package needs only be added once, at which point it is downloaded into your local

.julia/packagesdirectory in your home directory.

;ls ~/.julia/packages

┌ Warning: special characters "#{}()[]<>|&*?~;" should now be quoted in commands

│ caller = #shell_parse#351(::String, ::Function, ::String, ::Bool) at shell.jl:100

└ @ Base ./shell.jl:100

AbstractFFTs AccurateArithmetic AlgorithmsFromTheBook Arpack AssetRegistry AxisAlgorithms AxisArrays BEDFiles BenchmarkTools BinDeps BinaryProvider Blink BufferedStreams CMake CMakeWrapper CSSUtil CSTParser CSV Cairo Calculus CatIndices CategoricalArrays Cbc Clp Clustering CodecBzip2 CodecXz CodecZlib CodecZstd Codecs ColorTypes ColorVectorSpace Colors CommonSubexpressions Compat Compose ComputationalResources Conda Contour Convex CoordinateTransformations CoupledFields CustomUnitRanges DataArrays DataFrames DataStreams DataStructures DataValues DecFP DecisionTree DeepDiffs DiffEqDiffTools DiffResults DiffRules Distances Distributions DocStringExtensions Documenter DocumenterTools DoubleFloats ECOS EzXML FFTViews FFTW FactCheck FileIO FilePaths FilePathsBase FixedPointNumbers Fontconfig ForwardDiff FunctionalCollections GLM GLPK GLPKMathProgInterface GR GZip Gadfly GenericLinearAlgebra Glob Graphics Gurobi HTTP HTTPClient Hexagons Hiccup Highlights Homebrew HttpCommon HttpParser IJulia IdentityRanges ImageAxes ImageCore ImageDistances ImageFiltering ImageMagick ImageMetadata ImageMorphology ImageShow ImageTransformations Images IndirectArrays IniFile Interact InteractBase InteractBulma InternedStrings Interpolations IntervalSets Ipopt IterTools IterableTables IterativeSolvers IteratorInterfaceExtensions JLD2 JSExpr JSON JuMP Juno KernelDensity Knockout LaTeXStrings Lazy LibCURL LibExpat Libz LinQuadOptInterface LineSearches LinearMaps Literate Loess MATLAB MPI MacroTools MappedArrays MathOptInterface MathProgBase MbedTLS Measures Media MendelPlots Missings Mocking Mosek Mustache Mux NLSolversBase NLopt NaNMath NamedTuples NearestNeighbors NodeJS Nullables ORCA Observables OffsetArrays Optim OptimTestProblems OrderedCollections OrdinalGWAS OrdinalMultinomialModels PDMats PaddedViews Parameters Parsers Pidfile PlotReferenceImages PlotThemes PlotUtils Plotly PlotlyBase PlotlyJS Plots PolrGWAS PolrModels Polynomials PooledArrays PositiveFactorizations Primes ProgressMeter PyCall PyPlot QuadGK QuartzImageIO RCall RData RDatasets RangeArrays Ratios RecipesBase RecursiveArrayTools Reexport Requests Requires ReverseDiffSparse Rmath Roots Rotations Rsvg SCS SIUnits ScikitLearnBase ShowItLikeYouBuildIt Showoff SimpleTraits SnpArrays SoftGlobalScope SortingAlgorithms SpecialFunctions StatPlots StaticArrays StatsBase StatsFuns StatsModels StatsPlots Suppressor TableShowUtils TableTraits TableTraitsUtils Tables TestImages TestSetExtensions TexExtensions TextParse TiledIteration TimeZones Tokenize TranscodingStreams URIParser UnicodePlots VarianceComponentModels VarianceComponentTest VegaDatasets VegaLite VersionParsing VisualRegressionTests WeakRefStrings Weave WebIO WebSockets Widgets WinRPM WinReg WoodburyMatrices YAML ZMQ ZipFile

- Directory of a specific package can be queried by

pathof():

using Distributions

pathof(Distributions)

"/Users/huazhou/.julia/packages/Distributions/fMt8c/src/Distributions.jl"

If you start having problems with packages that seem to be unsolvable, you may try just deleting your .julia directory and reinstalling all your packages.

Periodically, one should run

updatein Pkg mode, which checks for, downloads and installs updated versions of all the packages you currently have installed.statuslists the status of all installed packages.Using functions in package.

using Distributions

This pulls all of the exported functions in the module into your local namespace, as you can check using the whos() command. An alternative is

import Distributions

Now, the functions from the Distributions package are available only using

Distributions.<FUNNAME>

All functions, not only exported functions, are always available like this.

using RCall

x = randn(1000)

R"""

hist($x, main="I'm plotting a Julia vector")

"""

RObject{VecSxp}

$breaks

[1] -5 -4 -3 -2 -1 0 1 2 3 4

$counts

[1] 1 0 28 119 369 320 142 19 2

$density

[1] 0.001 0.000 0.028 0.119 0.369 0.320 0.142 0.019 0.002

$mids

[1] -4.5 -3.5 -2.5 -1.5 -0.5 0.5 1.5 2.5 3.5

$xname

[1] "`#JL`$x"

$equidist

[1] TRUE

attr(,"class")

[1] "histogram"

R"""

library(ggplot2)

qplot($x)

"""

RObject{VecSxp}

┌ Warning: RCall.jl: `stat_bin()` using `bins = 30`. Pick better value with `binwidth`. └ @ RCall /Users/huazhou/.julia/packages/RCall/ffM0W/src/io.jl:113

x = R"""

rnorm(10)

"""

RObject{RealSxp}

[1] -1.76152465 0.28402321 -1.18674201 0.38532610 0.66327415 -0.07548059

[7] -1.22814518 -0.36132394 -1.58982131 1.00019919

# collect R variable into Julia workspace

y = collect(x)

10-element Array{Float64,1}:

-1.761524654252555

0.2840232126371199

-1.1867420051409112

0.38532610091816666

0.6632741501853096

-0.07548059232008238

-1.2281451847872713

-0.36132394376250476

-1.589821307258373

1.0001991932779424

- Access Julia variables in R REPL mode:

julia> x = rand(5) # Julia variable

R> y <- $x

- Pass Julia expression in R REPL mode:

R> y <- $(rand(5))

- Put Julia variable into R environment:

julia> @rput x

R> x

- Get R variable into Julia environment:

R> r <- 2

Julia> @rget r

- If you want to call Julia within R, check out the

XRJuliapackage by John Chambers.

Some basic Julia code¶

# an integer, same as int in R

y = 1

typeof(y)

Int64

# a Float64 number, same as double in R

y = 1.0

typeof(y)

Float64

# Greek letters: `\pi<tab>`

π

π = 3.1415926535897...

typeof(π)

Irrational{:π}

# Greek letters: `\theta<tab>`

θ = y + π

4.141592653589793

# emoji! `\:kissing_cat:<tab>`

😽 = 5.0

5.0

# `\alpha<tab>\hat<tab>`

α̂ = π

π = 3.1415926535897...

# vector of Float64 0s

x = zeros(5)

5-element Array{Float64,1}:

0.0

0.0

0.0

0.0

0.0

# vector Int64 0s

x = zeros(Int, 5)

5-element Array{Int64,1}:

0

0

0

0

0

# matrix of Float64 0s

x = zeros(5, 3)

5×3 Array{Float64,2}:

0.0 0.0 0.0

0.0 0.0 0.0

0.0 0.0 0.0

0.0 0.0 0.0

0.0 0.0 0.0

# matrix of Float64 1s

x = ones(5, 3)

5×3 Array{Float64,2}:

1.0 1.0 1.0

1.0 1.0 1.0

1.0 1.0 1.0

1.0 1.0 1.0

1.0 1.0 1.0

# define array without initialization

x = Matrix{Float64}(undef, 5, 3)

5×3 Array{Float64,2}:

2.353e-314 2.35072e-314 2.33123e-314

2.353e-314 2.33123e-314 0.0

2.33123e-314 2.34943e-314 0.0

2.34943e-314 2.33123e-314 0.0

2.35132e-314 2.33123e-314 0.0

# fill a matrix by 0s

fill!(x, 0)

5×3 Array{Float64,2}:

0.0 0.0 0.0

0.0 0.0 0.0

0.0 0.0 0.0

0.0 0.0 0.0

0.0 0.0 0.0

# initialize an array to be constant 2.5

fill(2.5, (5, 3))

5×3 Array{Float64,2}:

2.5 2.5 2.5

2.5 2.5 2.5

2.5 2.5 2.5

2.5 2.5 2.5

2.5 2.5 2.5

# rational number

a = 3//5

3//5

typeof(a)

Rational{Int64}

b = 3//7

3//7

a + b

36//35

# uniform [0, 1) random numbers

x = rand(5, 3)

5×3 Array{Float64,2}:

0.697832 0.521255 0.635389

0.795756 0.821073 0.046378

0.330146 0.200252 0.997733

0.12915 0.951122 0.227358

0.833891 0.494311 0.428478

# uniform random numbers (in single precision)

x = rand(Float16, 5, 3)

5×3 Array{Float16,2}:

0.8604 0.798 0.1611

0.717 0.4043 0.0762

0.5312 0.0742 0.8906

0.2373 0.1309 0.632

0.5137 0.42 0.5244

# random numbers from {1,...,5}

x = rand(1:5, 5, 3)

5×3 Array{Int64,2}:

1 4 5

2 2 2

5 2 3

2 3 5

1 1 1

# standard normal random numbers

x = randn(5, 3)

5×3 Array{Float64,2}:

1.14235 -0.681071 0.360989

1.95432 -0.0724878 0.329729

-0.288511 -1.22229 1.32657

-0.774164 1.37268 -1.37873

-0.2116 0.861211 -1.25181

# range

1:10

1:10

typeof(1:10)

UnitRange{Int64}

1:2:10

1:2:9

typeof(1:2:10)

StepRange{Int64,Int64}

# integers 1-10

x = collect(1:10)

10-element Array{Int64,1}:

1

2

3

4

5

6

7

8

9

10

# or equivalently

[1:10...]

10-element Array{Int64,1}:

1

2

3

4

5

6

7

8

9

10

# Float64 numbers 1-10

x = collect(1.0:10)

10-element Array{Float64,1}:

1.0

2.0

3.0

4.0

5.0

6.0

7.0

8.0

9.0

10.0

# convert to a specific type

convert(Vector{Float64}, 1:10)

10-element Array{Float64,1}:

1.0

2.0

3.0

4.0

5.0

6.0

7.0

8.0

9.0

10.0

Timing and benchmark¶

Julia¶

@time, @elapsed, @allocated macros:

using Random # standard library

Random.seed!(123) # seed

x = rand(1_000_000) # 1 million random numbers in [0, 1)

@time sum(x) # first run includes compilation time

0.032345 seconds (95.66 k allocations: 4.858 MiB)

500060.34072352527

@time sum(x) # no compilation time after first run

0.000456 seconds (5 allocations: 176 bytes)

500060.34072352527

# just the runtime

@elapsed sum(x)

0.000449506

# just the allocation

@allocated sum(x)

16

Use package BenchmarkTools.jl for more robust benchmarking. Analog of microbenchmark package in R.

using BenchmarkTools

bm = @benchmark sum($x)

BenchmarkTools.Trial: memory estimate: 0 bytes allocs estimate: 0 -------------- minimum time: 244.108 μs (0.00% GC) median time: 268.166 μs (0.00% GC) mean time: 280.042 μs (0.00% GC) maximum time: 2.854 ms (0.00% GC) -------------- samples: 10000 evals/sample: 1

using Statistics # standard library

benchmark_result = Dict() # a dictionary to store median runtime (in milliseconds)

benchmark_result["Julia builtin"] = median(bm.times) / 1e6

0.2681655

C¶

We would use the low-level C code as the baseline for copmarison. In Julia, we can easily run compiled C code using the ccall function.

using Libdl

C_code = """

#include <stddef.h>

double c_sum(size_t n, double *X) {

double s = 0.0;

for (size_t i = 0; i < n; ++i) {

s += X[i];

}

return s;

}

"""

const Clib = tempname() # make a temporary file

# compile to a shared library by piping C_code to gcc

# (works only if you have gcc installed):

open(`gcc -std=c99 -fPIC -O3 -msse3 -xc -shared -o $(Clib * "." * Libdl.dlext) -`, "w") do f

print(f, C_code)

end

# define a Julia function that calls the C function:

c_sum(X::Array{Float64}) = ccall(("c_sum", Clib), Float64, (Csize_t, Ptr{Float64}), length(X), X)

c_sum (generic function with 1 method)

# make sure it gives same answer

c_sum(x)

500060.340723512

bm = @benchmark c_sum($x)

BenchmarkTools.Trial: memory estimate: 0 bytes allocs estimate: 0 -------------- minimum time: 1.117 ms (0.00% GC) median time: 1.134 ms (0.00% GC) mean time: 1.161 ms (0.00% GC) maximum time: 3.056 ms (0.00% GC) -------------- samples: 4297 evals/sample: 1

# store median runtime (in milliseconds)

benchmark_result["C"] = median(bm.times) / 1e6

1.134183

R, buildin sum¶

Next we compare to the build in sum function in R, which is implemented using C.

using RCall

R"""

library(microbenchmark)

y <- $x

rbm <- microbenchmark(sum(y))

"""

RObject{VecSxp}

Unit: microseconds

expr min lq mean median uq max neval

sum(y) 897.061 902.984 978.2313 995.058 1006.212 1239.114 100

# store median runtime (in milliseconds)

@rget rbm # dataframe

benchmark_result["R builtin"] = median(rbm[:time]) / 1e6

0.995058

R, handwritten loop¶

Handwritten loop in R is much slower.

using RCall

R"""

sum_r <- function(x) {

s <- 0

for (xi in x) {

s <- s + xi

}

s

}

library(microbenchmark)

y <- $x

rbm <- microbenchmark(sum_r(y))

"""

RObject{VecSxp}

Unit: milliseconds

expr min lq mean median uq max neval

sum_r(y) 25.42925 26.79393 27.30556 27.21291 27.72341 33.88999 100

# store median runtime (in milliseconds)

@rget rbm # dataframe

benchmark_result["R loop"] = median(rbm[:time]) / 1e6

27.212913

Python, builtin sum¶

Built in function sum in Python.

using PyCall

PyCall.pyversion

v"3.7.3"

# get the Python built-in "sum" function:

pysum = pybuiltin("sum")

bm = @benchmark $pysum($x)

BenchmarkTools.Trial: memory estimate: 368 bytes allocs estimate: 8 -------------- minimum time: 81.864 ms (0.00% GC) median time: 83.359 ms (0.00% GC) mean time: 83.663 ms (0.00% GC) maximum time: 89.594 ms (0.00% GC) -------------- samples: 60 evals/sample: 1

# store median runtime (in miliseconds)

benchmark_result["Python builtin"] = median(bm.times) / 1e6

83.3590615

Python, handwritten loop¶

using PyCall

py"""

def py_sum(A):

s = 0.0

for a in A:

s += a

return s

"""

sum_py = py"py_sum"

bm = @benchmark $sum_py($x)

BenchmarkTools.Trial: memory estimate: 368 bytes allocs estimate: 8 -------------- minimum time: 96.864 ms (0.00% GC) median time: 101.652 ms (0.00% GC) mean time: 101.390 ms (0.00% GC) maximum time: 109.707 ms (0.00% GC) -------------- samples: 50 evals/sample: 1

# store median runtime (in miliseconds)

benchmark_result["Python loop"] = median(bm.times) / 1e6

101.652003

Python, numpy¶

Numpy is the high-performance scientific computing library for Python.

# bring in sum function from Numpy

numpy_sum = pyimport("numpy")."sum"

PyObject <function sum at 0x128308b70>

bm = @benchmark $numpy_sum($x)

BenchmarkTools.Trial: memory estimate: 368 bytes allocs estimate: 8 -------------- minimum time: 303.052 μs (0.00% GC) median time: 336.714 μs (0.00% GC) mean time: 357.059 μs (0.00% GC) maximum time: 2.370 ms (0.00% GC) -------------- samples: 10000 evals/sample: 1

# store median runtime (in miliseconds)

benchmark_result["Python numpy"] = median(bm.times) / 1e6

0.336714

Numpy performance is on a par with Julia build-in sum function. Both are about 3 times faster than C, possibly because of usage of SIMD.

Summary¶

benchmark_result

Dict{Any,Any} with 7 entries:

"R builtin" => 0.995058

"Julia builtin" => 0.268166

"Python builtin" => 83.3591

"C" => 1.13418

"Python loop" => 101.652

"Python numpy" => 0.336714

"R loop" => 27.2129

CandR builtinare the baseline C performance (gold standard).Python builtinandPython loopare 80-100 fold slower than C because the loop is interpreted.R loopis about 30 folder slower than C and indicates the performance of bytecode generated by its compiler package (turned on by default since R v3.4.0 (Apr 2017)).Julia builtinandPython numpyare 3-4 folder faster than C because of SIMD (???).

Matrices and vectors¶

Dimensions¶

x = randn(5, 3)

5×3 Array{Float64,2}:

0.598655 -0.649164 -0.20795

-1.50715 1.41208 0.29778

-2.08909 0.283026 -1.37727

0.0489631 2.06727 -0.0209721

-0.0975634 -0.194017 0.133924

size(x)

(5, 3)

size(x, 1) # nrow() in R

5

size(x, 2)

3

# total number of elements

length(x)

15

Indexing¶

# 5 × 5 matrix of random Normal(0, 1)

x = randn(5, 5)

5×5 Array{Float64,2}:

1.40584 -0.435559 -1.77228 0.616302 0.979726

-0.434523 0.986161 0.942634 -1.27926 -1.15752

0.643141 0.39562 0.396688 -0.220179 -0.841993

-1.23652 -1.03567 -1.227 -1.13857 -1.86035

0.528711 -1.36271 -0.387252 0.79865 0.00320293

# first column

x[:, 1]

5-element Array{Float64,1}:

1.405836567860699

-0.4345229408667341

0.6431414215485608

-1.2365159892763888

0.5287106504891519

# first row

x[1, :]

5-element Array{Float64,1}:

1.405836567860699

-0.4355593559026318

-1.7722776947141923

0.6163015601209474

0.9797260369028392

# sub-array

x[1:2, 2:3]

2×2 Array{Float64,2}:

-0.435559 -1.77228

0.986161 0.942634

# getting a subset of a matrix creates a copy, but you can also create "views"

z = view(x, 1:2, 2:3)

2×2 view(::Array{Float64,2}, 1:2, 2:3) with eltype Float64:

-0.435559 -1.77228

0.986161 0.942634

# same as

@views z = x[1:2, 2:3]

2×2 view(::Array{Float64,2}, 1:2, 2:3) with eltype Float64:

-0.435559 -1.77228

0.986161 0.942634

# change in z (view) changes x as well

z[2, 2] = 0.0

x

5×5 Array{Float64,2}:

1.40584 -0.435559 -1.77228 0.616302 0.979726

-0.434523 0.986161 0.0 -1.27926 -1.15752

0.643141 0.39562 0.396688 -0.220179 -0.841993

-1.23652 -1.03567 -1.227 -1.13857 -1.86035

0.528711 -1.36271 -0.387252 0.79865 0.00320293

# y points to same data as x

y = x

5×5 Array{Float64,2}:

1.40584 -0.435559 -1.77228 0.616302 0.979726

-0.434523 0.986161 0.0 -1.27926 -1.15752

0.643141 0.39562 0.396688 -0.220179 -0.841993

-1.23652 -1.03567 -1.227 -1.13857 -1.86035

0.528711 -1.36271 -0.387252 0.79865 0.00320293

# x and y point to same data

pointer(x), pointer(y)

(Ptr{Float64} @0x000000011a458050, Ptr{Float64} @0x000000011a458050)

# changing y also changes x

y[:, 1] .= 0

x

5×5 Array{Float64,2}:

0.0 -0.435559 -1.77228 0.616302 0.979726

0.0 0.986161 0.0 -1.27926 -1.15752

0.0 0.39562 0.396688 -0.220179 -0.841993

0.0 -1.03567 -1.227 -1.13857 -1.86035

0.0 -1.36271 -0.387252 0.79865 0.00320293

# create a new copy of data

z = copy(x)

5×5 Array{Float64,2}:

0.0 -0.435559 -1.77228 0.616302 0.979726

0.0 0.986161 0.0 -1.27926 -1.15752

0.0 0.39562 0.396688 -0.220179 -0.841993

0.0 -1.03567 -1.227 -1.13857 -1.86035

0.0 -1.36271 -0.387252 0.79865 0.00320293

pointer(x), pointer(z)

(Ptr{Float64} @0x000000011a458050, Ptr{Float64} @0x000000011a458950)

Concatenate matrices¶

# 1-by-3 array

[1 2 3]

1×3 Array{Int64,2}:

1 2 3

# 3-by-1 vector

[1, 2, 3]

3-element Array{Int64,1}:

1

2

3

# multiple assignment by tuple

x, y, z = randn(5, 3), randn(5, 2), randn(3, 5)

([1.07455 2.55508 1.26546; 0.385123 -0.660738 -1.64513; … ; -0.583523 0.43622 0.385691; 0.832241 -1.32354 0.177505], [-0.997382 -0.488503; -0.817314 0.673586; … ; -1.39357 0.729497; 0.53823 0.57519], [-2.30427 1.62085 … 0.054552 0.49251; 1.3284 -1.14737 … 0.167511 -1.1699; -0.908365 0.423386 … -1.26891 0.883105])

[x y] # 5-by-5 matrix

5×5 Array{Float64,2}:

1.07455 2.55508 1.26546 -0.997382 -0.488503

0.385123 -0.660738 -1.64513 -0.817314 0.673586

-2.38285 -0.693591 -0.465704 0.605258 0.302012

-0.583523 0.43622 0.385691 -1.39357 0.729497

0.832241 -1.32354 0.177505 0.53823 0.57519

[x y; z] # 8-by-5 matrix

8×5 Array{Float64,2}:

1.07455 2.55508 1.26546 -0.997382 -0.488503

0.385123 -0.660738 -1.64513 -0.817314 0.673586

-2.38285 -0.693591 -0.465704 0.605258 0.302012

-0.583523 0.43622 0.385691 -1.39357 0.729497

0.832241 -1.32354 0.177505 0.53823 0.57519

-2.30427 1.62085 -0.0751243 0.054552 0.49251

1.3284 -1.14737 -0.121207 0.167511 -1.1699

-0.908365 0.423386 -0.163381 -1.26891 0.883105

Dot operation¶

Dot operation in Julia is elementwise operation, similar to Matlab.

x = randn(5, 3)

5×3 Array{Float64,2}:

1.42693 1.1571 0.352857

0.270257 -0.17928 0.137277

-0.0178663 -1.02672 -0.321545

0.865855 -0.232668 1.1365

0.0332832 0.886387 -1.66359

y = ones(5, 3)

5×3 Array{Float64,2}:

1.0 1.0 1.0

1.0 1.0 1.0

1.0 1.0 1.0

1.0 1.0 1.0

1.0 1.0 1.0

x .* y # same x * y in R

5×3 Array{Float64,2}:

1.42693 1.1571 0.352857

0.270257 -0.17928 0.137277

-0.0178663 -1.02672 -0.321545

0.865855 -0.232668 1.1365

0.0332832 0.886387 -1.66359

x .^ (-2)

5×3 Array{Float64,2}:

0.491126 0.746898 8.0316

13.6914 31.1127 53.0649

3132.79 0.948625 9.67199

1.33386 18.4725 0.774221

902.711 1.27278 0.361334

sin.(x)

5×3 Array{Float64,2}:

0.98967 0.91564 0.34558

0.266979 -0.178321 0.136846

-0.0178653 -0.855607 -0.316033

0.76165 -0.230575 0.907164

0.0332771 0.774793 -0.995698

Basic linear algebra¶

x = randn(5)

5-element Array{Float64,1}:

-0.48876941581527183

-1.8898912365052984

0.4752801478661729

0.5986586722127957

1.8129740483514514

using LinearAlgebra

# vector L2 norm

norm(x)

2.77159570508093

# same as

sqrt(sum(abs2, x))

2.77159570508093

y = randn(5) # another vector

# dot product

dot(x, y) # x' * y

-2.0757729618710195

# same as

x'y

-2.0757729618710195

x, y = randn(5, 3), randn(3, 2)

# matrix multiplication, same as %*% in R

x * y

5×2 Array{Float64,2}:

-0.159807 0.579995

0.621269 1.32793

0.591513 -1.99637

-0.713616 0.684772

-0.557596 0.75847

x = randn(3, 3)

3×3 Array{Float64,2}:

0.153422 1.0588 0.277495

0.271558 0.0482019 0.326489

-1.21251 -0.0983329 -0.623121

# conjugate transpose

x'

3×3 Adjoint{Float64,Array{Float64,2}}:

0.153422 0.271558 -1.21251

1.0588 0.0482019 -0.0983329

0.277495 0.326489 -0.623121

b = rand(3)

x'b # same as x' * b

3-element Array{Float64,1}:

-0.8462924095954427

0.46434157973051327

-0.1889119302892237

# trace

tr(x)

-0.4214969667533476

det(x)

-0.2308584804640239

rank(x)

3

Sparse matrices¶

using SparseArrays

# 10-by-10 sparse matrix with sparsity 0.1

X = sprandn(10, 10, .1)

10×10 SparseMatrixCSC{Float64,Int64} with 10 stored entries:

[6 , 1] = -0.251681

[5 , 2] = -0.778148

[9 , 4] = -0.119565

[2 , 5] = -0.616153

[7 , 5] = -1.40975

[2 , 7] = 0.84617

[7 , 7] = -0.207459

[9 , 8] = -0.0429563

[5 , 9] = -0.388533

[2 , 10] = -0.209722

# convert to dense matrix; be cautious when dealing with big data

Xfull = convert(Matrix{Float64}, X)

10×10 Array{Float64,2}:

0.0 0.0 0.0 0.0 … 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 -0.209722

0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 -0.778148 0.0 0.0 0.0 -0.388533 0.0

-0.251681 0.0 0.0 0.0 … 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 -0.119565 -0.0429563 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0

# convert a dense matrix to sparse matrix

sparse(Xfull)

10×10 SparseMatrixCSC{Float64,Int64} with 10 stored entries:

[6 , 1] = -0.251681

[5 , 2] = -0.778148

[9 , 4] = -0.119565

[2 , 5] = -0.616153

[7 , 5] = -1.40975

[2 , 7] = 0.84617

[7 , 7] = -0.207459

[9 , 8] = -0.0429563

[5 , 9] = -0.388533

[2 , 10] = -0.209722

# syntax for sparse linear algebra is same as dense linear algebra

β = ones(10)

X * β

10-element Array{Float64,1}:

0.0

0.02029443774752057

0.0

0.0

-1.1666816429212037

-0.25168069117368247

-1.617210771834139

0.0

-0.1625211385544279

0.0

# many functions apply to sparse matrices as well

sum(X)

-3.177799806735933

Control flow and loops¶

- if-elseif-else-end

if condition1

# do something

elseif condition2

# do something

else

# do something

end

forloop

for i in 1:10

println(i)

end

- Nested

forloop:

for i in 1:10

for j in 1:5

println(i * j)

end

end

Same as

for i in 1:10, j in 1:5

println(i * j)

end

- Exit loop:

for i in 1:10

# do something

if condition1

break # skip remaining loop

end

end

- Exit iteration:

for i in 1:10

# do something

if condition1

continue # skip to next iteration

end

# do something

end

Functions¶

In Julia, all arguments to functions are passed by reference, in contrast to R and Matlab.

Function names ending with

!indicates that function mutates at least one argument, typically the first.

sort!(x) # vs sort(x)

- Function definition

function func(req1, req2; key1=dflt1, key2=dflt2)

# do stuff

return out1, out2, out3

end

Required arguments are separated with a comma and use the positional notation.

Optional arguments need a default value in the signature.

Semicolon is not required in function call.

return statement is optional.

Multiple outputs can be returned as a tuple, e.g., return out1, out2, out3.

- Anonymous functions, e.g.,

x -> x^2, is commonly used in collection function or list comprehensions.

map(x -> x^2, y) # square each element in x

- Functions can be nested:

function outerfunction()

# do some outer stuff

function innerfunction()

# do inner stuff

# can access prior outer definitions

end

# do more outer stuff

end

- Functions can be vectorized using the Dot syntax:

function myfunc(x)

return sin(x^2)

end

x = randn(5, 3)

myfunc.(x)

5×3 Array{Float64,2}:

0.639626 0.0671957 0.275807

0.139496 0.387134 0.414554

0.721616 0.00984144 0.79227

-0.633982 -0.556283 0.0602744

0.544461 0.156409 0.00768604

Collection function (think this as the series of

applyfunctions in R).Apply a function to each element of a collection:

map(f, coll) # or

map(coll) do elem

# do stuff with elem

# must contain return

end

map(x -> sin(x^2), x)

5×3 Array{Float64,2}:

0.639626 0.0671957 0.275807

0.139496 0.387134 0.414554

0.721616 0.00984144 0.79227

-0.633982 -0.556283 0.0602744

0.544461 0.156409 0.00768604

map(x) do elem

elem = elem^2

return sin(elem)

end

5×3 Array{Float64,2}:

0.639626 0.0671957 0.275807

0.139496 0.387134 0.414554

0.721616 0.00984144 0.79227

-0.633982 -0.556283 0.0602744

0.544461 0.156409 0.00768604

# Mapreduce

mapreduce(x -> sin(x^2), +, x)

3.026104609456178

# same as

sum(x -> sin(x^2), x)

3.026104609456178

- List comprehension

[sin(2i + j) for i in 1:5, j in 1:3] # similar to Python

5×3 Array{Float64,2}:

0.14112 -0.756802 -0.958924

-0.958924 -0.279415 0.656987

0.656987 0.989358 0.412118

0.412118 -0.544021 -0.99999

-0.99999 -0.536573 0.420167

Type system¶

Every variable in Julia has a type.

When thinking about types, think about sets.

Everything is a subtype of the abstract type

Any.An abstract type defines a set of types

- Consider types in Julia that are a

Number:

- Consider types in Julia that are a

- We can explore type hierarchy with

typeof(),supertype(), andsubtypes().

typeof(1.0), typeof(1)

(Float64, Int64)

supertype(Float64)

AbstractFloat

subtypes(AbstractFloat)

4-element Array{Any,1}:

BigFloat

Float16

Float32

Float64

# Is Float64 a subtype of AbstractFloat?

Float64 <: AbstractFloat

true

# On 64bit machine, Int == Int64

Int == Int64

true

# convert to Float64

convert(Float64, 1)

1.0

# same as

Float64(1)

1.0

# Float32 vector

x = randn(Float32, 5)

5-element Array{Float32,1}:

-0.27314892

-1.4175588

0.06751722

-2.4249308

-0.9561249

# convert to Float64

convert(Vector{Float64}, x)

5-element Array{Float64,1}:

-0.27314892411231995

-1.4175587892532349

0.0675172209739685

-2.4249308109283447

-0.9561249017715454

# same as

Float64.(x)

5-element Array{Float64,1}:

-0.27314892411231995

-1.4175587892532349

0.0675172209739685

-2.4249308109283447

-0.9561249017715454

# convert Float64 to Int64

convert(Int, 1.0)

1

convert(Int, 1.5) # should use round(1.5)

InexactError: Int64(1.5)

Stacktrace:

[1] Type at ./float.jl:703 [inlined]

[2] convert(::Type{Int64}, ::Float64) at ./number.jl:7

[3] top-level scope at In[125]:1

round(Int, 1.5)

2

Multiple dispatch¶

Multiple dispatch lies in the core of Julia design. It allows built-in and user-defined functions to be overloaded for different combinations of argument types.

Let's consider a simple "doubling" function:

g(x) = x + x

g (generic function with 1 method)

g(1.5)

3.0

This definition is too broad, since some things, e.g., strings, can't be added

g("hello world")

MethodError: no method matching +(::String, ::String) Closest candidates are: +(::Any, ::Any, !Matched::Any, !Matched::Any...) at operators.jl:502 +(!Matched::PyObject, ::Any) at /Users/huazhou/.julia/packages/PyCall/a5Jd3/src/pyoperators.jl:13 +(::Any, !Matched::PyObject) at /Users/huazhou/.julia/packages/PyCall/a5Jd3/src/pyoperators.jl:14 Stacktrace: [1] g(::String) at ./In[127]:1 [2] top-level scope at In[129]:1

- This definition is correct but too restrictive, since any

Numbercan be added.

g(x::Float64) = x + x

g (generic function with 2 methods)

- This definition will automatically work on the entire type tree above!

g(x::Number) = x + x

g (generic function with 3 methods)

This is a lot nicer than

function g(x)

if isa(x, Number)

return x + x

else

throw(ArgumentError("x should be a number"))

end

end

methods(func)function display all methods defined forfunc.

methods(g)

- g(x::Float64) in Main at In[130]:1

- g(x::Number) in Main at In[131]:1

- g(x) in Main at In[127]:1

When calling a function with multiple definitions, Julia will search from the narrowest signature to the broadest signature.

@which func(x)marco tells which method is being used for argument signaturex.

# an Int64 input

@which g(1)

# a Vector{Float64} input

@which g(randn(5))

Just-in-time compilation (JIT)¶

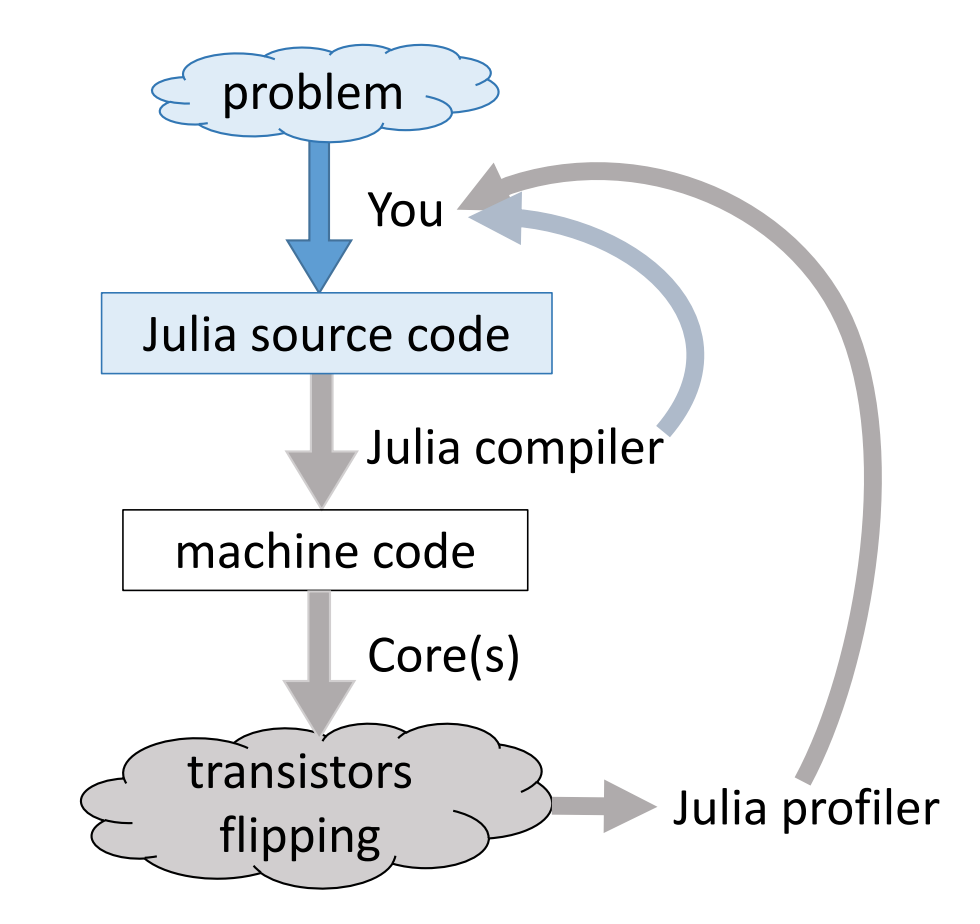

Following figures are taken from Arch D. Robinson's slides Introduction to Writing High Performance Julia.

|

|

|---|---|

Julia's efficiency results from its capability to infer the types of all variables within a function and then call LLVM to generate optimized machine code at run-time.

Consider the g (doubling) function defined earlier. This function will work on any type which has a method for +.

g(2), g(2.0)

(4, 4.0)

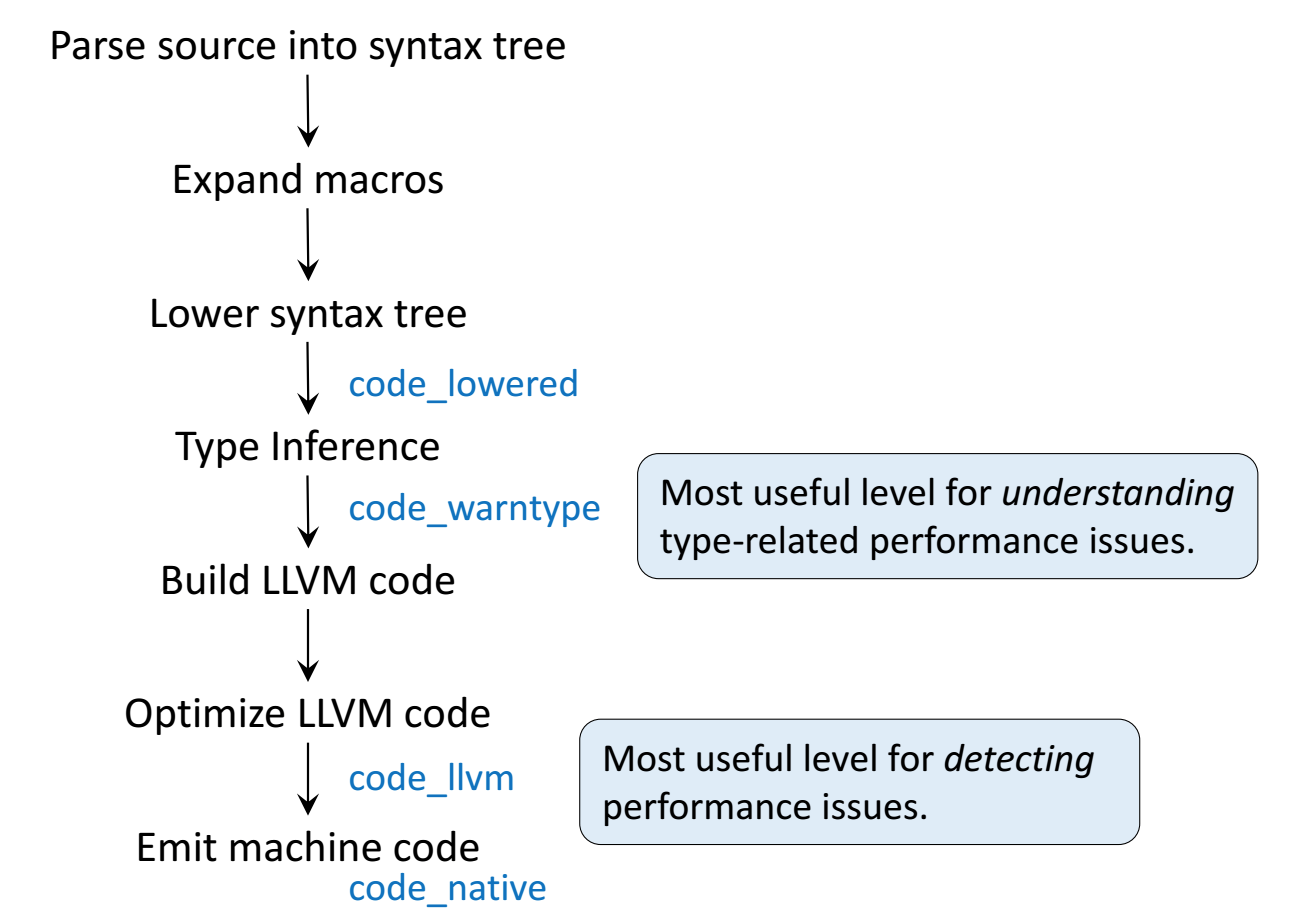

Step 1: Parse Julia code into abstract syntax tree (AST).

@code_lowered g(2)

CodeInfo( 1 ─ %1 = x + x └── return %1 )

Step 2: Type inference according to input type.

@code_warntype g(2)

Body::Int64 1 ─ %1 = (Base.add_int)(x, x)::Int64 └── return %1

@code_warntype g(2.0)

Body::Float64 1 ─ %1 = (Base.add_float)(x, x)::Float64 └── return %1

Step 3: Compile into LLVM bytecode (equivalent of R bytecode generated by compiler package).

@code_llvm g(2)

; @ In[131]:1 within `g'

define i64 @julia_g_15080(i64) {

top:

; ┌ @ int.jl:53 within `+'

%1 = shl i64 %0, 1

; └

ret i64 %1

}

@code_llvm g(2.0)

; @ In[130]:1 within `g'

define double @julia_g_15081(double) {

top:

; ┌ @ float.jl:395 within `+'

%1 = fadd double %0, %0

; └

ret double %1

}

We didn't provide a type annotation. But different LLVM code gets generated depending on the argument type!

In R or Python, g(2) and g(2.0) would use the same code for both.

In Julia, g(2) and g(2.0) dispatches to optimized code for Int64 and Float64, respectively.

For integer input x, LLVM compiler is smart enough to know x + x is simple shifting x by 1 bit, which is faster than addition.

- Step 4: Lowest level is the assembly code, which is machine dependent.

@code_native g(2)

.section __TEXT,__text,regular,pure_instructions ; ┌ @ In[131]:1 within `g' ; │┌ @ In[131]:1 within `+' decl %eax leal (%edi,%edi), %eax ; │└ retl nopw %cs:(%eax,%eax) ; └

@code_native g(2.0)

.section __TEXT,__text,regular,pure_instructions ; ┌ @ In[130]:1 within `g' ; │┌ @ In[130]:1 within `+' vaddsd %xmm0, %xmm0, %xmm0 ; │└ retl nopw %cs:(%eax,%eax) ; └

Profiling Julia code¶

Julia has several built-in tools for profiling. The @time marco outputs run time and heap allocation.

# a function defined earlier

function tally(x::Array)

s = zero(eltype(x))

for v in x

s += v

end

s

end

using Random

Random.seed!(123)

a = rand(20_000_000)

@time tally(a) # first run: include compile time

0.036713 seconds (5.84 k allocations: 276.576 KiB)

1.0000233387279043e7

@time tally(a)

0.026384 seconds (5 allocations: 176 bytes)

1.0000233387279043e7

For more robust benchmarking, the BenchmarkTools.jl package is highly recommended.

using BenchmarkTools

@benchmark tally($a)

BenchmarkTools.Trial: memory estimate: 0 bytes allocs estimate: 0 -------------- minimum time: 21.450 ms (0.00% GC) median time: 23.802 ms (0.00% GC) mean time: 23.774 ms (0.00% GC) maximum time: 29.245 ms (0.00% GC) -------------- samples: 211 evals/sample: 1

The Profile module gives line by line profile results.

using Profile

Profile.clear()

@profile tally(a)

Profile.print(format=:flat)

Count File Line Function

23 ./In[143] 5 tally(::Array{Float64,1})

25 ./boot.jl 328 eval

1 ./broadcast.jl 551 _broadcast_getindex(::Base.Broadcast...

1 ./broadcast.jl 578 _broadcast_getindex

1 ./broadcast.jl 578 _broadcast_getindex_evalf(::typeof(S...

1 ./broadcast.jl 791 copy

1 ./broadcast.jl 791 copy(::Base.Broadcast.Broadcasted{Ba...

2 ./broadcast.jl 928 copyto_nonleaf!(::Array{Expr,1}, ::B...

2 ./broadcast.jl 511 getindex

2 ./broadcast.jl 753 materialize(::Base.Broadcast.Broadca...

26 ./essentials.jl 742 #invokelatest#1

26 ./essentials.jl 741 invokelatest

23 ./float.jl 395 +

26 ./task.jl 259 (::getfield(IJulia, Symbol("##15#18"...

26 .../9ajf8/src/eventloop.jl 8 eventloop(::ZMQ.Socket)

26 .../src/execute_request.jl 67 execute_request(::ZMQ.Socket, ::IJul...

2 .../src/SoftGlobalScope.jl 124 _softscope

1 .../src/SoftGlobalScope.jl 144 _softscope(::Expr, ::Set{Symbol}, ::...

1 .../src/SoftGlobalScope.jl 152 _softscope(::Expr, ::Set{Symbol}, ::...

1 .../src/SoftGlobalScope.jl 154 _softscope(::Expr, ::Set{Symbol}, ::...

1 .../src/SoftGlobalScope.jl 177 softscope(::Module, ::Expr)

1 .../src/SoftGlobalScope.jl 217 softscope_include_string(::Module, :...

25 .../src/SoftGlobalScope.jl 218 softscope_include_string(::Module, :...

One can use ProfileView package for better visualization of profile data:

using ProfileView

ProfileView.view()

Memory profiling¶

Detailed memory profiling requires a detour. First let's write a script bar.jl, which contains the workload function tally and a wrapper for profiling.

;cat bar.jl

using Profile

function tally(x::Array)

s = zero(eltype(x))

for v in x

s += v

end

s

end

# call workload from wrapper to avoid misattribution bug

function wrapper()

y = rand(10000)

# force compilation

println(tally(y))

# clear allocation counters

Profile.clear_malloc_data()

# run compiled workload

println(tally(y))

end

wrapper()

Next, in terminal, we run the script with --track-allocation=user option.

;julia --track-allocation=user bar.jl

5012.163648117665 5012.163648117665

The profiler outputs a file bar.jl.21740.mem.

;cat bar.jl.21740.mem

- using Profile

-

- function tally(x::Array)

- s = zero(eltype(x))

- for v in x

- s += v

- end

- s

- end

-

- # call workload from wrapper to avoid misattribution bug

- function wrapper()

0 y = rand(10000)

- # force compilation

0 println(tally(y))

- # clear allocation counters

0 Profile.clear_malloc_data()

- # run compiled workload

144 println(tally(y))

- end

-

- wrapper()

-