Table of Contents¶

versioninfo()

Julia Version 1.1.0 Commit 80516ca202 (2019-01-21 21:24 UTC) Platform Info: OS: macOS (x86_64-apple-darwin14.5.0) CPU: Intel(R) Core(TM) i7-6920HQ CPU @ 2.90GHz WORD_SIZE: 64 LIBM: libopenlibm LLVM: libLLVM-6.0.1 (ORCJIT, skylake) Environment: JULIA_EDITOR = code

Conjugate Gradient and Krylov Subspace Methods¶

Introduction¶

- Conjugate gradient is the top-notch iterative method for solving large, structured linear systems $\mathbf{A} \mathbf{x} = \mathbf{b}$, where $\mathbf{A}$ is pd.

Earlier we talked about Jacobi, Gauss-Seidel, and successive over-relaxation (SOR) as the classical iterative solvers. They are rarely used in practice due to slow convergence.

[Kershaw's results](http://www.sciencedirect.com/science/article/pii/0021999178900980?via%3Dihub) for a fusion problem.

| Method | Number of Iterations |

|---|---|

| Gauss Seidel | 208,000 |

| Block SOR methods | 765 |

| Incomplete Cholesky conjugate gradient | 25 |

- History: Hestenes (UCLA professor!) and Stiefel proposed conjugate gradient method in 1950s.

Hestenes and Stiefel (1952), Methods of conjugate gradients for solving linear systems, Jounral of Research of the National Bureau of Standards.

- Solve linear equation $\mathbf{A} \mathbf{x} = \mathbf{b}$, where $\mathbf{A} \in \mathbb{R}^{n \times n}$ is pd, is equivalent to

Denote $\nabla f(\mathbf{x}) = \mathbf{A} \mathbf{x} - \mathbf{b} =: r(\mathbf{x})$.

Conjugate gradient (CG) method¶

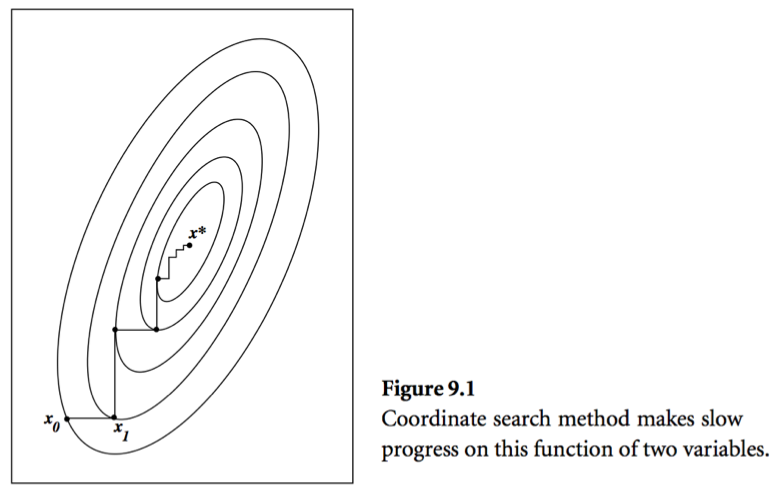

- Consider a simple idea: coordinate descent, that is to update components $x_j$ alternatingly. Same as the Gauss-Seidel iteration. Usually it takes too many iterations.

- A set of vectors $\{\mathbf{x}^{(0)},\ldots,\mathbf{x}^{(l)}\}$ is said to be conjugate with respect to $\mathbf{A}$ if

For example, eigen-vectors of $\mathbf{A}$ are conjugate to each other. Why?

- Conjugate direction method: Given a set of conjugate vectors $\{\mathbf{p}^{(0)},\ldots,\mathbf{p}^{(l)}\}$, at iteration $t$, we search along the conjugate direction $\mathbf{p}^{(t)}$

where $$ \begin{eqnarray*} \alpha^{(t)} = - \frac{\mathbf{r}^{(t)T} \mathbf{p}^{(t)}}{\mathbf{p}^{(t)T} \mathbf{A} \mathbf{p}^{(t)}} \end{eqnarray*} $$ is the optimal step length.

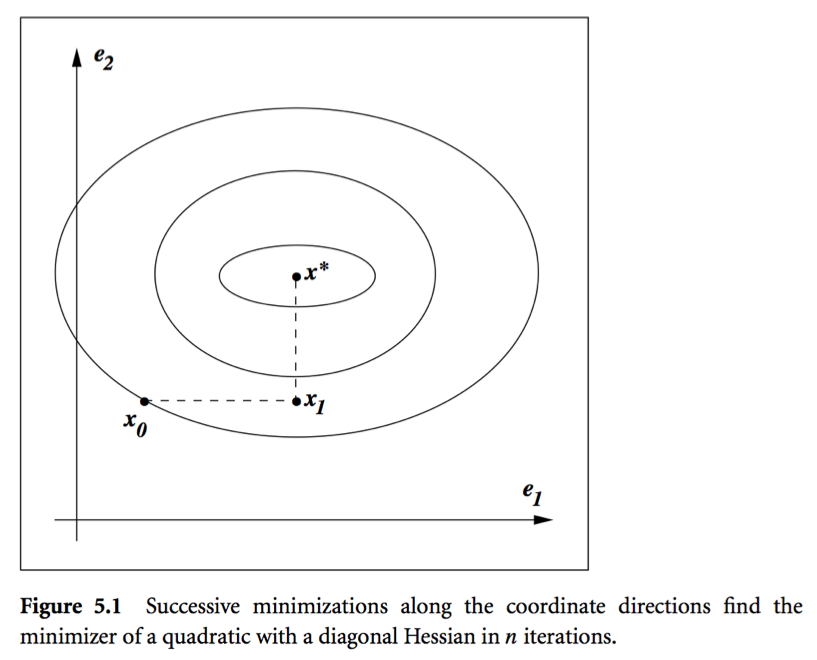

Theorem: In conjugate direction method, $\mathbf{x}^{(t)}$ converges to the solution in at most $n$ steps.

Intuition: Look at graph.

- Conjugate gradient method. Idea: generate $\mathbf{p}^{(t)}$ using only $\mathbf{p}^{(t-1)}$

where $\beta^{(t)}$ is determined by the conjugacy condition $\mathbf{p}^{(t-1)T} \mathbf{A} \mathbf{p}^{(t)} = 0$ $$ \begin{eqnarray*} \beta^{(t)} = \frac{\mathbf{r}^{(t)T} \mathbf{A} \mathbf{p}^{(t-1)}}{\mathbf{p}^{(t-1)T} \mathbf{A} \mathbf{p}^{(t-1)}}. \end{eqnarray*} $$

CG algorithm (preliminary version):

- Given $\mathbf{x}^{(0)}$

- Initialize: $\mathbf{r}^{(0)} \gets \mathbf{A} \mathbf{x}^{(0)} - \mathbf{b}$, $\mathbf{p}^{(0)} \gets - \mathbf{r}^{(0)}$, $t=0$

- While $\mathbf{r}^{(0)} \ne \mathbf{0}$

- $\alpha^{(t)} \gets - \frac{\mathbf{r}^{(t)T} \mathbf{p}^{(t)}}{\mathbf{p}^{(t)T} \mathbf{A} \mathbf{p}^{(t)}}$

- $\mathbf{x}^{(t+1)} \gets \mathbf{x}^{(t)} + \alpha^{(t)} \mathbf{p}^{(t)}$

- $\mathbf{r}^{(t+1)} \gets \mathbf{A} \mathbf{x}^{(t+1)} - \mathbf{b}$

- $\beta^{(t+1)} \gets \frac{\mathbf{r}^{(t+1)T} \mathbf{A} \mathbf{p}^{(t)}}{\mathbf{p}^{(t)T} \mathbf{A} \mathbf{p}^{(t)}}$

- $\mathbf{p}^{(t+1)} \gets - \mathbf{r}^{(t+1)} + \beta^{(t+1)} \mathbf{p}^{(t)}$

- $t \gets t+1$

Remark: The initial conjugate direction $\mathbf{p}^{(0)} \gets - \mathbf{r}^{(0)}$ is crucial.

Theorem: With CG algorithm 0. $\mathbf{r}^{(t)}$ are mutually orthogonal. 0. $\{\mathbf{r}^{(0)},\ldots,\mathbf{r}^{(t)}\}$ is contained in the Krylov subspace of degree $t$ for $\mathbf{r}^{(0)}$, denoted by $$ \begin{eqnarray*} {\cal K}(\mathbf{r}^{(0)}; t) = \text{span} \{\mathbf{r}^{(0)},\mathbf{A} \mathbf{r}^{(0)}, \mathbf{A}^2 \mathbf{r}^{(0)}, \ldots, \mathbf{A}^{t} \mathbf{r}^{(0)}\}. \end{eqnarray*} $$ 0. $\{\mathbf{p}^{(0)},\ldots,\mathbf{p}^{(t)}\}$ is contained in ${\cal K}(\mathbf{r}^{(0)}; t)$. 0. $\mathbf{p}^{(0)}, \ldots, \mathbf{p}^{(t)}$ are conjugate with respect to $\mathbf{A}$.

The iterates $\mathbf{x}^{(t)}$ converge to the solution in at most $n$ steps.

CG algorithm (economical version): saves one matrix-vector multiplication.

- Given $\mathbf{x}^{(0)}$

- Initialize: $\mathbf{r}^{(0)} \gets \mathbf{A} \mathbf{x}^{(0)} - \mathbf{b}$, $\mathbf{p}^{(0)} \gets - \mathbf{r}^{(0)}$, $t=0$

- While $\mathbf{r}^{(0)} \ne \mathbf{0}$

- $\alpha^{(t)} \gets \frac{\mathbf{r}^{(t)T} \mathbf{r}^{(t)}}{\mathbf{p}^{(t)T} \mathbf{A} \mathbf{p}^{(t)}}$

- $\mathbf{x}^{(t+1)} \gets \mathbf{x}^{(t)} + \alpha^{(t)} \mathbf{p}^{(t)}$

- $\mathbf{r}^{(t+1)} \gets \mathbf{r}^{(t)} + \alpha^{(t)} \mathbf{A} \mathbf{p}^{(t)}$

- $\beta^{(t+1)} \gets \frac{\mathbf{r}^{(t+1)T} \mathbf{r}^{(t+1)}}{\mathbf{r}^{(t)T} \mathbf{r}^{(t)}}$

- $\mathbf{p}^{(t+1)} \gets - \mathbf{r}^{(t+1)} + \beta^{(t+1)} \mathbf{p}^{(t)}$

- $t \gets t+1$

Computation cost per iteration is one matrix vector multiplication: $\mathbf{A} \mathbf{p}^{(t)}$.

Consider PageRank problem, $\mathbf{A}$ has dimension $n \approx 10^{10}$ but is highly structured (sparse + low rank). Each matrix vector multiplication takes $O(n)$.

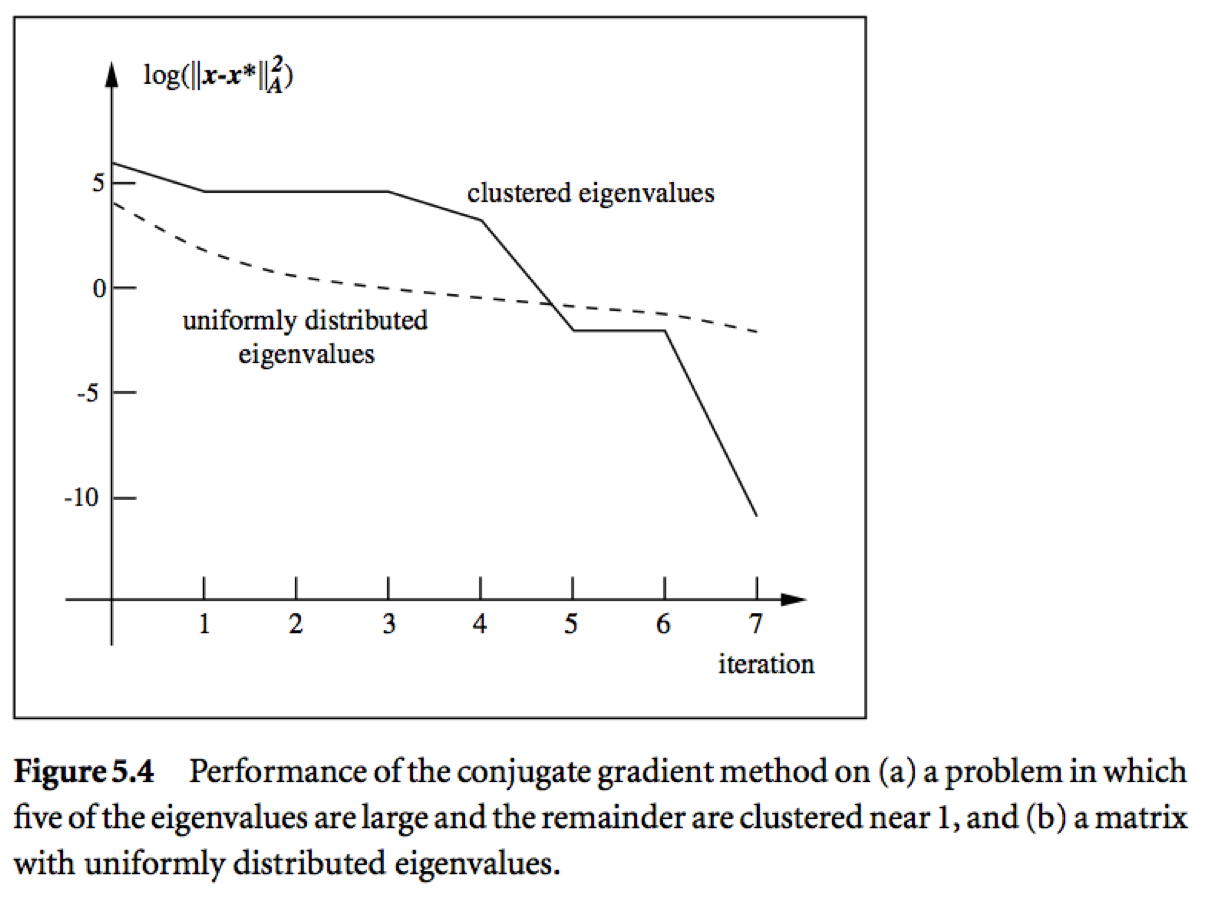

- Theorem: If $\mathbf{A}$ has $r$ distinct eigenvalues, $\mathbf{x}^{(t)}$ converges to solution $\mathbf{x}^*$ in at most $r$ steps.

Pre-conditioned conjugate gradient (PCG)¶

Summary of conjugate gradient method for solving $\mathbf{A} \mathbf{x} = \mathbf{b}$ or equivalently minimizing $\frac 12 \mathbf{x}^T \mathbf{A} \mathbf{x} - \mathbf{b}^T \mathbf{x}$:

- Each iteration needs one matrix vector multiplication: $\mathbf{A} \mathbf{p}^{(t+1)}$. For structured $\mathbf{A}$, often $O(n)$ cost per iteration.

- Guaranteed to converge in $n$ steps.

Two important bounds for conjugate gradient algorithm:

Let $\lambda_1 \le \cdots \le \lambda_n$ be the ordered eigenvalues of a pd $\mathbf{A}$.

where $\kappa(\mathbf{A}) = \lambda_n/\lambda_1$ is the condition number of $\mathbf{A}$.

Messages:

- Roughly speaking, if the eigenvalues of $\mathbf{A}$ occur in $r$ distinct clusters, the CG iterates will approximately solve the problem after $O(r)$ steps.

- $\mathbf{A}$ with a small condition number ($\lambda_1 \approx \lambda_n$) converges fast.

Pre-conditioning: Change of variables $\widehat{\mathbf{x}} = \mathbf{C} \mathbf{x}$ via a nonsingular $\mathbf{C}$ and solve

Choose $\mathbf{C}$ such that * $\mathbf{C}^{-T} \mathbf{A} \mathbf{C}^{-1}$ has small condition number, or * $\mathbf{C}^{-T} \mathbf{A} \mathbf{C}^{-1}$ has clustered eigenvalues * Inexpensive solution of $\mathbf{C}^T \mathbf{C} \mathbf{y} = \mathbf{r}$

Preconditioned CG does not make use of $\mathbf{C}$ explicitly, but rather the matrix $\mathbf{M} = \mathbf{C}^T \mathbf{C}$.

Preconditioned CG (PCG) algorithm:

- Given $\mathbf{x}^{(0)}$, pre-conditioner $\mathbf{M}$

- $\mathbf{r}^{(0)} \gets \mathbf{A} \mathbf{x}^{(0)} - \mathbf{b}$

- solve $\mathbf{M} \mathbf{y}^{(0)} = \mathbf{r}^{(0)}$ for $\mathbf{y}^{(0)}$

- $\mathbf{p}^{(0)} \gets - \mathbf{r}^{(0)}$, $t=0$

- While $\mathbf{r}^{(0)} \ne \mathbf{0}$

- $\alpha^{(t)} \gets \frac{\mathbf{r}^{(t)T} \mathbf{y}^{(t)}}{\mathbf{p}^{(t)T} \mathbf{A} \mathbf{p}^{(t)}}$

- $\mathbf{x}^{(t+1)} \gets \mathbf{x}^{(t)} + \alpha^{(t)} \mathbf{p}^{(t)}$

- $\mathbf{r}^{(t+1)} \gets \mathbf{r}^{(t)} + \alpha^{(t)} \mathbf{A} \mathbf{p}^{(t)}$

- Solve $\mathbf{M} \mathbf{y}^{(t+1)} \gets \mathbf{r}^{(t+1)}$ for $\mathbf{y}^{(t+1)}$

- $\beta^{(t+1)} \gets \frac{\mathbf{r}^{(t+1)T} \mathbf{y}^{(t+1)}}{\mathbf{r}^{(t)T} \mathbf{r}^{(t)}}$

- $\mathbf{p}^{(t+1)} \gets - \mathbf{y}^{(t+1)} + \beta^{(t+1)} \mathbf{p}^{(t)}$

- $t \gets t+1$

Remark: Only extra cost in the pre-conditioned CG algorithm is the need to solve the linear system $\mathbf{M} \mathbf{y} = \mathbf{r}$.

Pre-conditioning is more like an art than science. Some choices include

- Incomplete Cholesky. $\mathbf{A} \approx \tilde{\mathbf{L}} \tilde{\mathbf{L}}^T$, where $\tilde{\mathbf{L}}$ is a sparse approximate Cholesky factor. Then $\tilde{\mathbf{L}}^{-1} \mathbf{A} \tilde{\mathbf{L}}^{-T} \approx \mathbf{I}$ (perfectly conditioned) and $\mathbf{M} \mathbf{y} = \tilde{\mathbf{L}} \tilde {\mathbf{L}}^T \mathbf{y} = \mathbf{r}$ is easy to solve.

- Banded pre-conditioners.

- Choose $\mathbf{M}$ as a coarsened version of $\mathbf{A}$.

- Subject knowledge. Knowledge about the structure and origin of a problem is often the key to devising efficient pre-conditioner. For example, see recent work of Stein, Chen, Anitescu (2012) for pre-conditioning large covariance matrices. http://epubs.siam.org/doi/abs/10.1137/110834469

Example of PCG¶

Preconditioners.jl wraps a bunch of preconditioners.

We use the Wathen matrix (sparse and positive definite) as a test matrix.

using BenchmarkTools, MatrixDepot, IterativeSolvers, LinearAlgebra, SparseArrays

# Wathen matrix of dimension 30401 x 30401

A = matrixdepot("wathen", 100)

include group.jl for user defined matrix generators verify download of index files... used remote site is https://sparse.tamu.edu/?per_page=All populating internal database...

30401×30401 SparseMatrixCSC{Float64,Int64} with 471601 stored entries:

[1 , 1] = 2.18448

[2 , 1] = -2.18448

[3 , 1] = 0.728159

[202 , 1] = -2.18448

[203 , 1] = -2.91263

[303 , 1] = 0.728159

[304 , 1] = -2.91263

[305 , 1] = 1.09224

[1 , 2] = -2.18448

[2 , 2] = 11.6505

[3 , 2] = -2.18448

[202 , 2] = 7.28159

⋮

[30200, 30400] = 15.0471

[30399, 30400] = -4.51414

[30400, 30400] = 24.0754

[30401, 30400] = -4.51414

[30097, 30401] = 2.25707

[30098, 30401] = -6.01885

[30099, 30401] = 1.50471

[30199, 30401] = -6.01885

[30200, 30401] = -4.51414

[30399, 30401] = 1.50471

[30400, 30401] = -4.51414

[30401, 30401] = 4.51414

using UnicodePlots

spy(A)

Sparsity Pattern ┌──────────────────────────────────────────┐ 1 │⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ > 0 │⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ < 0 │⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦⡀⠀│ 30401 │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠻⣦│ └──────────────────────────────────────────┘ 1 30401 nz = 471601

# sparsity level

count(!iszero, A) / length(A)

0.0005102687577359558

# rhs

b = ones(size(A, 1))

# solve Ax=b by CG

xcg = cg(A, b);

@benchmark cg($A, $b)

BenchmarkTools.Trial: memory estimate: 1.16 MiB allocs estimate: 23 -------------- minimum time: 195.939 ms (0.00% GC) median time: 199.963 ms (0.00% GC) mean time: 200.412 ms (0.21% GC) maximum time: 210.798 ms (5.05% GC) -------------- samples: 25 evals/sample: 1

Compute the incomplete cholesky preconditioner:

using Preconditioners

@time p = CholeskyPreconditioner(A, 2)

22.336321 seconds (1.95 M allocations: 7.306 GiB, 1.01% gc time)

CholeskyPreconditioner{Float64,SparseMatrixCSC{Float64,Int64}}([1.478 0.0 … 0.0 0.0; -1.478 3.0767 … 0.0 0.0; … ; 0.0 0.0 … 3.48688 0.0; 0.0 0.0 … -0.209846 1.73063], 2)

Pre-conditioned conjugate gradient:

# solver Ax=b by PCG

xpcg = cg(A, b, Pl=p)

# same answer?

norm(xcg - xpcg)

4.2404710199864254e-7

# PCG is >10 fold faster than CG

@benchmark cg($A, $b, Pl=$p)

BenchmarkTools.Trial: memory estimate: 1.16 MiB allocs estimate: 32 -------------- minimum time: 13.705 ms (0.00% GC) median time: 14.927 ms (0.00% GC) mean time: 15.060 ms (0.71% GC) maximum time: 18.903 ms (10.66% GC) -------------- samples: 332 evals/sample: 1

Other Krylov subspace methods¶

We leant about CG/PCG, which is for solving $\mathbf{A} \mathbf{x} = \mathbf{b}$, $\mathbf{A}$ pd.

MINRES (minimum residual method): symmetric indefinite $\mathbf{A}$.

Bi-CG (bi-conjugate gradient): unsymmetric $\mathbf{A}$.

Bi-CGSTAB (Bi-CG stabilized): improved version of Bi-CG.

GMRES (generalized minimum residual method): current de facto method for unsymmetric $\mathbf{A}$. E.g., PageRank problem.

Lanczos method: top eigen-pairs of a large symmetric matrix.

Arnoldi method: top eigen-pairs of a large unsymmetric matrix.

Lanczos bidiagonalization algorithm: top singular triplets of large matrix.

LSQR: least square problem $\min \|\mathbf{y} - \mathbf{X} \beta\|_2^2$. Algebraically equivalent to applying CG to the normal equation $(\mathbf{X}^T \mathbf{X} + \lambda^2 I) \beta = \mathbf{X}^T \mathbf{y}$.

LSMR: least square problem $\min \|\mathbf{y} - \mathbf{X} \beta\|_2^2$. Algebraically equivalent to applying MINRES to the normal equation $(\mathbf{X}^T \mathbf{X} + \lambda^2 I) \beta = \mathbf{X}^T \mathbf{y}$.

Software¶

Matlab¶

Iterative methods for solving linear equations:

pcg,bicg,bicgstab,gmres, ...Iterative methods for top eigen-pairs and singular pairs:

eigs,svds, ...Pre-conditioner:

cholinc,luinc, ...Get familiar with the reverse communication interface (RCI) for utilizing iterative solvers:

x = gmres(A, b)

x = gmres(@Afun, b)

eigs(A)

eigs(@Afun)

Julia¶

eigsandsvdsin the Arpack.jl package. Numerical examples later.IterativeSolvers.jlpackage. CG numerical examplesLeast squares examples:

Least squares example¶

using BenchmarkTools, IterativeSolvers, LinearAlgebra, Random, SparseArrays

Random.seed!(280) # seed

n, p = 10000, 5000

X = sprandn(n, p, 0.001) # iid standard normals with sparsity 0.01

β = ones(p)

y = X * β + randn(n)

β̂_qr = X \ y

# least squares by QR

@benchmark $X \ $y

BenchmarkTools.Trial: memory estimate: 1.42 GiB allocs estimate: 37941 -------------- minimum time: 5.171 s (1.41% GC) median time: 5.171 s (1.41% GC) mean time: 5.171 s (1.41% GC) maximum time: 5.171 s (1.41% GC) -------------- samples: 1 evals/sample: 1

β̂_lsqr = lsqr(X, y)

@show norm(β̂_qr - β̂_lsqr)

# least squares by lsqr

@benchmark lsqr($X, $y)

norm(β̂_qr - β̂_lsqr) = 7.186597401024407e-5

BenchmarkTools.Trial: memory estimate: 11.59 MiB allocs estimate: 1346 -------------- minimum time: 27.416 ms (0.00% GC) median time: 34.065 ms (11.22% GC) mean time: 34.335 ms (6.47% GC) maximum time: 45.718 ms (13.40% GC) -------------- samples: 146 evals/sample: 1

β̂_lsmr = lsmr(X, y)

@show norm(β̂_qr - β̂_lsmr)

# least squares by lsmr

@benchmark lsmr($X, $y)

norm(β̂_qr - β̂_lsmr) = 0.02228791821986182

BenchmarkTools.Trial: memory estimate: 4.06 MiB allocs estimate: 611 -------------- minimum time: 17.392 ms (0.00% GC) median time: 18.636 ms (0.00% GC) mean time: 19.384 ms (3.91% GC) maximum time: 26.789 ms (17.91% GC) -------------- samples: 258 evals/sample: 1

Use LinearMaps in iterative solvers¶

In many applications, it is advantageous to define linear maps indead of forming the actual (sparse) matrix. For a linear map, we need to specify how it acts on right- and left-multiplication on a vector. The LinearMaps.jl package is exactly for this purpose and interfaces nicely with IterativeSolvers.jl, Arnoldi.jl and other iterative solver packages.

Applications:

- The matrix is not sparse but admits special structure, e.g., easy + low rank (PageRank), Kronecker proudcts, etc.

- Less memory usage.

- Linear algebra on a standardized (centered and scaled) sparse matrix.

Consider the differencing operator that takes differences between neighboring pixels.

using LinearMaps, IterativeSolvers

# Overwrite y with A * x

# left difference assuming periodic boundary conditions

function leftdiff!(y::AbstractVector, x::AbstractVector)

N = length(x)

length(y) == N || throw(DimensionMismatch())

@inbounds for i in 1:N

y[i] = x[i] - x[mod1(i - 1, N)]

end

return y

end

# Overwrite y with A' * x

# minus right difference

function mrightdiff!(y::AbstractVector, x::AbstractVector)

N = length(x)

length(y) == N || throw(DimensionMismatch())

@inbounds for i in 1:N

y[i] = x[i] - x[mod1(i + 1, N)]

end

return y

end

# define linear map

D = LinearMap{Float64}(leftdiff!, mrightdiff!, 100; ismutating=true)

LinearMaps.FunctionMap{Float64}(leftdiff!, mrightdiff!, 100, 100; ismutating=true, issymmetric=false, ishermitian=false, isposdef=false)

Linear maps can be used like a regular matrix.

@show size(D)

v = ones(size(D, 2)) # vector of all 1s

@show D * v

@show D' * v;

size(D) = (100, 100) D * v = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0] D' * v = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

If we form the corresponding dense matrix, it will look like

Matrix(D)

100×100 Array{Float64,2}:

1.0 0.0 0.0 0.0 0.0 0.0 … 0.0 0.0 0.0 0.0 0.0 -1.0

-1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 -1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 -1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 -1.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 -1.0 1.0 … 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 -1.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 … 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

⋮ ⋮ ⋱ ⋮

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 … 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 … -1.0 1.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 -1.0 1.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 -1.0 1.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 -1.0 1.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 -1.0 1.0

If we form the corresponding sparse matrix, it will look like

using SparseArrays

sparse(D)

100×100 SparseMatrixCSC{Float64,Int64} with 200 stored entries:

[1 , 1] = 1.0

[2 , 1] = -1.0

[2 , 2] = 1.0

[3 , 2] = -1.0

[3 , 3] = 1.0

[4 , 3] = -1.0

[4 , 4] = 1.0

[5 , 4] = -1.0

[5 , 5] = 1.0

[6 , 5] = -1.0

[6 , 6] = 1.0

[7 , 6] = -1.0

⋮

[95 , 95] = 1.0

[96 , 95] = -1.0

[96 , 96] = 1.0

[97 , 96] = -1.0

[97 , 97] = 1.0

[98 , 97] = -1.0

[98 , 98] = 1.0

[99 , 98] = -1.0

[99 , 99] = 1.0

[100, 99] = -1.0

[1 , 100] = -1.0

[100, 100] = 1.0

using UnicodePlots

spy(sparse(D))

Sparsity Pattern ┌──────────────────────────────────────────┐ 1 │⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈│ > 0 │⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ < 0 │⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀⠀⠀│ │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄⠀⠀│ 100 │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠳⣄│ └──────────────────────────────────────────┘ 1 100 nz = 200

Compute top singular values using iterative method (Arnoldi).

using Arpack

Arpack.svds(D, nsv=3)

(SVD{Float64,Float64,Array{Float64,2}}([-0.1 0.0640197 -0.126101; 0.1 -0.0559754 0.129872; … ; -0.1 0.0793195 -0.117083; 0.1 -0.0718113 0.121832], [2.0, 1.99901, 1.99901], [0.1 -0.1 … 0.1 -0.1; -0.0600272 0.0518684 … -0.0756027 0.067949; 0.12805 -0.131566 … 0.119517 -0.124028]), 3, 35, 573, [0.0400615, 0.00610398, 0.0278884, 0.0776812, 0.08746, 0.00602873, 0.212726, 0.0333012, -0.0523244, 0.144026 … 0.083757, 0.0931424, -0.0237672, 0.00677622, 0.042755, 0.0557526, 0.0887591, 0.102905, 0.0669487, 0.0562545])

using LinearAlgebra

# check solution against the direct method for SVD

svdvals(Matrix(D))

100-element Array{Float64,1}:

2.0

1.999013120731463

1.9990131207314623

1.9960534568565431

1.9960534568565427

1.99112392920616

1.9911239292061598

1.9842294026289555

1.9842294026289549

1.9753766811902758

1.9753766811902744

1.9645745014573777

1.9645745014573768

⋮

0.37476262917144926

0.3128689300804618

0.31286893008046174

0.25066646712860846

0.25066646712860846

0.18821662663702868

0.18821662663702865

0.1255810390586268

0.12558103905862675

0.06282151815625672

0.06282151815625658

2.5308506128625597e-18

Compute top eigenvalues of the Gram matrix D'D using iterative method (Arnoldi).

Arpack.eigs(D'D, nev=3, which=:LM)

([4.0, 3.99605, 3.99605], [0.1 -0.00939969 -0.141109; -0.1 0.000520858 0.14142; … ; 0.1 -0.0270112 -0.138818; -0.1 0.0182414 0.14024], 3, 30, 493, [0.103736, 0.0100948, 0.0228213, -0.0164503, 0.10995, 0.0865899, 0.124362, -0.161938, -0.0933529, 0.125877 … -0.0628246, -0.0637099, 0.0916849, 0.179155, 0.14872, 0.214858, 0.0147322, 0.146649, -0.206126, 0.0174464])

Further reading¶

Chapter 5 of Numerical Optimization by Jorge Nocedal and Stephen Wright (1999).

Sections 11.3-11.5 of Matrix Computations by Gene Golub and Charles Van Loan (2013).