Seminar: simple question answering

Today we're going to build a retrieval-based question answering model using metric learning.

This seminar is based on the original notebook by Oleg Vasilev.

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

Dataset

Today's data is the Stanford Question Answering Dataset (SQuAD). Given a paragraph of text and a question, our model's task is to select a snippet of the paragraph that answers the question.

We are not going to solve the full task today. Instead, we'll train a model to select the sentence containing the answer among several options.

As usual, you are given a utility module with a data reader and some helper functions.

import utils

!wget https://rajpurkar.github.io/SQuAD-explorer/dataset/train-v2.0.json -O squad-v2.0.json 2> log

# backup download link: https://www.dropbox.com/s/q4fuihaerqr0itj/squad.tar.gz?dl=1

train, test = utils.build_dataset('./squad-v2.0.json')

pid, question, options, correct_indices, wrong_indices = train.iloc[40]

print('QUESTION', question, '\n')

for i, cand in enumerate(options):
    print(['[ ]', '[v]'][i in correct_indices], cand)

Universal Sentence Encoder

We've already solved quite a few tasks from scratch, training our own embeddings and convolutional/recurrent layers. However, one can often achieve higher quality by using pre-trained models, so today we're going to use the pre-trained Universal Sentence Encoder from TensorFlow Hub.

Universal Sentence Encoder is a model that encodes phrases, sentences or short paragraphs into a fixed-size vector. It was trained simultaneously on a variety of tasks to achieve versatility.

import tensorflow as tf

import keras.layers as L

import tensorflow_hub as hub

tf.reset_default_graph()

sess = tf.InteractiveSession()

universal_sentence_encoder = hub.Module("https://tfhub.dev/google/universal-sentence-encoder/2",
                                        trainable=False)

# consider as well:

# * lite: https://tfhub.dev/google/universal-sentence-encoder-lite/2

# * large: https://tfhub.dev/google/universal-sentence-encoder-large/2

sess.run([tf.global_variables_initializer(), tf.tables_initializer()]);

# tfhub implementation does tokenization for you

dummy_ph = tf.placeholder(tf.string, shape=[None])

dummy_vectors = universal_sentence_encoder(dummy_ph)

dummy_lines = [

"How old are you?", # 0

"In what mythology do two canines watch over the Chinvat Bridge?", # 1

"I'm sorry, okay, I'm not perfect, but I'm trying.", # 2

"What is your age?", # 3

"Beware, for I am fearless, and therefore powerful.", # 4

]

dummy_vectors_np = sess.run(dummy_vectors, {

dummy_ph: dummy_lines

})

plt.title('phrase similarity')

plt.imshow(dummy_vectors_np.dot(dummy_vectors_np.T), interpolation='none', cmap='gray')

As you can see, apart from the diagonal (each line compared with itself), the strongest similarity is between lines 0 and 3. Indeed, they correspond to "How old are you?" and "What is your age?"
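The same observation can be checked programmatically. Below is a minimal NumPy sketch. It assumes the rows are unit-normalized, so the dot product acts as cosine similarity. The helper `most_similar_pair` and the toy vectors are hypothetical stand-ins for `dummy_vectors_np`, because running the real encoder needs TF Hub. The diagonal is masked, since every line is trivially most similar to itself.

```python
import numpy as np

def most_similar_pair(vectors):
    """Return (i, j), i < j, of the two distinct rows with the largest dot product."""
    sim = vectors @ vectors.T
    # Mask the diagonal: each vector is trivially most similar to itself.
    np.fill_diagonal(sim, -np.inf)
    i, j = np.unravel_index(np.argmax(sim), sim.shape)
    return int(min(i, j)), int(max(i, j))

# Hypothetical toy embeddings standing in for dummy_vectors_np.
rng = np.random.default_rng(0)
toy = rng.normal(size=(5, 16))
toy[3] = toy[0] + 0.05 * rng.normal(size=16)  # make line 3 a near-paraphrase of line 0
toy /= np.linalg.norm(toy, axis=1, keepdims=True)  # unit-normalize rows
print(most_similar_pair(toy))  # expected: (0, 3)
```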

Model (2 points)

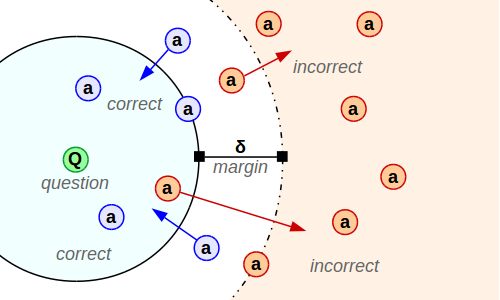

Our goal for today is to build a model that measures similarity between a question and an answer. In particular, it maps both the question and the answer into fixed-size vectors.

Our model is a pair of networks, $V_q(q)$ and $V_a(a)$, that turn phrases into vectors.

Objective: the question vector $V_q(q)$ should be closer to the correct answer vectors $V_a(a^+)$ than to the incorrect ones $V_a(a^-)$.

Both vectorizers can be anything you wish. For starters, let's use a couple of dense layers on top of the Universal Sentence Encoder.
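To make the shape of such a vectorizer concrete, here is a NumPy sketch of the forward pass only. It uses a dense ReLU layer, then dropout in train mode only, then a linear output layer, which mirrors the requirements the `Vectorizer` exercise states. `ToyVectorizer` is hypothetical. It takes precomputed 512-dimensional sentence embeddings (the size of USE outputs) instead of raw strings, and it uses random, untrained weights. The real exercise still needs Keras layers inside the TF graph.

```python
import numpy as np

class ToyVectorizer:
    """Hypothetical NumPy stand-in: dense(ReLU) -> dropout (train only) -> dense(linear)."""

    def __init__(self, input_size=512, hid_size=256, output_size=256, dropout=0.5, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(scale=input_size ** -0.5, size=(input_size, hid_size))
        self.b1 = np.zeros(hid_size)
        self.w2 = rng.normal(scale=hid_size ** -0.5, size=(hid_size, output_size))
        self.b2 = np.zeros(output_size)
        self.dropout = dropout
        self.rng = rng

    def __call__(self, embeddings, is_train=True):
        h = np.maximum(0.0, embeddings @ self.w1 + self.b1)  # hidden layer with ReLU
        if is_train:
            # Inverted dropout: zero random units, rescale survivors to keep the expectation.
            mask = self.rng.random(h.shape) >= self.dropout
            h = h * mask / (1.0 - self.dropout)
        return h @ self.w2 + self.b2  # final layer: linear activation

emb = np.random.default_rng(1).normal(size=(5, 512))  # pretend USE outputs for 5 phrases
vec = ToyVectorizer()
print(vec(emb, is_train=True).shape)  # (5, 256)
# Evaluation mode must be deterministic, as the assert in the exercise requires.
assert np.allclose(vec(emb, is_train=False), vec(emb, is_train=False))
```

Keeping the last layer linear matters because the similarity is a dot product. A ReLU on the output would force all vector components to be non-negative and restrict the geometry the model can learn.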

import keras.layers as L

class Vectorizer:
    def __init__(self, output_size=256, hid_size=256, universal_sentence_encoder=universal_sentence_encoder):
        """ A small feedforward network on top of universal sentence encoder. 2-3 layers should be enough """
        self.universal_sentence_encoder = universal_sentence_encoder

        # define a few layers to be applied on top of u.s.e.
        # note: please make sure your final layer comes with _linear_ activation
        <YOUR CODE HERE>

    def __call__(self, input_phrases, is_train=True):
        """
        Apply vectorizer. Use dropout and any other hacks at will.
        :param input_phrases: [batch_size] of tf.string
        :param is_train: if True, apply dropouts and other ops in train mode,
                         if False - evaluation mode
        :returns: predicted phrase vectors, [batch_size, output_size]
        """
        <YOUR CODE>
        return <...>

question_vectorizer = Vectorizer()

answer_vectorizer = Vectorizer()

dummy_v_q = question_vectorizer(dummy_ph, is_train=True)

dummy_v_q_det = question_vectorizer(dummy_ph, is_train=False)

utils.initialize_uninitialized()

assert sess.run(dummy_v_q, {dummy_ph: dummy_lines}).shape == (5, 256)

assert np.allclose(

sess.run(dummy_v_q_det, {dummy_ph: dummy_lines}),

sess.run(dummy_v_q_det, {dummy_ph: dummy_lines})

), "make sure your model doesn't use dropout/noise or non-determinism if is_train=False"

print("Well done!")

Training: minibatches

Our model learns on triples $(q, a^+, a^-)$:

- $q$ - __q__uestion
- $a^+$ - correct __a__nswer
- $a^-$ - wrong __a__nswer

Below you will find a generator that samples such triples from data.

import random

def iterate_minibatches(data, batch_size, shuffle=True, cycle=False):
    """
    Generates minibatches of triples: {questions, correct answers, wrong answers}
    If there are several wrong (or correct) answers, picks one at random.
    """
    indices = np.arange(len(data))
    while True:
        if shuffle:
            indices = np.random.permutation(indices)
        for batch_start in range(0, len(indices), batch_size):
            batch_indices = indices[batch_start: batch_start + batch_size]
            batch = data.iloc[batch_indices]
            questions = batch['question'].values
            correct_answers = np.array([
                row['options'][random.choice(row['correct_indices'])]
                for i, row in batch.iterrows()
            ])
            wrong_answers = np.array([
                row['options'][random.choice(row['wrong_indices'])]
                for i, row in batch.iterrows()
            ])
            yield {
                'questions' : questions,
                'correct_answers': correct_answers,
                'wrong_answers': wrong_answers,
            }
        if not cycle:
            break

dummy_batch = next(iterate_minibatches(train.sample(3), 3))

print(dummy_batch)

Training: loss function (2 points)

We want our vectorizers to put correct answers closer to question vectors and incorrect answers farther away from them. One way to express this is the Pairwise Hinge Loss (aka Triplet Loss):

$$ L = \frac{1}{N} \sum_{(q,\, a^+,\, a^-)} \max\bigl(0,\ \delta - \mathrm{sim}[V_q(q), V_a(a^+)] + \mathrm{sim}[V_q(q), V_a(a^-)]\bigr), $$

where

- sim[a, b] is some similarity function: dot product, cosine or negative distance

- δ - margin hyperparameter, e.g. δ=1.0. If sim[a, b] is linear in b (as the dot product is), all δ > 0 are equivalent up to a rescaling of the answer vectors.

This reads as: correct answers must be closer to the question than wrong ones by at least δ.
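Why does the exact value of δ not matter for a dot-product similarity? Write $s^\pm = \mathrm{sim}[V_q(q), V_a(a^\pm)]$ and take $\delta > 0$. Then:

$$
\max\bigl(0,\ \delta - s^+ + s^-\bigr)
= \delta \cdot \max\Bigl(0,\ 1 - \frac{s^+}{\delta} + \frac{s^-}{\delta}\Bigr),
\qquad
\frac{s^\pm}{\delta} = \mathrm{sim}\Bigl[V_q(q),\ \tfrac{1}{\delta} V_a(a^\pm)\Bigr]
$$

The second equality uses linearity of sim in its second argument. The loss with margin δ therefore equals δ times the loss with margin 1, computed on answer vectors scaled by $1/\delta$. A vectorizer with a linear final layer can absorb that scale into its weights.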

Note: in effect, we are training a Deep Semantic Similarity Model (DSSM).
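Before writing the TensorFlow version, it can help to check the formula in plain NumPy. The sketch below uses hypothetical names `np_similarity` and `np_triplet_loss`, evaluated on the same dummy vectors as the asserts in the next cell. Note the element-wise `np.maximum(0, x)`, which clips each row separately. A reducing maximum such as `np.max` (or `tf.reduce_max`) would collapse the whole batch into one number instead.

```python
import numpy as np

def np_similarity(a, b):
    """Row-wise dot product: float[batch, dim] x float[batch, dim] -> float[batch]."""
    return np.sum(a * b, axis=-1)

def np_triplet_loss(q, a_pos, a_neg, delta=1.0):
    """Per-row hinge loss max(0, delta - sim(q, a+) + sim(q, a-)), no averaging."""
    return np.maximum(0.0, delta - np_similarity(q, a_pos) + np_similarity(q, a_neg))

v1 = np.array([[0.1, 0.2, -1.0], [-1.2, 0.6, 1.0]])
v2 = np.array([[0.9, 2.1, -6.6], [0.1, 0.8, -2.2]])
v3 = np.array([[-4.1, 0.1, 1.2], [0.3, -1.0, -2.0]])
print(np_similarity(v1, v2))                 # approximately [7.11, -1.84]
print(np_triplet_loss(v1, v2, v3, delta=5.0))  # approximately [0.0, 3.88]
```

In the first row, the correct answer already wins by more than δ=5, so the loss and its gradient are zero. In the second row, the margin is violated and the loss is 5 + 1.84 - 2.96 = 3.88.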

def similarity(a, b):
    """ Dot product as a similarity function, computed row-wise:
    float32[batch_size, vector_size] x float32[batch_size, vector_size] -> float32[batch_size] """
    <YOUR CODE>
    return <...>

def compute_loss(question_vectors, correct_answer_vectors, wrong_answer_vectors, delta=1.0):
    """
    Compute the triplet loss as per formula above.
    Use similarity function above for sim[a, b]
    :param question_vectors: float32[batch_size, vector_size]
    :param correct_answer_vectors: float32[batch_size, vector_size]
    :param wrong_answer_vectors: float32[batch_size, vector_size]
    :returns: loss for every row in batch, float32[batch_size]
    Hint: DO NOT use tf.reduce_max, it's a wrong kind of maximum :)
    """
    <YOUR CODE>
    return <...>

dummy_v1 = tf.constant([[0.1, 0.2, -1], [-1.2, 0.6, 1.0]], dtype=tf.float32)

dummy_v2 = tf.constant([[0.9, 2.1, -6.6], [0.1, 0.8, -2.2]], dtype=tf.float32)

dummy_v3 = tf.constant([[-4.1, 0.1, 1.2], [0.3, -1, -2]], dtype=tf.float32)

assert np.allclose(similarity(dummy_v1, dummy_v2).eval(), [7.11, -1.84])

assert np.allclose(compute_loss(dummy_v1, dummy_v2, dummy_v3, delta=5.0).eval(), [0.0, 3.88])

Once the loss is working, let's train our model the usual way.

placeholders = {

key: tf.placeholder(tf.string, [None]) for key in dummy_batch.keys()

}

v_q = question_vectorizer(placeholders['questions'], is_train=True)

v_a_correct = answer_vectorizer(placeholders['correct_answers'], is_train=True)

v_a_wrong = answer_vectorizer(placeholders['wrong_answers'], is_train=True)

loss = tf.reduce_mean(compute_loss(v_q, v_a_correct, v_a_wrong))

step = tf.train.AdamOptimizer().minimize(loss)

# we also compute recall: probability that a^+ is closer to q than a^-

test_v_q = question_vectorizer(placeholders['questions'], is_train=False)

test_v_a_correct = answer_vectorizer(placeholders['correct_answers'], is_train=False)

test_v_a_wrong = answer_vectorizer(placeholders['wrong_answers'], is_train=False)

correct_is_closer = tf.greater(similarity(test_v_q, test_v_a_correct),

similarity(test_v_q, test_v_a_wrong))

recall = tf.reduce_mean(tf.to_float(correct_is_closer))

Training loop

As usual, we can now train the DSSM on minibatches and periodically measure recall on validation data.

Note 1: DSSM training may be very sensitive to the choice of batch size. Small batches may decrease model quality.

Note 2: here we use the same dataset as both the "test set" and the "validation (dev) set".

In any serious scientific experiment, those must be two separate sets: validation is for hyperparameter tuning, and test is for final evaluation only.
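One way to get a separate dev set is to hold out part of `train`. The sketch below assumes the dataframe has a `pid` (paragraph id) column, as the unpacking of `train.iloc[40]` above suggests. It splits by paragraph, so questions about the same paragraph never land on both sides of the split. The helper `split_by_group` is hypothetical.

```python
import numpy as np

def split_by_group(group_ids, dev_fraction=0.1, seed=0):
    """Return (train_idx, dev_idx) row positions such that no group id appears in both parts."""
    group_ids = np.asarray(group_ids)
    unique = np.unique(group_ids)
    rng = np.random.default_rng(seed)
    n_dev = max(1, int(round(dev_fraction * len(unique))))
    dev_groups = rng.choice(unique, size=n_dev, replace=False)  # whole groups go to dev
    is_dev = np.isin(group_ids, dev_groups)
    return np.flatnonzero(~is_dev), np.flatnonzero(is_dev)

# Toy paragraph ids; with the real data this would be train['pid'].values (assumed column).
pids = ['a', 'a', 'b', 'c', 'c', 'c', 'd', 'e', 'e', 'f']
train_idx, dev_idx = split_by_group(pids, dev_fraction=0.3, seed=1)
print(train_idx, dev_idx)
```

With the real dataframe, `train.iloc[dev_idx]` could then replace `test` when building `dev_batches`, while `test` is kept for the final evaluation only.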

import pandas as pd

from IPython.display import clear_output

from tqdm import tqdm

ewma = lambda x, span: pd.DataFrame({'x': x})['x'].ewm(span=span).mean().values

dev_batches = iterate_minibatches(test, batch_size=256, cycle=True)

loss_history = []

dev_recall_history = []

utils.initialize_uninitialized()

# infinite training loop. Stop it manually or implement early stopping
for batch in iterate_minibatches(train, batch_size=256, cycle=True):
    feed = {placeholders[key] : batch[key] for key in batch}
    loss_t, _ = sess.run([loss, step], feed)
    loss_history.append(loss_t)

    if len(loss_history) % 50 == 0:
        # measure dev recall = P(correct_is_closer_than_wrong | q, a+, a-)
        dev_batch = next(dev_batches)
        recall_t = sess.run(recall, {placeholders[key] : dev_batch[key] for key in dev_batch})
        dev_recall_history.append(recall_t)

    if len(loss_history) % 50 == 0:
        clear_output(True)
        plt.figure(figsize=[12, 6])
        plt.subplot(1, 2, 1), plt.title('train loss (hinge)'), plt.grid()
        plt.scatter(np.arange(len(loss_history)), loss_history, alpha=0.1)
        plt.plot(ewma(loss_history, span=100))
        plt.subplot(1, 2, 2), plt.title('dev recall (1 correct vs 1 wrong)'), plt.grid()
        # dev recall is measured once every 50 training steps
        dev_time = np.arange(1, len(dev_recall_history) + 1) * 50
        plt.scatter(dev_time, dev_recall_history, alpha=0.1)
        plt.plot(dev_time, ewma(dev_recall_history, span=10))
        plt.show()

print("Mean recall:", np.mean(dev_recall_history[-10:]))

assert np.mean(dev_recall_history[-10:]) > 0.85, "Please train for at least 85% recall on test set. "\

"You may need to change vectorizer model for that."

print("Well done!")

Final evaluation (1 point)

Let's see how well our model performs on actual question answering.

Given a question and a set of possible answers, pick the answer with the highest similarity to the question, then estimate accuracy as the fraction of questions for which the picked answer is correct.
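The selection rule itself is just an argmax over similarities. The NumPy sketch below assumes the question has already been encoded with `question_vectorizer` and the candidates with `answer_vectorizer`, both in evaluation mode (`is_train=False`). The name `pick_best` is hypothetical, and the vectors are toy values.

```python
import numpy as np

def pick_best(question_vector, answer_vectors):
    """Index of the answer whose vector has the largest dot product with the question vector."""
    scores = np.asarray(answer_vectors) @ np.asarray(question_vector)  # one score per answer
    return int(np.argmax(scores))

q = np.array([1.0, 0.0])
answers = np.array([[0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]])
print(pick_best(q, answers))  # 1
```

Encoding all candidates of one question in a single `sess.run` call and then taking the argmax is usually much faster than scoring answers one by one.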

# optional: build tf graph required for select_best_answer

# <...>

def select_best_answer(question, possible_answers):
    """
    Predicts which answer best fits the question
    :param question: a single string containing a question
    :param possible_answers: a list of strings containing possible answers
    :returns: integer - the index of best answer in possible_answers
    """
    <YOUR CODE>
    return <...>

predicted_answers = [

select_best_answer(question, possible_answers)

for i, (question, possible_answers) in tqdm(test[['question', 'options']].iterrows(), total=len(test))

]

accuracy = np.mean([

answer in correct_ix

for answer, correct_ix in zip(predicted_answers, test['correct_indices'].values)

])

print("Accuracy: %0.5f" % accuracy)

assert accuracy > 0.65, "we need more accuracy!"

print("Great job!")

def draw_results(question, possible_answers, predicted_index, correct_indices):
    print("Q:", question, end='\n\n')
    for i, answer in enumerate(possible_answers):
        print("#%i: %s %s" % (i, '[*]' if i == predicted_index else '[ ]', answer))
    print("\nVerdict:", "CORRECT" if predicted_index in correct_indices else "INCORRECT",
          "(ref: %s)" % correct_indices, end='\n' * 3)

for i in [1, 100, 1000, 2000, 3000, 4000, 5000]:
    draw_results(test.iloc[i].question, test.iloc[i].options,
                 predicted_answers[i], test.iloc[i].correct_indices)

question = "What is my name?" # your question here!

possible_answers = [

<...>

# ^- your options.

]

predicted_answer = select_best_answer(question, possible_answers)

draw_results(question, possible_answers,

predicted_answer, [0])

Bonus tasks

There are many ways to improve our question answering model. Here's a bunch of things you can do to increase your understanding and get bonus points.

1. Hard Negatives (3+ pts)

Not all wrong answers are equally wrong. As training progresses, most negative examples $a^-$ will be too easy. So easy, in fact, that the loss and its gradients on such negatives are exactly 0.0. To improve training efficiency, one can mine hard negative samples.

Given a list of answers,

- a hard negative is the wrong answer with the highest similarity to the question;

- a semi-hard negative is the wrong answer with the highest similarity _among wrong answers that are farther from the question than the positive one_. This option is more useful if some wrong answers may actually be mislabelled correct answers.

One can also sample negatives with probability proportional to the exponentiated similarity: $$P(a^-_i) \propto e^{\mathrm{sim}[V_q(q), V_a(a^-_i)]}$$

The task is to implement at least hard negative sampling and apply it for model training.
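The three selection rules above can be sketched for a single question as follows. This NumPy version assumes the question and all of its options are already encoded and that one positive has been chosen (as `iterate_minibatches` does). The function `pick_negative`, its arguments and the fallback rule for semi-hard (use the hardest negative when no wrong answer lies below the positive) are hypothetical choices, not part of the seminar code.

```python
import numpy as np

def pick_negative(q_vec, option_vecs, positive_index, wrong_indices, strategy="hard", rng=None):
    """Choose one wrong option index for a question.

    "hard":      wrong option with the highest similarity to the question
    "semi-hard": highest-similarity wrong option that scores below the positive;
                 falls back to "hard" if there is none
    "sampled":   wrong option i drawn with probability proportional to exp(sim_i)
    """
    sims = option_vecs @ q_vec  # dot-product similarity of every option to the question
    wrong = np.asarray(wrong_indices)
    wrong_sims = sims[wrong]
    if strategy == "hard":
        return int(wrong[np.argmax(wrong_sims)])
    if strategy == "semi-hard":
        below = wrong_sims < sims[positive_index]  # farther from q than the positive
        if below.any():
            return int(wrong[below][np.argmax(wrong_sims[below])])
        return int(wrong[np.argmax(wrong_sims)])
    if strategy == "sampled":
        if rng is None:
            rng = np.random.default_rng()
        p = np.exp(wrong_sims - wrong_sims.max())  # subtract max for numerical stability
        p /= p.sum()
        return int(rng.choice(wrong, p=p))
    raise ValueError(f"unknown strategy: {strategy}")

q = np.array([1.0, 0.0])
opts = np.array([[1.0, 0.0], [0.9, 0.0], [2.0, 0.0], [0.0, 1.0]])  # option 0 is the positive
print(pick_negative(q, opts, 0, [1, 2, 3], "hard"))       # 2
print(pick_negative(q, opts, 0, [1, 2, 3], "semi-hard"))  # 1
```

Option 2 scores higher than the positive itself. A hard-negative miner therefore picks it, while the semi-hard rule skips it, which is exactly the case where a mislabelled correct answer would do damage.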

2. Bring Your Own Model (3+ pts)

In addition to the Universal Sentence Encoder, one can also train a new model.

- You name it: convolutions, RNN, self-attention
- Use pre-trained ELMo or fastText embeddings
- Monitor overfitting and use dropout / word dropout to improve performance

Note: ELMo requires tokenized text, while USE can deal with raw strings. You can tokenize the data manually or use tokenized=True when reading the dataset.

- hard negatives (strategies: hardest, hardest farther than current, randomized)
- train the model on the full dataset to see if it can mine answers to new questions over the entire Wikipedia. Use approximate nearest neighbor search for fast lookup.

3. Search engine (3+ pts)

Our basic model only selects answers from the 2-5 sentences available in the paragraph. You can extend it to search over the whole dataset, where all sentences in all other paragraphs are viable answers.

The goal is to train such a model and use it to quickly find top-10 answers from the whole set.
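As an exact baseline for the lookup step, one can score every candidate sentence by dot product and keep the top k. This NumPy sketch (hypothetical name `top_k_answers`) assumes all answer vectors fit in memory as one matrix. It uses `np.argpartition` to find the k best in linear time and then sorts only those k. Approximate methods such as KD-Tree or HNSW trade this exactness for speed on larger corpora.

```python
import numpy as np

def top_k_answers(question_vector, answer_matrix, k=10):
    """Indices of the k rows of answer_matrix with the largest dot product, best first."""
    scores = answer_matrix @ question_vector
    k = min(k, len(scores))
    top = np.argpartition(-scores, k - 1)[:k]  # the k best, in arbitrary order
    return top[np.argsort(-scores[top])]       # sort just those k by descending score

rng = np.random.default_rng(0)
answer_matrix = rng.normal(size=(1000, 32))  # toy stand-in for encoded candidate sentences
question_vector = rng.normal(size=32)
print(top_k_answers(question_vector, answer_matrix, k=10))
```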

- You can ask such a model a question of your own making to see which answers it can find in the entire training dataset or even in the entire Wikipedia.

- Searching for top-K neighbors is much faster with specialized methods such as KD-Tree or HNSW.

- This task is much easier to train if you use hard or semi-hard negatives. You can even find hard negatives for one question from correct answers to other questions in batch - do so in-graph for maximum efficiency. See [1.] for more details.
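The in-batch idea can be sketched with a single similarity matrix. For a batch of questions and their correct answers, the answers to the other questions serve as negatives, and each row takes the most similar one as its hard negative. This NumPy version (hypothetical name `in_batch_hard_negative_loss`) only illustrates the structure; an in-graph TensorFlow version would follow the same matrix steps. It assumes a batch size of at least 2 and that no two questions in the batch share a correct answer.

```python
import numpy as np

def in_batch_hard_negative_loss(q, a, delta=1.0):
    """Per-row triplet loss: positive for row i is a[i], negative is the most similar a[j], j != i."""
    sim = q @ a.T                   # sim[i, j] = dot(q_i, a_j)
    pos = np.diag(sim)              # each question with its own correct answer
    off = sim.copy()
    np.fill_diagonal(off, -np.inf)  # exclude each question's own answer from the negatives
    hardest_neg = off.max(axis=1)   # hardest in-batch negative per question
    return np.maximum(0.0, delta - pos + hardest_neg)

q = np.array([[1.0, 0.0], [0.0, 1.0]])
a = np.array([[1.0, 0.0], [1.0, 0.0]])
print(in_batch_hard_negative_loss(q, a))  # [1. 1.]
```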