The Bias-Variance Tradeoff¶

A Summary of lecture "Machine Learning with Tree-Based Models in Python

", via datacamp

- toc: true

- badges: true

- comments: true

- author: Chanseok Kang

- categories: [Python, Datacamp, Machine_Learning]

- image: images/ensemble.png

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

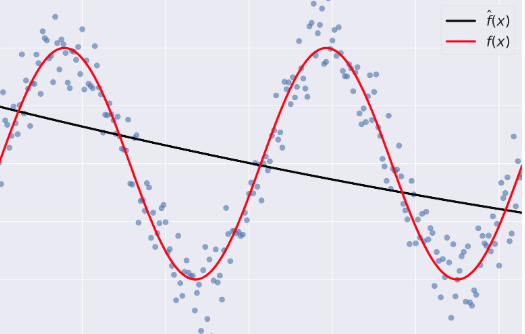

Generalization Error¶

- Supervised Learning - Under the Hood

- supervised learning: $y = f(x)$, $f$ is unknown.

- Goals

- Find a model $\hat{f}$ that best approximates: $f: \hat{f} \approx f$

- $\hat{f}$ can be Logistic Regression, Decision Tree, Neural Network,...

- Discard noise as much as possible

- End goal: $\hat{f}$ should achieve a low predictive error on unseen datasets.

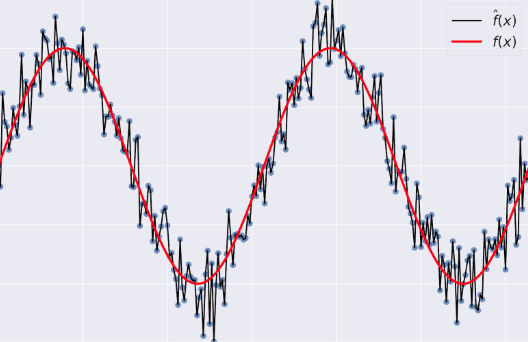

- Difficulties in Approximating $f$

- Overfitting: $\hat{f}(x)$ fits the training set noise.

- Underfitting: $\hat{f}$ is not flexible enough to approximate $f$.

- Generalization Error

- Generalization Error of $\hat{f}$: Does $\hat{f}$ generalize well on unseen data?

- It can be decomposed as follows:

- Bias: error term that tells you, on average, how much $\hat{f} \neq f$.

- Variance: tells you how much $\hat{f}$ is inconsistent over different training sets

- Variance: tells you how much $\hat{f}$ is inconsistent over different training sets

- Model Complexity

- Model Complexity: sets the flexibility of $\hat{f}$

- Example: Maximum tree depth, Minimum samples per leaf, ...

- Bias-Variance Tradeoff

Diagnose bias and variance problems¶

- Estimating the Generalization Error

- How do we estimate the generalization error of a model?

- Cannot be done directly because:

- $f$ is unknown

- usually you only have one dataset

- noise is unpredictable.

- Cannot be done directly because:

- Solution

- Split the data to training and test sets

- fit $\hat{f}$ to the training set

- evaluate the error of $\hat{f}$ on the unseen test set

- generalization error of $\hat{f} \approx$ test set error of $\hat{f}$

- How do we estimate the generalization error of a model?

- Better model Evaluation with Cross-Validation

- Test set should not be touched until we are confident about $\hat{f}$'s performance

- Evaluating $\hat{f}$ on training set: biased estimate, $\hat{f}$ has already seen all training points

- Solution: Cross-Validation (CV)

- K-Fold CV

- Hold-Out CV

- K-Fold CV $$\text{CV error} = \dfrac{E_1 + \cdots + E_{10}}{10} $$

- Diagnose Variance Problems

- If $\hat{f}$ suffers from high variance: CV error of $\hat{f} >$ training set error of $\hat{f}$

- $\hat{f}$ is said to overfit the training set. To remedy overfitting:

- Decrease model complexity

- Gather more data, ...

- $\hat{f}$ is said to overfit the training set. To remedy overfitting:

- If $\hat{f}$ suffers from high variance: CV error of $\hat{f} >$ training set error of $\hat{f}$

- Diagnose Bias Problems

- if $\hat{f}$ suffers from high bias: CV error of $\hat{f} \approx$ training set error of $\hat{f} >>$ desired error.

- $\hat{f}$ is said to underfit the training set. To remedy underfitting:

- Increase model complexity

- Gather more relevant features

Instantiate the model¶

In the following set of exercises, you'll diagnose the bias and variance problems of a regression tree. The regression tree you'll define in this exercise will be used to predict the mpg consumption of cars from the auto dataset using all available features.

Preprocess¶

mpg = pd.read_csv('./dataset/auto.csv')

mpg.head()

| mpg | displ | hp | weight | accel | origin | size | |

|---|---|---|---|---|---|---|---|

| 0 | 18.0 | 250.0 | 88 | 3139 | 14.5 | US | 15.0 |

| 1 | 9.0 | 304.0 | 193 | 4732 | 18.5 | US | 20.0 |

| 2 | 36.1 | 91.0 | 60 | 1800 | 16.4 | Asia | 10.0 |

| 3 | 18.5 | 250.0 | 98 | 3525 | 19.0 | US | 15.0 |

| 4 | 34.3 | 97.0 | 78 | 2188 | 15.8 | Europe | 10.0 |

mpg = pd.get_dummies(mpg)

mpg.head()

| mpg | displ | hp | weight | accel | size | origin_Asia | origin_Europe | origin_US | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 18.0 | 250.0 | 88 | 3139 | 14.5 | 15.0 | 0 | 0 | 1 |

| 1 | 9.0 | 304.0 | 193 | 4732 | 18.5 | 20.0 | 0 | 0 | 1 |

| 2 | 36.1 | 91.0 | 60 | 1800 | 16.4 | 10.0 | 1 | 0 | 0 |

| 3 | 18.5 | 250.0 | 98 | 3525 | 19.0 | 15.0 | 0 | 0 | 1 |

| 4 | 34.3 | 97.0 | 78 | 2188 | 15.8 | 10.0 | 0 | 1 | 0 |

X = mpg.drop('mpg', axis='columns')

y = mpg['mpg']

from sklearn.model_selection import train_test_split

from sklearn.tree import DecisionTreeRegressor

# Set SEED for reproducibility

SEED = 1

# Split the data into 70% train and 30% test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=SEED)

# Instantiate a DecisionTreeRegressor dt

dt = DecisionTreeRegressor(max_depth=4, min_samples_leaf=0.26, random_state=SEED)

Evaluate the 10-fold CV error¶

In this exercise, you'll evaluate the 10-fold CV Root Mean Squared Error (RMSE) achieved by the regression tree dt that you instantiated in the previous exercise.

Note that since cross_val_score has only the option of evaluating the negative MSEs, its output should be multiplied by negative one to obtain the MSEs. The CV RMSE can then be obtained by computing the square root of the average MSE.

from sklearn.model_selection import cross_val_score

# Compute the array containing the 10-folds CV MSEs

MSE_CV_scores = - cross_val_score(dt, X_train, y_train, cv=10,

scoring='neg_mean_squared_error', n_jobs=-1)

# Compute the 10-folds CV RMSE

RMSE_CV = (MSE_CV_scores.mean()) ** 0.5

# Print RMSE_CV

print('CV RMSE: {:.2f}'.format(RMSE_CV))

CV RMSE: 5.14

Evaluate the training error¶

You'll now evaluate the training set RMSE achieved by the regression tree dt that you instantiated in a previous exercise.

Note that in scikit-learn, the MSE of a model can be computed as follows:

MSE_model = mean_squared_error(y_true, y_predicted)

where we use the function mean_squared_error from the metrics module and pass it the true labels y_true as a first argument, and the predicted labels from the model y_predicted as a second argument.

from sklearn.metrics import mean_squared_error as MSE

# Fit dt to the training set

dt.fit(X_train, y_train)

# Predict the labels of the training set

y_pred_train = dt.predict(X_train)

# Evaluate the training set RMSE of dt

RMSE_train = (MSE(y_train, y_pred_train)) ** 0.5

# Print RMSE_train

print("Train RMSE: {:.2f}".format(RMSE_train))

Train RMSE: 5.15

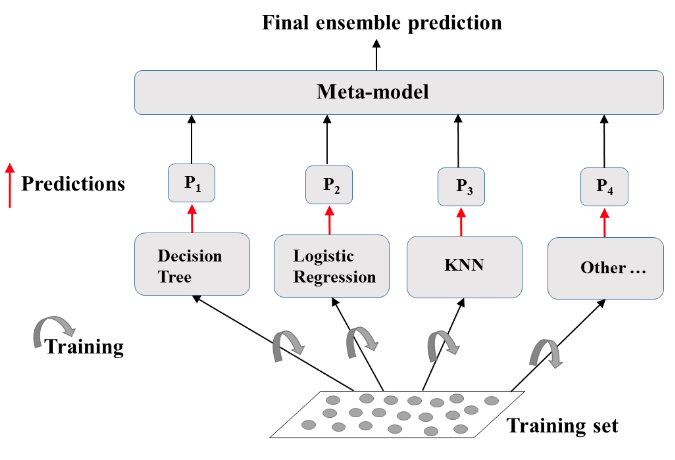

Ensemble Learning¶

- Advantages of CARTs

- Simple to understand

- Simple to interpret

- Easy to use

- Flexibility: ability to describe non-linear dependencies.

- Preprocessing: no need to standardize or normalize features.

- Limitations of CARTs

- Classification: can only produce orthogonal decision boundaries

- Sensitive to small variations in the training set

- High variance: unconstrained CARTs may overfit the training set

- Solution: ensemble learning

- Ensemble Learning

- Train different models on the same dataset.

- Let each model make its predictions

- Meta-Model: aggregates predictionsof individual models

- Final prediction: more robust and less prone to errors

- Best results: models are skillfull in different ways.

Define the ensemble¶

In the following set of exercises, you'll work with the Indian Liver Patient Dataset from the UCI Machine learning repository.

In this exercise, you'll instantiate three classifiers to predict whether a patient suffers from a liver disease using all the features present in the dataset.

Preprocess¶

indian = pd.read_csv('./dataset/indian_liver_patient_preprocessed.csv', index_col=0)

indian.head()

| Age_std | Total_Bilirubin_std | Direct_Bilirubin_std | Alkaline_Phosphotase_std | Alamine_Aminotransferase_std | Aspartate_Aminotransferase_std | Total_Protiens_std | Albumin_std | Albumin_and_Globulin_Ratio_std | Is_male_std | Liver_disease | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.247403 | -0.420320 | -0.495414 | -0.428870 | -0.355832 | -0.319111 | 0.293722 | 0.203446 | -0.147390 | 0 | 1 |

| 1 | 1.062306 | 1.218936 | 1.423518 | 1.675083 | -0.093573 | -0.035962 | 0.939655 | 0.077462 | -0.648461 | 1 | 1 |

| 2 | 1.062306 | 0.640375 | 0.926017 | 0.816243 | -0.115428 | -0.146459 | 0.478274 | 0.203446 | -0.178707 | 1 | 1 |

| 3 | 0.815511 | -0.372106 | -0.388807 | -0.449416 | -0.366760 | -0.312205 | 0.293722 | 0.329431 | 0.165780 | 1 | 1 |

| 4 | 1.679294 | 0.093956 | 0.179766 | -0.395996 | -0.295731 | -0.177537 | 0.755102 | -0.930414 | -1.713237 | 1 | 1 |

X = indian.drop('Liver_disease', axis='columns')

y = indian['Liver_disease']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=SEED)

from sklearn.linear_model import LogisticRegression

from sklearn.tree import DecisionTreeClassifier

from sklearn.neighbors import KNeighborsClassifier as KNN

# Set seed for reproducibility

SEED = 1

# Instantiate lr

lr = LogisticRegression(random_state=SEED)

# Instantiate knn

knn = KNN(n_neighbors=27)

# Instantiate dt

dt = DecisionTreeClassifier(min_samples_leaf=0.13, random_state=SEED)

# Define the list classifiers

classifiers = [

('Logistic Regression', lr),

('K Nearest Neighbors', knn),

('Classification Tree', dt)

]

Evaluate individual classifiers¶

In this exercise you'll evaluate the performance of the models in the list classifiers that we defined in the previous exercise. You'll do so by fitting each classifier on the training set and evaluating its test set accuracy.

from sklearn.metrics import accuracy_score

# Iterate over the pre-defined list of classifiers

for clf_name, clf in classifiers:

# Fit clf to the training set

clf.fit(X_train, y_train)

# Predict y_pred

y_pred = clf.predict(X_test)

# Calculate accuracy

accuracy = accuracy_score(y_test, y_pred)

# Evaluate clf's accuracy on the test set

print('{:s} : {:.3f}'.format(clf_name, accuracy))

Logistic Regression : 0.759 K Nearest Neighbors : 0.701 Classification Tree : 0.730

Better performance with a Voting Classifier¶

Finally, you'll evaluate the performance of a voting classifier that takes the outputs of the models defined in the list classifiers and assigns labels by majority voting.

from sklearn.ensemble import VotingClassifier

# Instantiate a VotingClassifier vc

vc = VotingClassifier(estimators=classifiers)

# Fit vs to the training set

vc.fit(X_train, y_train)

# Evaluate the test set predictions

y_pred = vc.predict(X_test)

# Calculate accuracy score

accuracy = accuracy_score(y_test, y_pred)

print('Voting Classifier: {:.3f}'.format(accuracy))

Voting Classifier: 0.770