%%html

<script src="https://bits.csb.pitt.edu/preamble.js"></script>

What is machine learning?¶

Machine learning is a subfield of computer science that evolved from the study of pattern recognition and computational learning theory in artificial intelligence. Machine learning explores the study and construction of algorithms that can learn from and make predictions on data. --Wikipedia

Creating useful and/or predictive computational models from data --dkoes

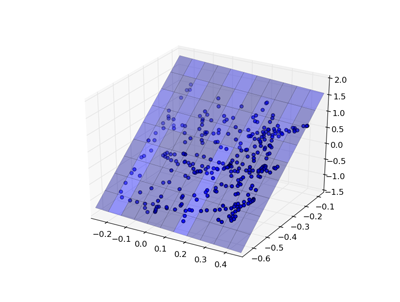

Unsupervised Learning¶

Construct a model from unlabeled data. That is, discover an underlying structure in the data.

We've done this!

- Clustering

- Principal Components Analysis (PCA)

- UMAP

Also

- Latent variable methods

- Expectation-maximization

- Self-organizing map

import numpy as np

from matplotlib.pylab import cm

import scipy.cluster.vq as vq #vq: vector quantization

import matplotlib.pylab as plt

%matplotlib inline

randpts = np.vstack((np.random.randn(100,2)/(4,1),(np.random.randn(100,2)+(1,0))/(1,4)))

(means,clusters) = vq.kmeans2(randpts,4)

plt.scatter(randpts[:,0],randpts[:,1],c=clusters)

plt.plot(means[:,0],means[:,1],'*',ms=20);

Supervised Learning¶

Create a model from labeled data. The data consists of a set of examples where each example has a number of features X and a label y.

Our assumption is that the label is a function of the features:

$$y = f(X)$$

And our goal is to determine what f is.

We want a model/estimator/classifier that accurately predicts y given an X.

Labels¶

There are two main types of supervised learning depending on the type of label.

Classification¶

The label is one of a limited number of classes. Most commonly it is a binary label.

- Will it rain tomorrow?

- Is the protein overexpressed?

- Do the cells die?

Regression¶

The label is a continuous value.

- How much precipitation will there be tomorrow?

- What is the expression level of the protein?

- What percent of the cells died?

Features¶

The features, X, are what make each example distinct. Ideally they contain enough information to predict y. The choice of features is critical and problem-specific.

There are three main three main types:

- Binary - zero or one

- Categorical - one of a limited number of values

- Ordinal - low, medium, high

- Nominal - nucleus, endoplasmic recticulum, mitochondria, ribosome

- Numerical

- Interval - temperature (in celcius or fahrenheight)

- Ratio - temperature (in Kelvin scale)

Not all classifiers can handle all three types, but we can inter-convert.

How?

Example¶

Let's use chemical fingerprints as features!

!wget http://mscbio2025.csb.pitt.edu/files/er.smi

--2023-10-30 20:28:02-- http://mscbio2025.csb.pitt.edu/files/er.smi Resolving mscbio2025.csb.pitt.edu (mscbio2025.csb.pitt.edu)... 136.142.4.139 Connecting to mscbio2025.csb.pitt.edu (mscbio2025.csb.pitt.edu)|136.142.4.139|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 20022 (20K) [application/smil+xml] Saving to: ‘er.smi.1’ er.smi.1 100%[===================>] 19.55K --.-KB/s in 0s 2023-10-30 20:28:02 (47.1 MB/s) - ‘er.smi.1’ saved [20022/20022]

from openbabel import pybel

yvals = []

fps = []

for mol in pybel.readfile('smi','er.smi'):

yvals.append(float(mol.title))

fpbits = mol.calcfp().bits

fp = np.zeros(1024)

fp[fpbits] = 1

fps.append(fp)

X = np.array(fps)

y = np.array(yvals)

plt.figure(figsize=(12,5))

plt.matshow(X,cmap=cm.seismic)

plt.show()

plt.figure(figsize=(10,5))

plt.plot(y,color='blue')

plt.show()

X.shape

<Figure size 864x360 with 0 Axes>

(387, 1024)

The y-values, taken from the second column of the smiles file, are the logS solubility.

plt.hist(y);

%%html

<div id="classtype" style="width: 500px"></div>

<script>

var divid = '#classtype';

jQuery(divid).asker({

id: divid,

question: "What sort of problem is this??",

answers: ['Classification','Regression','Unsupervised'],

server: "https://bits.csb.pitt.edu/asker.js/example/asker.cgi",

charter: chartmaker})

$(".jp-InputArea .o:contains(html)").closest('.jp-InputArea').hide();

</script>

sklearn¶

scikit-learn provides a complete set machine learning tools.

- Classification

- Regression

- Clustering

- Dimensionality reduction

- Model selection and evaluation

- Preprocessing

import sklearn

Linear Model¶

One of the simplest models is a linear regression, where the goal is to find weights w to minimize: $$\sum(Xw - y)^2$$

|

|

%%html

<div id="wshape" style="width: 500px"></div>

<script>

var divid = '#wshape';

jQuery(divid).asker({

id: divid,

question: "What is the shape of w?",

answers: ['387','1024','(378,1024)','(1024,387)',"I've never taken matrix algebra"],

server: "https://bits.csb.pitt.edu/asker.js/example/asker.cgi",

charter: chartmaker})

$(".jp-InputArea .o:contains(html)").closest('.jp-InputArea').hide();

</script>

Linear Model¶

sklearn has a uniform interface for all its models:

from sklearn import linear_model

model = linear_model.LinearRegression() # create the model

model.fit(X,y) # fit the model to the data

p = model.predict(X) # make predictions with the model

plt.figure(figsize = (5,5))

plt.scatter(y,p); plt.xlabel('True'); plt.ylabel('Predict');

Let's reframe this is a classification problem for illustrative purposes...

ylabel = y > -1

plabel = p > -1

plt.hist(np.uint8(ylabel));

Evaluating Predictions¶

Confusion matrix provides ways to evaluate prediction accuracy

* *TP* true positive, a correctly predicted positive example

* *TN* true negatie, a correctly predicted negative example

* *FP* false positive, a negative example incorrectly predicted as positive

* *FN* false negative, a positive example incorrectly predicted as negative

* *P* total number of positives (*TP* + *FN*)

* *N* total number of negatives (*TN* + *FP*)

* *TP* true positive, a correctly predicted positive example

* *TN* true negatie, a correctly predicted negative example

* *FP* false positive, a negative example incorrectly predicted as positive

* *FN* false negative, a positive example incorrectly predicted as negative

* *P* total number of positives (*TP* + *FN*)

* *N* total number of negatives (*TN* + *FP*)

Accuracy: $\frac{TP+TN}{P+N}$

from sklearn.metrics import * #pull in accuracy score, amount other things

print('Accuracy')

print(accuracy_score(ylabel, plabel))

print('\nConfusion Matrix')

print(np.array([['TP', 'FN'],['FP','TP']]))

print(confusion_matrix(ylabel,plabel).transpose())

Accuracy 0.9431524547803618 Confusion Matrix [['TP' 'FN'] ['FP' 'TP']] [[200 10] [ 12 165]]

Other measures¶

Precision. Of those predicted true, how may are accurate? $\frac{TP}{TP+FP}$

Recall (true positive rate). How many of the true examples were retrieved? $\frac{TP}{P}$

F1 Score. The harmonic mean of precision and recall. $\frac{2TP}{2TP+FP+FN}$

print(classification_report(ylabel,plabel))

precision recall f1-score support

False 0.95 0.94 0.95 212

True 0.93 0.94 0.94 175

accuracy 0.94 387

macro avg 0.94 0.94 0.94 387

weighted avg 0.94 0.94 0.94 387

%%html

<div id="confq" style="width: 500px"></div>

<script>

var divid = '#confq';

jQuery(divid).asker({

id: divid,

question: "What would the recall be if our classifer predicted everything as true?",

answers: ['0','81/306','0.5','1.0'],

server: "https://bits.csb.pitt.edu/asker.js/example/asker.cgi",

charter: chartmaker})

$(".jp-InputArea .o:contains(html)").closest('.jp-InputArea').hide();

</script>

ROC Curves¶

The previous metrics work on classification results (yes or no). Many models are capable of producing scores or probabilities (recall we had to threshold our results). The classification performance then depends on what score threshold is chosen to distinguish the two classes.

ROC curves plot the false positive rate and true positive rate as this treshold is changed.

fpr, tpr, thresholds = roc_curve(ylabel, p) #not using rounded values

plt.figure(figsize=(7,7))

plt.plot(fpr,tpr,linewidth=4,clip_on=False)

plt.xlabel("FPR"); plt.ylabel("TPR")

plt.gca().set_aspect('equal')

plt.ylim(0,1); plt.xlim(0,1); plt.show()

AUC¶

The area under the ROC curve (AUC) has a statistical meaning. It is equal to the probability that the classifier will rank a randomly chosen positive example higher than a randomly chosen negative example.

An AUC of one is perfect prediction.

An AUC of 0.5 is the same as random.

np.random.shuffle(p)

fpr, tpr, thresholds = roc_curve(ylabel, p)

plt.plot(fpr,tpr,linewidth=3); plt.xlabel("FPR"); plt.ylabel("TPR"); plt.ylim(0,1); plt.xlim(0,1)

plt.gca().set_aspect('equal')

plt.figure(figsize=(10,10))

print(roc_auc_score(ylabel,p))

0.49897574123989225

<Figure size 720x720 with 0 Axes>

Correct Model Evaluation¶

We have been evaluating how well our model can fit the training data. This is not the relevant metric.

We are most interested in generalization error: the ability of the model to predict new data that was not part of the training set.

In order to assess the predictiveness of the model, we must use it to predict data it has not been trained on.

%%html

<div id="crossq" style="width: 500px"></div>

<script>

var divid = '#crossq';

jQuery(divid).asker({

id: divid,

question: "In 5-fold cross validation, on average, how many times will a given example be in the training set?",

answers: ['0','1','2.5','4','5'],

server: "https://bits.csb.pitt.edu/asker.js/example/asker.cgi",

charter: chartmaker})

$(".jp-InputArea .o:contains(html)").closest('.jp-InputArea').hide();

</script>

Cross Validation¶

sklearn implement a number of cross validation variants. They provide a way to generate test/train sets.

from sklearn.model_selection import KFold

kf = KFold(n_splits=5)

accuracies = []; rocs = []

accuracies_train = []; rocs_train = [];

for train,test in kf.split(X): # these are arrays of indices

model = linear_model.LinearRegression()

model.fit(X[train],y[train]) #slice out the training folds

p = model.predict(X[test]) #slice out the test fold

accuracies.append(accuracy_score(ylabel[test],p > -1))

fpr, tpr, thresholds = roc_curve(ylabel[test], p)

rocs.append( (fpr,tpr, roc_auc_score(ylabel[test], p)))

#training data

p_train = model.predict(X[train]) #slice out the test fold

accuracies_train.append(accuracy_score(ylabel[train],p_train > -1))

fpr, tpr, thresholds = roc_curve(ylabel[train], p_train)

rocs_train.append( (fpr,tpr, roc_auc_score(ylabel[train], p_train)))

print(accuracies)

print("Average accuracy:",np.mean(accuracies),'\n')

print(accuracies_train)

print("Average accuracy (training data):",np.mean(accuracies_train))

[0.6282051282051282, 0.6410256410256411, 0.5454545454545454, 0.7142857142857143, 0.7012987012987013] Average accuracy: 0.6460539460539461 [0.9514563106796117, 0.948220064724919, 0.9612903225806452, 0.9580645161290322, 0.9483870967741935] Average accuracy (training data): 0.9534836621776803

fig, ax = plt.subplots(1,2, figsize=(10,5));

for roc in rocs:

ax[0].plot(roc[0],roc[1],label="AUC %.2f" %roc[2]);

ax[0].set(xlabel="FPR", ylabel="TPR", ); ax[0].set_ylim(0,1); ax[0].set_xlim(0,1); ax[0].legend(loc='best');

for roc in rocs_train:

ax[1].plot(roc[0],roc[1],label="AUC %.2f" %roc[2]);

ax[1].set(xlabel="FPR", ylabel="TPR", ); ax[1].set_ylim(0,1); ax[1].set_xlim(0,1); ax[1].legend(loc='best');

plt.show()

Alternatively...¶

from sklearn.model_selection import cross_validate

cross_validate(linear_model.LinearRegression(),X,y > -1,cv=5,scoring=['roc_auc'])

{'fit_time': array([0.08149719, 0.09700084, 0.07871628, 0.0587194 , 0.05852962]),

'score_time': array([0.01111794, 0.01063108, 0.002177 , 0.00211978, 0.0022378 ]),

'test_roc_auc': array([0.67105263, 0.52777778, 0.65067568, 0.69740741, 0.63090418])}

%%html

<div id="howgood" style="width: 500px"></div>

<script>

var divid = '#howgood';

jQuery(divid).asker({

id: divid,

question: "How good is the predictiveness of our model?",

answers: ['A','B','C','D'],

extra: ['Still Perfect','Not perfect, but still good','Not great, but better than random','Horrible'],

server: "https://bits.csb.pitt.edu/asker.js/example/asker.cgi",

charter: chartmaker})

$(".jp-InputArea .o:contains(html)").closest('.jp-InputArea').hide();

</script>

Generalization Error¶

There are several sources of generalization error:

- overfitting - using artifacts of the data to make predictions

- our data set has 387 examples and 1024 features

- insufficient data - not enough or not the right kind

- inappropriate model - isn't capable of representing reality

A large different between cross-validation performance and fit (test-on-train) performance indicates overfitting.

One way to reduce overfitting is to reduce the number of features used for training (this is called feature selection).

LASSO¶

Lasso is a modified form of linear regression that includes a regularization parameter $\alpha$ $$\sum(Xw - y)^2 + \alpha\sum|w|$$

The higher the value of $\alpha$, the greater the penalty for having non-zero weights. This has the effect of driving weights to zero and selecting fewer features for the model.

kf = KFold(n_splits=5)

accuracies = []; rocs = []

for train,test in kf.split(X): # these are arrays of indices

model = linear_model.Lasso(alpha=0.005)

model.fit(X[train],y[train]) #slice out the training folds

p = model.predict(X[test]) #slice out the test fold

accuracies.append(accuracy_score(ylabel[test],p > 0))

fpr, tpr, thresholds = roc_curve(ylabel[test], p)

rocs.append( (fpr,tpr, roc_auc_score(ylabel[test],p)))

print(accuracies)

print("Average accuracy:",np.mean(accuracies))

[0.6666666666666666, 0.7948717948717948, 0.6493506493506493, 0.8051948051948052, 0.7402597402597403] Average accuracy: 0.7312687312687312

for roc in rocs:

plt.plot(roc[0],roc[1],label="AUC %.2f" %roc[2]);

plt.gca().set_aspect('equal')

plt.xlabel("FPR"); plt.ylabel("TPR"); plt.ylim(0,1); plt.xlim(0,1); plt.legend(loc='best'); plt.show()

Lasso vs. LinearRegression¶

linmodel = linear_model.LinearRegression()

linmodel.fit(X,y)

lassomodel = linear_model.Lasso(alpha=0.005)

lassomodel.fit(X,y);

The Lasso model is much simpler

print("Nonzero coefficients in linear:",np.count_nonzero(linmodel.coef_))

print("Nonzero coefficients in LASSO:",np.count_nonzero(lassomodel.coef_))

Nonzero coefficients in linear: 881 Nonzero coefficients in LASSO: 64

Model Parameter Optimization¶

Most classifiers have parameters, like $\alpha$ in Lasso, that can be set to change the classification behavior.

A key part of training a model is figuring out what parameters to use.

This is typically done by a brute-force grid search (i.e., try a bunch of values and see which ones work)

from sklearn import model_selection

#setup grid search with default 5-fold CV and scoring

searcher = model_selection.GridSearchCV(linear_model.Lasso(max_iter=10000), {'alpha': [0.001,0.005,0.01,0.1]})

searcher.fit(X,y)

searcher.best_params_

{'alpha': 0.005}

Model specific optimization¶

Some classifiers (mostly linear models) can identify optimal parameters more efficiently and have a "CV" version that automatically determines the best parameters.

lassomodel = linear_model.LassoCV(n_jobs=8,max_iter=10000)

lassomodel.fit(X,y)

LassoCV(max_iter=10000, n_jobs=8)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

LassoCV(max_iter=10000, n_jobs=8)

lassomodel.alpha_

0.0045520394784971056

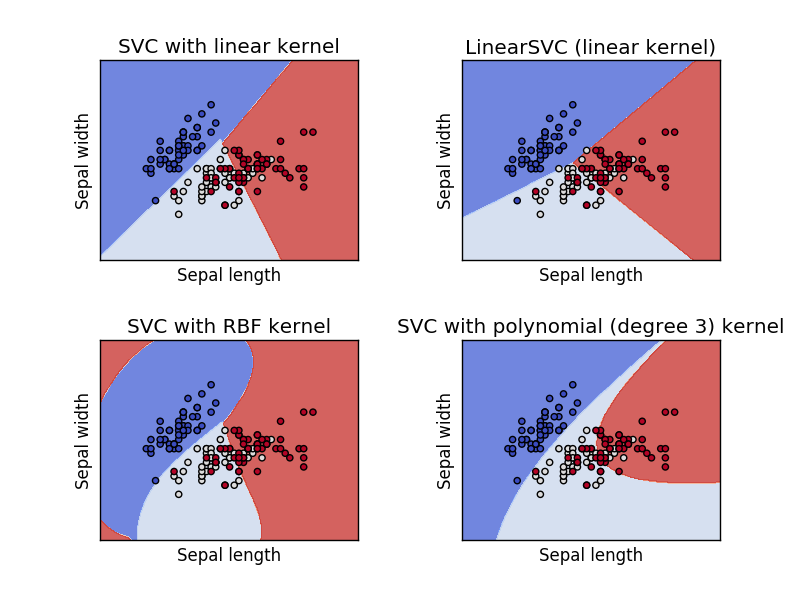

Support Vector Machine (SVM)¶

A support vector machine is orthogonal to a linear model - it attempts to find a plane that separates the classes of data with the maximum margin.

There are two key parameters in an SVM: the kernel and the penalty term (and some kernels may have additional parameters).

SVM Kernels¶

A kernel function, $\phi$, is a transformation of the input data that let's us apply SVM (linear separation) to problems that are not linearly separable.

Training SVM¶

We can get both the predictions (0 or 1) from the SVM as well as probabilities, a confidence in how accurate the predictions are. We use the probabilities to compute the ROC curve.

from sklearn import svm

kf = KFold(n_splits=5)

accuracies = []; rocs = []

for train,test in kf.split(X): # these are arrays of indices

model = svm.SVC(probability=True)

model.fit(X[train],ylabel[train]) #slice out the training folds

p = model.predict(X[test]) # prediction (0 or 1)

probs = model.predict_proba(X[test])[:,1] #probability of being 1

accuracies.append(accuracy_score(ylabel[test],p))

fpr, tpr, thresholds = roc_curve(ylabel[test], probs)

rocs.append( (fpr,tpr, roc_auc_score(ylabel[test],probs)))

Training SVM¶

print(accuracies)

print("Average accuracy:",np.mean(accuracies))

[0.6923076923076923, 0.7307692307692307, 0.6883116883116883, 0.7922077922077922, 0.6753246753246753] Average accuracy: 0.7157842157842158

for roc in rocs:

plt.plot(roc[0],roc[1],label="AUC %.2f" %roc[2]);

plt.gca().set_aspect('equal')

plt.xlabel("FPR"); plt.ylabel("TPR"); plt.ylim(0,1); plt.xlim(0,1); plt.legend(loc='best'); plt.show()

Training SVM¶

from sklearn import model_selection

searcher = model_selection.GridSearchCV(svm.SVC(), {'kernel': ['linear','rbf'],'C': [1,10,100,1000]},scoring='roc_auc',n_jobs=-1)

searcher.fit(X,ylabel)

GridSearchCV(estimator=SVC(), n_jobs=-1,

param_grid={'C': [1, 10, 100, 1000], 'kernel': ['linear', 'rbf']},

scoring='roc_auc')In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

GridSearchCV(estimator=SVC(), n_jobs=-1,

param_grid={'C': [1, 10, 100, 1000], 'kernel': ['linear', 'rbf']},

scoring='roc_auc')SVC()

SVC()

print("Best AUC:",searcher.best_score_)

print("Parameters",searcher.best_params_)

Best AUC: 0.8149612403100776

Parameters {'C': 10, 'kernel': 'rbf'}

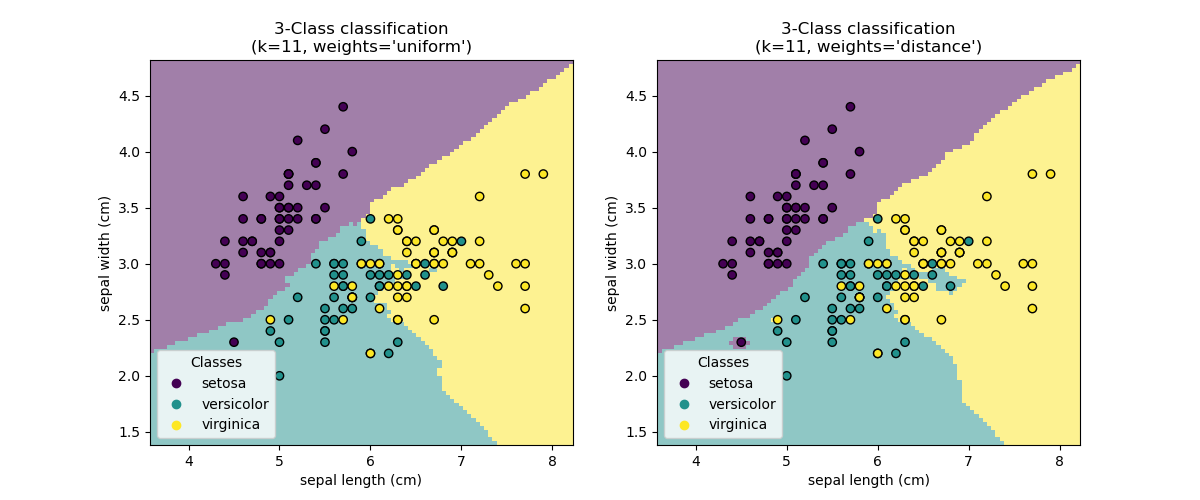

Nearest Neighbors (NN)¶

Nearest Neighbors models classify new points based on the values of the closest points in the training set.

The main parameter is $k$, the number of neighbors to consider, and the method of combining the neighbor results.

Training NN¶

from sklearn import neighbors

kf = KFold(n_splits=5)

accuracies = []; rocs = []

for train,test in kf.split(X): # these are arrays of indices

model = neighbors.KNeighborsClassifier() # defaults to k=5

model.fit(X[train],ylabel[train]) #slice out the training folds

p = model.predict(X[test]) # prediction (0 or 1)

probs = model.predict_proba(X[test])[:,1] #probability of being 1

accuracies.append(accuracy_score(ylabel[test],p))

fpr, tpr, thresholds = roc_curve(ylabel[test], probs)

rocs.append( (fpr,tpr, roc_auc_score(ylabel[test],probs)))

Training NN¶

print(accuracies)

print("Average accuracy:",np.mean(accuracies))

[0.7307692307692307, 0.6923076923076923, 0.7532467532467533, 0.7272727272727273, 0.7662337662337663] Average accuracy: 0.733966033966034

for roc in rocs:

plt.plot(roc[0],roc[1],label="AUC %.2f" %roc[2]);

plt.gca().set_aspect('equal')

plt.xlabel("FPR"); plt.ylabel("TPR"); plt.ylim(0,1); plt.xlim(0,1); plt.legend(loc='best'); plt.show()

%%html

<div id="knnq" style="width: 500px"></div>

<script>

var divid = '#knnq';

jQuery(divid).asker({

id: divid,

question: "What could <b>not</b> be a valid probability from the previous k-nn (k=5) model?",

answers: ['0','.5','.6','1'],

server: "https://bits.csb.pitt.edu/asker.js/example/asker.cgi",

charter: chartmaker})

$(".jp-InputArea .o:contains(html)").closest('.jp-InputArea').hide();

</script>

Training NN¶

searcher = model_selection.GridSearchCV(neighbors.KNeighborsClassifier(), \

{'n_neighbors': [1,2,3,4,5,10]},scoring='roc_auc',n_jobs=-1)

searcher.fit(X,ylabel);

print("Best AUC:",searcher.best_score_)

print("Parameters",searcher.best_params_)

Best AUC: 0.8024758740705586

Parameters {'n_neighbors': 5}

Decision Trees¶

A decision tree is a tree where each node make a decision based on the value of a single feature. At the bottom of the tree is the classification that results from all those decisions.

Significant model parameters include the depth of the tree and how features and splits are determined.

%%html

<div id="dtqml" style="width: 500px"></div>

<script>

var divid = '#dtqml';

jQuery(divid).asker({

id: divid,

question: "Humidity is low, it's windy, and it is sunny. What do you do?",

answers: ["Play","Don't Play"],

server: "https://bits.csb.pitt.edu/asker.js/example/asker.cgi",

charter: chartmaker})

$(".jp-InputArea .o:contains(html)").closest('.jp-InputArea').hide();

</script>

Random Forest¶

A bunch of decision trees trained on different sub-samples of the data.

They vote (or are averaged).

Training a Decision Tree¶

from sklearn import tree

kf = KFold(n_splits=5)

accuracies = []; rocs = []

for train,test in kf.split(X): # these are arrays of indices

model = tree.DecisionTreeClassifier()

model.fit(X[train],ylabel[train]) #slice out the training folds

p = model.predict(X[test]) # prediction (0 or 1)

probs = model.predict_proba(X[test])[:,1] #probability of being 1

accuracies.append(accuracy_score(ylabel[test],p))

fpr, tpr, thresholds = roc_curve(ylabel[test], probs)

rocs.append( (fpr,tpr, roc_auc_score(ylabel[test],probs)))

Training a Decision Tree¶

print(accuracies)

print("Average accuracy:",np.mean(accuracies))

[0.7435897435897436, 0.717948717948718, 0.6753246753246753, 0.7272727272727273, 0.7142857142857143] Average accuracy: 0.7156843156843158

for roc in rocs:

plt.plot(roc[0],roc[1],label="AUC %.2f" %roc[2]);

plt.gca().set_aspect('equal')

plt.xlabel("FPR"); plt.ylabel("TPR"); plt.ylim(0,1); plt.xlim(0,1); plt.legend(loc='best'); plt.show()

set(probs)

{0.0, 0.5, 1.0}

Training a Decision Tree¶

searcher = model_selection.GridSearchCV(tree.DecisionTreeClassifier(), \

{'max_depth': [1,2,3,4,5,10]},scoring='roc_auc',n_jobs=-1)

searcher.fit(X,ylabel);

print("Best AUC:",searcher.best_score_)

print("Parameters",searcher.best_params_)

Best AUC: 0.7580414491377947

Parameters {'max_depth': 5}

model = tree.DecisionTreeClassifier(max_depth=5).fit(X,ylabel)

set(model.predict_proba(X)[:,1])

{0.0,

0.046153846153846156,

0.15217391304347827,

0.2391304347826087,

0.5,

0.6666666666666666,

1.0}

Regression¶

Regression in sklearn is pretty much the same as classification, but you use a score appropriate for regression (e.g., squared error or correlation).

# Code source: Gaël Varoquaux

# Andreas Müller

# Modified for documentation by Jaques Grobler

# License: BSD 3 clause

import matplotlib.pyplot as plt

import numpy as np

from matplotlib.colors import ListedColormap

from sklearn.datasets import make_circles, make_classification, make_moons

from sklearn.discriminant_analysis import QuadraticDiscriminantAnalysis

from sklearn.ensemble import *

from sklearn.gaussian_process import GaussianProcessClassifier

from sklearn.gaussian_process.kernels import RBF

from sklearn.inspection import DecisionBoundaryDisplay

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import GaussianNB

from sklearn.neighbors import KNeighborsClassifier

from sklearn.neural_network import MLPClassifier

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeClassifier

names = [

"Nearest Neighbors",

"Linear SVM",

"RBF SVM",

"Decision Tree",

"Random Forest",

"Gradient Boosting",

"Neural Net"

]

classifiers = [

KNeighborsClassifier(3),

SVC(kernel="linear", C=0.025, random_state=42),

SVC(gamma=2, C=1, random_state=42),

DecisionTreeClassifier(max_depth=5, random_state=42),

RandomForestClassifier(

max_depth=5, n_estimators=10, max_features=1, random_state=42

),

GradientBoostingClassifier(),

MLPClassifier(alpha=1, max_iter=1000, random_state=42)

]

X, y = make_classification(

n_features=2, n_redundant=0, n_informative=2, random_state=1, n_clusters_per_class=1

)

rng = np.random.RandomState(2)

X += 2 * rng.uniform(size=X.shape)

linearly_separable = (X, y)

datasets = [

make_moons(noise=0.3, random_state=0),

make_circles(noise=0.2, factor=0.5, random_state=1),

linearly_separable,

]

figure = plt.figure(figsize=(27, 12))

i = 1

# iterate over datasets

for ds_cnt, ds in enumerate(datasets):

# preprocess dataset, split into training and test part

X, y = ds

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.4, random_state=42

)

x_min, x_max = X[:, 0].min() - 0.5, X[:, 0].max() + 0.5

y_min, y_max = X[:, 1].min() - 0.5, X[:, 1].max() + 0.5

# just plot the dataset first

cm = plt.cm.RdBu

cm_bright = ListedColormap(["#FF0000", "#0000FF"])

ax = plt.subplot(len(datasets), len(classifiers) + 1, i)

if ds_cnt == 0:

ax.set_title("Input data",fontsize=24)

# Plot the training points

ax.scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap=cm_bright, edgecolors="k")

# Plot the testing points

ax.scatter(

X_test[:, 0], X_test[:, 1], c=y_test, cmap=cm_bright, alpha=0.6, edgecolors="k"

)

ax.set_xlim(x_min, x_max)

ax.set_ylim(y_min, y_max)

ax.set_xticks(())

ax.set_yticks(())

i += 1

# iterate over classifiers

for name, clf in zip(names, classifiers):

ax = plt.subplot(len(datasets), len(classifiers) + 1, i)

clf = make_pipeline(StandardScaler(), clf)

clf.fit(X_train, y_train)

score = clf.score(X_test, y_test)

DecisionBoundaryDisplay.from_estimator(

clf, X, cmap=cm, alpha=0.8, ax=ax, eps=0.5

)

# Plot the training points

ax.scatter(

X_train[:, 0], X_train[:, 1], c=y_train, cmap=cm_bright, edgecolors="k"

)

# Plot the testing points

ax.scatter(

X_test[:, 0],

X_test[:, 1],

c=y_test,

cmap=cm_bright,

edgecolors="k",

alpha=0.6,

)

ax.set_xlim(x_min, x_max)

ax.set_ylim(y_min, y_max)

ax.set_xticks(())

ax.set_yticks(())

if ds_cnt == 0:

ax.set_title(name,fontsize=24)

ax.text(

x_max - 0.3,

y_min + 0.3,

("%.2f" % score).lstrip("0"),

size=15,

horizontalalignment="right",

)

i += 1

plt.tight_layout()

plt.savefig('imgs/sklearn_decisions.png',dpi=300)

Project¶

Pick a model and train it to predict the actual y values (regression) of our er.smi dataset.

!wget http://mscbio2025.csb.pitt.edu/files/er.smi

from openbabel import pybel

yvals = []

fps = []

for mol in pybel.readfile('smi','er.smi'):

yvals.append(float(mol.title))

fpbits = mol.calcfp().bits

fp = np.zeros(1024)

fp[fpbits] = 1

fps.append(fp)

X = np.array(fps)

y = np.array(yvals)

--2023-10-30 20:29:41-- http://mscbio2025.csb.pitt.edu/files/er.smi Resolving mscbio2025.csb.pitt.edu (mscbio2025.csb.pitt.edu)... 136.142.4.139 Connecting to mscbio2025.csb.pitt.edu (mscbio2025.csb.pitt.edu)|136.142.4.139|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 20022 (20K) [application/smil+xml] Saving to: ‘er.smi.2’ er.smi.2 100%[===================>] 19.55K --.-KB/s in 0s 2023-10-30 20:29:41 (41.0 MB/s) - ‘er.smi.2’ saved [20022/20022]

from sklearn.model_selection import KFold

from sklearn.metrics import *

from sklearn import ensemble

kf = KFold(n_splits=5)

errors = []

predictions = []

for train,test in kf.split(X): # these are arrays of indices

model = sklearn.ensemble.RandomForestRegressor()

model.fit(X[train],y[train]) #slice out the training folds

p = model.predict(X[test]) # prediction (0 or 1)

predictions += zip(y[test],p)

errors.append(mean_squared_error(y[test],p))

predictions = np.array(predictions)

print(errors)

print("Average squared error",np.mean(errors))

[0.2941807667340254, 0.3567487151063835, 0.19864288026362262, 0.3638722668982609, 0.209028520490121] Average squared error 0.2844946298984827

import seaborn as sns

sns.jointplot(x=predictions[:,0],y=predictions[:,1])

plt.xlabel("Experiment"); plt.ylabel("Predicted")

np.corrcoef(predictions[:,0],predictions[:,1])[0][1]

0.9037840225075521